This blog dissects the ML vs. LLM debate, weighing the relevance of traditional Machine Learning models against the rising dominance of Large Language Models, and highlights their distinctions and optimal use cases in AI applications.

The observability blog

Learn how model observability can help you stay on top of ML in the wild and bring value to your business.

Let’s talk about LLM size vs performance, scalability, and cost-effectiveness in real-world applications of LLMs.

In this post, we’ll dig into the LlamaIndex and LangChain frameworks to highlight their various strengths and show where, when, and how developers should go about making a choice between the two (if at all).

In this blog, we recap our recent webinar on Unraveling prompt engineering, covering considerations in prompt selection, overlooked prompting rules of thumb, and breakthroughs in prompting techniques.

Interested in how Kubeflow and MLflow stack up against each other? Let’s delve into our analysis of these two prominent open-source MLOps tools.

In this blog, we dive into LLM architectures, from data ingestion to caching, inference, and costs, and the vital role they play in deploying LLMs effectively in real-world applications.

When it comes to LLM training, businesses face a crucial question: train from scratch or leverage foundational models? Let’s go through the options and their pros and cons.

Vertex AI vs. Azure AI – Let’s take a look at the shift in the cloud AI landscape, examine the strengths and weaknesses of both, and cover what practitioners and developers should evaluate when choosing between them.

Model-based techniques for drift monitoring offer significant advantages over statistical-based techniques. Let’s look into the different techniques, their pros and cons, and considerations for when and how to use them.

KServe vs. Seldon Core – What are the main considerations when choosing between these two popular model deployment frameworks?

[2023 update] In this blog post, we take you through the fundamental differences between GCP’s Vertex AI and AWS’s SageMaker.

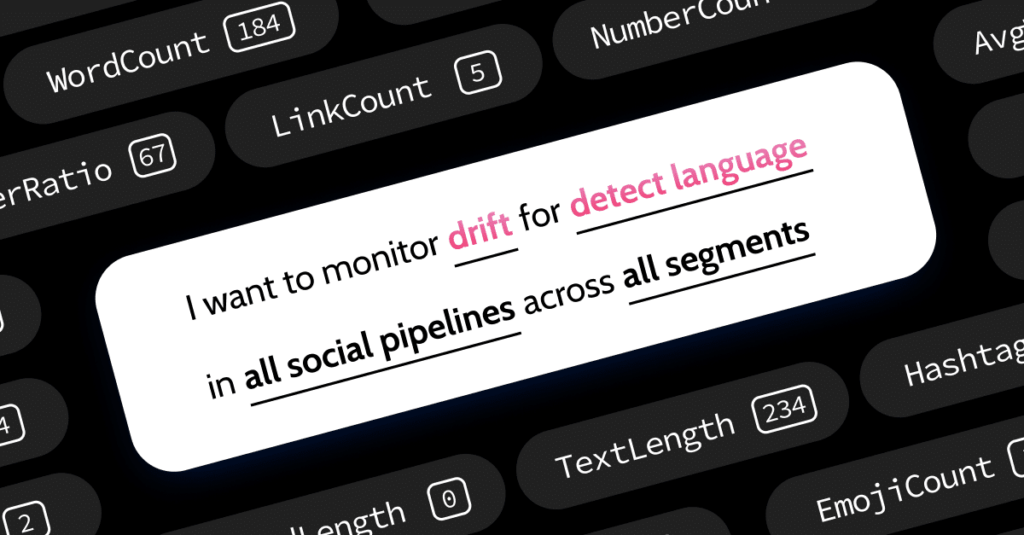

In this post, we’re going to show you an example of how to use Elemeta together with Superwise’s model observability community edition to supply visibility and monitoring of your NLP model’s input text.
