Large Language Models (LLMs), with mainstream solutions like ChatGPT leading the charge, are increasingly reshaping business landscapes. Integrating them gives businesses an unprecedented opportunity to improve efficiency and unlock untapped potential. Whether it’s streamlining customer support with chatbots, powering semantic search, or tapping into an expansive in-house knowledge base, LLMs are set to play a transformative role.

Within this rapidly evolving world of LLMs, businesses face a crucial question: train from scratch or leverage pre-trained models? This post walks through some of the options, along with their pros and cons, to help you understand how to apply LLMs to your specific use cases, as discussed in the recent Superwise webinar “To Train or Not to Train Your LLM.” We’ll cover the allure of custom LLM training, the simplicity of prompt engineering, the precision of tuning on labeled data, and the balanced prowess of parameter-efficient fine-tuning (PEFT). We’ll wrap up with some key thoughts on the LLM lifecycle and how to monitor and support LLMs once you’re in production.

Follow along with the slides here.

More posts in this series:

- Considerations & best practices in LLM training

- Considerations & best practices for LLM architectures

- Making sense of prompt engineering

The art and science of model tuning

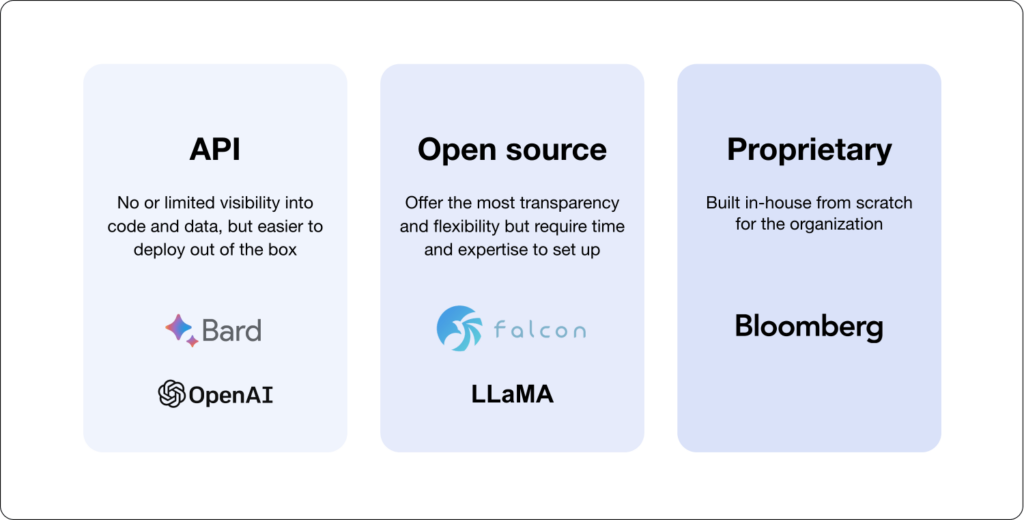

Today’s marketplace offers a gamut of options: open-source models, proprietary ones, and models-as-a-service. Excellent open-source models, such as Falcon and Meta’s Llama 2, are already being released, and proprietary domain-specific models, such as BloombergGPT, have also made headlines. Businesses need to weigh the benefits of training on proprietary data against fine-tuning pre-existing giants; it all comes down to what’s right for your specific use case.

As an example, let’s take a fictional company that we’ll call MailProtect. The company wants to use a custom GPT application that detects and blocks phishing emails. After all, LLMs can not only classify content but also generate new content from it, which could lead to better insights. It’s true that an LLM with a chat-style interface like ChatGPT can understand the content of emails and will do a great job of identifying phishing. But say one of the company’s largest customers is in the crypto exchange business. In that domain, general-purpose LLMs like GPT tend to return far more false positives.

One possible solution is for MailProtect to tune its GPT application on domain-specific financial data. The tuned model will likely be more accurate, but fine-tuning is not a magic solution. Is it right for your business?

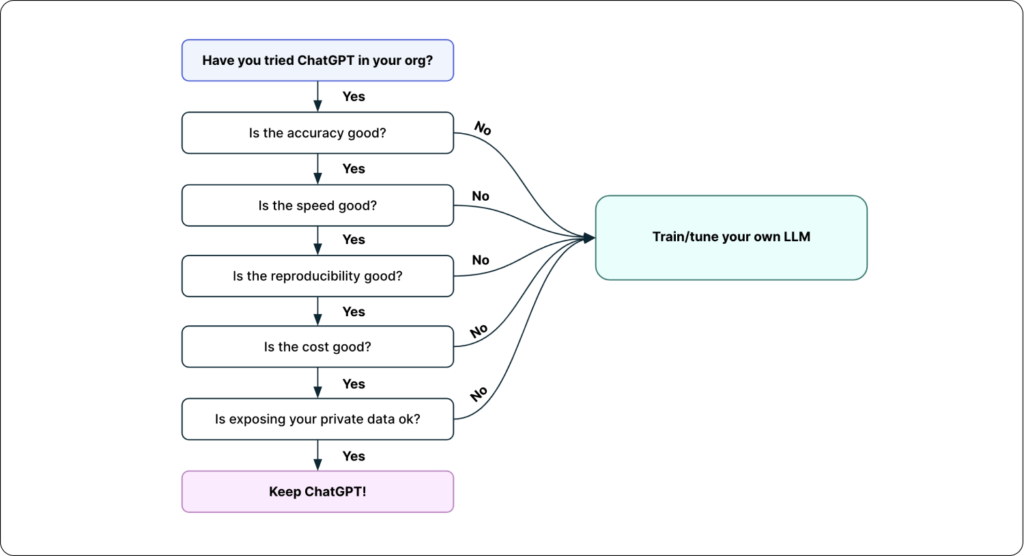

For starters, here are just a few of the questions you need to ask yourself:

- Is the model’s accuracy good enough?

- Do I have enough training data for my specific use case?

- Is it fast enough?

- Is it reproducible, so that I get consistent results for the same prompts?

- Does the quality hold up over time?

- Do the economics of the model work?
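Several of these questions (accuracy, speed, reproducibility) can be checked with a small evaluation harness. The Python sketch below is a minimal illustration, assuming the model is wrapped in a hypothetical `classify(email) -> label` callable and that you have a small labeled set of emails; `keyword_stub` is a toy stand-in for a real LLM call, not a real phishing detector.

```python
import statistics
import time
from typing import Callable, Sequence


def evaluate(
    classify: Callable[[str], str],
    emails: Sequence[str],
    labels: Sequence[str],
    repeats: int = 3,
) -> dict:
    """Score a classifier on accuracy, latency, and reproducibility.

    `classify` is a hypothetical wrapper around whatever model you are testing.
    """
    correct = 0
    consistent = 0
    latencies = []
    for email, label in zip(emails, labels):
        outputs = []
        for _ in range(repeats):
            start = time.perf_counter()
            outputs.append(classify(email))
            latencies.append(time.perf_counter() - start)
        # Accuracy uses the first answer; reproducibility asks whether
        # repeated calls with the same input all agree.
        correct += outputs[0] == label
        consistent += len(set(outputs)) == 1
    n = len(emails)
    return {
        "accuracy": correct / n,
        "reproducibility": consistent / n,
        "median_latency_s": statistics.median(latencies),
    }


def keyword_stub(email: str) -> str:
    # Toy stand-in for an LLM call: flags any email that mentions a "wallet".
    return "phishing" if "wallet" in email.lower() else "legit"


if __name__ == "__main__":
    emails = [
        "Verify your wallet now or lose access",
        "Your monthly statement is ready",
        "Wallet maintenance scheduled for Sunday",
    ]
    labels = ["phishing", "legit", "legit"]
    print(evaluate(keyword_stub, emails, labels))
```

In this toy run, the stub flags the legitimate wallet-maintenance notice, which is the kind of domain-specific false positive described in the crypto example.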

Why tune or train? The pros and cons

Training your own LLM offers distinct advantages:

- Performance comparable to larger general-purpose models

- Tailored accuracy and improved relevance

- Potential to reduce inference costs

- Greater control over data and infrastructure, which mitigates issues such as data privacy concerns and service availability or latency problems

But with control comes responsibility, especially concerning data leakage. When a model is trained on private organizational data, the risk of sensitive information inadvertently making its way into model responses is a serious consideration.
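One common mitigation is to scrub obvious sensitive values from the data before it ever reaches training. The Python sketch below is a minimal regex-based redactor, assuming email addresses and phone-like numbers are the fields of concern; the patterns are illustrative only (the phone pattern, for example, will also catch some dates) and are nowhere near a complete PII detector.

```python
import re

# Illustrative patterns only; real PII detection needs much broader coverage.
PATTERNS = {
    "EMAIL": re.compile(r"[\w.+-]+@[\w-]+\.[\w.-]+"),
    "PHONE": re.compile(r"\+?\d[\d\s().-]{7,}\d"),
}


def redact(text: str) -> str:
    """Replace each match with a placeholder such as [EMAIL] or [PHONE]."""
    for name, pattern in PATTERNS.items():
        text = pattern.sub(f"[{name}]", text)
    return text


if __name__ == "__main__":
    sample = "Contact jane.doe@example.com or +1 (555) 123-4567 about the wallet."
    print(redact(sample))
```

Redacting before training is generally easier than trying to stop a model from repeating memorized values after the fact.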

Here are some of the cons to consider when it comes to training your own LLM:

- Extra caution is needed for the data going into the model

- Requires training and serving infrastructure

- Demands huge amounts of good-quality data

- Difficult to measure quality

- Possibly reduced performance in some cases

- Increased complexity of operations

The decision boils down to a balance between performance, price, and privacy. But, as pointed out in the webinar, there’s more than one way to train an LLM.

There’s more than one way to train/tune an LLM

In the webinar, we highlighted four approaches to training or tuning your own LLM:

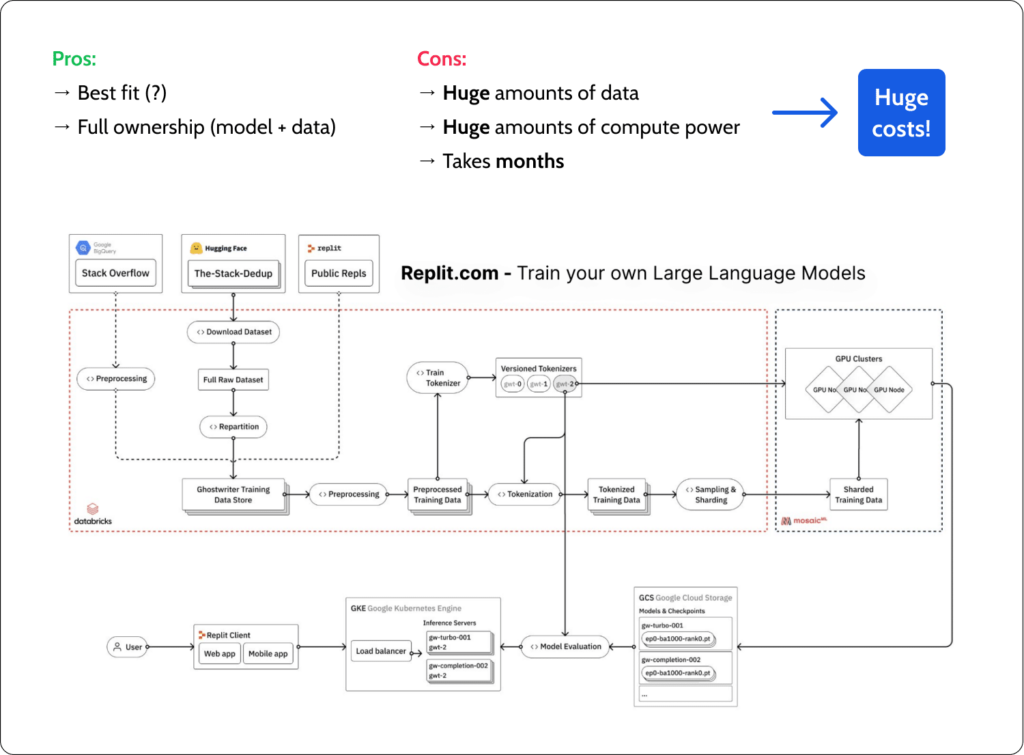

Train an LLM from scratch

This is a Herculean effort that can deliver unmatched accuracy, but at a steep price in both dollars and time. For this option, you’ll need massive amounts of data along with mountains of computing power, and you’ll have to select an architecture, ideally one that’s already been well researched. Beyond demanding deep technical expertise, this option requires you to own the infrastructure and the data pipeline, including pre-processing, storage, tokenization, and serving. The costs are enormous. To give you a feel for what’s involved, consider BloombergGPT. Its training cost has been estimated at around three to four million dollars, and the entire training process took around three to four months. And we’re talking boatloads of data: BloombergGPT was trained on roughly 363 billion tokens drawn from Bloomberg’s financial data sources plus roughly 345 billion tokens from general-purpose public datasets, including Wikipedia. This option is clearly not for everyone.
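To see why the costs balloon, a common back-of-the-envelope rule from the scaling-law literature estimates the training compute of a dense transformer as roughly 6 × parameters × training tokens FLOPs. The Python sketch below turns that into GPU-hours and dollars. The model size, token count, GPU peak throughput (312 TFLOP/s, the A100’s dense BF16 figure), 40% utilization, and $2 per GPU-hour are all assumptions to replace with your own numbers, and the estimate ignores failed runs, experiments, data preparation, and staff time, so treat it as a lower bound.

```python
def training_flops(n_params: float, n_tokens: float) -> float:
    """Approximate training compute for a dense transformer (~6 * N * D FLOPs)."""
    return 6.0 * n_params * n_tokens


def gpu_hours(total_flops: float, peak_flops: float, utilization: float) -> float:
    """Convert FLOPs to GPU-hours, given per-GPU peak FLOP/s and achieved utilization."""
    return total_flops / (peak_flops * utilization) / 3600.0


def training_cost_usd(
    n_params: float,
    n_tokens: float,
    peak_flops: float,
    utilization: float,
    usd_per_gpu_hour: float,
) -> float:
    """Rough compute cost only; excludes experiments, restarts, and people."""
    hours = gpu_hours(training_flops(n_params, n_tokens), peak_flops, utilization)
    return hours * usd_per_gpu_hour


if __name__ == "__main__":
    # Hypothetical run: 7B parameters trained on 1T tokens.
    flops = training_flops(7e9, 1e12)
    hours = gpu_hours(flops, 312e12, 0.40)
    cost = training_cost_usd(7e9, 1e12, 312e12, 0.40, 2.0)
    print(f"{flops:.2e} FLOPs, {hours:,.0f} GPU-hours, ${cost:,.0f}")
```

Under these assumptions, even this comparatively small hypothetical run needs roughly 93,000 GPU-hours, and the figure scales linearly with both model size and data volume.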

Open-source model tuning

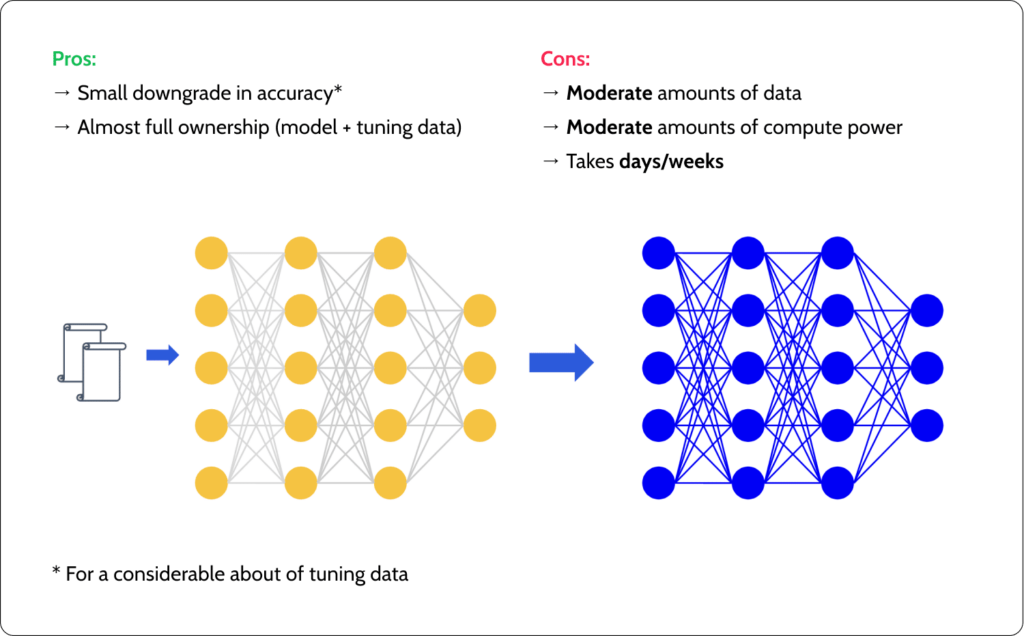

Start with a base LLM as your foundation, sprinkle on some domain-specific data, and voilà! The resulting model is now more familiar with the notions and nuances of your specific industry. You do this by starting with an open-source foundation model, such as LLaMA, Falcon, or MosaicML’s MPT, and tuning it on examples from your specific use case. Essentially, the model comes pre-trained, and you fine-tune it to improve accuracy for your specific use cases. The resulting accuracy won’t be quite as good as training from scratch, but it’s a small downgrade, and this option requires less data and more moderate amounts of computing time. Of course, not every company has the budget or resources needed to put this option into play.
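In practice, “tuning it on examples” usually means preparing a file of input/output pairs for a fine-tuning job. The Python sketch below writes labeled MailProtect-style emails to JSON Lines. The `prompt`/`completion` field names and the instruction wording are assumptions: each fine-tuning framework or service defines its own schema, so adapt the record layout to the one you use.

```python
import json
from typing import Iterable, List, Tuple

INSTRUCTION = "Classify the email as 'phishing' or 'legit'."
ALLOWED_LABELS = {"phishing", "legit"}


def to_records(examples: Iterable[Tuple[str, str]]) -> List[dict]:
    """Turn (email_text, label) pairs into prompt/completion records."""
    records = []
    for text, label in examples:
        if label not in ALLOWED_LABELS:
            raise ValueError(f"unexpected label: {label!r}")
        records.append({
            "prompt": f"{INSTRUCTION}\n\nEmail:\n{text.strip()}\n\nLabel:",
            "completion": f" {label}",
        })
    return records


def to_jsonl(records: List[dict]) -> str:
    """Serialize one JSON object per line (JSON Lines)."""
    return "\n".join(json.dumps(r, ensure_ascii=False) for r in records) + "\n"


if __name__ == "__main__":
    labeled = [
        ("Your cold-storage wallet audit is complete.", "legit"),
        ("Urgent: confirm your seed phrase to unlock withdrawals.", "phishing"),
    ]
    print(to_jsonl(to_records(labeled)), end="")
```

Including legitimate crypto-exchange emails, not just phishing samples, is what gives a tuned model a chance to cut the false positives described earlier.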

Few-shot prompt tuning

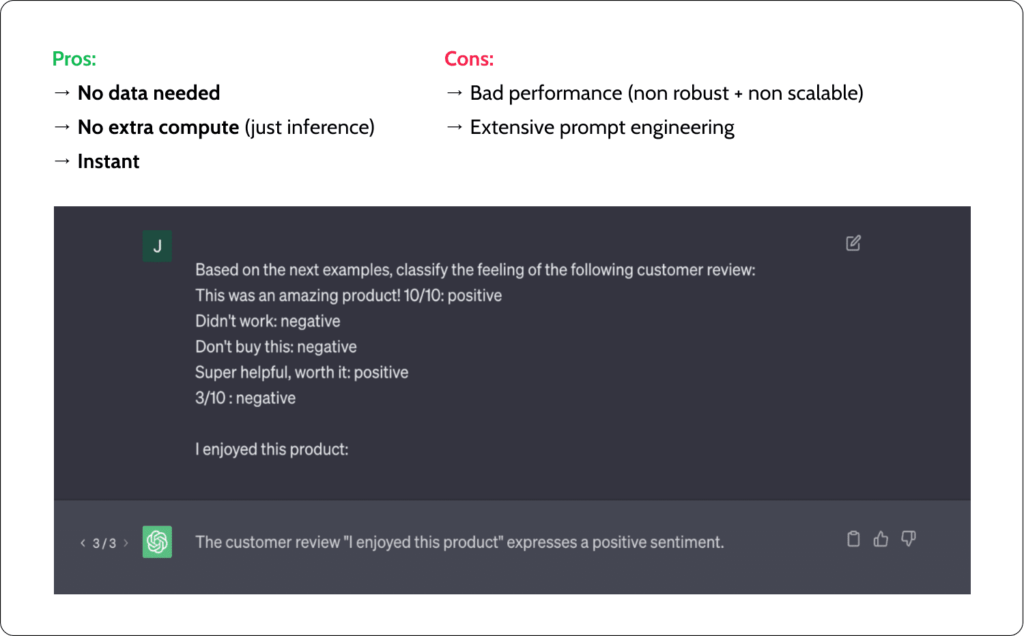

The quick-fix approach: instant but less robust. With this option, you’re “teaching” the LLM inside the prompt rather than updating its weights. Every time you query the LLM, you include examples of how to behave and how not to behave. These examples consist of pairs of sample inputs and expected model outputs, more formally known as “shots.” This approach, also known as prompt engineering, is not an exact science, but it has the advantage of being fast and requires no training compute (although the longer prompts add some inference cost). The downside is that it’s not robust, scalable, or reproducible. It might work well during research, but it’s clearly not up to standard for a solution going into production.
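To illustrate how shots are packed into a prompt, here is a minimal Python sketch. The example emails, labels, and template wording are hypothetical, and the resulting string would be sent to whichever LLM you use; no model call is shown.

```python
from typing import List, Tuple

# Hypothetical labeled "shots": (email text, expected label).
SHOTS: List[Tuple[str, str]] = [
    ("Your withdrawal of 0.5 BTC was processed. No action needed.", "legit"),
    ("Your account is locked! Enter your seed phrase here to restore it.", "phishing"),
]


def build_few_shot_prompt(email: str, shots: List[Tuple[str, str]] = SHOTS) -> str:
    """Prepend input/output example pairs to the new email to be classified."""
    parts = ["Classify each email as 'phishing' or 'legit'.", ""]
    for text, label in shots:
        parts += [f"Email: {text}", f"Label: {label}", ""]
    # The model is expected to continue the text after the final "Label:".
    parts += [f"Email: {email}", "Label:"]
    return "\n".join(parts)


if __name__ == "__main__":
    print(build_few_shot_prompt("Confirm your wallet password within 24 hours."))
```

Because the shots travel with every request, adding more of them raises token usage and latency, and outputs can shift with the choice and order of examples, which is part of why this approach is hard to make reproducible.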

Parameter-efficient fine-tuning (PEFT)

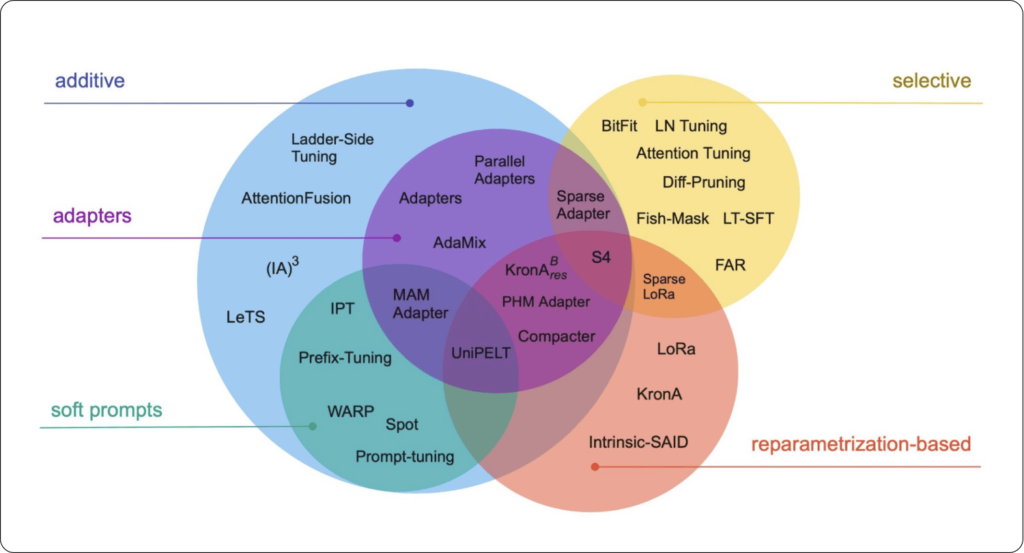

PEFT is a promising bridge between cost-efficiency and performance: it uses less data and computing power to achieve comparable performance. The model adapts to new information by fine-tuning a select set of parameters, without giving up the vast knowledge it acquired during its initial training. PEFT methods fall into three families: selective, additive, and reparameterization-based. These are alternative approaches rather than sequential steps, although some methods combine them.

Selective methods pick a few layers or parameters that are critical to your use case. Only these are changed during tuning; the rest of the model stays frozen, which allows for significant savings in time and computing power.

Additive methods add a small number of new trainable parameters, such as adapter layers inserted between existing layers or trainable prompt embeddings prepended to the input, and train only those. Adapter layers can slightly increase inference time, but the time you save on training should be more than enough to compensate.

Reparameterization methods, such as LoRA, represent the weight updates as products of small low-rank matrices, so only a small number of parameters needs to be trained. They are often combined with lower-precision (quantized) weights, as in QLoRA, to shrink memory requirements further, which can cost some accuracy. No doubt, these tradeoffs need to be weighed for each specific case.

In essence, by changing only 1% to 2% of the parameters, or often fewer, you can get satisfactory performance while putting much less effort into tuning.
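One widely used reparameterization method is LoRA (low-rank adaptation). For a frozen d×k weight matrix W, it trains only two small factors, B (d×r) and A (r×k), and the adapted layer computes Wx + (α/r)·B(Ax). The pure-Python sketch below shows the parameter arithmetic and a single-layer forward pass; the matrix sizes, rank, and α are illustrative assumptions, and real implementations (for example, the Hugging Face PEFT library) operate on tensors rather than Python lists.

```python
from typing import List

Matrix = List[List[float]]
Vector = List[float]


def matvec(m: Matrix, x: Vector) -> Vector:
    """Multiply a row-major matrix by a vector."""
    return [sum(w * xi for w, xi in zip(row, x)) for row in m]


def lora_param_fraction(d: int, k: int, r: int) -> float:
    """Trainable LoRA parameters r*(d + k) relative to the frozen d*k weight."""
    return r * (d + k) / (d * k)


def lora_forward(W: Matrix, A: Matrix, B: Matrix, x: Vector, alpha: float) -> Vector:
    """y = W x + (alpha / r) * B (A x); W stays frozen, only A and B are trained."""
    r = len(A)  # A has r rows
    base = matvec(W, x)
    update = matvec(B, matvec(A, x))
    return [b + (alpha / r) * u for b, u in zip(base, update)]


if __name__ == "__main__":
    # A 4096x4096 projection with rank 8 trains well under 1% of its weights.
    print(f"{lora_param_fraction(4096, 4096, 8):.4%}")

    W = [[1.0, 0.0], [0.0, 1.0]]  # frozen pretrained weight (2x2)
    A = [[0.5, 0.5]]              # r x k with r = 1
    B = [[0.0], [0.0]]            # d x r, initialized to zero
    x = [2.0, 4.0]
    # With B = 0, the adapted layer starts out identical to the base layer.
    print(lora_forward(W, A, B, x, alpha=1.0))
```

Because the learned update B·A can be merged into W after training, this style of reparameterization need not add inference latency, unlike adapter layers.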

Sadly, there’s no rule of thumb that can apply to all business use cases. It’s hard to compare methods when there isn’t a single metric or value that can be measured.

LLMs in production – what to monitor?

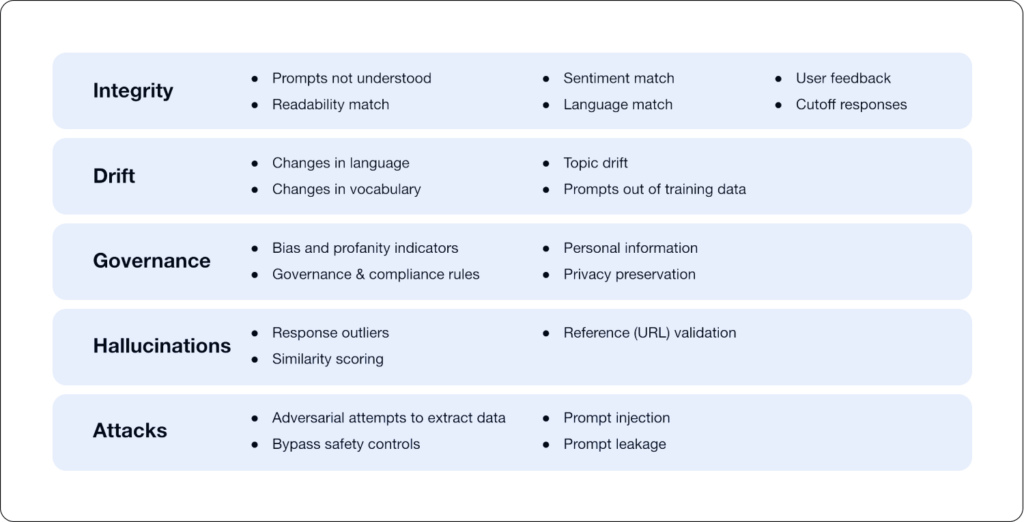

LLM technology isn’t a “set it and forget it” affair. Maintaining an LLM requires regular updates and refinements, especially as the application’s nature or the data it interacts with evolves. Monitoring LLMs once they’re in production is paramount.

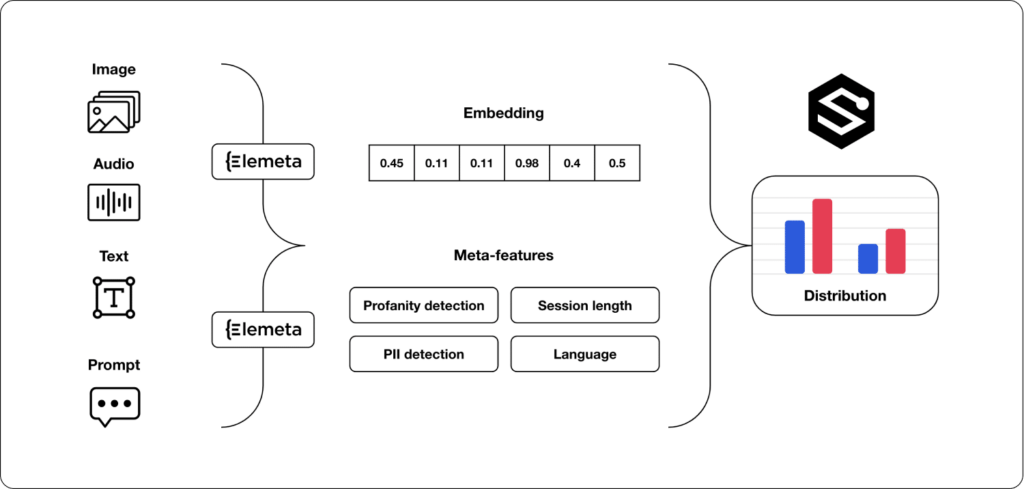

As generative models like LLMs take center stage, monitoring their behavior becomes even more critical. Superwise can monitor any kind of structured-data use case using its best-of-breed monitoring and anomaly detection capabilities. For unstructured data, tools like Elemeta make tracking these models more streamlined. Elemeta can be applied on top of any type of unstructured data, whether it’s images, audio, text, or comms, and helps you identify features that can be monitored and measured.
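The sketch below does not use Elemeta’s API. It is a generic, plain-Python illustration of the underlying idea: turning each unstructured LLM prompt or response into a handful of numeric meta-features that ordinary monitoring can track. The specific features chosen here are assumptions for illustration, not Elemeta’s feature set.

```python
import re
from typing import Dict

URL_RE = re.compile(r"https?://\S+")


def text_meta_features(text: str) -> Dict[str, float]:
    """Compute a few simple, monitorable features from a piece of text."""
    words = text.split()
    letters = [c for c in text if c.isalpha()]
    return {
        "char_count": float(len(text)),
        "word_count": float(len(words)),
        "avg_word_length": sum(len(w) for w in words) / len(words) if words else 0.0,
        "uppercase_ratio": (
            sum(c.isupper() for c in letters) / len(letters) if letters else 0.0
        ),
        "url_count": float(len(URL_RE.findall(text))),
    }


if __name__ == "__main__":
    response = "URGENT: verify your account at https://example.com/login now"
    print(text_meta_features(response))
```

Once text is reduced to numbers like these, the same integrity checks and drift metrics used for structured models can be applied to them.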

But that’s just the tip of the iceberg. With LLMs, we’re not only looking at data integrity and drifts but more intricate challenges like model hallucinations. And as the industry gears up for more advancements, topics like prompt injections and fine-tuning are sure to stir up some engaging debates.
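Drift in a monitored text feature, such as response length, can be tracked by comparing a reference window with recent production traffic. Below is a minimal Python sketch, assuming the Population Stability Index (PSI) as the drift metric (one common choice, not necessarily what Superwise uses) with quantile bin edges taken from the reference data. The 0.2 alert threshold is a widely used rule of thumb, not a universal standard, and the sample data is hypothetical.

```python
import math
from bisect import bisect_right
from typing import List, Sequence


def _bin_shares(values: Sequence[float], edges: List[float], eps: float) -> List[float]:
    """Fraction of values per bin; eps avoids log(0) for empty bins."""
    counts = [0] * (len(edges) + 1)
    for v in values:
        counts[bisect_right(edges, v)] += 1
    total = len(values)
    return [max(c / total, eps) for c in counts]


def psi(
    reference: Sequence[float],
    current: Sequence[float],
    n_bins: int = 5,
    eps: float = 1e-4,
) -> float:
    """Population Stability Index of `current` relative to `reference`."""
    ref_sorted = sorted(reference)
    # Inner bin edges at reference quantiles, so each reference bin holds ~equal mass.
    edges = [ref_sorted[int(len(ref_sorted) * i / n_bins)] for i in range(1, n_bins)]
    ref = _bin_shares(reference, edges, eps)
    cur = _bin_shares(current, edges, eps)
    return sum((c - r) * math.log(c / r) for r, c in zip(ref, cur))


if __name__ == "__main__":
    # Hypothetical response lengths (in words) before and after a prompt change.
    baseline = [float(n) for n in range(20, 120)]
    recent = [float(n) for n in range(60, 160)]
    score = psi(baseline, recent)
    print(f"PSI = {score:.3f}", "-> investigate" if score > 0.2 else "-> stable")
```

A drift alert like this only says that the inputs or outputs changed; judging whether the change is a problem, such as a rise in hallucinations, still needs task-specific evaluation.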

Looking ahead

When bringing LLMs to life in a production environment, many things need to be in place. Making sure that happens requires sophisticated capabilities, including a best-of-breed vector database, the ability to fine-tune, some kind of orchestrator, and a way to build the complex tasks involved. Many moving pieces need to come together, much like when we started monitoring ML models and got into MLOps a few years ago. Stay tuned for more insights in our upcoming webinars, where we’ll talk about general deployment strategies, architectures for deploying LLMs, and more ways to tune LLMs so they’re relevant to your specific business cases.
