In the previous posts in this series, we discussed drift extensively, covering data drift, concept drift, and the various drift metrics themselves. As we explained, drift can happen for many reasons: technical issues in the pipeline, external factors, or even internal business changes. Now that we’re well versed in the different types of drift, why they happen, and how to identify them, we need to understand how to troubleshoot model drift before it impacts our business’s bottom line.

More posts in this series:

- Everything you need to know about drift in machine learning

- Data drift detection basics

- Concept drift detection basics

- A hands-on introduction to drift metrics

- Common drift metrics

- Troubleshooting model drift

- Model-based techniques for drift monitoring

Methods for troubleshooting model drift

Many businesses consider retraining the “go-to solution” for drift (spoiler: retraining is not always the answer). But because drift has many causes and occurs in many different forms, there is no one right way to resolve it. To convince you of this, we’ll review the actions you can take when you detect drift and discuss how to decide which resolution path fits the drift context.

Retraining

Retraining is the most obvious option, but it can also present a significant challenge. The general idea behind periodic retraining is that fresh is best: rerunning the original training flow on fresh data will naturally correct the drift. In theory, this makes sense and should be straightforward, but in reality, retraining is doubly complex, from both an automation and a data relevance point of view.

The process of retraining and deploying a new model is highly sensitive and can be time- and effort-intensive if it is not automated. Retraining automations can range from simple algorithm refits on a fresh dataset to automated preprocessing steps alongside feature selection, data normalization, and so forth. On the deployment side, the process could be automated to replace the active model version’s pickle and even run an automated canary deployment or A/B configuration. These automations require some effort, but applying these steps manually tends to introduce human error. Even something as simple as copying the wrong pickle and using it as the new model version can introduce major problems.

Automation expertise aside, another key challenge of retraining to solve a drift issue is data relevancy: do we even have the relevant data needed to retrain? In many use cases, such as LTV prediction, the actual label is only collected after days, weeks, or even months. Even if we discover drift, collecting enough examples of this new reality might take time. To read more on how to build a successful and automated retraining strategy, check out these blogs on data-driven retraining with production insights and a framework for a successful continuous training strategy.
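Before committing to a retrain, it can help to check whether enough labeled data from the post-drift period actually exists. The sketch below is a minimal illustration under stated assumptions: the `retraining_readiness` function, the `(event_date, label)` row format, and the `min_examples` threshold are all hypothetical, and a label of `None` stands for ground truth (such as realized LTV) that has not been collected yet.

```python
from datetime import date


def retraining_readiness(rows, drift_start, min_examples):
    """Check whether enough post-drift labeled data exists to retrain.

    rows: list of (event_date, label) tuples (hypothetical format);
    label is None while the ground truth has not been collected yet.
    min_examples: business-defined minimum number of labeled examples.
    """
    # Only examples from the "new reality" after the drift started are relevant.
    post_drift = [label for event_date, label in rows if event_date >= drift_start]
    labeled = sum(1 for label in post_drift if label is not None)
    pending = len(post_drift) - labeled
    return {"labeled": labeled, "pending": pending, "ready": labeled >= min_examples}


# Usage with made-up LTV rows: one post-drift label has arrived, two are pending.
rows = [
    (date(2023, 5, 1), 120.0),
    (date(2023, 6, 2), 340.0),
    (date(2023, 6, 3), None),
    (date(2023, 6, 4), None),
]
status = retraining_readiness(rows, date(2023, 6, 1), min_examples=2)
```

If `ready` is false, retraining now would mostly re-learn the pre-drift world, which is a signal to consider one of the other options below while labels accumulate.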

Fix the source

Take, for example, an insurance company that uses ML to detect fraudulent claims. A bug was introduced into the digital claim submission form during the last website update: whenever new claims are submitted and processed, only the region is passed to the database instead of the full incident location reported by the customer in the form. Our classifier uses the exact location, and it’s an important attribute for classifying a claim. Because it’s missing, the chance of a claim being classified as fraudulent increases. In this case, the root cause of the drift in the location field is a bug, which caused input drift that in turn affected the model’s decisions. The right way to solve this is, of course, to fix the bug in the submission form, not to retrain.
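A simple data validation check can surface this kind of bug directly instead of waiting for model performance to degrade. The sketch below assumes hypothetical `region` and `location` fields on each claim record and an arbitrary 5% tolerance; it flags a batch when too many stored locations are empty or identical to the region.

```python
def region_only_rate(claims):
    """Share of claims whose stored location is missing or just the region.

    claims: list of dicts with hypothetical 'region' and 'location' keys.
    """
    if not claims:
        return 0.0
    degraded = sum(
        1
        for c in claims
        if not c.get("location") or c["location"].strip() == c["region"].strip()
    )
    return degraded / len(claims)


def location_check(claims, max_rate=0.05):
    """Return (passes, rate); max_rate is an assumed tolerance, not a standard."""
    rate = region_only_rate(claims)
    return rate <= max_rate, rate


before_bug = [
    {"region": "North", "location": "North, 12 Oak St, Springfield"},
    {"region": "South", "location": "South, 4 Elm Rd, Shelbyville"},
]
after_bug = [
    {"region": "North", "location": "North"},
    {"region": "South", "location": "South"},
    {"region": "East", "location": "East, 9 Pine Ave, Ogdenville"},
]
```

Running `location_check` on each new batch points at the submission form as the source, which is exactly where the fix belongs.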

Always examine the drift; it should give you a better understanding of why you are seeing a different set of values from the expected ones. If the cause of the change is unwanted, you will need to fix the source. This kind of fix can be done in the source system when a bug needs to be fixed (like in the example above). Or it might be something you can fix in the model’s preprocessing steps if the issue is due to an unexpected change in the schema. For example, this could happen if some component in the source systems changes the unit of distance from inches to centimeters. In these cases, you don’t usually need to touch the model. It’s just a matter of fixing the data that is input to the model.
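For the inches-to-centimeters case, a preprocessing guard can detect the unit switch and convert values back before they reach the model. This is a heuristic sketch only: it assumes the feature’s true distribution is otherwise stable, so a median roughly 2.54 times the reference median (in inches) indicates a unit change. The function names and the 15% tolerance are hypothetical; an explicit schema or unit contract with the source system is the more robust fix.

```python
from statistics import median

CM_PER_INCH = 2.54


def looks_like_cm(values, reference_median_inches, tolerance=0.15):
    """Heuristic: incoming median is about 2.54x the reference median in inches."""
    ratio = median(values) / reference_median_inches
    return abs(ratio - CM_PER_INCH) / CM_PER_INCH <= tolerance


def normalize_to_inches(values, reference_median_inches):
    """Convert a batch back to inches if the upstream system switched to cm."""
    if looks_like_cm(values, reference_median_inches):
        return [v / CM_PER_INCH for v in values]
    return list(values)


# A batch that arrived in centimeters, and one that is still in inches.
converted = normalize_to_inches([25.4, 50.8, 12.7], reference_median_inches=10.0)
unchanged = normalize_to_inches([10, 12, 8], reference_median_inches=10.0)
```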

Do nothing

In some cases, the issue will disappear on its own in the time it would take you to resolve it; for example, during holidays or special events such as Singles’ Day in China. In these seasonal cases, we can expect considerable changes in customer behavior, and if we don’t account for them in advance, they’re likely to set off drift alerts. This kind of drift typically blows over within a few hours or a day of being discovered. While the drift will “correct” itself, the decision to do something or nothing is business driven. Is even one hour of sub-optimal performance too costly or risky? If so, perhaps you should have a model specifically for this period, or switch to a safety net in advance.
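One way to encode the “do nothing” decision is to route drift alerts through a calendar of known events. The sketch below is hypothetical throughout: the `EXPECTED_EVENTS` calendar, the action names, and the `high_risk` business flag are assumptions for illustration. An alert on a known event day is annotated and left alone unless the business has decided that even short degradation is too costly.

```python
from datetime import date

# Hypothetical calendar of known events where behavior shifts are expected.
EXPECTED_EVENTS = {
    "singles_day": (11, 11),  # (month, day)
    "christmas": (12, 25),
}


def expected_event(day, events=EXPECTED_EVENTS):
    """Return the name of a known event falling on `day`, or None."""
    for name, (month, dom) in events.items():
        if (day.month, day.day) == (month, dom):
            return name
    return None


def route_drift_alert(day, drift_score, threshold, high_risk=False):
    """Decide what to do with a drift score on a given day.

    high_risk: business flag meaning even short degradation is too costly.
    """
    if drift_score < threshold:
        return "no_alert"
    if expected_event(day) is None:
        return "investigate"
    return "safety_net" if high_risk else "annotate_and_wait"
```

In practice you would likely widen each event into a window of days, since behavior often shifts before and after the event itself.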

Split the model

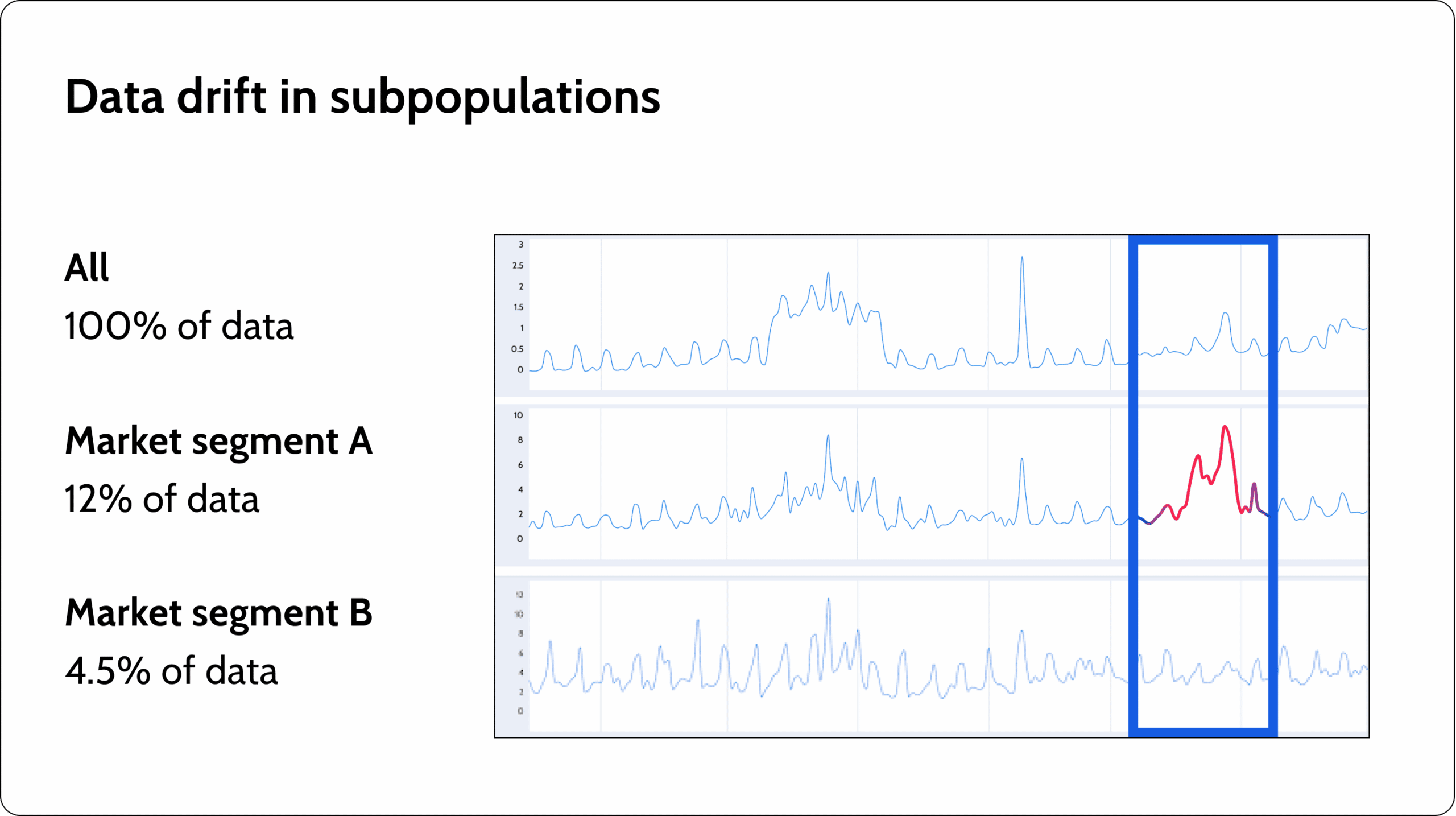

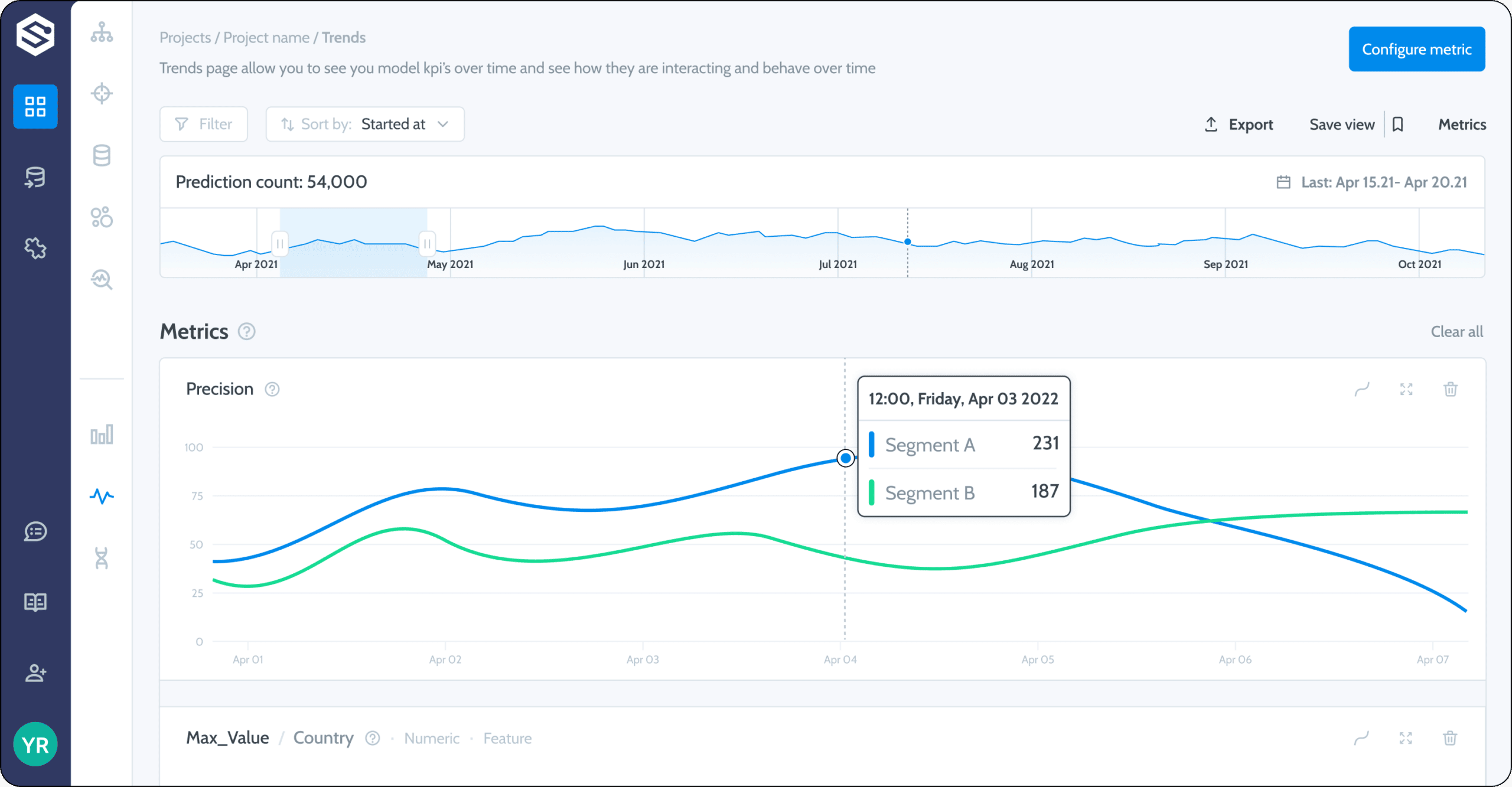

If the drift is unique to a specific subpopulation, you may want to use a dedicated model for the drifted segment. By identifying the segments where the model performs suboptimally, you can better understand what caused the drift and where exactly it happened. Knowing that the drift affects only a specific subpopulation may lead us to split our model into sub-models, each dedicated to a specific segment of our data. There is always a tradeoff between one generalized model trained on the broader data and many small “local” models, but in some cases of major drift, splitting is worth considering.
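The sketch below illustrates both halves of this idea under simple assumptions: compare per-segment error against a baseline to find the drifted segments, then route records from those segments to a dedicated model. The `(segment, prediction, actual)` row format, the 1.5x error ratio, and the `SegmentRouter` class are hypothetical; models are represented as plain callables.

```python
from collections import defaultdict


def error_by_segment(rows):
    """Mean absolute error per segment. rows: (segment, prediction, actual)."""
    totals = defaultdict(float)
    counts = defaultdict(int)
    for segment, pred, actual in rows:
        totals[segment] += abs(pred - actual)
        counts[segment] += 1
    return {s: totals[s] / counts[s] for s in totals}


def drifted_segments(baseline, current, ratio=1.5):
    """Segments whose current error exceeds `ratio` x their baseline error."""
    return sorted(
        s for s, err in current.items() if s in baseline and err > ratio * baseline[s]
    )


class SegmentRouter:
    """Send a record to its segment's dedicated model, else to the global model."""

    def __init__(self, global_model, segment_models, segment_key):
        self.global_model = global_model
        self.segment_models = segment_models
        self.segment_key = segment_key

    def predict(self, record):
        model = self.segment_models.get(record[self.segment_key], self.global_model)
        return model(record)


baseline = error_by_segment([("EU", 10, 12), ("US", 10, 11)])
current = error_by_segment([("EU", 10, 12), ("US", 10, 15)])
router = SegmentRouter(lambda r: "global", {"US": lambda r: "us_model"}, "country")
```

Keeping the global model as the default means only the drifted segment pays the maintenance cost of a separate model.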

Recalibrate the model

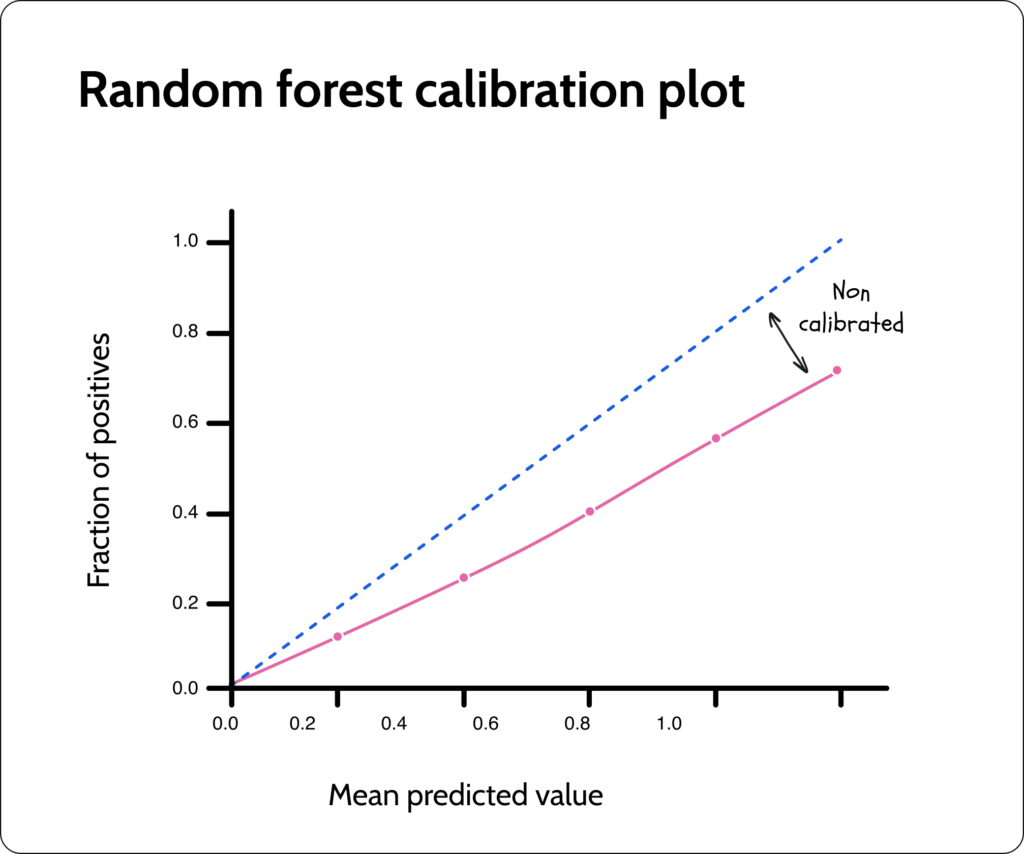

Recalibration is a simple yet powerful technique that can be used with classification or regression models. Let’s say we have a regression task for our customers’ expected lifetime value (LTV). Thanks to a very successful campaign, the company has tapped into a new, higher-income population with a higher expected LTV. Because the a priori LTV of this new incoming population differs significantly from the LTVs the model was trained on, the model could easily underestimate the real expected customer LTV overall. While the model still detects the correct pattern of what should be considered a high-value customer, it’s not accurately predicting the LTV scale. A scale calibration method can be applied as a post-processing step on the model’s predictions. An excellent way to detect whether calibration is indeed needed is to use a calibration chart. The chart above shows that the evaluated model consistently underestimates the probability of the positive class across the range of predicted probabilities. This is also a simple case in which a linear correction (adding 20% to each prediction) is enough to calibrate the model.
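Both steps described here, building a calibration chart and applying a linear post-processing correction, can be sketched in a few lines. This is a minimal illustration, not a specific library’s API: `calibration_table` computes the data behind a reliability curve (mean predicted probability vs. observed positive rate per bin), and `fit_linear_calibration` is an ordinary least-squares fit of `actual ≈ a * pred + b` on recent labeled data, which covers both a multiplicative “+20%” correction and an additive offset. The function names and bin count are assumptions.

```python
def calibration_table(probs, labels, n_bins=5):
    """Per non-empty bin: (bin index, mean predicted prob, observed positive rate)."""
    bins = [[] for _ in range(n_bins)]
    for p, y in zip(probs, labels):
        idx = min(int(p * n_bins), n_bins - 1)  # p == 1.0 goes into the last bin
        bins[idx].append((p, y))
    table = []
    for idx, members in enumerate(bins):
        if members:
            mean_pred = sum(p for p, _ in members) / len(members)
            observed = sum(y for _, y in members) / len(members)
            table.append((idx, mean_pred, observed))
    return table


def fit_linear_calibration(preds, actuals):
    """Least-squares fit of actual ~ a * pred + b; returns a calibration function."""
    n = len(preds)
    mean_x = sum(preds) / n
    mean_y = sum(actuals) / n
    sxx = sum((x - mean_x) ** 2 for x in preds)
    sxy = sum((x - mean_x) * (y - mean_y) for x, y in zip(preds, actuals))
    a = sxy / sxx
    b = mean_y - a * mean_x
    return lambda pred: a * pred + b


# Classifier: observed rates sit above mean predictions, i.e. underestimation.
table = calibration_table([0.1, 0.15, 0.5, 0.55], [0, 1, 1, 1])
# Regression (LTV): actuals are consistently 20% above predictions.
calibrate = fit_linear_calibration([100, 200, 300], [120, 240, 360])
```

Because the correction is applied after the model, the underlying model and its learned ranking of customers stay untouched; only the scale changes.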

Safety net

ML usually replaces an existing, less optimal automated or semi-automated process. These ‘old-school’ processes are typically simple rule-based engines or human-in-the-loop review and escalation processes. In either case, if we’ve moved to ML, we obviously believe that our models will produce significantly better results. That said, under specific circumstances, especially when an issue is detected, it may be a good idea to take a step back and fall back on the previous process until the model or the relevant data pipeline is fixed. If your monitoring is alerting on missing values in all of your top 5 features, pause the model and switch to a safety net ASAP. People may be slower and more prone to error, and rule-based engines may make superficial decisions, but in all likelihood, both will perform better than a model missing its top 5 features.
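A safety net can be wired in as a switch that monitoring flips at the batch level. In the sketch below, `fallback_rules` is a hypothetical stand-in for the pre-ML rule engine or a human-review queue, and the thresholds are assumptions: a top feature counts as broken when more than half of its values are missing, and the switch triggers when at least `min_broken` top features are broken (set it to 5 to mirror the “all top 5 features” example exactly).

```python
def feature_missing_rates(batch, features):
    """Fraction of records in the batch where each feature is None or absent."""
    if not batch:
        return {f: 0.0 for f in features}
    n = len(batch)
    return {f: sum(1 for r in batch if r.get(f) is None) / n for f in features}


def choose_scorer(batch, top_features, max_missing_rate=0.5, min_broken=1):
    """Return ('model' or 'safety_net', list of broken top features)."""
    rates = feature_missing_rates(batch, top_features)
    broken = sorted(f for f, rate in rates.items() if rate > max_missing_rate)
    source = "safety_net" if len(broken) >= min_broken else "model"
    return source, broken


def score_batch(batch, model, fallback_rules, top_features, **kwargs):
    """Score every record with the model, or with the fallback when tripped."""
    source, _ = choose_scorer(batch, top_features, **kwargs)
    scorer = fallback_rules if source == "safety_net" else model
    return source, [scorer(record) for record in batch]


model = lambda r: "model_decision"
fallback_rules = lambda r: "rule_decision"
broken_batch = [{"age": None, "income": None}, {"age": None, "income": None}]
healthy_batch = [{"age": 30, "income": 5000}]
```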

Using monitoring to troubleshoot model drift

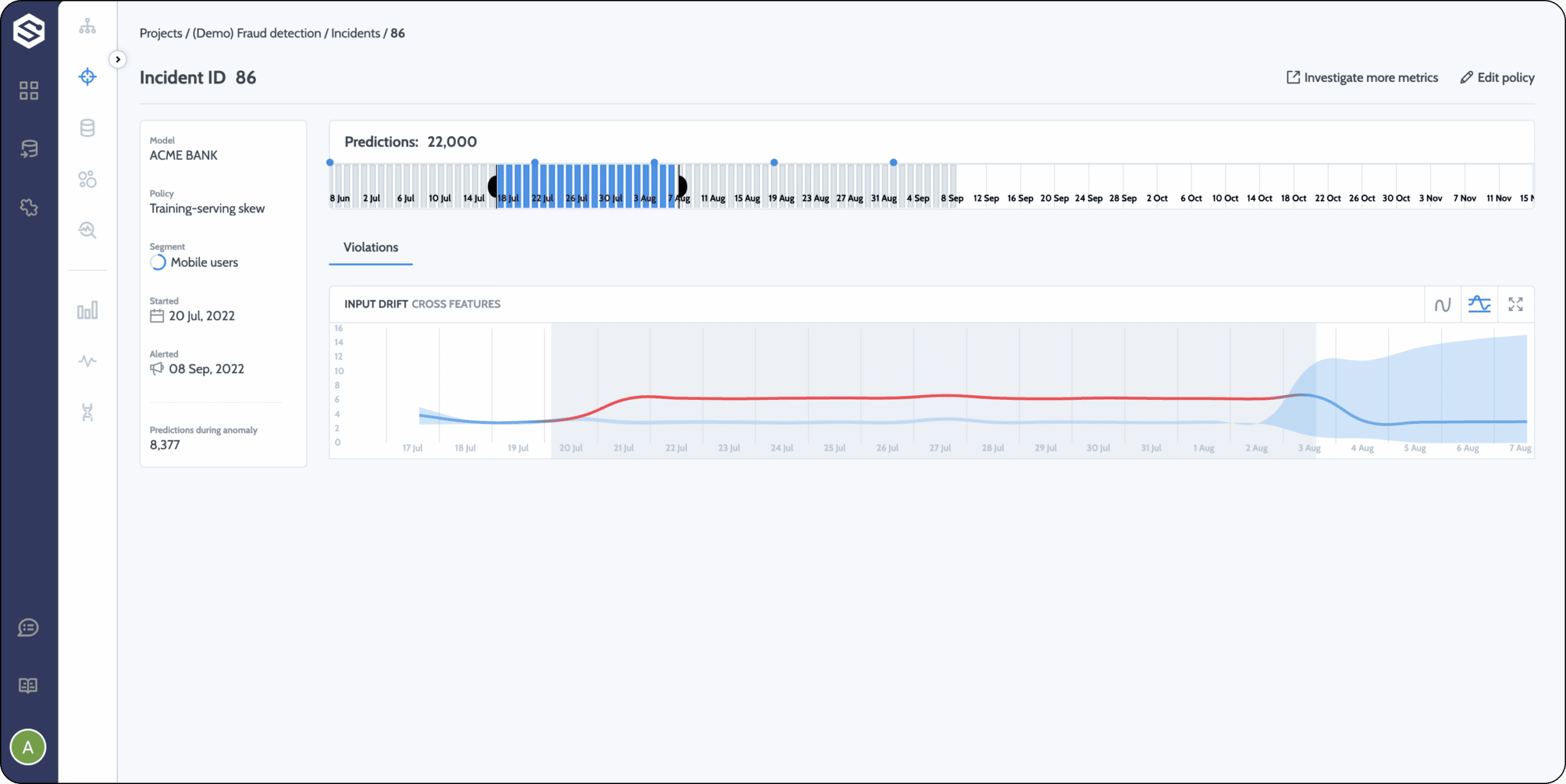

Step 1: Detect the drift

Detect when the drift started and try to understand what kind of drift you are experiencing.
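To pinpoint when drift started, one option is to compute a drift metric per time window against a reference window and report the first window that crosses a threshold. The sketch below uses the Population Stability Index (PSI) over fixed bin edges; the 0.2 threshold is a commonly cited rule of thumb rather than a universal constant, and the function names, bin edges, and epsilon smoothing are assumptions for illustration.

```python
import math


def psi(reference, current, edges, eps=1e-6):
    """Population Stability Index of `current` vs. `reference` over fixed bin edges."""

    def shares(values):
        counts = [0] * (len(edges) + 1)
        for v in values:
            idx = sum(1 for e in edges if v >= e)  # bin index for sorted edges
            counts[idx] += 1
        total = len(values)
        # eps avoids log(0) for empty bins
        return [max(c / total, eps) for c in counts]

    ref, cur = shares(reference), shares(current)
    return sum((c - r) * math.log(c / r) for r, c in zip(ref, cur))


def drift_start(reference, windows, edges, threshold=0.2):
    """Index of the first window whose PSI exceeds the threshold, or None."""
    for i, window in enumerate(windows):
        if psi(reference, window, edges) > threshold:
            return i
    return None


reference = [1, 2, 3, 4] * 25
windows = [reference, reference, [4] * 100]  # the third window has shifted
edges = [2, 3, 4]
```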

Step 2: Determine the impact of the drift

Is it an isolated incident, or does it happen only within a specific subpopulation? Is it a macro event? And how badly is it impacting performance?
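These questions can be partly answered from monitoring data. The sketch below assumes you already have a drift score per segment (from any drift metric) and a higher-is-better performance metric before and after the drift; the 0.2 drift threshold and the rule that drift in at least half of the segments counts as “macro” are hypothetical choices, not standards.

```python
def drift_scope(segment_drift, threshold=0.2, macro_share=0.5):
    """Classify drift as 'none', 'segment', or 'macro'.

    segment_drift: {segment: drift score}.
    """
    drifted = sorted(s for s, score in segment_drift.items() if score > threshold)
    if not drifted:
        return "none", drifted
    if len(drifted) / len(segment_drift) >= macro_share:
        return "macro", drifted
    return "segment", drifted


def performance_drop(baseline_metric, current_metric):
    """Relative drop in a higher-is-better metric (e.g., AUC or precision)."""
    return (baseline_metric - current_metric) / baseline_metric
```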

Step 3: Understand why model drift happened

Use your domain knowledge to get a clear understanding of where the drift originated: an external change (something changed in the ecosystem), a desired internal change (the company is targeting a new type of customer), or an undesirable internal change (a bug or unwanted schema change). Look carefully at when it started and which data elements have been affected. Usually, when a data issue occurs, it is reflected in more than one feature. The basic idea is to detect and deeply understand the root cause.
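Because a data issue usually shows up in several features at once, grouping features by the time window in which their drift began can point to a shared upstream source. The sketch below assumes you already have a drift onset per feature from whatever detector you use (`None` meaning the feature never drifted); the function names and the two-feature minimum are hypothetical.

```python
from collections import defaultdict


def group_by_onset(onsets):
    """Group features by the window index in which their drift started.

    onsets: {feature: first drifted window index, or None if never drifted}.
    """
    groups = defaultdict(list)
    for feature, onset in onsets.items():
        if onset is not None:
            groups[onset].append(feature)
    return {onset: sorted(features) for onset, features in sorted(groups.items())}


def likely_shared_cause(onsets, min_features=2):
    """Onset windows where at least `min_features` features started drifting together."""
    return {o: fs for o, fs in group_by_onset(onsets).items() if len(fs) >= min_features}


onsets = {"zip": 3, "city": 3, "street": 3, "age": None, "income": 7}
```

Here the three location-related features drifting in the same window would suggest looking at the component that produces location data, much like the submission-form bug described earlier.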

Step 4: Decide what’s next

What comes next depends on the tradeoff between the impact of the drift, the effort required to correct it, and the business impact if nothing is fixed. As the options discussed above show, each involves a different level of effort to execute. If the drift isn’t that important, it doesn’t pay to invest much effort into figuring out what caused it. On the other hand, if it’s influencing business performance and could harm the company, it’s worth doing whatever it takes to fix the problem.
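As a rough illustration, the decision can be expressed as a small policy that maps the diagnosis from the previous steps to one of the options described in this post. This is a toy sketch, not a prescribed procedure: the category labels, action names, and ordering of the rules are assumptions, and a real policy should weigh your own business costs.

```python
def choose_action(cause, scope, impact, transient=False, pattern_intact=False):
    """Map a diagnosed drift to a resolution option.

    cause: "bug", "schema_change", "external", or "desired_internal"
    scope: "segment" or "macro"
    impact: "low", "medium", or "high"
    transient: drift is expected to pass on its own (e.g., a holiday)
    pattern_intact: model still ranks correctly but the scale is off
    """
    if impact == "low":
        return "do_nothing"
    if cause in ("bug", "schema_change"):
        # Fix the data, not the model; fall back while fixing if stakes are high.
        return "safety_net_then_fix_source" if impact == "high" else "fix_source"
    if transient:
        return "safety_net" if impact == "high" else "do_nothing"
    if scope == "segment":
        return "split_model"
    return "recalibrate" if pattern_intact else "retrain"
```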

Summary

Although not all data drift will necessarily impact the performance of your business process, it’s usually a sign that something between the model and the data isn’t optimized for your real-world environment. Having the right tools and platforms to measure and monitor drift is fundamental to machine learning operations. Your ML observability platform should let you easily choose what to monitor, measure your distributions, point out emerging anomalies or trends, and help you understand what factors are causing them.

Want to monitor drift?

Head over to the Superwise platform and get started with drift monitoring for free with our community edition (3 free models!).
