In the rapidly evolving landscape of Large Language Models (LLMs), size and deployment have emerged as pivotal factors for practitioners and businesses. Is bigger necessarily better? How can we balance the computational horsepower and advanced capabilities required by the larger models against the resource efficiency and nimbleness of smaller ones?

In this blog post, we’ll dive into the key factors guiding these decisions and provide practical, hands-on guidance on LLM size versus performance, scalability, and cost-effectiveness in real-world applications. Whether you’re building enterprise solutions or an innovative startup, understanding these trade-offs is key to leveraging the full potential of LLMs.

What is LLM size, and why is it important?

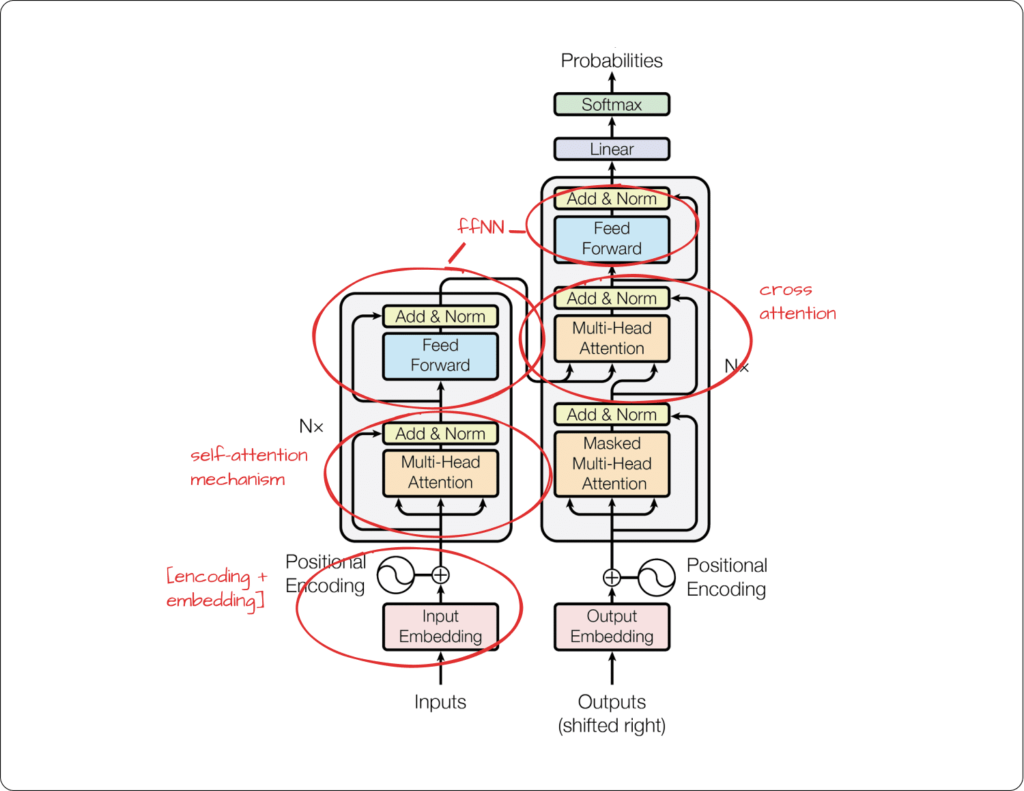

Let’s take a step back and revisit what LLMs are. Coming in many flavors, LLMs derive from transformer models like the one proposed by Vaswani et al. in “Attention is all you need.”

Adapted from Vaswani et al., “Attention is all you need.”

Everything in a neural network such as a transformer is a tensor (a multi-dimensional array), and every element of those tensors counts as a parameter. Typical transformer architectures like the one depicted in the figure above include the weights and biases learned in the multi-head self-attention mechanisms, position-wise feed-forward networks, and layer normalization components. The parameters are thus the numbers (floats or ints) that the model adjusts during training to fit the data.

Note that the memory a model occupies is not determined by the number of parameters alone when techniques such as quantization are applied to the learned tensors. Quantization “converts” tensors typically stored as 16-bit floats into integers of 8 or 4 bits. So, a 70B parameter model may be smaller in memory than a 50B parameter model because of this quantization process. Further down this blog post, we’ll dive into the details and implications of quantization and other model size reduction techniques.
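To make the 70B versus 50B comparison concrete, here is a back-of-the-envelope sketch. The function name `model_size_gb` is ours, and the estimate counts weight storage only: it ignores the small overhead that real quantization formats add for scales and zero-points.

```python
def model_size_gb(num_params: float, bits_per_param: int) -> float:
    """Approximate weight storage in gigabytes (1 GB = 1e9 bytes)."""
    return num_params * bits_per_param / 8 / 1e9


# 70B parameters quantized to 4-bit integers
size_70b_int4 = model_size_gb(70e9, 4)
# 50B parameters kept as 16-bit floats
size_50b_fp16 = model_size_gb(50e9, 16)

print(f"70B @ 4-bit : {size_70b_int4:.0f} GB")   # 35 GB
print(f"50B @ 16-bit: {size_50b_fp16:.0f} GB")   # 100 GB
```

Under these assumptions, the quantized 70B model needs roughly a third of the memory of the unquantized 50B model.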

Moreover, the overall size of a Large Language Model (LLM), or transformer model, is determined by various factors:

- Parameter count and layer architecture: The architecture of transformer models includes a sequence of identical layers, each adding to the model’s overall depth. Every layer contains its own set of parameters, making the depth (number of layers) a crucial factor in determining the model’s size.

- Embedding dimensions: In transformer models, the input and output embeddings play a vital role. These embeddings transform tokens into fixed-size vectors whose length is known as the embedding dimension. The total parameter count grows with this dimension (roughly quadratically for the weight matrices inside each layer), so it strongly influences the model’s size.
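The two factors above can be combined into a rough parameter estimate. The sketch below assumes a standard transformer block with four attention projection matrices and a feed-forward network four times wider than the embedding dimension. It ignores biases, layer normalization, and any architecture-specific variations, and the configuration values are purely illustrative.

```python
def estimate_transformer_params(
    n_layers: int, d_model: int, vocab_size: int, ffn_mult: int = 4
) -> int:
    """Rough parameter count for a standard transformer stack."""
    attention = 4 * d_model * d_model                   # Q, K, V and output projections
    feed_forward = 2 * d_model * (ffn_mult * d_model)   # up and down projections
    embeddings = vocab_size * d_model                   # token embedding table
    return n_layers * (attention + feed_forward) + embeddings


# Illustrative configuration (not taken from any specific model card).
total = estimate_transformer_params(n_layers=32, d_model=4096, vocab_size=32000)
print(f"{total / 1e9:.2f}B parameters")

# Doubling the embedding dimension roughly quadruples the per-layer parameters.
base = estimate_transformer_params(32, 4096, vocab_size=0)
wide = estimate_transformer_params(32, 8192, vocab_size=0)
print(wide / base)
```

Depth scales the count linearly, while the embedding dimension scales the per-layer weights quadratically, which is why widening a model is so expensive.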

Research in this area is ongoing, and many different mechanisms have been introduced and redesigned to optimize how models abstract their inputs. Ultimately, neural networks like transformers will always work by multiplying multiple layers of matrices. A clear understanding of how they work allows us to predict the implications of LLM size. The most important aspects of LLM size can be split into two main parts, model quality and technical challenges:

Model quality

- Learning capability and complexity handling: Larger models can encapsulate and learn more complex patterns in data due to their expansive parameter space. This is particularly pertinent in tasks involving intricate language structures, multi-modality (CLIP or GPT-4), or subtle context dependencies such as long conversations.

- Generalization and performance: A larger parameter space allows for better generalization. The model can perform well on a broader range of tasks and datasets, often achieving state-of-the-art results on several NLP benchmarks at once. For example, the typical scorecard of a new LLM covers test sets for many different tasks, such as translation, question answering, summarization, and sentiment analysis, and larger models tend to excel across them. This enhanced generalization means they can adapt to more varied inputs and produce more nuanced outputs. For applications where versatility and broad understanding are essential, investing in a larger model can be a wise decision, offering a level of adaptability that smaller models typically cannot match.

- Risk of overfitting: The classical bias-variance trade-off applies here as well. With an extensive number of parameters, large models might overfit to the training data, especially if the data lacks diversity or is not big enough. This calls for regularization techniques and careful training data selection, even though the number of “samples” used for training these models is bigger than ever.

Technical challenges

- Computational and memory requirements: Size directly correlates with the computational burden. Larger models need more memory for storing parameters and intermediate computations, especially during training and fine-tuning, where backpropagation requires storing gradients. This translates to higher GPU/TPU compute and VRAM requirements.

- Training and inference latency: Larger models generally require more time for both training and inference. This can be a limiting factor for applications needing real-time or near-real-time responses. Inference, although more straightforward and less “expensive” than training, requires complex solutions for solving latency issues at scale. In fact, AWS and NVIDIA estimate that more than 80% of total cloud costs are due to inference tasks and not training.

- Environmental and economic costs: Tied to the previous two points, the training and deployment of large models entail significant energy consumption, leading to higher environmental and economic costs. The carbon footprint and operational costs become crucial factors in model selection and deployment, particularly in light of the current climate crisis.
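To illustrate why training and fine-tuning are so much more memory-hungry than inference, the sketch below compares the two for the model state alone. The byte counts are assumptions: 16-bit weights for inference, and for training a common mixed-precision Adam setup (16-bit weights and gradients plus 32-bit master weights and two optimizer moments, about 16 bytes per parameter). Activations and the inference KV cache are deliberately left out.

```python
def inference_memory_gb(num_params: float, bytes_per_weight: int = 2) -> float:
    """Weights only, assuming 16-bit storage by default."""
    return num_params * bytes_per_weight / 1e9


def training_memory_gb(
    num_params: float,
    bytes_weights: int = 2,     # 16-bit working copy of the weights
    bytes_grads: int = 2,       # 16-bit gradients
    bytes_optimizer: int = 12,  # 32-bit master weights + two Adam moments
) -> float:
    """Weights, gradients, and optimizer state; activations excluded."""
    return num_params * (bytes_weights + bytes_grads + bytes_optimizer) / 1e9


params_7b = 7e9
print(f"inference: {inference_memory_gb(params_7b):.0f} GB")  # 14 GB
print(f"training : {training_memory_gb(params_7b):.0f} GB")   # 112 GB
```

Even before counting activations, full fine-tuning under these assumptions needs about eight times the memory of serving the same model.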

In essence, the size of an LLM is a critical technical aspect that influences its learning abilities, computational demands, and overall effectiveness in real-world applications. Balancing these factors is vital for the optimal utilization of these models in any application domain.

The ChatGPT “addiction” and the one-size-fits-all era

The widespread adoption and acclaim of ChatGPT, particularly since the advent of GPT-3.5, have led many to believe that a one-size-fits-all model is the way to develop LLM-based applications. However, this perspective is often suboptimal in terms of resource management. Each application has unique needs and constraints, and what works for one may not suit another. Relying solely on a single, large-scale model like GPT-4 can lead to inefficiencies and unnecessary resource expenditure in scenarios where a smaller, more specialized model would suffice. This applies to the cost of using hosted LLMs, but it is particularly relevant if your application uses an open-source model deployed in-house. It’s important for developers and businesses to critically evaluate their specific needs and choose a model size and approach that aligns with their operational goals and resource capabilities.

In this landscape of LLM applications, it’s a common misconception that bigger always means better. This is often untrue, especially when the tasks are relatively simple. Oversized models can lead to unnecessary computational overhead and increased operational costs without a proportional benefit in performance. Smaller, more specialized models often provide a more efficient and equally effective solution for straightforward tasks. It’s crucial that we practitioners assess the complexity of the task before choosing the model size, ensuring a balance between capability and efficiency.

For example, when building a RAG (Retrieval Augmented Generation) solution, OpenAI’s off-the-shelf embeddings model may be more than you need if your application runs on a particular, specialized domain. If that’s the case, a version of MiniLM could probably suffice, reducing both model costs and the infrastructure costs of storing the embeddings.

Another example is Microsoft’s recent Phi-2 LLM, which challenges conventional scaling laws by leveraging strategic training choices and prioritizing high-quality, “textbook-style” data. This approach enables Phi-2, with its 2.7 billion parameters, to surpass the performance of significantly larger models in various benchmarks, highlighting its efficiency in common sense reasoning, language understanding, and other areas. Despite its achievements, the model faces limitations due to its focused training dataset, which may impact its adaptability in diverse real-world scenarios. Moreover, evaluating such models accurately is difficult: potential overlaps between training data and public benchmarks add complexity when assessing their true effectiveness.

Model selection best practices

While OpenAI’s GPT-4 stands as the most popular and performant model in the realm of LLMs, another notable trend in the deployment of LLM applications is the lack of rigorous data science methodology when selecting the most suitable model for a specific task. More often than not, there is no comprehensive “test set” in place to validate the relevant metrics and choose the best model with high confidence. Constructing such test sets, though crucial, can be time-consuming and expensive, especially when it involves Human Intelligence Tasks (HITs). As an alternative, some opt to use top-performing models like GPT-4 to validate the results of smaller models like Llama 70B, but this approach can introduce model bias and should be used with caution, especially when performing model distillation.

In the context of task complexity and model selection, it’s essential to understand how to gauge the complexity of various tasks and match them with models of the right scale. This includes exploring situations where smaller, specialized models could surpass or be more efficient than their larger counterparts. The focus should also be on developing methods to accurately measure and compare the performance of models of differing scales, for example with tools such as LLMStudio.

Moreover, a comprehensive cost-benefit analysis of large models is imperative. This involves evaluating the balance between the computational expenses of large models and the benefits they offer in terms of performance. Considering the broader implications, including infrastructure requirements, ongoing maintenance, and energy usage, is crucial. Understanding the correlation between the scalability of applications and the size of employed models is also vital, with a specific emphasis on environmental impact, notably energy consumption and carbon footprint, associated with deploying large-scale LLMs.
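One way to put the test-set and cost-benefit ideas together is to score each candidate on the same labeled test set and pick the cheapest one that clears a quality bar. The sketch below is a minimal illustration, not a full evaluation framework: `Candidate`, `accuracy`, and `cheapest_adequate` are hypothetical names, the models are stand-in lookup functions, exact-match accuracy is only one possible metric, and the cost figures are made up.

```python
from dataclasses import dataclass
from typing import Callable, List, Optional, Tuple

TestSet = List[Tuple[str, str]]  # (prompt, expected answer) pairs


@dataclass
class Candidate:
    name: str
    predict: Callable[[str], str]   # hypothetical wrapper around a model call
    cost_per_1k_requests: float     # illustrative figure, not a real price


def accuracy(predict: Callable[[str], str], test_set: TestSet) -> float:
    """Exact-match accuracy, ignoring case and surrounding whitespace."""
    hits = sum(
        predict(prompt).strip().lower() == expected.strip().lower()
        for prompt, expected in test_set
    )
    return hits / len(test_set)


def cheapest_adequate(
    candidates: List[Candidate], test_set: TestSet, min_accuracy: float
) -> Optional[Candidate]:
    """Return the lowest-cost candidate meeting the accuracy bar, if any."""
    adequate = [c for c in candidates if accuracy(c.predict, test_set) >= min_accuracy]
    return min(adequate, key=lambda c: c.cost_per_1k_requests, default=None)


# Toy test set and stand-in "models" for demonstration only.
test_set = [
    ("2 + 2 =", "4"),
    ("Capital of France?", "Paris"),
    ("Opposite of hot?", "cold"),
    ("3 * 3 =", "9"),
]
small_answers = {"2 + 2 =": "4", "Capital of France?": "paris", "Opposite of hot?": "cold"}
large_answers = dict(test_set)
candidates = [
    Candidate("small-model", lambda p: small_answers.get(p, ""), 1.0),
    Candidate("large-model", lambda p: large_answers.get(p, ""), 10.0),
]

choice = cheapest_adequate(candidates, test_set, min_accuracy=0.7)
print(choice.name if choice else "no candidate meets the bar")
```

With a 0.7 bar the small stand-in model wins on cost. Raising the bar to 0.9 switches the choice to the larger one, which is exactly the trade-off a real test set lets you quantify.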

Practical considerations for deploying multiple models

The strategy of employing multiple models in a solution can be significantly enhanced by investing in techniques like quantization and fine-tuning. Quantization, the process of reducing the precision of the model’s parameters, can dramatically decrease the model’s size and increase inference speed without a substantial loss in accuracy. Coupled with fine-tuning, which adapts a model to specific tasks or datasets, this approach allows for a versatile and resource-efficient deployment. Utilizing a multi-model solution with these techniques enables a tailored response to varying requirements, effectively balancing performance and resource usage. However, there is an increase in maintenance, prompt engineering, and software development effort to allow all parts to work together seamlessly.

Addressing high computational requirements: Running advanced NLP models on minimal hardware is a significant challenge, as evidenced by even large-scale organizations like OpenAI encountering latency issues during high-traffic periods. This complexity partly stems from the immense storage requirements. For example, you will need a disk of 2TB to store a trillion parameters at 16 bits each.

Reducing model size with quantization: Quantization involves converting a neural network’s high-precision weights (typically 32-bit floating-point numbers) into lower-precision representations (such as 16-bit or 8-bit). This process helps speed up inference and reduce memory usage without significantly compromising the model’s performance, as seen on the HuggingFace leaderboard. Quantization can be performed statically (before model deployment) or dynamically (during runtime), and it often involves techniques to minimize the loss of information. This is crucial in maintaining the model’s ability to understand and generate human-like text despite the reduced precision. The success of quantization in LLMs demonstrates a balance between efficiency and performance, making advanced AI models more accessible and practical for a wide range of applications. However, we need a good test set to verify that a “quantized” model is good enough for our application.
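As a rough illustration of what quantization does to a tensor, the sketch below implements symmetric per-tensor 8-bit quantization in plain Python. This is one simple scheme chosen for clarity, not necessarily what any particular library does. The function names and sample weights are ours.

```python
from typing import List, Tuple


def quantize_int8(weights: List[float]) -> Tuple[List[int], float]:
    """Map floats to integers in [-127, 127] using a single shared scale."""
    max_abs = max(abs(w) for w in weights)
    scale = max_abs / 127 if max_abs > 0 else 1.0
    quantized = [max(-127, min(127, round(w / scale))) for w in weights]
    return quantized, scale


def dequantize(quantized: List[int], scale: float) -> List[float]:
    """Recover approximate float values from the integers and the scale."""
    return [q * scale for q in quantized]


weights = [0.42, -1.27, 0.003, 0.88, -0.56]
q_weights, scale = quantize_int8(weights)
restored = dequantize(q_weights, scale)

# Rounding to the nearest integer bounds the error by half a quantization step.
max_error = max(abs(w - r) for w, r in zip(weights, restored))
print(q_weights, f"scale={scale:.4f}", f"max_error={max_error:.4f}")
```

Each weight now fits in one byte instead of four, at the cost of a rounding error of at most half the scale. Whether that error matters for a given application is what the test set is for.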

Strategies for efficient inference: Processing inputs can consume substantial memory. To mitigate this, implementing batch prediction and dynamic batching is essential. Using web frameworks such as FastAPI or Flask to create online prediction endpoints introduces challenges because they rely on asynchronous programming or multiprocessing. Both can conflict with machine learning models, which may block the event loop or be too large to run as multiple instances. Dynamic batching offers a solution by processing inputs from several requests together on a single model instance. This approach works with the web framework’s concurrency model while maximizing hardware utilization.
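A minimal sketch of dynamic batching with `asyncio` follows. The `DynamicBatcher` class and `fake_model` function are hypothetical stand-ins, and the policy shown (wait a short window after the first request, then take up to `max_batch_size` queued items) is only one of several reasonable choices. The batched call runs in a thread pool so that a blocking model does not stall the event loop.

```python
import asyncio
from typing import Callable, List


class DynamicBatcher:
    """Collects concurrent requests and serves them with one batched call."""

    def __init__(self, batch_fn: Callable[[List[str]], List[str]],
                 max_batch_size: int = 8, max_wait_s: float = 0.01):
        self.batch_fn = batch_fn
        self.max_batch_size = max_batch_size
        self.max_wait_s = max_wait_s
        self.queue: asyncio.Queue = asyncio.Queue()

    async def predict(self, item: str) -> str:
        # Each request enqueues its input and waits on its own future.
        future = asyncio.get_running_loop().create_future()
        await self.queue.put((item, future))
        return await future

    async def run(self) -> None:
        loop = asyncio.get_running_loop()
        while True:
            batch = [await self.queue.get()]        # wait for the first request
            await asyncio.sleep(self.max_wait_s)    # let more requests arrive
            while len(batch) < self.max_batch_size and not self.queue.empty():
                batch.append(self.queue.get_nowait())
            inputs = [item for item, _ in batch]
            # Run the blocking model call off the event loop.
            outputs = await loop.run_in_executor(None, self.batch_fn, inputs)
            for (_, future), output in zip(batch, outputs):
                if not future.done():
                    future.set_result(output)


batch_sizes: List[int] = []


def fake_model(batch: List[str]) -> List[str]:
    # Stand-in for a real batched forward pass.
    batch_sizes.append(len(batch))
    return [text.upper() for text in batch]


async def main() -> List[str]:
    batcher = DynamicBatcher(fake_model, max_batch_size=4, max_wait_s=0.01)
    worker = asyncio.create_task(batcher.run())
    results = await asyncio.gather(*(batcher.predict(f"request {i}") for i in range(6)))
    worker.cancel()
    return results


results = asyncio.run(main())
print(results, batch_sizes)
```

In a FastAPI or similar async endpoint, each request handler would simply `await batcher.predict(...)`, while a single background task runs `batcher.run()` against the one model instance.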

Distributed inference for enhanced performance: The complex nature of LLMs, highlighted by memory requirements that grow quadratically with sequence length in the standard attention mechanism and linearly with the number of layers, necessitates advanced solutions. Distributed inference, where the LLM is distributed across multiple machines, each handling a portion of the input, is a practical approach. Model Parallelism (MP) involves distributing the computation and parameters of each layer across several devices. It still requires significant memory for processing multiple samples, as well as GPUs with sufficient throughput to handle large batch sizes without computational bottlenecks.
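To show the core idea behind splitting a layer across devices, the sketch below partitions a weight matrix column-wise into shards, multiplies the input by each shard separately (each product could run on its own device), and concatenates the partial outputs. It uses plain Python lists instead of a real tensor library, and the helper names are ours. Production systems add inter-device communication and many optimizations not shown here.

```python
from typing import List

Matrix = List[List[int]]


def matmul(x: Matrix, w: Matrix) -> Matrix:
    """Plain matrix product x @ w."""
    return [[sum(a * b for a, b in zip(row, col)) for col in zip(*w)] for row in x]


def split_columns(w: Matrix, n_shards: int) -> List[Matrix]:
    """Split w into n_shards column blocks (assumes an even split)."""
    step = len(w[0]) // n_shards
    return [[row[i * step:(i + 1) * step] for row in w] for i in range(n_shards)]


def parallel_linear(x: Matrix, shards: List[Matrix]) -> Matrix:
    """Compute x @ w shard by shard, then concatenate the partial outputs."""
    partials = [matmul(x, shard) for shard in shards]  # one product per "device"
    output = []
    for r in range(len(x)):
        row: List[int] = []
        for partial in partials:
            row.extend(partial[r])
        output.append(row)
    return output


x = [[1, 2], [3, 4]]
w = [[1, 0, 2, 1], [0, 1, 1, 3]]

full = matmul(x, w)
sharded = parallel_linear(x, split_columns(w, n_shards=2))
print(full == sharded)
```

Each shard holds only half of the layer’s parameters, which is what lets a layer too large for one device be served by several.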

Effectively optimizing the infrastructure for LLMs requires a strategic approach that balances computational demands with advanced techniques like dynamic batching and distributed inference, ensuring both efficiency and scalability in processing.

Conclusion

In conclusion, building LLM applications requires a deep understanding of model size and its impact on performance, efficiency, and practicality. While the quality of large models like GPT-4 is evident in their generalization power, it’s crucial to consider the associated challenges of finding a single self-deployed model that can be equivalent in quality. When hosting a single LLM, one needs to account for the computational and memory requirements, inference latency, and environmental and economic costs. The trend towards a one-size-fits-all approach, as seen with the popularity of models like ChatGPT, doesn’t always align with individual applications’ specific needs and constraints. Smaller, specialized models can often provide a more balanced solution, particularly for tasks of limited complexity.

Key practices for effective LLM deployment include rigorous task complexity assessment, precise model scaling, and cost-benefit analysis. Techniques like quantization and fine-tuning are essential for optimizing models for specific use cases, ensuring performance-resource balance. Infrastructure-wise, adopting distributed inference and dynamic batching is critical for managing high computational loads efficiently.

Ultimately, LLM selection should align with a blend of good data science practices, technical feasibility, economic viability, and environmental sustainability. Staying agile and informed in this rapidly evolving field is crucial for maximizing LLM potential across various applications.
