Model-based techniques for drift monitoring offer significant advantages over statistical-based techniques in scenarios where the data distribution is complex, non-stationary, or high-dimensional. These techniques excel in capturing complex relationships, handling non-linear data, and adapting to dynamic environments by leveraging machine learning models themselves to measure changes in data distribution and detect drift.

In this blog post, we’ll take an in-depth look at the different model-based techniques for drift monitoring, their pros and cons, and considerations for when and how to use them.

More posts in this series:

- Everything you need to know about drift in machine learning

- Data drift detection basics

- Concept drift detection basics

- A hands-on introduction to drift metrics

- Common drift metrics

- Troubleshooting model drift

- Model-based techniques for drift monitoring

Techniques for measuring drift

There are many techniques to measure drift in machine learning, but roughly speaking, these techniques can be divided into statistical and model-based techniques. Both of these aim to assess changes in the underlying data distribution and detect when the performance of a trained model deteriorates over time.

Statistical-based techniques

Statistical techniques for measuring drift rely on analyzing the statistical properties of the data. They typically involve computing summary statistics such as mean, variance, or higher-order moments and comparing them across different time periods or datasets. Statistical-based techniques have several advantages:

- They are grounded in statistical theory and hypothesis testing, so their results can be quantified in terms of certainty or uncertainty. For example, they can tell us how confident we can be that two samples come from the same distribution.

- When we know how a process should be distributed, we can tailor our hypothesis tests to get more precise insights.

- They can work with small amounts of data because they take sample size into account when determining statistical significance.
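To make the hypothesis-testing idea concrete, here is a minimal pure-Python sketch of the two-sample Kolmogorov-Smirnov (KS) test, one common statistical drift test (our choice for illustration; the post does not prescribe a specific test). It uses the standard large-sample critical value c(α)·√((n+m)/(nm)), which is how sample size enters the decision. The function names are illustrative; in practice you would more likely call `scipy.stats.ks_2samp`.

```python
import bisect
import math
import random


def ks_statistic(reference, current):
    """Two-sample Kolmogorov-Smirnov statistic D: the largest vertical gap
    between the empirical CDFs of the two samples."""
    ref, cur = sorted(reference), sorted(current)
    d = 0.0
    # The gap can only peak at an observed value, so checking those is enough.
    for x in ref + cur:
        cdf_ref = bisect.bisect_right(ref, x) / len(ref)
        cdf_cur = bisect.bisect_right(cur, x) / len(cur)
        d = max(d, abs(cdf_ref - cdf_cur))
    return d


def ks_critical_value(n, m, c_alpha=1.358):
    """Large-sample critical value for D; c_alpha = 1.358 corresponds to alpha = 0.05.
    It shrinks as the sample sizes n and m grow."""
    return c_alpha * math.sqrt((n + m) / (n * m))


def ks_drift(reference, current, c_alpha=1.358):
    """Return (D, drift_flag): flag drift when D exceeds the critical value."""
    d = ks_statistic(reference, current)
    return d, d > ks_critical_value(len(reference), len(current), c_alpha)


rng = random.Random(0)
reference = [rng.gauss(0.0, 1.0) for _ in range(500)]
same = [rng.gauss(0.0, 1.0) for _ in range(500)]
shifted = [rng.gauss(0.5, 1.0) for _ in range(500)]
print(ks_drift(reference, same))     # usually not flagged at alpha = 0.05
print(ks_drift(reference, shifted))  # a half-standard-deviation shift is flagged
```

Because the critical value scales with roughly 1/√n, with a million observations in each sample it drops to about 0.0019, so even a gap of D = 0.01 counts as significant. That is why significance loses its practical meaning on very large datasets.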

On the flip side, there are a few downsides to statistical methods:

- Most statistical tests make assumptions about the distributions they test. In the real world, these distributions are rarely known; practitioners hypothesize and assume them.

- Statistical significance loses its meaning with very large datasets, where even tiny, practically irrelevant differences register as significant.

- Most statistical-based techniques are univariate, not multivariate: they examine one feature at a time and can miss changes in the relationships between features.
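A small pure-Python illustration of the univariate blind spot, using synthetic data: two features whose individual means and standard deviations stay the same while the relationship between them flips. Per-feature summary statistics barely move; only a joint view (here, the correlation) exposes the change. The `pearson` helper and the data are illustrative.

```python
import random
import statistics


def pearson(xs, ys):
    """Pearson correlation coefficient of two equal-length sequences."""
    mx, my = statistics.mean(xs), statistics.mean(ys)
    cov = sum((x - mx) * (y - my) for x, y in zip(xs, ys))
    vx = sum((x - mx) ** 2 for x in xs)
    vy = sum((y - my) ** 2 for y in ys)
    return cov / (vx * vy) ** 0.5


rng = random.Random(1)
n = 2000
# Reference: feature b tracks feature a closely.
ref_a = [rng.gauss(0, 1) for _ in range(n)]
ref_b = [a + rng.gauss(0, 0.1) for a in ref_a]
# Current: each feature keeps the same marginal distribution,
# but the relationship between them has flipped sign.
cur_a = [rng.gauss(0, 1) for _ in range(n)]
cur_b = [-a + rng.gauss(0, 0.1) for a in cur_a]

for name, ref, cur in [("a", ref_a, cur_a), ("b", ref_b, cur_b)]:
    # Feature name, then reference vs current mean, then reference vs current stdev.
    print(name,
          round(statistics.mean(ref), 2), round(statistics.mean(cur), 2),
          round(statistics.stdev(ref), 2), round(statistics.stdev(cur), 2))
print("correlation:", round(pearson(ref_a, ref_b), 2), round(pearson(cur_a, cur_b), 2))
```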

Model-based techniques

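Model-based techniques use machine learning models to detect drift. One approach is to monitor the production model’s predictions on new data and compare them to the expected outcomes; others, described below, train auxiliary models on reference data and use them to measure how far new data departs from it.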

Pros of model-based techniques

- Make no assumptions about the type and structure of the underlying input data.

- Can easily handle very large datasets, where noise or minor deviations would appear statistically significant to statistical-based drift techniques.

- Provide context for drift by identifying specific areas where the model’s performance has degraded. This granular analysis helps pinpoint the root causes of drift, for example, drift in a specific sub-population over the weekend.

- Are inherently multivariate, considering multiple features or dimensions simultaneously.
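Comparing a model’s predictions to actual outcomes, and localizing the degradation to a sub-population, can be sketched in a few lines of pure Python. The record fields (`prediction`, `label`, `day_type`), the 0.05 tolerance, and the synthetic numbers below are hypothetical.

```python
from collections import defaultdict


def accuracy(records):
    """Share of records whose prediction matches the ground-truth label."""
    return sum(r["prediction"] == r["label"] for r in records) / len(records)


def segment_accuracy(records, key):
    """Accuracy per value of a segmenting field (e.g. weekday vs weekend)."""
    groups = defaultdict(list)
    for r in records:
        groups[r[key]].append(r)
    return {segment: accuracy(rows) for segment, rows in groups.items()}


def degraded_segments(reference, current, key, max_drop=0.05):
    """Segments whose accuracy fell by more than max_drop versus the reference."""
    ref_acc = segment_accuracy(reference, key)
    cur_acc = segment_accuracy(current, key)
    return {
        segment: round(ref_acc[segment] - acc, 3)
        for segment, acc in cur_acc.items()
        if segment in ref_acc and ref_acc[segment] - acc > max_drop
    }


def make_records(day_type, n, n_correct):
    # Hypothetical records: every label is 1; the first n_correct predictions match it.
    return [{"day_type": day_type, "label": 1, "prediction": 1 if i < n_correct else 0}
            for i in range(n)]


reference = make_records("weekday", 100, 90) + make_records("weekend", 100, 90)
current = make_records("weekday", 100, 90) + make_records("weekend", 100, 60)
print(accuracy(reference), accuracy(current))             # 0.9 0.75
print(degraded_segments(reference, current, "day_type"))  # {'weekend': 0.3}
```

The overall accuracy drop (0.9 to 0.75) says that something changed; the per-segment view says where.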

Cons of model-based techniques

- Struggle to generalize in scenarios with limited observations.

- Their ability to generalize is measured in terms of model performance metrics but not anchored by confidence intervals or degree of uncertainty in regard to their stability.

- Do not take the number of observations (sample size) into account in their results.

- Model-based techniques can be challenging to understand, and while they may be used to detect drift, it may not always be possible to interpret the reasons behind the detected drift.

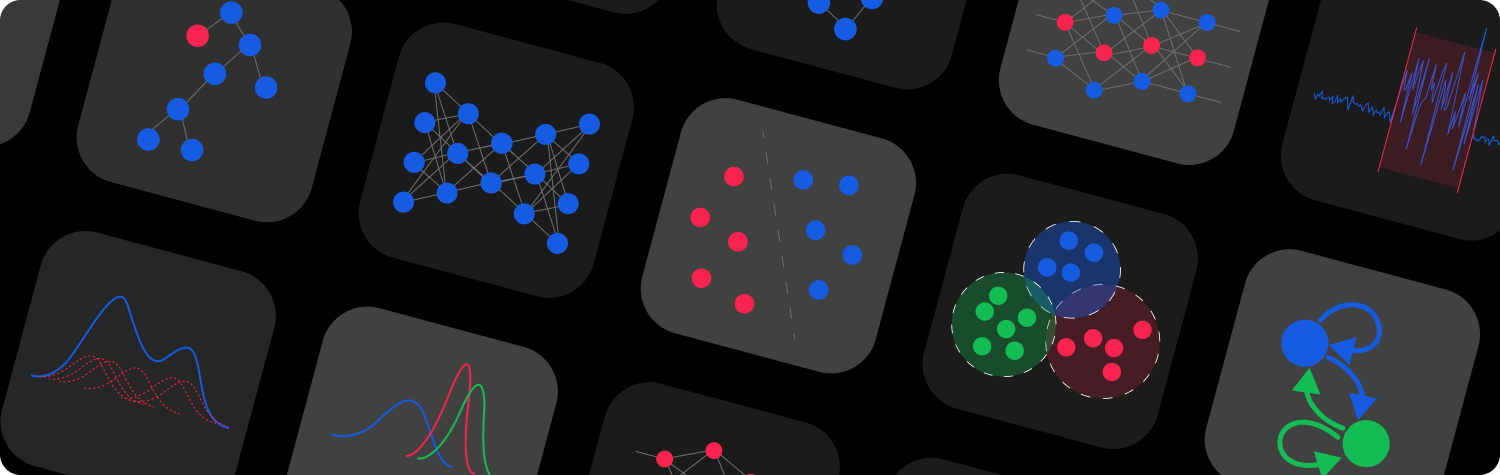

Model-based techniques

Discriminative classifier (Domain classification)

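Discriminative classifiers can learn to distinguish between the sources of data involved in the ML process. All training (reference) data is labeled “training,” production data is labeled “inference,” and a classifier is trained to tell the two apart. Drift is measured by tracking the classifier’s performance metrics over time: if it does no better than chance (for example, an ROC AUC near 0.5), the two datasets are indistinguishable; the better it separates them, the stronger the drift.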
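A minimal sketch of domain classification, assuming numeric tabular features: label reference rows 0 and production rows 1, train a classifier on part of the data, and measure on the held-out part how well it separates the two via ROC AUC. A hand-rolled logistic regression keeps the example dependency-free; all function names are illustrative, and any classifier (for example, gradient-boosted trees) could stand in.

```python
import math
import random


def sigmoid(z):
    # Numerically stable logistic function.
    if z >= 0:
        return 1.0 / (1.0 + math.exp(-z))
    e = math.exp(z)
    return e / (1.0 + e)


def dot(w, x):
    return sum(wi * xi for wi, xi in zip(w, x))


def train_logistic(X, y, lr=0.1, epochs=300):
    """Batch gradient-descent logistic regression; returns (weights, bias)."""
    w, b, n = [0.0] * len(X[0]), 0.0, len(X)
    for _ in range(epochs):
        grad_w, grad_b = [0.0] * len(w), 0.0
        for xi, yi in zip(X, y):
            err = sigmoid(dot(w, xi) + b) - yi  # gradient of log-loss w.r.t. the logit
            grad_w = [g + err * v for g, v in zip(grad_w, xi)]
            grad_b += err
        w = [wi - lr * g / n for wi, g in zip(w, grad_w)]
        b -= lr * grad_b / n
    return w, b


def auc(scores, labels):
    """ROC AUC via the Mann-Whitney formulation (ties count as half)."""
    pos = [s for s, l in zip(scores, labels) if l == 1]
    neg = [s for s, l in zip(scores, labels) if l == 0]
    wins = sum(1.0 if p > q else 0.5 if p == q else 0.0 for p in pos for q in neg)
    return wins / (len(pos) * len(neg))


def domain_classifier_auc(reference, current, holdout=0.3, seed=0):
    """Label reference rows 0 and current rows 1, train on one part, score the rest."""
    rng = random.Random(seed)
    rows = [(x, 0) for x in reference] + [(x, 1) for x in current]
    rng.shuffle(rows)
    cut = int(len(rows) * (1 - holdout))
    train, test = rows[:cut], rows[cut:]
    w, b = train_logistic([x for x, _ in train], [y for _, y in train])
    return auc([dot(w, x) + b for x, _ in test], [y for _, y in test])


rng = random.Random(3)
reference = [[rng.gauss(0, 1), rng.gauss(0, 1)] for _ in range(500)]
no_drift = [[rng.gauss(0, 1), rng.gauss(0, 1)] for _ in range(500)]
drifted = [[rng.gauss(1, 1), rng.gauss(0, 1)] for _ in range(500)]  # feature 0 shifts
auc_no_drift = domain_classifier_auc(reference, no_drift)
auc_drift = domain_classifier_auc(reference, drifted)
print(round(auc_no_drift, 2), round(auc_drift, 2))  # near 0.5, then well above 0.5
```

Scoring on held-out rows matters: evaluated on its own training rows, a flexible classifier can look better than chance even when there is no drift.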

Auto-encoders (Embedding space)

Autoencoders are often used for dimensionality reduction, denoising, or anomaly detection. The encoder learns to extract meaningful features from the input data, and the decoder reconstructs the input from the encoded representation. In the context of drift, the assumption is that an autoencoder that has learned the underlying distribution well will reconstruct new observations from that distribution with a small loss. If the underlying distribution shifts, the reconstruction loss will begin to increase.

To measure drift, train the autoencoder on the reference distribution and record its reconstruction loss as a baseline. Then apply it to a new distribution: significant deviations from the baseline loss indicate a potential drift or change in the data distribution, and the higher the deviation, the more likely it is that drift has occurred.
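A minimal sketch of reconstruction-error drift detection using a deliberately tiny model: a linear autoencoder with tied weights and a one-unit bottleneck (encode z = w·x, decode x̂ = z·w), trained by gradient descent in pure Python. At its optimum, this model recovers the top principal component, so it only captures linear structure; real deployments would typically use a deeper, non-linear autoencoder in a framework such as PyTorch or Keras. Function names and synthetic data are illustrative.

```python
import random


def encode(w, x):
    # Bottleneck activation: projection of x onto the weight vector.
    return sum(wi * xi for wi, xi in zip(w, x))


def train_linear_autoencoder(data, lr=0.05, epochs=300, seed=0):
    """Tied-weight linear autoencoder with one hidden unit, trained by full-batch
    gradient descent on the mean squared reconstruction error ||x - z*w||^2."""
    rng = random.Random(seed)
    w = [rng.uniform(-0.5, 0.5) for _ in range(len(data[0]))]
    for _ in range(epochs):
        grad = [0.0] * len(w)
        for x in data:
            z = encode(w, x)
            r = [xi - z * wi for xi, wi in zip(x, w)]  # residual x - x_hat
            rw = sum(ri * wi for ri, wi in zip(r, w))
            # d/dw_j ||x - (w.x) w||^2 = -2 * ((r.w) * x_j + z * r_j)
            grad = [g - 2.0 * (rw * xj + z * rj) for g, xj, rj in zip(grad, x, r)]
        w = [wi - lr * g / len(data) for wi, g in zip(w, grad)]
    return w


def reconstruction_error(w, x):
    z = encode(w, x)
    return sum((xi - z * wi) ** 2 for xi, wi in zip(x, w))


def mean_error(w, data):
    return sum(reconstruction_error(w, x) for x in data) / len(data)


rng = random.Random(5)


def correlated(n, sign):
    # Synthetic 2-D data where feature 2 ~= sign * feature 1.
    points = []
    for _ in range(n):
        t = rng.gauss(0, 1)
        points.append([t, sign * t + rng.gauss(0, 0.1)])
    return points


w = train_linear_autoencoder(correlated(500, +1))
baseline = mean_error(w, correlated(500, +1))  # fresh sample, same distribution
drifted = mean_error(w, correlated(500, -1))   # relationship between features flipped
print(round(baseline, 3), round(drifted, 3))
```

How far above the baseline counts as drift (a fixed multiple, an upper percentile of per-point errors, and so on) is a tuning choice rather than something this sketch settles.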

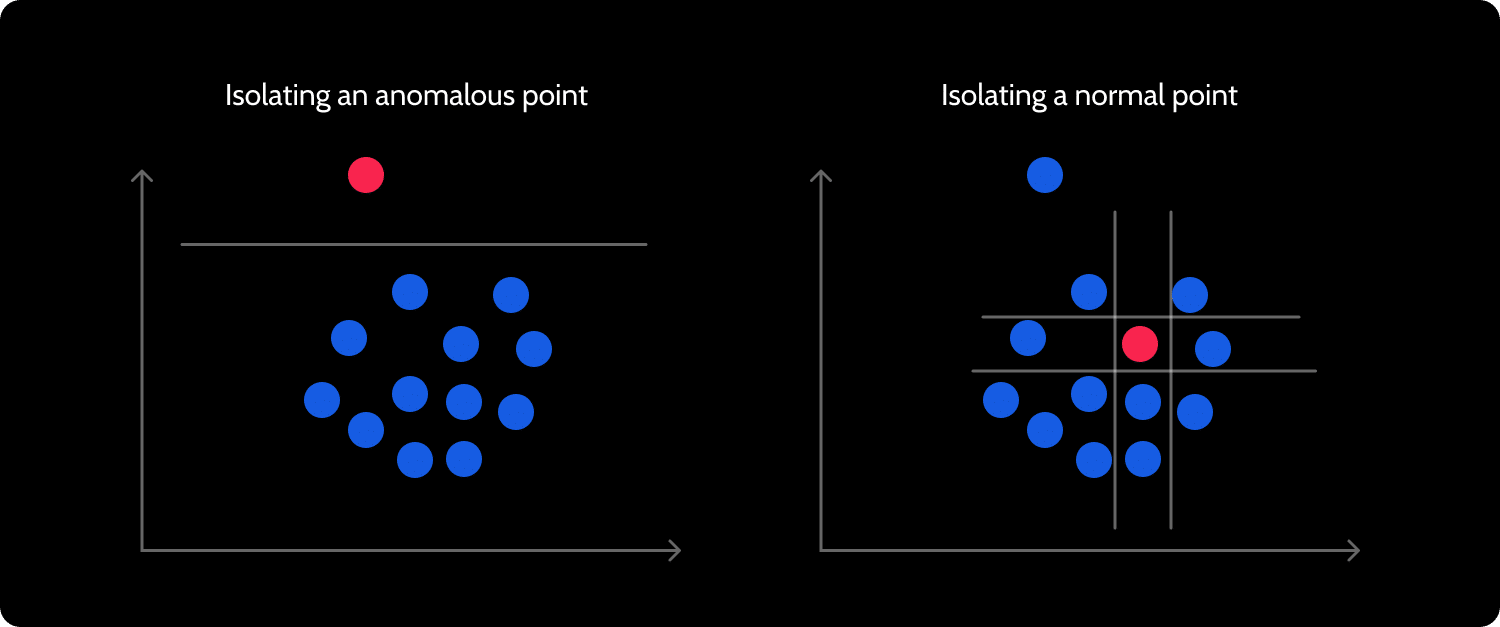

Isolation forest (Outlier detection)

An isolation forest learns the normal structure of the data in order to detect outliers. The model builds an ensemble of isolation trees, each of which recursively partitions the data by randomly selecting a feature and a split value until individual points are isolated. Outliers tend to be isolated after fewer splits than regular points; the underlying assumption is that the more “normal” a point is, the more random splits are needed to isolate it. The model turns this into an anomaly score for each data point that indicates its degree of abnormality. To monitor drift, pass each new instance through the isolation forest and score it: an increase in the percentage of anomalies over a sequence of observations indicates a deviation from the expected normal behavior and can be a good indication of drift.
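A compact, from-scratch isolation forest (pure Python, illustrative names) shows the mechanics: random axis-aligned splits, average isolation depth converted into a score, and drift read as a rise in the share of points whose score exceeds a threshold calibrated on reference data. In practice you would likely use an existing implementation such as scikit-learn’s `IsolationForest`. The 95th-percentile threshold below is an assumption, not a recommendation.

```python
import math
import random

EULER_GAMMA = 0.5772156649


def avg_path(n):
    """Average path length of an unsuccessful binary-search-tree lookup over n
    points (the usual c(n) normalizer for isolation depths)."""
    if n <= 1:
        return 0.0
    if n == 2:
        return 1.0
    return 2.0 * (math.log(n - 1) + EULER_GAMMA) - 2.0 * (n - 1) / n


def build_tree(points, depth, max_depth, rng):
    """Split on a random feature at a random value until points are isolated
    or the depth limit is hit."""
    if depth >= max_depth or len(points) <= 1:
        return {"size": len(points)}
    dim = rng.randrange(len(points[0]))
    lo, hi = min(p[dim] for p in points), max(p[dim] for p in points)
    if lo == hi:
        return {"size": len(points)}
    split = rng.uniform(lo, hi)
    return {
        "dim": dim,
        "split": split,
        "left": build_tree([p for p in points if p[dim] < split], depth + 1, max_depth, rng),
        "right": build_tree([p for p in points if p[dim] >= split], depth + 1, max_depth, rng),
    }


def path_length(x, node):
    """Depth at which x reaches a leaf, plus c(size) for leaves that were not fully split."""
    depth = 0
    while "size" not in node:
        node = node["left"] if x[node["dim"]] < node["split"] else node["right"]
        depth += 1
    return depth + avg_path(node["size"])


class IsolationForest:
    def __init__(self, n_trees=50, sample_size=256, seed=0):
        self.n_trees, self.sample_size, self.seed = n_trees, sample_size, seed

    def fit(self, data):
        rng = random.Random(self.seed)
        n = min(self.sample_size, len(data))
        max_depth = math.ceil(math.log2(n))
        self.trees = [build_tree(rng.sample(data, n), 0, max_depth, rng)
                      for _ in range(self.n_trees)]
        self.norm = avg_path(n)
        return self

    def score(self, x):
        """Anomaly score in (0, 1]; higher means easier to isolate (more anomalous)."""
        mean_depth = sum(path_length(x, t) for t in self.trees) / self.n_trees
        return 2.0 ** (-mean_depth / self.norm)


def outlier_rate(forest, data, threshold):
    return sum(forest.score(x) > threshold for x in data) / len(data)


rng = random.Random(11)


def blob(n, mean):
    # Synthetic 2-D Gaussian data centered at (mean, mean).
    return [[rng.gauss(mean, 1), rng.gauss(mean, 1)] for _ in range(n)]


forest = IsolationForest().fit(blob(1000, 0.0))
holdout_scores = sorted(forest.score(x) for x in blob(500, 0.0))
threshold = holdout_scores[int(0.95 * len(holdout_scores))]  # ~5% of reference flagged
baseline_rate = outlier_rate(forest, blob(500, 0.0), threshold)
drifted_rate = outlier_rate(forest, blob(500, 3.0), threshold)
print(baseline_rate, drifted_rate)
```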

Clustering

Cluster-based techniques use a clustering algorithm to partition the data into distinct clusters based on similarity, where each cluster represents a group of data points that share common characteristics. This produces archetypes that represent common types of data points (the centroids, in the case of k-means), as well as the frequency of each cluster (the percentage of points falling into it). To calculate drift, fit the clusters on the reference distribution and record each cluster’s frequency there, then assign the points of the newly tested distribution to the same clusters and compute their frequencies. If the two sets of frequencies differ substantially, we are probably experiencing drift.
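A minimal pure-Python sketch of the cluster-frequency approach, assuming k-means with a fixed k = 3, synthetic 2-D data, and total variation distance as our chosen measure of how far apart the two frequency vectors are (PSI or a chi-squared test on the counts would be reasonable alternatives). Function names are illustrative; scikit-learn’s `KMeans` would be the usual production choice.

```python
import math
import random


def nearest(point, centers):
    """Index of the closest center (Euclidean distance)."""
    return min(range(len(centers)), key=lambda k: math.dist(point, centers[k]))


def kmeans(data, k, iters=20):
    """Plain Lloyd's k-means with deterministic farthest-first initialization."""
    centers = [data[0]]
    while len(centers) < k:
        centers.append(max(data, key=lambda p: min(math.dist(p, c) for c in centers)))
    for _ in range(iters):
        clusters = [[] for _ in range(k)]
        for p in data:
            clusters[nearest(p, centers)].append(p)
        # Move each center to the mean of its members (keep it if the cluster is empty).
        centers = [tuple(sum(axis) / len(members) for axis in zip(*members)) if members else centers[i]
                   for i, members in enumerate(clusters)]
    return centers


def cluster_frequencies(data, centers):
    """Share of points assigned to each center."""
    counts = [0] * len(centers)
    for p in data:
        counts[nearest(p, centers)] += 1
    return [c / len(data) for c in counts]


def total_variation(p, q):
    """Half the L1 distance between two frequency vectors (0 = identical, 1 = disjoint)."""
    return 0.5 * sum(abs(a - b) for a, b in zip(p, q))


BLOB_CENTERS = [(0.0, 0.0), (5.0, 5.0), (0.0, 5.0)]
rng = random.Random(7)


def sample(n, weights):
    # Synthetic data: a mixture of three well-separated blobs with the given weights.
    points = []
    for _ in range(n):
        cx, cy = rng.choices(BLOB_CENTERS, weights=weights)[0]
        points.append((rng.gauss(cx, 0.5), rng.gauss(cy, 0.5)))
    return points


reference = sample(600, [1, 1, 1])
centers = kmeans(reference, k=3)
ref_freq = cluster_frequencies(reference, centers)
tv_same = total_variation(ref_freq, cluster_frequencies(sample(600, [1, 1, 1]), centers))
tv_drift = total_variation(ref_freq, cluster_frequencies(sample(600, [0.7, 0.15, 0.15]), centers))
print(round(tv_same, 3), round(tv_drift, 3))
```

Here the clusters themselves do not move; only their mix changes, which is exactly the kind of shift the frequency comparison is meant to catch.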

Considerations when choosing model-based techniques for drift monitoring

Practitioners should consider the following questions when deciding between model-based techniques and statistical-based techniques for drift detection.

Do we require interpretability and explainability?

There are two sides to “requiring” interpretability and explainability: your use case, and the expectations of your stakeholders. If your use case requires explainability (for example, for regulatory reasons), or if your stakeholders will not trust a solution whose reasoning they cannot understand, statistical-based techniques may be a better choice. There are explainable model-based techniques as well, such as using a decision tree as the classifier, but the available explainable options in this space are limited.
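As an illustration of the explainable option mentioned above, a decision tree can serve as the domain classifier. This toy pure-Python sketch fits only a depth-1 tree (a single split, or “stump”) that separates reference rows from current rows, so the chosen feature and threshold point directly at what drifted. The function name and data are hypothetical. Accuracy is measured on the same rows used to pick the split, so it is optimistic; a real setup would use a held-out set and likely a deeper tree.

```python
import random


def best_stump(reference, current):
    """Depth-1 decision tree separating reference rows (label 0) from current rows
    (label 1). Returns (feature_index, threshold, accuracy)."""
    rows = [(x, 0) for x in reference] + [(x, 1) for x in current]
    best = (None, None, 0.0)
    for j in range(len(rows[0][0])):
        values = sorted({x[j] for x, _ in rows})
        for lo, hi in zip(values, values[1:]):
            t = (lo + hi) / 2
            # Rule "x[j] > t means current"; the mirrored rule scores n - correct.
            correct = sum((x[j] > t) == (y == 1) for x, y in rows)
            acc = max(correct, len(rows) - correct) / len(rows)
            if acc > best[2]:
                best = (j, t, acc)
    return best


rng = random.Random(2)
reference = [[rng.gauss(0, 1), rng.gauss(0, 1)] for _ in range(300)]
current = [[rng.gauss(0, 1), rng.gauss(2, 1)] for _ in range(300)]  # only feature 1 drifts
feature, threshold, acc = best_stump(reference, current)
print(feature, round(threshold, 2), round(acc, 2))
```

An accuracy near 0.5 would mean no single split can tell the datasets apart; a high accuracy, together with the selected feature, gives a human-readable explanation of the drift.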

Is domain-specific knowledge important?

In industrial manufacturing, domain-specific knowledge about the underlying processes can be leveraged in model-based techniques. For instance, in monitoring equipment performance, a model-based technique like a physics-based simulation model can be utilized to capture the complex interactions and physical relationships within the system.

Are multivariate relationships important?

In image analysis for autonomous vehicles, model-based techniques like deep CNNs are effective at capturing multivariate relationships among pixels, which enables accurate object detection, localization, and tracking. Statistical-based techniques that examine features one at a time may struggle to capture the complexity of visual data.

Choosing the right drift technique for your use-case

At the end of the day, model-based techniques for drift detection are just a different way of measuring drift than statistical-based measures. Before you dive into specific functions, you should understand what type(s) of drift you want to measure and which comparable datasets and/or time frames to use. Only after considering these questions, and how they could materialize in your domain and specific use cases, should you begin to evaluate which techniques and measures to use to quantify the distance.

There is no absolute truth as to which technique or measure will best capture a domain or use case. You may find that one works better than the others, or you may decide to use a set of measures for different types of drift evaluation. Either way, the question should be which technique is “good enough” from a business perspective to avoid risk, not which measure is as mathematically perfect as possible. Just as with your actual model, if you can achieve similar results with simpler models (or, in this case, simpler drift monitoring techniques), use them.

Ready to get started with Superwise?

Head over to the Superwise platform and get started with easy, customizable, scalable, and secure model observability for free with our community edition.

Prefer a demo?

Request a demo here, and our team will show you what Superwise can do for your ML and business.