I want to monitor output drift for prediction in all LTV models across all segments

Catch changes in your model’s predictions before they disrupt outcomes. Pinpoint where real-world data diverges from expectations to act swiftly and maintain control.

I want to monitor distribution shift for prediction probability in the risk model across all segments and the entire set

Detect performance dips—sudden or gradual—before they impact your business. Measure what matters with tools tailored to your goals.

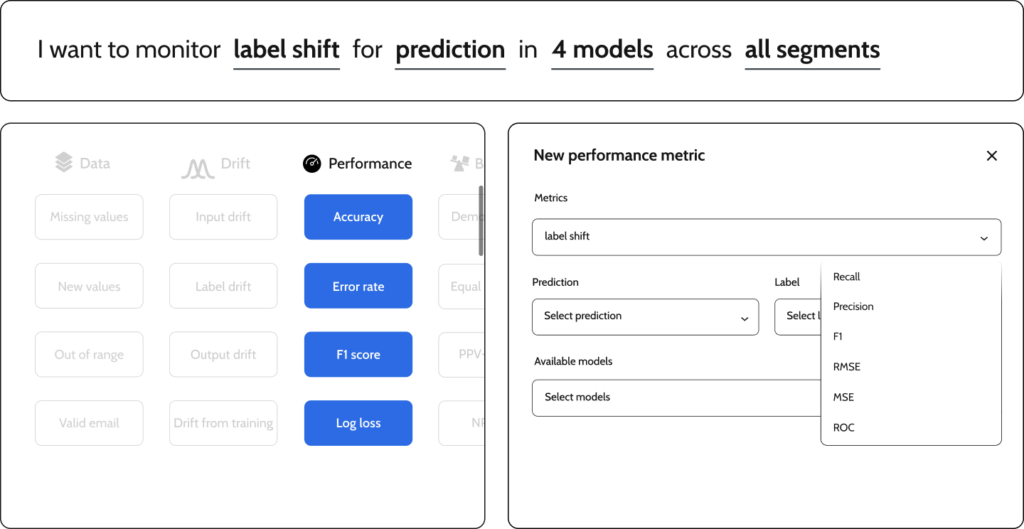

I want to monitor label shift for prediction in all CRO models across all segments

Detect label changes in your CRO models across all segments to ensure accuracy. Pinpoint shifts in real time and adjust to keep your AI grounded.

I want to monitor label proportion for prediction in 1 model across all segments

Prevent skewed labels from masking poor performance. Monitor distributions to catch imbalances and keep your models accurate.
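As a rough illustration of what a label proportion check could look like, here is a minimal sketch in plain Python. It is not the SUPERWISE implementation: the function names, the sample labels, and the 0.10 alert threshold are all assumptions chosen for the example. It compares each label's share in a baseline window with its share in a current window using total variation distance.

```python
from collections import Counter


def label_proportions(labels):
    """Return each label's share of the total as a dict."""
    counts = Counter(labels)
    total = sum(counts.values())
    return {label: n / total for label, n in counts.items()}


def proportion_shift(baseline, current):
    """Total variation distance between two label distributions.

    0.0 means identical proportions, 1.0 means no labels in common.
    """
    p = label_proportions(baseline)
    q = label_proportions(current)
    all_labels = set(p) | set(q)
    return 0.5 * sum(abs(p.get(k, 0.0) - q.get(k, 0.0)) for k in all_labels)


# Hypothetical label windows, for illustration only.
baseline = ["churn"] * 20 + ["stay"] * 80
current = ["churn"] * 35 + ["stay"] * 65

ALERT_THRESHOLD = 0.10  # assumed value; tune per model and segment

shift = proportion_shift(baseline, current)
if shift > ALERT_THRESHOLD:
    print(f"Label proportion shift {shift:.2f} exceeds threshold {ALERT_THRESHOLD:.2f}")
```

Running the same comparison per segment, rather than only on the full population, is what surfaces an imbalance confined to one slice of the data.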

No credit card required.

Easily get started with a free community edition account.

!pip install superwise

import superwise as sw

project = sw.project("Fraud detection")

model = sw.model(project, "Customer a")

policy = sw.policy(model, drift_template)

Entire population drift – high probability of concept drift. Open incident investigation →

Segment “tablet shoppers” drifting. Split model and retrain.

Powered by SUPERWISE® | All Rights Reserved | 2025

SUPERWISE®, Predictive Transformation®, Talk to Your Data® are registered trademarks of Deep Insight Solutions, DBA SUPERWISE®. All other trademarks, logos, and service marks displayed on this website are the property of their respective owners. The use of any trademark without the express written consent of the owner is strictly prohibited.