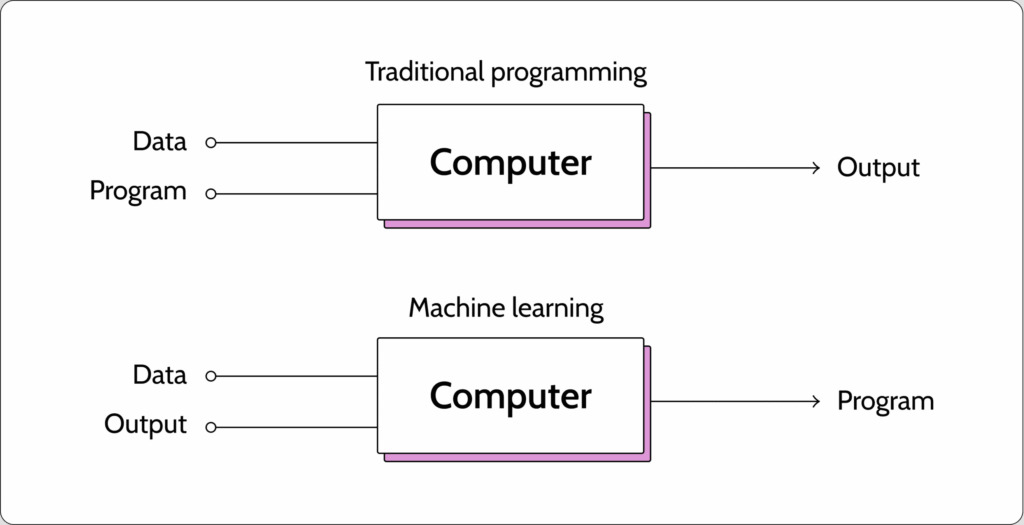

Observability and monitoring are a critical part of any software stack and, for similar reasons, a critical component of any MLOps implementation. In software, the big question is: how can a team of engineers determine the best tools and practices to effectively monitor dozens or even hundreds of different microservices and infrastructure components? Although any machine learning model is essentially a software component and, as such, requires traditional observability metrics and monitoring, models are fundamentally different from pure software. ML models embody a new type of coding that learns from data: the logic is inferred automatically from the data the model is trained on rather than written by hand. This basic difference is what makes model observability in machine learning very different from traditional software observability.

This post covers some of the key differences between model observability and software observability and their implications.

White box vs. black box

In conventional software development, there is clear, well-defined logic that should be applied to every new instance, transaction, or event. This makes it relatively easy to specify in advance a set of input examples with their expected outputs, even before the system is implemented. We can create test oracles, programs that verify the results output by the software and make sure they match the expected values. ML models are harder to test because they output a prediction rather than a value with a single known correct answer to compare against. Without an exact understanding of how the model works, what rules are applied to each new case, and what influences its results, our ability to test the system against a predefined set of expected outputs is limited.
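
The contrast can be sketched in a few lines of Python. This is a minimal illustration, not a real test suite: `apply_discount`, `toy_model`, the sample data, and the 0.6 accuracy floor are all hypothetical stand-ins. The deterministic function is checked case by case against exact expected values, while the model can only be checked in aggregate against a tolerance.

```python
from typing import Callable, Sequence


def apply_discount(price: float, is_member: bool) -> float:
    """Deterministic business rule (hypothetical): members get 10% off."""
    return round(price * 0.9, 2) if is_member else price


def oracle_test() -> bool:
    """Classic test oracle: each input has exactly one correct output."""
    cases = [((100.0, True), 90.0), ((100.0, False), 100.0)]
    return all(apply_discount(*args) == expected for args, expected in cases)


def toy_model(x: float) -> int:
    """Stand-in for a learned model: a decision boundary inferred from data."""
    return 1 if x > 0.5 else 0


def accuracy(predict: Callable[[float], int],
             xs: Sequence[float], ys: Sequence[int]) -> float:
    """Share of held-out examples that the model labels correctly."""
    hits = sum(1 for x, y in zip(xs, ys) if predict(x) == y)
    return hits / len(xs)


def model_test(min_accuracy: float = 0.6) -> bool:
    """Statistical check: some individual predictions are allowed to be wrong."""
    xs = [0.1, 0.4, 0.6, 0.9, 0.55, 0.45]
    ys = [0, 0, 1, 1, 0, 1]  # the last two labels disagree with the model
    return accuracy(toy_model, xs, ys) >= min_accuracy
```

Note that `model_test` passes even though two of the six predictions are wrong, something an exact-match oracle would never tolerate.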

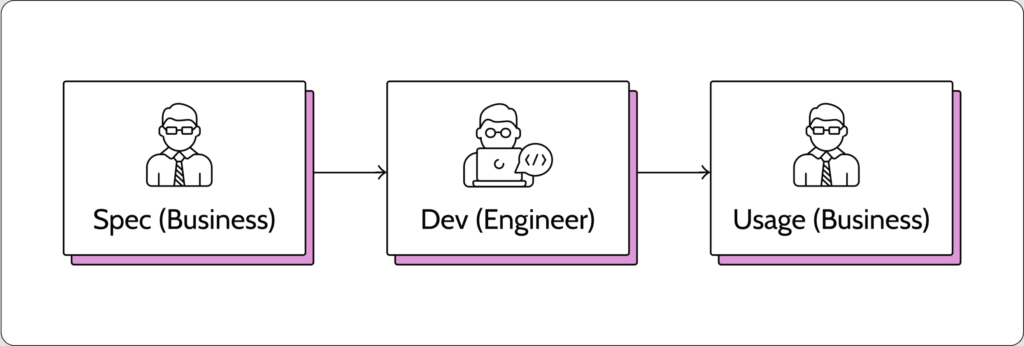

Multiple handoffs

In traditional software, the business requirements are handed over to the engineer, who then develops the code and tests it to make sure the outcome is as expected. All this is done before sending it back to the business for use.

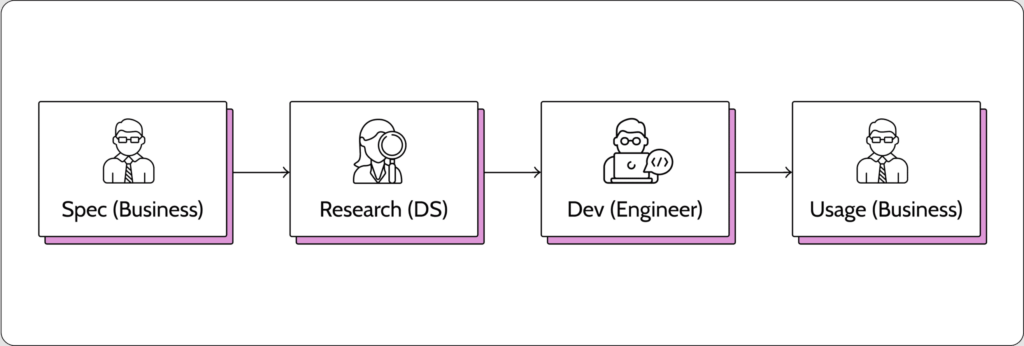

Machine learning adds another phase: the research done by the data scientist. This means there are additional technical handoffs. The data scientist does research to find out what data the model should use, which pre-processing techniques should be applied, and which algorithms to use. Usually, though, the data scientist won't be the one who implements the model in production systems and may not even have the knowledge required to do so. Likewise, the ML engineer who codes the system for production doesn't necessarily have the tools or knowledge to understand all the nuances of the pre-processing steps and the implemented algorithms. All this increases the potential for mistakes.

Dependencies

When a software application is developed, there’s usually a well-defined set of data sources to be used. Most software is meant to run inside an operational system, which itself is managed and maintained by software.

By definition, ML is the art of leveraging dozens or even thousands of different data points to detect patterns that are then used to predict or classify a specific unseen case. This means the system depends on multiple existing data sources, ranging from the organization's data lake to external sources such as weather and geolocation data. Essentially, the model is a downstream application that depends on many upstream data sources, which are operated and managed by different teams. The upstream teams are usually not aware of all the downstream consumers. This, in turn, increases the chance that some of the model's underlying assumptions will no longer hold, which degrades the quality of the model's predictions. To overcome this growing challenge, there is a big trend toward using data contracts, which provide information on what data is being consumed, by whom, and for what purpose.
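
As a rough illustration of the idea, a data contract can be as simple as a declared schema plus the consumer and purpose, checked against incoming records. The sketch below is an assumption-laden example, not a standard format: `FieldRule`, `DataContract`, `violations`, and every field name and bound are hypothetical.

```python
from dataclasses import dataclass
from typing import Any, Dict, List, Optional


@dataclass(frozen=True)
class FieldRule:
    """What the downstream model assumes about one upstream field."""
    dtype: type
    nullable: bool = False
    min_value: Optional[float] = None
    max_value: Optional[float] = None


@dataclass(frozen=True)
class DataContract:
    """Records what data is consumed, by whom, and for what purpose."""
    consumer: str
    purpose: str
    fields: Dict[str, FieldRule]


CHURN_CONTRACT = DataContract(
    consumer="churn-model",  # hypothetical consumer name
    purpose="predict customer churn",
    fields={
        "customer_id": FieldRule(str),
        "temperature_c": FieldRule(float, min_value=-60.0, max_value=60.0),
        "zip_code": FieldRule(str, nullable=True),
    },
)


def violations(record: Dict[str, Any], contract: DataContract) -> List[str]:
    """Return a readable list of the ways a record breaks the contract."""
    problems: List[str] = []
    for name, rule in contract.fields.items():
        if name not in record:
            problems.append(f"{name}: missing")
            continue
        value = record[name]
        if value is None:
            if not rule.nullable:
                problems.append(f"{name}: null not allowed")
            continue
        if not isinstance(value, rule.dtype):
            problems.append(f"{name}: expected {rule.dtype.__name__}")
            continue
        if rule.min_value is not None and value < rule.min_value:
            problems.append(f"{name}: {value} below min {rule.min_value}")
        if rule.max_value is not None and value > rule.max_value:
            problems.append(f"{name}: {value} above max {rule.max_value}")
    return problems
```

If an upstream team silently switched the temperature feed to Fahrenheit, a value such as 98.6 would be flagged as out of range instead of flowing quietly into the model.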

When it fails

When software has a problem, the failure becomes apparent very quickly. A software bug will usually cause a functional error: something gets stuck, the end user gets an error message, or the system suffers downtime or worse. The impact can be disastrous, but it will be clear very quickly that something is not working.

On the other hand, a problematic machine learning model can go unnoticed for months or even years. Functionally, the system could still work fine: it gets an input and produces an output. But after a while, the mistakes and low quality will impact the business or end user. For example, it took a while before Amazon noticed that its recruiting tool was favoring male applicants. This happened because the data used to train the model came from the previous 10 years, during which applicants were primarily men. Similarly, if a bank's AI system for loan approval is trained on data from previous years in which a good loan candidate tended to be older, it will end up being biased against younger customers, no matter how dependable or suitable they may be. A problematic pattern like this takes time to discover.
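
One way to surface this kind of silent failure is to track outcomes per subgroup rather than only in aggregate. The sketch below is illustrative only: the group labels, the sample decisions, and the idea of comparing the lowest and highest approval rates are assumptions, not a method prescribed here.

```python
from collections import defaultdict
from typing import Dict, Iterable, Tuple


def approval_rates(decisions: Iterable[Tuple[str, bool]]) -> Dict[str, float]:
    """Approval rate per group from (group, approved) pairs."""
    totals: Dict[str, int] = defaultdict(int)
    approved: Dict[str, int] = defaultdict(int)
    for group, ok in decisions:
        totals[group] += 1
        approved[group] += int(ok)
    return {group: approved[group] / totals[group] for group in totals}


def disparity_ratio(rates: Dict[str, float]) -> float:
    """Lowest group rate divided by the highest; 1.0 means equal rates."""
    highest = max(rates.values())
    if highest == 0:
        return 1.0  # nobody is approved in any group, so no disparity
    return min(rates.values()) / highest


# Hypothetical loan decisions: 3 of 10 younger and 6 of 10 older applicants approved.
sample = (
    [("under_30", True)] * 3 + [("under_30", False)] * 7
    + [("over_30", True)] * 6 + [("over_30", False)] * 4
)
rates = approval_rates(sample)
ratio = disparity_ratio(rates)
```

Every request in this sample is served without a functional error, yet the ratio shows that younger applicants are approved at half the rate of older ones.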

Alerting

Software systems tend to be deterministic, consistently outputting the exact same results for a specific set of inputs. If the system is producing incorrect results, it's easy to spot and raise an alert. For example, in log monitoring, we can get an alert for any error message, which indicates a problem caught in the system. Or, if we monitor disk space, assigning a relevant threshold makes it straightforward to get an alert when free space drops below 10%.

Machine learning models, by contrast, are probabilistic: the model receives data and predicts outcomes with varying levels of accuracy. We cannot assume that all models will reach a certain level of accuracy, because each use case is different and, in reality, each subpopulation may behave differently. Assigning the correct threshold to detect drift, performance issues, or bias relies on many parameters that are difficult to define correctly in advance. ML monitoring must be able to identify trends over time and discover anomalies that could indicate more than a simple isolated incident.
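
To make the difference concrete, here is a small sketch that puts a fixed-threshold check next to a trend-based one. It is only one possible approach: `disk_alert`, `trend_alert`, the window of 3, and the z-score limit of 3.0 are illustrative assumptions, and real drift detection usually needs more care.

```python
from statistics import mean, stdev
from typing import Sequence


def disk_alert(free_fraction: float, threshold: float = 0.10) -> bool:
    """Static rule: alert when free disk space drops below 10%."""
    return free_fraction < threshold


def trend_alert(history: Sequence[float], window: int = 3,
                z_limit: float = 3.0) -> bool:
    """Alert when the mean of the last `window` values deviates from the
    earlier baseline by more than `z_limit` standard errors."""
    if len(history) < window + 2:
        raise ValueError("need at least window + 2 observations")
    baseline, recent = history[:-window], history[-window:]
    mu, sigma = mean(baseline), stdev(baseline)
    if sigma == 0:
        return mean(recent) != mu
    z = (mean(recent) - mu) / (sigma / window ** 0.5)
    return abs(z) > z_limit


# Hypothetical daily accuracy: stable around 0.91, then a slow slide.
stable = [0.91, 0.90, 0.92, 0.91, 0.90, 0.92, 0.91, 0.91, 0.90, 0.92]
sliding = [0.91, 0.90, 0.92, 0.91, 0.90, 0.92, 0.91, 0.88, 0.87, 0.86]
```

In the `sliding` series no single day falls below a fixed floor such as 0.85, so a static rule stays silent, while `trend_alert(sliding)` fires because the recent values sit well outside the model's own baseline.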

Multi-tenancy

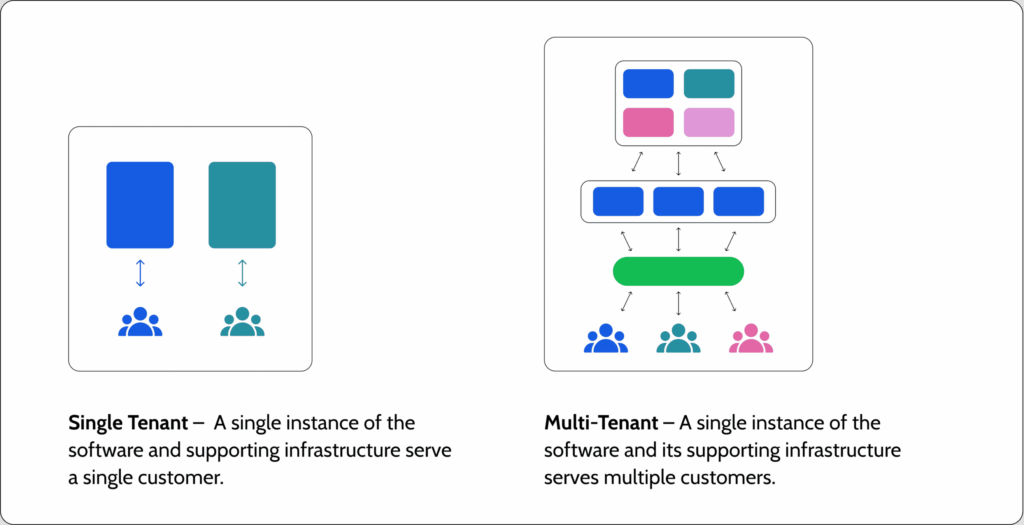

Ideally, a software engineer will develop an application that can be easily deployed and scaled while still producing reliable results. Sometimes, scaling up is simply a matter of redundancy. In most scale-out architectures, you can launch additional instances of the same web service behind a load balancer (e.g., Nginx) or run multiple pods in a Kubernetes deployment. When we deploy more instances on similar hardware or virtual resources, we can monitor them in pretty much the same way, just across more instances. For example, if we are checking that the service doesn't go above 90% CPU utilization on average, we can apply the same rule to all instances.

When it comes to ML, scaling up involves different challenges. For example, say you are a B2B company helping businesses with marketing automation, and part of the service you provide predicts customer churn. Because different types of organizations use the service, the company would like to deploy the same algorithm for all customers but create a separate instance for each one. Ideally, each customer should get an optimized model that was trained only on the history and data of their own company. Returning to the scale-out analogy above, we are scaling our service by deploying multiple instances of the same code base but using different training datasets. This ultimately results in a different model for each customer. When we start monitoring, one model may suffer from concept drift while others do not, or one model may be highly accurate while others are not. Although you use the same code base and the same process, you can end up with hundreds of different models, each of which needs to be monitored using different thresholds, even though they all serve the same use case.
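
A minimal sketch of per-model thresholds, assuming each tenant's alert level is derived from that tenant's own accuracy history. The tenant names, the numbers, and the choice of mean minus three standard deviations are hypothetical, used only to show why one global rule does not fit.

```python
from statistics import mean, stdev
from typing import Dict, Sequence


def tenant_threshold(baseline: Sequence[float], k: float = 3.0) -> float:
    """Alert level for one tenant: k standard deviations below its own mean."""
    return mean(baseline) - k * stdev(baseline)


def check_tenants(baselines: Dict[str, Sequence[float]],
                  latest: Dict[str, float], k: float = 3.0) -> Dict[str, bool]:
    """True for each tenant whose latest accuracy is below its own threshold."""
    return {
        tenant: latest[tenant] < tenant_threshold(history, k)
        for tenant, history in baselines.items()
    }


def check_global(latest: Dict[str, float], floor: float = 0.85) -> Dict[str, bool]:
    """Same rule for everyone, as we would do for CPU utilization."""
    return {tenant: value < floor for tenant, value in latest.items()}


# Same code base, different training data, different normal behavior.
baselines = {
    "tenant_a": [0.90, 0.91, 0.92, 0.91],
    "tenant_b": [0.70, 0.72, 0.71, 0.69],
}
latest = {"tenant_a": 0.86, "tenant_b": 0.70}

per_tenant = check_tenants(baselines, latest)
one_size = check_global(latest)
```

With these numbers the global floor of 0.85 misses the drop for `tenant_a` and raises a false alarm for `tenant_b`, while the per-tenant thresholds do the opposite.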

The right tools will give you the ML observability you need

The practices being introduced in MLOps can help you deal with the unique challenges described above. These include methods that boost productivity, enhance team collaboration, and use scalable, automated processes to minimize errors and maximize the use of resources. Sounds complicated? Don't worry; Superwise has all your ML monitoring and observability needs covered. Head over to the Superwise platform and get started with drift monitoring for free with our community edition (3 free models!).
