LlamaIndex and LangChain are both innovative frameworks for making better use of Large Language Models (LLMs) in application development. Each addresses a different emerging design pattern in LLM applications. LlamaIndex specializes in supporting RAG (Retrieval-Augmented Generation) architectures, in which LLMs and data retrieval processes work in tandem. LangChain, on the other hand, focuses on ‘chaining’: creating LLM-driven pipelines that link multiple steps into a streamlined workflow.

Despite their clearly different focuses, there is quite a bit of debate over which one is superior, and even over whether these frameworks should be used in production at all. In this post, we’ll dig into the two frameworks to highlight their strengths and show where, when, and how developers should choose between the two (if at all).

LlamaIndex – because RAG is 80% of LLM architectures

LlamaIndex specializes in intelligent search and data retrieval, with an emphasis on fast, efficient data lookup. Previously known as GPT Index, it’s a data framework tailored for conversational AI applications such as chatbots and assistants, and its strength lies in ingesting, structuring, and accessing private or domain-specific data. The idea behind it is that common RAG architectures rely on retrieving the “right” data, often with language models taking part in the retrieval process itself, whether as embedding models for vector search or as judges of retrieval quality acting as “search agents.” To this end, LlamaIndex offers a suite of tools, including the data loaders on LlamaHub, for bringing such data to LLMs. It functions as a smart storage mechanism, which makes it well suited to applications that depend on proprietary or specialized data.

LlamaIndex core capabilities

Data ingestion and indexing: This component is responsible for ingesting various data types, including text, documents, or other data formats. The data is then indexed to facilitate efficient retrieval. This process is crucial for handling large datasets and making them accessible to the LLM.

Search and retrieval engine: LlamaIndex includes a search engine that can quickly query the indexed data. It is optimized for speed and relevance, so that the data the LLM needs is retrieved efficiently, and it may use techniques like vector search to improve the quality of the results.

Integration with LLMs: A key feature of LlamaIndex is its ability to integrate with large language models seamlessly. This allows the LLMs to access and use indexed data effectively, enhancing their capabilities in tasks like text generation, information retrieval, and data analysis.

Quality assessment tools: These tools help evaluate the relevance and quality of the retrieved data. They may leverage LLMs in their own right to judge the usefulness of the information in the context of a given query or task.

User interface and API: To make the framework easy to use, LlamaIndex includes a user-friendly interface and a set of APIs. These let developers interact with the framework, integrate it into their applications, and customize its functionality to their needs.

While prototyping a RAG application can be easy, making it performant, robust, and scalable to a large knowledge corpus is hard. Luckily, LlamaIndex provides valuable resources and guidance on tackling the most common performance problems in such systems, sending a clear message that performance is its focus.
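To make the ingest, index, and retrieve loop concrete, here is a minimal, dependency-free sketch. It is not LlamaIndex’s API (in LlamaIndex this role is played by classes such as `VectorStoreIndex`). The bag-of-words `embed` function is a hypothetical stand-in for a real embedding model, and `ToyVectorIndex` only illustrates the idea: chunk each document, store one vector per chunk, and return the top-k chunks by cosine similarity.

```python
import math
import re
from collections import Counter
from dataclasses import dataclass, field


def embed(text: str) -> Counter:
    """Hypothetical stand-in for an embedding model: a bag-of-words vector."""
    return Counter(re.findall(r"[a-z0-9]+", text.lower()))


def cosine(a: Counter, b: Counter) -> float:
    """Cosine similarity between two sparse term-count vectors."""
    dot = sum(count * b[term] for term, count in a.items())
    norm = math.sqrt(sum(v * v for v in a.values())) * math.sqrt(sum(v * v for v in b.values()))
    return dot / norm if norm else 0.0


def chunk(text: str, size: int = 40) -> list[str]:
    """Split a document into chunks of at most `size` words."""
    words = text.split()
    return [" ".join(words[i:i + size]) for i in range(0, len(words), size)]


@dataclass
class ToyVectorIndex:
    # Each entry is (source document id, chunk text, chunk vector).
    entries: list[tuple[str, str, Counter]] = field(default_factory=list)

    def ingest(self, doc_id: str, text: str, chunk_size: int = 40) -> None:
        """Chunk a document and index one vector per chunk."""
        for piece in chunk(text, chunk_size):
            self.entries.append((doc_id, piece, embed(piece)))

    def retrieve(self, query: str, top_k: int = 2) -> list[tuple[str, str, float]]:
        """Return the top_k chunks most similar to the query."""
        q = embed(query)
        scored = [(doc_id, piece, cosine(q, vec)) for doc_id, piece, vec in self.entries]
        return sorted(scored, key=lambda item: item[2], reverse=True)[:top_k]


index = ToyVectorIndex()
index.ingest("refunds", "Refunds are issued within 14 days of a return request.")
index.ingest("shipping", "Standard shipping takes 3 to 5 business days.")
for doc_id, piece, score in index.retrieve("how long do refunds take", top_k=1):
    print(doc_id, round(score, 2), piece)
```

The retrieved chunks would then be pasted into the LLM prompt as context; swapping `embed` for a real embedding model and the list for a vector database is what turns this toy into a production index.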

LangChain – for all the other cool things under “AI”

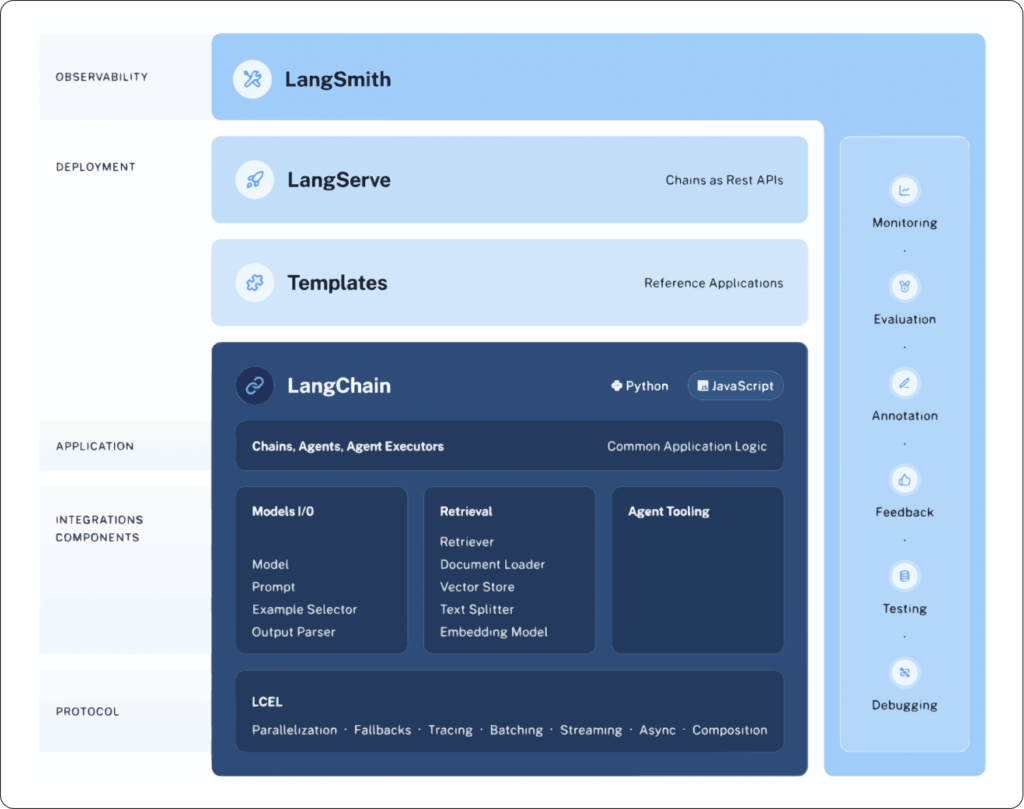

LangChain, on the other hand, is a more versatile, general-purpose framework for LLM applications. It’s Python-based and designed to facilitate the deployment of LLMs across a variety of bespoke Natural Language Processing (NLP) applications, such as question-answering systems, chatbots, and summarization tools. LangChain stands out for its flexibility, offering broad support for LLMs from many vendors and allowing users to customize their applications extensively. It acts as a glue layer that unifies many tools, letting users mix and match web search (Google, DuckDuckGo, etc.), DALL-E, text-to-speech, and much more. It also lets users combine models from multiple vendors with local LLMs, through integrations with OpenAI and Anthropic as well as local inference via llama.cpp.

One of the coolest things about LangChain is the number of off-the-shelf chains available for use. Chances are, if you’re looking to solve a particular problem with a chain, one already exists. For example, if you need an LLM application that translates natural language into SQL and then fetches data from a table in your database, then SQLDatabaseChain is what you’re looking for.
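The flow behind a text-to-SQL chain can be sketched without any framework: describe the schema to the model, ask it for a query, run the query, and return the rows. The sketch below uses Python’s built-in `sqlite3`; `fake_llm` is a hypothetical stand-in for a real model call that simply returns a canned query, so this shows the shape of the pipeline, not SQLDatabaseChain’s actual implementation.

```python
import sqlite3


def fake_llm(prompt: str) -> str:
    """Hypothetical LLM stand-in: a real model would generate SQL from the prompt."""
    return "SELECT name, total FROM orders WHERE total > 100 ORDER BY total DESC"


def build_prompt(schema: str, question: str) -> str:
    """Give the model the table schema plus the user's question."""
    return (
        "You translate questions into a single SQLite SELECT statement.\n"
        f"Schema:\n{schema}\n"
        f"Question: {question}\nSQL:"
    )


def text_to_sql_chain(conn: sqlite3.Connection, question: str, llm=fake_llm) -> list[tuple]:
    # Step 1: read the schema so the model knows which tables and columns exist.
    schema = "\n".join(
        row[0] for row in conn.execute("SELECT sql FROM sqlite_master WHERE type = 'table'")
    )
    # Step 2: ask the model for SQL.
    sql = llm(build_prompt(schema, question)).strip()
    # Step 3: run the generated query and return the rows.
    # (In production, validate the generated SQL before executing it.)
    return conn.execute(sql).fetchall()


conn = sqlite3.connect(":memory:")
conn.execute("CREATE TABLE orders (name TEXT, total REAL)")
conn.executemany(
    "INSERT INTO orders VALUES (?, ?)",
    [("alice", 250.0), ("bob", 80.0), ("carol", 120.0)],
)
print(text_to_sql_chain(conn, "Which customers spent more than 100?"))
```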

Off-the-shelf chains are great for hitting the ground running with your app development, but they can be limiting if your app requires customization. To address this, LangChain provides Components that let you interact with LLMs and with every part of the chain, so you can build your own custom process. This modularity also makes it easy to integrate frameworks other than LangChain, for example, LlamaIndex (more on this in a bit).
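Building a custom chain from modular components boils down to composing small, single-purpose steps that share one interface. The sketch below is framework-agnostic and does not use LangChain’s classes: `Step`, the `|` composition, and `stub_llm` are illustrative names assumed here, loosely mirroring the prompt, model, and output-parser pattern.

```python
from dataclasses import dataclass
from typing import Any, Callable


@dataclass
class Step:
    """A single pipeline component wrapping a function."""
    fn: Callable[[Any], Any]

    def __or__(self, other: "Step") -> "Step":
        # Compose: feed this step's output into the next step.
        return Step(lambda value: other.fn(self.fn(value)))

    def invoke(self, value: Any) -> Any:
        return self.fn(value)


# Component 1: turn structured input into a prompt string.
prompt = Step(lambda inputs: f"Summarize in one word: {inputs['text']}")


def stub_llm(prompt_text: str) -> str:
    """Hypothetical model call; a real component would call an LLM provider."""
    return "  Refunds.  "


# Component 2: the model. Component 3: clean up the raw model output.
model = Step(stub_llm)
parser = Step(lambda raw: raw.strip().rstrip(".").lower())

chain = prompt | model | parser
print(chain.invoke({"text": "Refunds are issued within 14 days."}))
```

Because every component has the same interface, you can swap the model, the parser, or a retriever from another framework without touching the rest of the pipeline.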

LLM application use-case coverage

Beyond the technical capabilities of each framework, there is a clear difference in their approaches to customization and application breadth. While LlamaIndex is more of a plug-and-play solution for search-centric applications, LangChain provides the groundwork for a wider range of applications; this requires deeper customization but also offers greater flexibility. Developers may favor LangChain when their projects require highly tailored solutions or need to integrate functionality beyond simple data retrieval.

In essence, the decision to use LlamaIndex versus LangChain will hinge on the nature of the project at hand. If the project is data-heavy and needs quick access to specific information within large datasets, LlamaIndex may be the more efficient choice. If the project is experimental and needs to integrate various LLM capabilities and other APIs or tools, LangChain offers the necessary breadth and flexibility.

Both frameworks also provide front-end (JavaScript/TypeScript) libraries to help you build applications faster, although both are still in the early stages of development. LangChain offers LangChain.js, with some of the capabilities of its Python counterpart, and LlamaIndex offers LlamaIndex.TS, a TypeScript library that works with OpenAI GPT-3.5-turbo and GPT-4, Anthropic Claude Instant and Claude 2, and Llama 2 Chat LLMs (70B, 13B, and 7B parameters).

Framework summary

| Feature | LlamaIndex | LangChain |
| --- | --- | --- |
| Primary focus | Intelligent search and data indexing and retrieval | Building a wide range of Gen AI applications |
| Data handling | Ingesting, structuring, and accessing private or domain-specific data | Loading, processing, and indexing data for various uses |
| Customization | Offers tools for integrating private data into LLMs | Highly customizable; allows users to chain multiple tools and components |
| Flexibility | Specialized for efficient and fast search | General-purpose framework with more flexibility in application behavior |
| Supported LLMs (as of December 2023) | Connects to any LLM provider, like OpenAI, Anthropic, Hugging Face, and AI21 | Support for over 60 LLMs, including popular providers like OpenAI, Hugging Face, and AI21 |
| Use cases | Best for applications that require quick data lookup and retrieval | Suitable for applications that require complex interactions, like chatbots, GQA, and summarization |
| Integration | Functions as a smart storage mechanism | Designed to bring multiple tools together and chain operations |
| Programming language | Python-based library | Python-based library |
| Front-end libraries | LlamaIndex.TS | LangChain.js |
| Application breadth | Focused on search-centric applications | Supports a broad range of applications |
| Deployment | Ideal for proprietary or specialized data | Facilitates the deployment of bespoke NLP applications |

LlamaIndex + LangChain (FTW)

Part of the reason LlamaIndex and LangChain are constantly pitted against each other is that, at face value, their use cases overlap, particularly around retrieval. But in this ever-evolving Gen AI landscape, the best solution for your use case may not be to choose between LlamaIndex and LangChain but to embrace the synergies of both. The magic happens when you combine LlamaIndex’s tools for building powerful search mechanisms with LangChain’s agent-building capabilities.

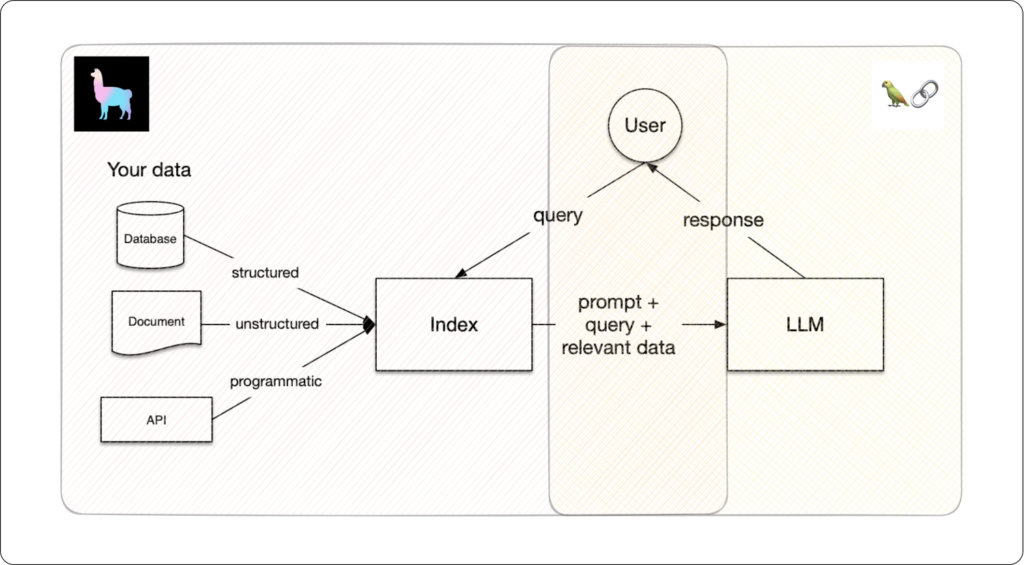

Even though both frameworks can build end-to-end Gen AI applications, each focuses on a different part of the pipeline. The figure above shows the general design of a RAG application: on the left, data loading, ingestion, and indexing build the application’s knowledge base; on the right, multiple agents handle the interaction between the LLM and the user.

LlamaIndex excels in robust data management, providing an off-the-shelf document summary index for unstructured documents to enhance retrieval by capturing more information than text chunks, offering richer semantic meaning, and supporting flexible LLM and embedding-based retrieval. Building such an index with LangChain would require some time and effort.
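The document summary index idea can be illustrated in a few lines: store one summary per document, match the query against the summaries, and then return all the chunks of the best-matching document rather than isolated text chunks. This is a toy sketch, not LlamaIndex’s `DocumentSummaryIndex` implementation. The summaries are written by hand here, where LlamaIndex would generate them with an LLM, and word-overlap scoring stands in for embedding- or LLM-based retrieval; the document contents are made-up example data.

```python
import re


def tokens(text: str) -> set[str]:
    return set(re.findall(r"[a-z0-9]+", text.lower()))


def overlap_score(query: str, text: str) -> float:
    """Jaccard overlap between query and text words (stand-in for embedding similarity)."""
    q, t = tokens(query), tokens(text)
    return len(q & t) / len(q | t) if q | t else 0.0


class ToyDocumentSummaryIndex:
    def __init__(self) -> None:
        self.summaries: dict[str, str] = {}
        self.chunks: dict[str, list[str]] = {}

    def add(self, doc_id: str, summary: str, chunks: list[str]) -> None:
        # In a real system the summary would be produced by an LLM at ingestion time.
        self.summaries[doc_id] = summary
        self.chunks[doc_id] = chunks

    def retrieve(self, query: str) -> tuple[str, list[str]]:
        """Pick the document whose summary best matches, then return all its chunks."""
        best = max(self.summaries, key=lambda d: overlap_score(query, self.summaries[d]))
        return best, self.chunks[best]


idx = ToyDocumentSummaryIndex()
idx.add(
    "q3_report",
    "Quarterly financial report: revenue, margins and guidance for Q3.",
    ["Revenue grew 12%.", "Gross margin was 41%.", "Guidance raised for Q4."],
)
idx.add(
    "hr_policy",
    "Employee handbook covering vacation, remote work and benefits.",
    ["Employees get 25 vacation days.", "Remote work needs manager approval."],
)
doc_id, context = idx.retrieve("what was the revenue guidance")
print(doc_id, context)
```

Matching on the summary lets the query find the right document even when no single chunk mentions all the query terms, and the LLM then sees the document’s full context.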

On the other hand, LangChain’s ever-evolving agent and “chain” development will allow you to keep up with state-of-the-art improvements when it comes to LLM applications. Using LlamaIndex for chain development and multi-agent interactions may require more effort and specific low-level code.
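A common way to combine the two is to wrap the retrieval side as a “tool” that an agent can call. The sketch below shows that division of labor in plain Python. `Tool`, `route`, and the keyword-based routing are hypothetical simplifications: a real agent would let the LLM choose the tool from its description, and the knowledge-base tool would wrap a LlamaIndex query engine rather than a dictionary lookup.

```python
from dataclasses import dataclass
from typing import Callable


@dataclass
class Tool:
    name: str
    description: str  # An LLM-driven agent would read this to decide when to call the tool.
    run: Callable[[str], str]


# Data side (LlamaIndex's role): a hypothetical knowledge-base lookup.
KNOWLEDGE_BASE = {
    "return policy": "Items can be returned within 30 days.",
    "warranty": "All devices carry a two-year warranty.",
}


def search_knowledge_base(query: str) -> str:
    for key, answer in KNOWLEDGE_BASE.items():
        if key in query.lower():
            return answer
    return "No relevant document found."


def calculator(expression: str) -> str:
    # Only handles 'a + b' to keep the sketch safe; never eval() model output.
    left, right = expression.split("+")
    return str(float(left) + float(right))


TOOLS = [
    Tool("kb_search", "Answers questions about company policies.", search_knowledge_base),
    Tool("calculator", "Adds two numbers written as 'a + b'.", calculator),
]


def route(query: str) -> str:
    """Agent side (LangChain's role): pick a tool. A keyword rule stands in for the LLM."""
    tool = TOOLS[1] if "+" in query else TOOLS[0]
    return f"[{tool.name}] {tool.run(query)}"


print(route("What is your return policy?"))
print(route("12 + 30"))
```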

Picture LlamaAGI, an AutoGPT-inspired example of the LlamaIndex + LangChain cooperation, where the search efficiency of LlamaIndex meets the advanced agent-building capabilities of LangChain. This application combines web search capabilities with LLM reasoning to solve virtually any user task, such as “solving the world’s hunger.” In this dynamic duo, it’s not a competition; it’s a collaboration that propels your Gen AI endeavors into new realms of innovation and efficiency.

Production use cases

LlamaIndex and LangChain have carved out significant roles based on their specific strengths and capabilities in production use cases. LlamaIndex has been utilized effectively in production scenarios that require robust data retrieval systems, such as applications for dissecting financial reports. Users can effortlessly load extensive datasets and fire off queries to navigate through these financial documents, showcasing its adeptness in managing structured, domain-specific data. LlamaIndex also facilitates the development of natural language chatbots and knowledge agents with multi-modal needs. These savvy agents can seamlessly interact with product documentation and adapt to evolving knowledge bases, positioning LlamaIndex as an excellent fit for dynamic customer engagement platforms. Furthermore, it’s been shown to work in tandem with frameworks like Ray to enhance scalability in the cloud, indicating its capability to support high-demand, distributed environments. The framework’s ability to cater to novice and advanced users through its high-level and lower-level APIs further underscores its flexibility and adaptability for various production needs.

LangChain, with its focus on flexibility and comprehensive tooling, has proven particularly effective in applications that involve complex language understanding and context-aware interactions. It has been used to enhance code analysis and comprehension, suggesting its usefulness in development environments where understanding and interacting with source code is critical. Its real-world applications include AI-powered language apps, supported by the debugging and optimization tooling needed to make them production-ready. One example is the Quivr app, inspired by the “second brain” idea of a personal Gen AI-powered assistant: it can answer questions about the files you upload and interact with the applications you connect to it. The framework’s capabilities extend to chatbots, document-based Q&A systems, and data analysis, demonstrating its versatility in automating and augmenting workflows across industries. Real-world use cases further illustrate LangChain’s impact on streamlining processes, enhancing user experiences, and driving business growth.

Last, let’s talk about the popularity and maintainability of both frameworks. LangChain is by far the more popular of the two, with 10 times the number of users, 4 times the number of contributors, and almost twice the number of stars. A framework’s popularity and consistent maintenance are reassuring, but many contributors pulling in different directions can also create challenges. In these fast-paced times, this can lead to major refactors and breaking changes between releases, especially when adapting to upstream changes such as the latest OpenAI API release. That, in turn, can break your production code and force your team into substantial refactoring to keep pace with the framework. LangChain has yet to reach version 0.1.0, whereas LlamaIndex is already at 0.9, following the refactor and rebranding from GPT Index. If maintainability and predictability matter more to your application than keeping up with the state of the art, LlamaIndex might be a better place to start.

Are LangChain and LlamaIndex production ready?

TL;DR: That’s the wrong question.

LangChain and LlamaIndex make Gen AI app composition look easy, but the hidden costs of maintaining and building a system that resonates with others can be immense.

Picture an e-commerce website that needs a virtual assistant to serve thousands of users with personalized chat recommendations based on their tastes and preferences. You’ll either need to integrate large systems that are core to the business or purpose-build a virtual assistant with high availability and precision, plus features like real-time price and stock updates, a recommendation system that captures users’ feedback and implicit preferences in real time, user tracking and satisfaction monitoring to keep both users and the business happy, and a lot more.

On top of that, you need your virtual assistant to be up 24/7, answering correctly and quickly. Neither LangChain nor LlamaIndex will solve the issues of managing the total cost of ownership of your RAG.

The right question is, are you and your team ready to deploy and maintain RAG in production? To answer this question, there are a few key aspects to consider.

Data loading and ingestion

How heterogeneous are the data sources your RAG’s knowledge base needs? PDF uploads, YouTube videos, web scraping, third-party APIs, and so on each come with many details that will cause user frustration without proper guardrails and robust software design. Web scraping, for example, needs constant monitoring, because any change to a crucial source can trigger a cascade of errors or, worse, corrupt your data in ways that are only detected later in the pipeline. Can you use a third party to offload the web-scraping part of your system?

Are you required to have some legal guarantee that the PDFs or documents the users are uploading are compliant?

How resilient do you need your system to be? Issues with your ingestion are guaranteed to occur, and you might need efficient methods to refresh or re-process the whole or parts of your database.
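One way to make re-processing cheap is to fingerprint each source document and only re-ingest what changed. A minimal sketch, assuming documents arrive as an `id → text` mapping and that `reingest` and `delete` are hypothetical callbacks that re-chunk and re-embed a single document or remove it from the index. It also rejects empty documents as a basic ingestion guardrail, keeping the last good version indexed.

```python
import hashlib
from typing import Callable


def fingerprint(text: str) -> str:
    """Stable content hash used to detect changed documents."""
    return hashlib.sha256(text.encode("utf-8")).hexdigest()


def sync_documents(
    incoming: dict[str, str],
    stored_hashes: dict[str, str],
    reingest: Callable[[str, str], None],
    delete: Callable[[str], None],
) -> dict[str, list[str]]:
    """Re-ingest new or changed docs, delete vanished ones, skip unchanged ones."""
    report = {"ingested": [], "skipped": [], "deleted": [], "rejected": []}
    for doc_id, text in incoming.items():
        if not text.strip():
            # Guardrail: an empty scrape often means the source changed upstream.
            # Keep serving the previously indexed version and flag it.
            report["rejected"].append(doc_id)
            continue
        digest = fingerprint(text)
        if stored_hashes.get(doc_id) == digest:
            report["skipped"].append(doc_id)
            continue
        reingest(doc_id, text)
        stored_hashes[doc_id] = digest
        report["ingested"].append(doc_id)
    for doc_id in list(stored_hashes):
        if doc_id not in incoming:
            delete(doc_id)
            del stored_hashes[doc_id]
            report["deleted"].append(doc_id)
    return report


hashes = {"faq": fingerprint("old FAQ"), "legacy": fingerprint("retired page")}
seen = []
report = sync_documents(
    {"faq": "new FAQ", "pricing": "Plans start at $10.", "blog": "   "},
    hashes,
    reingest=lambda doc_id, text: seen.append(doc_id),
    delete=lambda doc_id: None,
)
print(report)
```

The returned report doubles as a monitoring signal: a sudden spike in rejected or deleted documents is usually worth an alert.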

MLOps and infrastructure

Where are you hosting your databases to store the summaries, embeddings, and metadata required to feed your LLM? Choosing the right vector database is a complex enough task, and it will impact the whole system.

How resilient is your app to third-party API downtime? Third-party APIs that serve LLMs, such as OpenAI’s, can have low rate limits that compromise the entire system, both at ingestion (computing embeddings, summarization) and on the chat side. How will you deal with such limits? Some solutions add sleep or backoff between calls to slow down the request rate, while others implement multiple vendors as a fallback strategy. In any case, you’ll need proper tracking and alerting to understand how well your app is doing in production.
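Both mitigations, slowing down on rate-limit errors and falling back to another vendor, can live in one wrapper. A minimal sketch, assuming each provider is a plain callable that raises a hypothetical `RateLimitError` when throttled; real SDKs raise their own exception types, which you would catch instead. The `sleep` parameter is injectable so the logic can be tested without actually waiting.

```python
import random
import time
from typing import Callable, Sequence


class RateLimitError(Exception):
    """Hypothetical error a provider client raises when it is throttled (HTTP 429)."""


def call_with_fallback(
    prompt: str,
    providers: Sequence[tuple[str, Callable[[str], str]]],
    max_retries: int = 3,
    base_delay: float = 1.0,
    sleep: Callable[[float], None] = time.sleep,
) -> tuple[str, str]:
    """Try each provider in order, retrying with exponential backoff and jitter."""
    errors: list[str] = []
    for name, call in providers:
        for attempt in range(max_retries):
            try:
                return name, call(prompt)
            except RateLimitError as exc:
                errors.append(f"{name} attempt {attempt + 1}: {exc}")
                if attempt < max_retries - 1:
                    # Backoff: base_delay * 2^attempt plus up to base_delay of random jitter.
                    sleep(base_delay * 2 ** attempt + random.uniform(0, base_delay))
        # This provider is exhausted; fall through to the next one.
    # Surface every failure so tracking and alerting can pick it up.
    raise RuntimeError("All providers failed: " + "; ".join(errors))


def always_throttled(prompt: str) -> str:
    raise RateLimitError("429 Too Many Requests")


def backup_model(prompt: str) -> str:
    return f"echo: {prompt}"


delays: list[float] = []
result = call_with_fallback(
    "hi", [("primary", always_throttled), ("backup", backup_model)], sleep=delays.append
)
print(result, delays)
```

Jitter spreads out retries from many concurrent users so they don’t all hit the API again at the same instant.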

Is your system resilient to model updates? The latest OpenAI API release led to a series of errors, some of them silent, due to model updates and changes to the API contracts. Are you tracking the costs of LLM usage? The cost per token at OpenAI may seem very “cheap,” but I assure you it gets very expensive very fast. Are you monitoring current costs? Do you have an econometric model to understand how many users your system can serve before it becomes unprofitable? Can you compare the costs of two vendors, or of self-hosting? Answering these questions will help you understand the total cost of running your LLMs in production.
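A back-of-the-envelope cost model is a good first step toward answering these questions. The sketch below estimates monthly LLM spend per user and how many users a fixed monthly budget can support, for two vendors. All prices, token counts, usage figures, and the budget are placeholder assumptions, not real vendor pricing; substitute your own numbers.

```python
from dataclasses import dataclass


@dataclass
class Pricing:
    """USD per 1,000 tokens. The values used below are placeholders, not real prices."""
    input_per_1k: float
    output_per_1k: float


def monthly_cost_per_user(
    p: Pricing, chats_per_month: int, input_tokens: int, output_tokens: int
) -> float:
    """LLM spend for one user per month, given average tokens per chat turn."""
    per_chat = input_tokens / 1000 * p.input_per_1k + output_tokens / 1000 * p.output_per_1k
    return per_chat * chats_per_month


def max_affordable_users(monthly_budget: float, cost_per_user: float) -> int:
    """How many users a fixed monthly LLM budget can support."""
    if cost_per_user <= 0:
        raise ValueError("cost_per_user must be positive")
    return int(monthly_budget // cost_per_user)


# Assumed usage profile: 300 chats/month; retrieved context makes prompts long.
vendors = {
    "vendor_a": Pricing(input_per_1k=0.01, output_per_1k=0.03),
    "vendor_b": Pricing(input_per_1k=0.001, output_per_1k=0.002),
}
budget = 5_000.0
for name, pricing in vendors.items():
    cost = monthly_cost_per_user(pricing, chats_per_month=300, input_tokens=1_500, output_tokens=300)
    print(f"{name}: ${cost:.2f}/user/month, budget covers {max_affordable_users(budget, cost)} users")
```

Input and output tokens are tracked separately because, in RAG, the retrieved context often makes prompts much longer than answers, so input pricing can dominate the bill.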

Security

Can the RAG’s knowledge base contain sensitive information about the company or other users? Are you compliant with general PII rules and the specific regulations of the industry your RAG runs in? You’ll need to build security features like PII removal, NSFW query handling, and access controls.
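As a starting point for PII removal, a regex pass can mask obvious identifiers before text is indexed or logged. This is a deliberately simple sketch: the patterns below catch only email addresses, card-like digit runs, and US-style phone numbers, and they are assumptions rather than a compliance-grade solution. Production systems usually combine such rules with a dedicated PII-detection model or service.

```python
import re

# Illustrative patterns only; they will miss many real-world PII formats.
# CARD runs before PHONE so long digit runs are not partially matched as phones.
PII_PATTERNS = {
    "EMAIL": re.compile(r"[A-Za-z0-9._%+-]+@[A-Za-z0-9.-]+\.[A-Za-z]{2,}"),
    "CARD": re.compile(r"\b\d(?:[ -]?\d){12,15}\b"),
    "PHONE": re.compile(r"\(?\b\d{3}\)?[-. ]?\d{3}[-. ]?\d{4}\b"),
}


def redact(text: str) -> tuple[str, dict[str, int]]:
    """Replace matches with [LABEL] placeholders and count what was removed."""
    counts = {}
    for label, pattern in PII_PATTERNS.items():
        text, n = pattern.subn(f"[{label}]", text)
        counts[label] = n
    return text, counts


redacted, counts = redact(
    "Contact jane.doe@example.com or (555) 123-4567. Card: 4111 1111 1111 1111."
)
print(redacted, counts)
```

The counts are useful on their own: tracking how often PII shows up in uploads or prompts tells you where stronger controls are needed.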

Can you prevent attacks on your system via SQL injection or code injection? You need to build guardrails that guarantee such attacks can’t succeed.
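For LLM-generated SQL, two cheap layers of defense are to accept only a single read-only statement and to run it on a connection that cannot write. A minimal sketch with Python’s standard `sqlite3` module. The keyword check is a conservative heuristic assumed here (it will also reject some harmless queries); the SQLite `PRAGMA query_only` setting is what actually blocks writes on that connection. Values that come from users should still go through parameterized queries, never string formatting.

```python
import re
import sqlite3

FORBIDDEN = re.compile(
    r"\b(insert|update|delete|drop|alter|create|attach|pragma|replace)\b", re.IGNORECASE
)


def is_safe_select(sql: str) -> bool:
    """Heuristic: exactly one statement, starting with SELECT, with no write keywords."""
    statement = sql.strip().rstrip(";").strip()
    if ";" in statement:
        return False  # Reject stacked statements like "SELECT 1; DROP TABLE users".
    return statement.lower().startswith("select") and not FORBIDDEN.search(statement)


def run_generated_sql(conn: sqlite3.Connection, sql: str) -> list[tuple]:
    if not is_safe_select(sql):
        raise ValueError(f"Rejected generated SQL: {sql!r}")
    return conn.execute(sql).fetchall()


conn = sqlite3.connect(":memory:")
conn.execute("CREATE TABLE users (name TEXT, email TEXT)")
# User-supplied values go in as parameters, never via string formatting.
conn.execute("INSERT INTO users VALUES (?, ?)", ("alice", "alice@example.com"))
conn.commit()
conn.execute("PRAGMA query_only = ON")  # Defense in depth: this connection can no longer write.

print(run_generated_sql(conn, "SELECT name FROM users;"))
```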

Quality and user satisfaction monitoring

A rather qualitative question, but is your system any good? It seems like an easy question to answer after investing in prompt and agent development, but how are you accounting for user satisfaction once you release your RAG to the real world?

Can you track user prompts and chat responses? Having a tracking system in place is vital to guarantee and monitor the quality of your system. However, users’ rights to privacy also need to be secured. Building a feedback system that helps you fix problems in your RAG fast while being compliant with user privacy is a complex enough task.
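One way to reconcile tracking with privacy is to pseudonymize user identifiers and redact message text before anything is written to the interaction log. A minimal sketch, assuming a secret key kept outside the logs (the `LOG_SALT` name and the record fields are hypothetical) and a single email-masking rule standing in for a full PII filter.

```python
import hashlib
import hmac
import json
import re
import time
from typing import Optional

LOG_SALT = b"replace-with-a-secret-from-your-vault"  # Hypothetical; never hard-code in production.
EMAIL = re.compile(r"[A-Za-z0-9._%+-]+@[A-Za-z0-9.-]+\.[A-Za-z]{2,}")


def pseudonymize(user_id: str) -> str:
    """Keyed hash: the same user always maps to the same token, but without
    the key the token can't be linked back to the user ID."""
    return hmac.new(LOG_SALT, user_id.encode("utf-8"), hashlib.sha256).hexdigest()[:16]


def log_interaction(
    user_id: str, prompt: str, response: str, feedback: Optional[int] = None
) -> str:
    """Build one JSON log line with a pseudonymized user and redacted text."""
    record = {
        "ts": time.time(),
        "user": pseudonymize(user_id),
        "prompt": EMAIL.sub("[EMAIL]", prompt),
        "response": EMAIL.sub("[EMAIL]", response),
        "feedback": feedback,  # e.g. +1 / -1 from a thumbs up/down widget
    }
    return json.dumps(record)


line = log_interaction(
    "user-42", "My email is bob@example.com, where is my order?", "It ships tomorrow.", feedback=1
)
print(line)
```

Because the token is stable per user, you can still group feedback by user and debug a conversation end to end without storing who the user actually is.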

Do you need a safety net in your system? Automated analytics and monitoring are crucial for responding to issues quickly. Hallucinations and bad responses must be detected and blocked to avoid user frustration and bad publicity for your product.
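A safety net can start as simply as refusing to show answers that the retrieved context does not support. The sketch below uses a crude lexical heuristic: the share of the answer’s content words that also appear in the context. The threshold, stopword list, and fallback message are assumptions. Production systems typically use an LLM-as-judge or an NLI model for this check, but the gating logic around it looks the same.

```python
import re

STOPWORDS = {"the", "a", "an", "is", "are", "was", "of", "to", "in", "and",
             "or", "for", "on", "it", "your", "you"}
FALLBACK = "I'm not sure about that. Let me connect you with a human agent."


def content_words(text: str) -> set[str]:
    return {w for w in re.findall(r"[a-z0-9]+", text.lower()) if w not in STOPWORDS}


def support_ratio(answer: str, context: str) -> float:
    """Fraction of the answer's content words that occur in the retrieved context."""
    words = content_words(answer)
    if not words:
        return 0.0
    return len(words & content_words(context)) / len(words)


def guarded_answer(answer: str, context: str, threshold: float = 0.6) -> tuple[str, bool]:
    """Return (text to show, whether the answer was blocked)."""
    if support_ratio(answer, context) < threshold:
        return FALLBACK, True  # Also a good place to emit a metric or alert.
    return answer, False


context = "Orders ship within 2 business days. Returns are accepted for 30 days."
print(guarded_answer("Returns are accepted for 30 days.", context))
print(guarded_answer("We offer free lifetime repairs on all products.", context))
```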

Product development

How long will it take to test a new model? Changing the embedding model on the ingestion side requires re-embedding your data and updating the retrieval side in sync. Is your system prepared for this undertaking?
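A simple way to keep ingestion and retrieval in sync is to record which embedding model built the index and refuse to query it with a different one. A minimal sketch; `IndexManifest`, `IndexRouter`, and the model names are hypothetical, illustrating the pattern of re-embedding into a new index and switching traffic only once it is complete (a blue/green swap).

```python
from dataclasses import dataclass, field


@dataclass
class IndexManifest:
    name: str
    embedding_model: str
    dimensions: int
    vectors: dict[str, list[float]] = field(default_factory=dict)


class EmbeddingMismatchError(RuntimeError):
    pass


def check_compatible(index: IndexManifest, query_model: str, query_vector: list[float]) -> None:
    """Fail loudly instead of silently returning bad neighbors."""
    if query_model != index.embedding_model:
        raise EmbeddingMismatchError(
            f"Index {index.name!r} was built with {index.embedding_model!r}, "
            f"query uses {query_model!r}"
        )
    if len(query_vector) != index.dimensions:
        raise EmbeddingMismatchError(f"Expected {index.dimensions} dims, got {len(query_vector)}")


class IndexRouter:
    """Blue/green switch: queries go to `active` until the new index is fully rebuilt."""

    def __init__(self, active: IndexManifest) -> None:
        self.active = active

    def promote(self, candidate: IndexManifest, expected_docs: int) -> None:
        if len(candidate.vectors) < expected_docs:
            raise RuntimeError("Candidate index is incomplete; keep serving the old one.")
        self.active = candidate


old = IndexManifest("kb-v1", "embed-small-v1", 3, {"doc1": [0.1, 0.2, 0.3]})
new = IndexManifest("kb-v2", "embed-large-v2", 4, {"doc1": [0.1, 0.2, 0.3, 0.4]})
router = IndexRouter(old)
router.promote(new, expected_docs=1)
# The query side reads the model name from the manifest, so both sides switch together.
check_compatible(router.active, router.active.embedding_model, [0.0] * 4)
print(router.active.name)
```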

Can you improve your prompts based on user feedback? Issues with the way your system answers users will occur. Is it easy to update and test the prompts? Do you have quality assurance and offline metrics to guarantee improvements without creating 1,000 problems while solving one edge case?
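Offline metrics for prompt changes can start as a small golden set of questions with expected facts, scored for both the current and the candidate prompt before anything ships. A minimal sketch; `fake_model`, the prompt strings, the golden questions, and the pass criterion (the expected substring appears in the answer) are hypothetical placeholders for your real model call and evaluation metric.

```python
from typing import Callable

GOLDEN_SET = [
    {"question": "How long do refunds take?", "must_contain": "14 days"},
    {"question": "Do you ship abroad?", "must_contain": "international"},
    {"question": "What is the warranty?", "must_contain": "two-year"},
]


def evaluate(prompt_template: str, model: Callable[[str], str]) -> dict[str, bool]:
    """Run every golden question through the model and record pass/fail."""
    results = {}
    for case in GOLDEN_SET:
        answer = model(prompt_template.format(question=case["question"]))
        results[case["question"]] = case["must_contain"].lower() in answer.lower()
    return results


def compare(baseline: dict[str, bool], candidate: dict[str, bool]) -> dict[str, list[str]]:
    """List cases the new prompt fixes and, more importantly, the ones it breaks."""
    return {
        "fixed": [q for q in baseline if not baseline[q] and candidate[q]],
        "regressed": [q for q in baseline if baseline[q] and not candidate[q]],
    }


def fake_model(prompt: str) -> str:
    """Hypothetical model whose answers depend on the prompt wording."""
    concise = prompt.startswith("Answer in one short sentence")
    if "refunds" in prompt:
        return "Refunds take 14 days." if concise else "Refunds are processed within 14 days of the return."
    if "ship abroad" in prompt:
        return "Yes." if concise else "Yes, we offer international shipping."
    return "Every device has a two-year warranty."


baseline = evaluate("You are a helpful support agent.\nQ: {question}", fake_model)
candidate = evaluate("Answer in one short sentence.\nQ: {question}", fake_model)
print(compare(baseline, candidate))
```

The shipping case shows the point: a prompt that makes answers more concise silently drops a fact, which a manual spot-check on the refunds question alone would miss.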

If you plan to build a RAG in production, rather than choosing between LangChain and LlamaIndex, address the factors listed above and make sure that your team is prepared to take your system to production. The question of choosing between LangChain or LlamaIndex may be the least of your worries.

If the idea you’re implementing is straightforward and does not require very complex interactions, you could build your own codebase of agents and chains and gain transparency and predictability. If you don’t mind keeping up with these frameworks’ rapid changes and don’t have the time and resources for a DIY approach, LlamaIndex and LangChain might not be a bad place to start, or, even better, you could look into “RAG as a Service” solutions.

Conclusion

In conclusion, LlamaIndex is most suitable for production environments prioritizing efficient data handling and retrieval, especially where specific, structured data needs to be integrated and queried rapidly. LangChain, with its extensive toolkit and flexible abstractions, is ideal for creating context-aware, reasoning LLM applications that can navigate the complexity of language understanding, making it a robust choice for a more diverse set of applications that require a high level of customization and integration of various language model capabilities.

Both frameworks facilitate the transition from proof of concept to fully fledged production system, but the choice between the two should be dictated by the specific requirements of your use case. If your application requires a considerable domain-specific knowledge base to solve the problem, it might be a good idea to start with LlamaIndex; if you later need extra flexibility around agents and chains, you can always plug in LangChain’s components. If your application revolves around reasoning and multi-agent interaction without requiring a large knowledge base, the better place to start might be LangChain, whose modular design lets you plug in LlamaIndex if you need extra data management and contextual search capabilities down the road.

Ready to get started with Superwise?

Head over to the Superwise platform and get started with easy, customizable, scalable, and secure model observability for free with our community edition.
