LLM architectures aren’t just about layers and neurons. The broader system supporting Large Language Models, from data ingestion to caching, inference, and costs, plays a vital role. In this post, we recap our recent webinar on Emerging architectures for LLM applications, covering the architectures and systems needed to deploy LLMs effectively in real-world applications.

Take a dive with us into the limitations and capabilities of LLMs and the techniques and systems designed to optimize their performance and overcome their inherent challenges. You’ll learn about the significance of context, data ingestion, and orchestration, and about the transformative potential of LLMs when they are supported by a solid, comprehensive system.

Follow along with the slides here.

More posts in this series:

- Considerations & best practices in LLM training

- Considerations & best practices for LLM architectures

- Making sense of prompt engineering

LLM limitations

LLMs have transformed generative AI due to their unprecedented scale, which enables them to perform a diverse range of tasks directly from natural language. Their large scale, captured by the number of parameters and the size of the training data, enables them to learn richer representations of language. This often results in better performance, in terms of both accuracy and the quality of generated text, especially compared to smaller, task-specific models. But, like any new technology, they have several limitations.

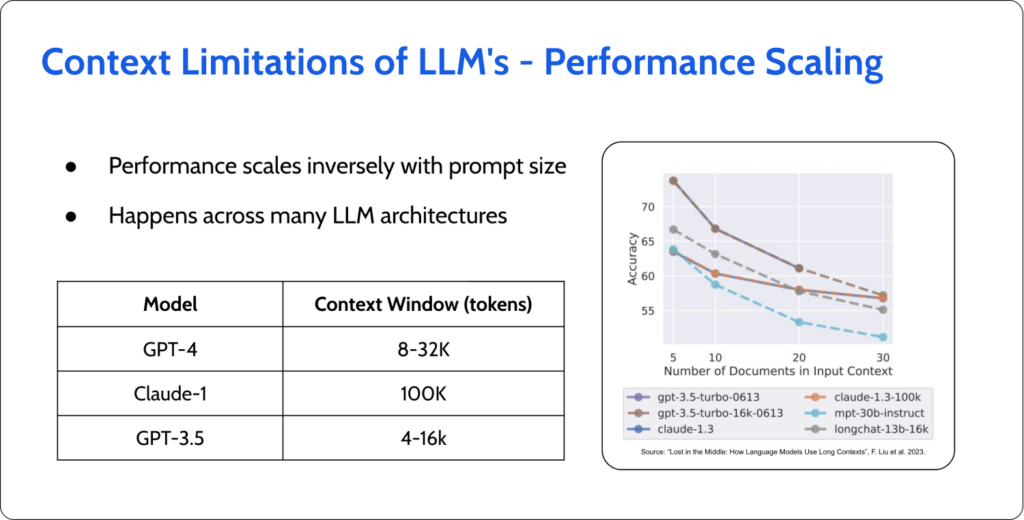

Token limits

One of the technical challenges with LLMs is their inherent token limit. Tokens are the units of text a model reads and writes: words or parts of words, punctuation marks, and even emojis. For GPT models, for example, the standard token limit at the time of the webinar hovered around 8,000 tokens. To put that in perspective, using the common rule of thumb of about four characters (or three-quarters of a word) per English token, 8,000 tokens is roughly 6,000 words, the length of a long essay or several pages of densely packed text. In many APIs, this budget is shared between the prompt and the generated response. While it may seem substantial, for applications that need to process larger amounts of content, the token limit restricts the breadth and complexity of the requests that the model can serve accurately and effectively.
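To make the budget concrete, here is a minimal Python sketch that checks whether a prompt, plus the room reserved for the reply, fits in an 8,000-token window. It assumes the rough four-characters-per-token heuristic rather than a real tokenizer (for exact counts with OpenAI models you would use their tokenizer, such as the tiktoken library). The names `estimate_tokens`, `fits_in_context`, and `CONTEXT_LIMIT` are illustrative, not from any library.

```python
import math

CONTEXT_LIMIT = 8_000  # assumed window size, matching the ~8,000-token figure above


def estimate_tokens(text: str) -> int:
    """Rough estimate: about 4 characters per token for English text (a heuristic, not a tokenizer)."""
    return math.ceil(len(text) / 4)


def fits_in_context(prompt: str, max_output_tokens: int, limit: int = CONTEXT_LIMIT) -> bool:
    """Assumes the window is shared, so prompt tokens plus tokens reserved for the reply must fit."""
    return estimate_tokens(prompt) + max_output_tokens <= limit


if __name__ == "__main__":
    essay = "word " * 5_000  # 25,000 characters
    print(estimate_tokens(essay))                           # 6250
    print(fits_in_context(essay, max_output_tokens=1_000))  # True
    print(fits_in_context(essay, max_output_tokens=2_000))  # False
```

The same essay fits or overflows depending on how much room you reserve for the answer, which is why the reply length belongs in the budget.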

Reduced performance related to context

As the LLM’s context grows longer, maintaining a coherent and contextually relevant response becomes harder. It’s akin to a human trying to recall every detail of a conversation that started hours ago. This makes it critical to retrieve only the most relevant content efficiently, rather than letting performance spiral downward as more and more text is packed into the prompt.

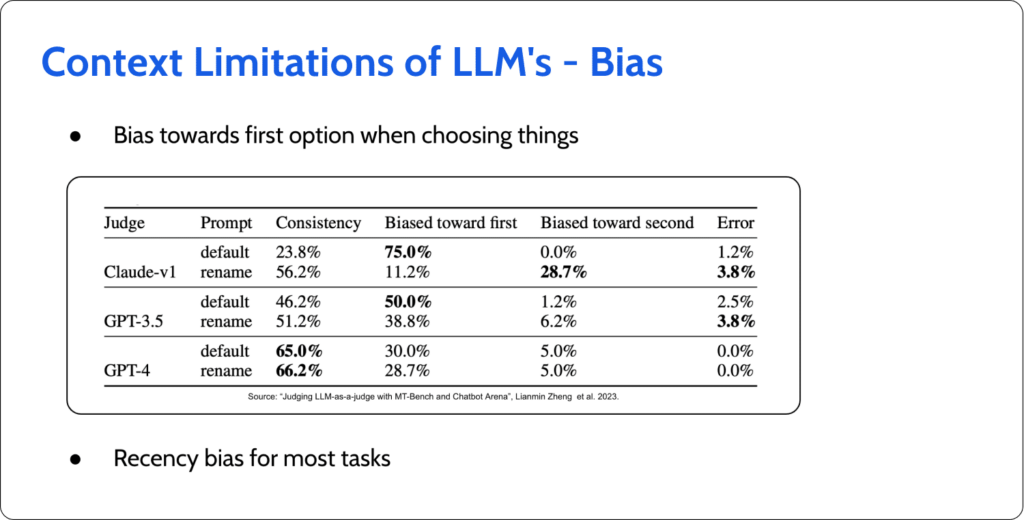

Biases

Despite their seemingly objective façade, LLMs can be influenced by biases, both from their training data and from the way inputs are presented to them. In one common evaluation setup, a model is asked to choose whichever of two outputs, A or B, is more accurate. Researchers discovered that LLMs tend to favor output A simply because it is presented first. Another common manifestation, known as “recency bias,” works the other way around: when asked to perform a task, the model prioritizes the task description it received most recently over older, possibly more relevant information.
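One common way to surface position bias when an LLM acts as a judge is to ask the same question twice with the two answers swapped, and to trust only verdicts that survive the swap. This is a general technique rather than one presented in the webinar. The sketch assumes a hypothetical `judge` callable that wraps your LLM and returns "A" or "B".

```python
from typing import Callable, Optional

# Hypothetical judge: takes (question, answer shown as A, answer shown as B), returns "A" or "B".
Judge = Callable[[str, str, str], str]


def judge_both_orders(judge: Judge, question: str, ans1: str, ans2: str) -> Optional[str]:
    """Return "1" or "2" for the preferred answer if the verdict survives swapping positions,
    or None if it flips (a sign the judge is reacting to position, not content)."""
    first = judge(question, ans1, ans2)   # ans1 presented as A
    second = judge(question, ans2, ans1)  # ans1 presented as B
    winner_first = "1" if first == "A" else "2"
    winner_second = "2" if second == "A" else "1"
    return winner_first if winner_first == winner_second else None


if __name__ == "__main__":
    always_a = lambda q, a, b: "A"  # a maximally position-biased judge
    content_based = lambda q, a, b: "A" if "Paris" in a else "B"
    print(judge_both_orders(always_a, "Capital of France?", "Paris", "Lyon"))       # None
    print(judge_both_orders(content_based, "Capital of France?", "Paris", "Lyon"))  # 1
```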

Hallucinations

In a phenomenon commonly known as “hallucination,” the model fills gaps in its knowledge with “best-guess” output, essentially making up what it doesn’t know. LLMs have an inherent tendency to produce a response at all costs, even at the expense of inventing sources or fabricating details. The results can be factually incorrect and sometimes even nonsensical. This problem remains one of the main concerns facing today’s LLMs.

Enter Retrieval Augmented Generation (RAG)

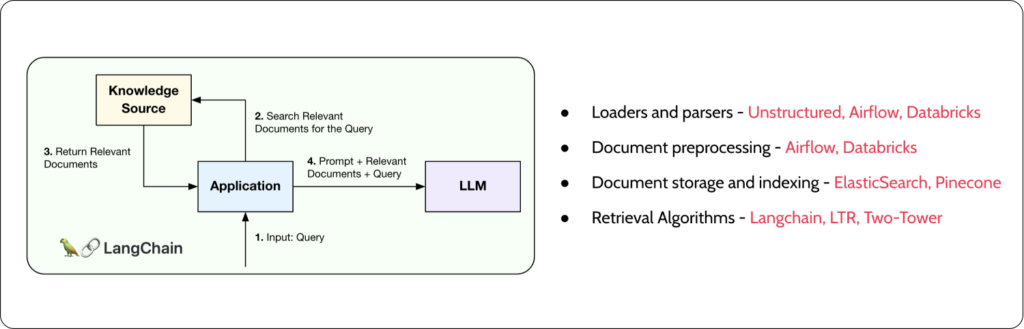

It’s still early days for LLMs, and the tools are proliferating rapidly. But one of the main open questions remains: how can we improve the accuracy and context of these LLMs, and what kind of model orchestration does that require? Architectural designs like Retrieval Augmented Generation (RAG) have emerged to address these limitations and constraints. In a previous webinar on LLM training (Here’s the recording, slides, and writeup), we discussed prompt engineering, which guides the model’s responses by feeding it carefully crafted prompts with examples of the desired output.

RAG stands out among LLM design patterns because it combines prompt engineering with context retrieval. It steps in when the model might be missing specific information, drawing on external sources, often vector databases, to supplement its knowledge. Instead of relying only on its internal weights, the system queries these sources and passes the results to the model so that it can give context-aware, precise answers. This duality, using both the model’s internal knowledge and external data, dynamically broadens what the LLM can achieve and makes it more accurate and contextually aware.

By letting the LLM cross-check and supplement its knowledge with external sources, RAG architectures can significantly reduce hallucinations. What’s more, by broadening the data sources, RAG can help provide a more balanced perspective and mitigate some inherent biases.

Implementing RAG

Implementing a RAG system involves splitting the relevant data or documents into discrete chunks, which are then embedded and stored in a vector database. When a user request comes in, the system searches the database for the chunks most relevant to the query and adds them to the prompt, enriching the LLM’s context so that its response can draw on that information.
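The RAG flow above can be sketched end to end in a few dozen lines. This is a toy illustration under loudly stated assumptions: `embed` is a bag-of-words stand-in for a real embedding model, and `InMemoryVectorStore` is a hypothetical brute-force substitute for a vector database. Only the shape of the pipeline (chunk, store, retrieve, augment) carries over to a real system.

```python
import math
import re
from collections import Counter


def chunk(text: str, size: int = 50) -> list[str]:
    """Split a document into chunks of at most `size` words (a naive fixed-size strategy)."""
    words = text.split()
    return [" ".join(words[i:i + size]) for i in range(0, len(words), size)]


def embed(text: str) -> Counter:
    """Toy 'embedding': bag-of-words counts. A real system would call an embedding model."""
    return Counter(re.findall(r"[a-z0-9]+", text.lower()))


def cosine(a: Counter, b: Counter) -> float:
    dot = sum(a[t] * b[t] for t in a)
    norm = math.sqrt(sum(v * v for v in a.values())) * math.sqrt(sum(v * v for v in b.values()))
    return dot / norm if norm else 0.0


class InMemoryVectorStore:
    """Hypothetical stand-in for a vector database: brute-force similarity search."""

    def __init__(self) -> None:
        self.items: list[tuple[str, Counter]] = []

    def add(self, text: str) -> None:
        self.items.append((text, embed(text)))

    def search(self, query: str, k: int = 2) -> list[str]:
        q = embed(query)
        ranked = sorted(self.items, key=lambda item: cosine(q, item[1]), reverse=True)
        return [text for text, _ in ranked[:k]]


def build_prompt(query: str, context: list[str]) -> str:
    """Augment the user query with the retrieved chunks before sending it to the LLM."""
    joined = "\n".join(f"- {c}" for c in context)
    return f"Answer using only the context below.\nContext:\n{joined}\nQuestion: {query}"


if __name__ == "__main__":
    store = InMemoryVectorStore()
    for doc in ["Our refund policy allows returns within 30 days.",
                "Shipping takes 5 business days.",
                "Support is available by email."]:
        for piece in chunk(doc):
            store.add(piece)
    question = "How many days do I have for returns?"
    print(build_prompt(question, store.search(question, k=1)))
```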

This raises the question: What’s the best way to break down the data into small chunks while maximizing the quality of the results? One strategy for managing large documents is reminiscent of the map-reduce pattern (think of Hadoop years ago, when we first started with ML models). Documents are split according to predetermined rules, each piece is processed (for example, summarized) separately, and the results are merged iteratively until the final output fits within the designated context window.
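Here is a minimal sketch of that map-reduce idea, assuming a hypothetical `summarize` callable that wraps an LLM call and returns shorter text. For simplicity it measures length in words rather than tokens, and it raises an error if a round fails to shrink the text, since otherwise the loop could run forever.

```python
from typing import Callable

Summarize = Callable[[str], str]  # hypothetical LLM call: text in, shorter text out


def split_words(text: str, max_words: int) -> list[str]:
    """Predetermined splitting rule: consecutive pieces of at most `max_words` words."""
    words = text.split()
    return [" ".join(words[i:i + max_words]) for i in range(0, len(words), max_words)]


def map_reduce(text: str, summarize: Summarize, window_words: int) -> str:
    """Summarize each piece (map), merge the partial results (reduce), repeat until it fits."""
    while len(text.split()) > window_words:
        partials = [summarize(p) for p in split_words(text, window_words)]  # map
        merged = " ".join(partials)                                          # reduce
        if len(merged.split()) >= len(text.split()):
            raise ValueError("summarizer did not shrink the text; cannot converge")
        text = merged
    return text


if __name__ == "__main__":
    long_doc = " ".join(str(i) for i in range(100))   # a 100-"word" document
    first_three = lambda t: " ".join(t.split()[:3])   # toy summarizer standing in for the LLM
    print(map_reduce(long_doc, first_three, window_words=10))
```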

Much of RAG’s potential lies in its ability to combine different orchestrations. Because RAG is essentially a retrieval system that works together with an LLM, it can be combined with a range of orchestration and pre-processing techniques that help tackle the challenges we’ve been discussing.

Caption: Examples of orchestrations and pre-processing that can be used with RAG

These techniques are often used with vector databases. Instead of trawling through raw data, vector databases help pinpoint the specific chunks of data that are relevant to a given query, turning the problem of applying LLMs into an information retrieval challenge. But here, too, there are limitations. Say you’re storing news about a football club and you ask the model for the club’s current status. If you have 20 years of news and the database ranks results purely by embedding similarity, you stand a good chance of retrieving news about the club’s status from 20 years ago. The same risk applies if your database holds documents on, say, the vulnerabilities in Windows 11 and Windows 10. Because the documents are so similar, you are likely to retrieve a mix of information that is true for one version but not the other.

This is exactly why we need more advanced RAG architectures that refine retrieval and post-process the retrieved documents to significantly improve accuracy. Here are a few of the orchestrations that we like.

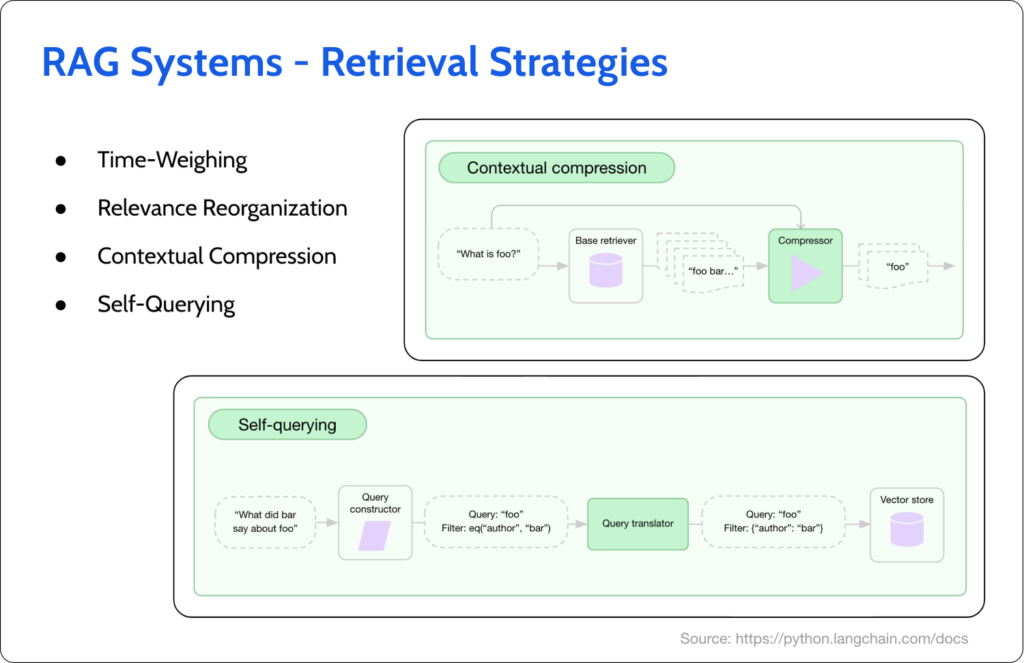

Adding a time component

In the football news example above, we could add a time component to the similarity search in the vector database, either filtering out or down-weighting older documents, to keep the retrieval mechanism from looking too far into the past.
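One way to add the time component is to combine each document’s similarity score with a recency weight, optionally dropping documents past a cutoff. This sketch assumes the vector store has already returned a similarity score per document, and it uses exponential decay with a one-year half-life. Both the decay form and the half-life are illustrative choices, not a prescribed method; `Doc` and `rank_with_recency` are hypothetical names.

```python
from dataclasses import dataclass
from datetime import date
from typing import Optional


@dataclass
class Doc:
    text: str
    published: date
    similarity: float  # assumed: similarity to the query, as returned by the vector store


def time_weighted_score(doc: Doc, today: date, half_life_days: float = 365.0) -> float:
    """Exponential decay: a document's weight halves every `half_life_days`."""
    age_days = (today - doc.published).days
    return doc.similarity * 0.5 ** (age_days / half_life_days)


def rank_with_recency(docs: list[Doc], today: date, half_life_days: float = 365.0,
                      max_age_days: Optional[int] = None) -> list[Doc]:
    """Optionally drop documents older than `max_age_days`, then sort by time-weighted score."""
    if max_age_days is not None:
        docs = [d for d in docs if (today - d.published).days <= max_age_days]
    return sorted(docs, key=lambda d: time_weighted_score(d, today, half_life_days), reverse=True)


if __name__ == "__main__":
    today = date(2023, 10, 1)
    old = Doc("Club wins the league title", date(2003, 5, 1), similarity=0.92)
    new = Doc("Club sits mid-table after a poor start", date(2023, 9, 1), similarity=0.80)
    for d in rank_with_recency([old, new], today):
        print(d.text)  # the recent article ranks first despite its lower raw similarity
```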

Post-processing

Another solution uses a secondary, lighter-weight LLM to refine the retrieval results. This ‘curator’ LLM steps in after retrieval and reorders the retrieved documents so that the most important or relevant ones come first.
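The curator step can be sketched as a re-scoring pass over the retriever’s candidates. Here `scorer` is a hypothetical callable standing in for the secondary LLM (for example, one prompted to rate relevance from 0 to 10); the demo swaps in simple word overlap so the example runs without a model.

```python
from typing import Callable

# Hypothetical curator: returns a relevance score for (query, document); higher is more relevant.
RelevanceScorer = Callable[[str, str], float]


def curate(query: str, retrieved: list[str], scorer: RelevanceScorer, top_n: int = 3) -> list[str]:
    """Re-score the retriever's candidates and return the best `top_n`, most relevant first.
    Ties keep the retriever's original order."""
    scored = [(scorer(query, doc), i, doc) for i, doc in enumerate(retrieved)]
    scored.sort(key=lambda t: (-t[0], t[1]))
    return [doc for _, _, doc in scored[:top_n]]


if __name__ == "__main__":
    # Toy scorer for the demo: word overlap instead of an LLM call.
    overlap = lambda q, d: float(len(set(q.lower().split()) & set(d.lower().split())))
    candidates = ["Windows 10 patch notes", "Windows 11 vulnerability list", "Office tips"]
    print(curate("Windows 11 vulnerability", candidates, overlap, top_n=2))
    # ['Windows 11 vulnerability list', 'Windows 10 patch notes']
```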

Contextual compression

This technique uses an LLM fine-tuned to strip the irrelevant fluff from your retrieved documents. What remains is streamlined, more relevant, summarized content that uses the context window more efficiently.
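A simplified sketch of contextual compression: split each retrieved document into sentences and keep only the ones judged relevant to the query. The `is_relevant` callable is hypothetical and stands in for the LLM; the regex sentence splitter is also an assumption and will mishandle abbreviations such as "e.g.".

```python
import re
from typing import Callable

# Hypothetical relevance check; in practice an LLM would make this call.
IsRelevant = Callable[[str, str], bool]


def compress(query: str, document: str, is_relevant: IsRelevant) -> str:
    """Keep only the sentences of `document` judged relevant to `query`."""
    # Naive split on ., !, or ? followed by whitespace.
    sentences = re.split(r"(?<=[.!?])\s+", document.strip())
    return " ".join(s for s in sentences if s and is_relevant(query, s))


if __name__ == "__main__":
    doc = ("The club was founded in 1902. The current coach joined in 2023. "
           "The stadium seats 40,000.")
    mentions_coach = lambda q, s: "coach" in s.lower()  # toy stand-in for the LLM
    print(compress("Who is the current coach?", doc, mentions_coach))
    # The current coach joined in 2023.
```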

Self-querying

Another strategy uses a separate LLM to transform a user query into a structured query that can interact with external components such as SQL databases. In fact, just a few months ago, Google introduced SQL-PaLM, which translates a natural language query into a SQL query. For some use cases, this approach can even replace vector databases with old-school SQL, all driven by LLMs. LangChain’s SQLDatabaseChain is another way to run SQL queries from a natural language prompt.
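Self-querying can be sketched with Python’s built-in `sqlite3` module. The `nl_to_sql` callable is hypothetical and stands in for a text-to-SQL model (it is not SQL-PaLM’s or LangChain’s API), and the `matches` table is invented for illustration. Because model-generated SQL is untrusted, the sketch only executes a single `SELECT` statement.

```python
import sqlite3
from typing import Callable

# Hypothetical text-to-SQL model: (question, schema) -> SQL string.
NlToSql = Callable[[str, str], str]

SCHEMA = "CREATE TABLE matches (played_on TEXT, opponent TEXT, goals_for INT, goals_against INT)"


def self_query(question: str, conn: sqlite3.Connection, nl_to_sql: NlToSql) -> list[tuple]:
    sql = nl_to_sql(question, SCHEMA).strip().rstrip(";").strip()
    # Guardrail: model output is untrusted, so run only a single SELECT statement.
    if not sql.lower().startswith("select") or ";" in sql:
        raise ValueError(f"refusing to run generated SQL: {sql!r}")
    return conn.execute(sql).fetchall()


if __name__ == "__main__":
    conn = sqlite3.connect(":memory:")
    conn.execute(SCHEMA)
    conn.executemany("INSERT INTO matches VALUES (?, ?, ?, ?)", [
        ("2023-09-02", "Rovers", 2, 1),
        ("2023-09-16", "United", 0, 3),
        ("2023-09-30", "City", 1, 0),
    ])
    # Canned translation standing in for the LLM.
    fake_llm = lambda q, schema: ("SELECT opponent FROM matches WHERE goals_for > goals_against "
                                  "ORDER BY played_on DESC LIMIT 1;")
    print(self_query("Who did we beat most recently?", conn, fake_llm))  # [('City',)]
```

In production, a read-only database connection or role is a sturdier guardrail than the string check used here.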

Forward Looking Active Retrieval (FLARE)

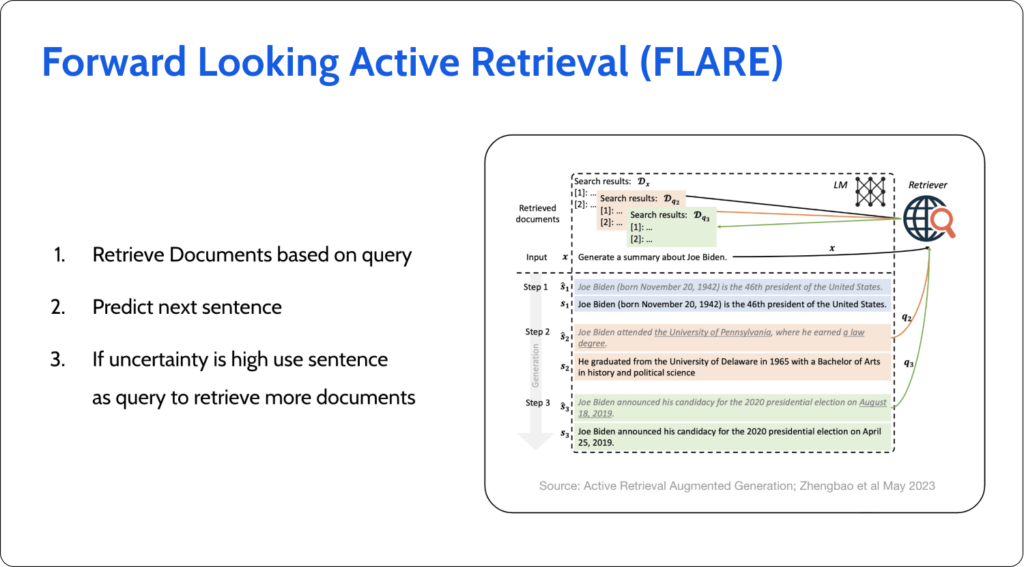

FLARE builds on the iterative way modern LLMs generate text. Rather than retrieving once and settling on the first generated output, FLARE interleaves retrieval with generation so the model can refine its response as it goes. Specifically, FLARE predicts the upcoming sentence; if that tentative sentence contains low-confidence tokens, it uses the sentence as a query to retrieve more documents and then regenerates it. By segmenting the process and focusing on continuous refinement, FLARE helps keep LLM architectures robust and responsive.
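The FLARE loop can be sketched as follows. The `draft` and `retrieve` callables are hypothetical interfaces, not a real library API: `draft` returns the next tentative sentence with its per-token probabilities (an empty sentence means the answer is complete), and `retrieve` returns documents for a query. The 0.5 confidence threshold is an arbitrary illustrative value.

```python
from typing import Callable

# Hypothetical interfaces (assumptions, not a real library API):
# draft(question, docs, answer_so_far) -> (next_sentence, per-token probabilities); "" means done.
Draft = Callable[[str, list[str], str], tuple[str, list[float]]]
Retrieve = Callable[[str], list[str]]


def flare_generate(question: str, draft: Draft, retrieve: Retrieve,
                   threshold: float = 0.5, max_sentences: int = 10) -> str:
    docs = retrieve(question)  # initial retrieval using the user's question
    answer = ""
    for _ in range(max_sentences):
        sentence, probs = draft(question, docs, answer)
        if not sentence:
            break
        if probs and min(probs) < threshold:
            # Low confidence: use the tentative sentence as a new query, then regenerate it.
            docs = retrieve(sentence)
            sentence, _ = draft(question, docs, answer)
            if not sentence:
                break
        answer = (answer + " " + sentence).strip()
    return answer
```

Only sentences the model is unsure about trigger extra retrieval, so confident stretches of the answer cost no additional lookups.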

LLM tools, agents, and plugins

In the evolving landscape of Large Language Models (LLMs), one of the most notable advancements is the use of agents and tools within LLM architectures. Agents, as discussed in the webinar, act as sophisticated orchestrators that decide when to use specific tools or resources to achieve a desired outcome. They streamline the process by decomposing complex tasks, relying on the model’s linguistic abilities both to break tasks down and to present the results.

Complementing the agents, tools serve as external resources, ranging from Python interpreters to browser automation tools like Selenium for fetching web content. Their value lies not just in their individual functions but also in how they work together. Plugins, which are broader than tools, enable LLMs to integrate seamlessly with external APIs or tools, further blurring the boundary between what’s inherently within the model and what’s accessed externally.

This paradigm shift, brought about by agent architectures, has created massive opportunities for LLMs. For instance, the ReAct framework has the model cycle through Thoughts, Actions, and Observations, grounding each reasoning step in the results of its actions so that it arrives at conclusions with greater confidence. The Reflexion framework, which builds on ReAct, prompts the model to reflect on its previous outputs and the feedback they received, and to use those reflections to do better on subsequent attempts. Using this type of ‘chain of thought’ prompting, instead of asking isolated individual questions, allows the model to maintain a contextual thread throughout the interaction. Each step builds on the previous response, allowing for a more in-depth and nuanced exploration of the topic.
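A bare-bones ReAct loop might look like the sketch below. The `llm` callable is hypothetical and is expected to emit either `Thought: ...` followed by `Action: tool[input]`, or a `Final Answer: ...` line; the tools are plain Python functions in a dictionary. Reflexion would add a further step that critiques failed attempts and feeds those reflections into the next try, which this sketch omits.

```python
import re
from typing import Callable

Tool = Callable[[str], str]
LLM = Callable[[str], str]  # hypothetical: transcript in, next step text out

ACTION_RE = re.compile(r"Action:\s*(\w+)\[(.*)\]")


def react(question: str, llm: LLM, tools: dict[str, Tool], max_steps: int = 5) -> str:
    """Minimal ReAct loop: the model emits Thought + Action, we run the tool,
    and the result is appended to the transcript as an Observation."""
    transcript = f"Question: {question}\n"
    for _ in range(max_steps):
        step = llm(transcript)
        transcript += step + "\n"
        if "Final Answer:" in step:
            return step.split("Final Answer:", 1)[1].strip()
        match = ACTION_RE.search(step)
        if match is None:
            transcript += "Observation: could not parse an action.\n"
            continue
        name, arg = match.group(1), match.group(2)
        tool = tools.get(name)
        observation = tool(arg) if tool else f"unknown tool {name!r}"
        transcript += f"Observation: {observation}\n"
    return "No answer within the step limit."


if __name__ == "__main__":
    def scripted_llm(transcript: str) -> str:
        # Stand-in for a real model: first asks for a search, then answers from the observation.
        if "Observation:" not in transcript:
            return "Thought: I need the founding year.\nAction: search[club founded]"
        return "Thought: The observation answers the question.\nFinal Answer: 1902"

    facts = {"club founded": "The club was founded in 1902."}
    tools = {"search": lambda q: facts.get(q, "no result")}
    print(react("When was the club founded?", scripted_llm, tools))  # 1902
```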

This combination of agents, tools, and innovative frameworks is changing how we perceive and implement LLMs, making them not only expansive in knowledge but also adaptable and insightful in application.

Evaluating LLMs

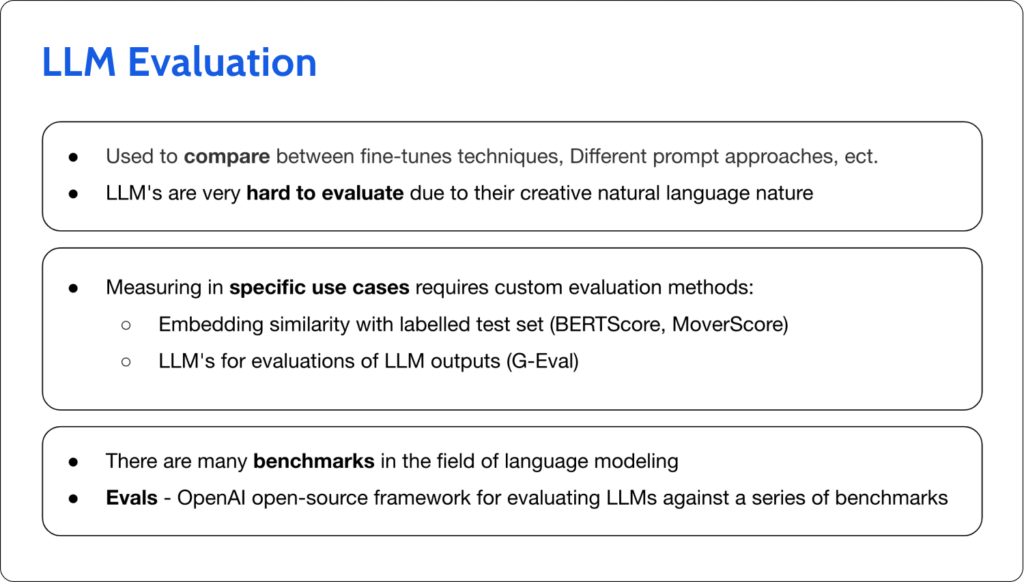

First, let’s differentiate between LLM evaluation and LLM monitoring. Ultimately, LLM evaluation helps us select which Large Language Model to use. There are many potential measures here, covering both context and general model intelligence, and, just as with more traditional ML monitoring metrics, it’s critical to select the ones that fit your use case.
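For model selection, evaluation often comes down to scoring each candidate on a labeled set with metrics that fit your use case. The sketch below uses exact match as a placeholder metric and hypothetical `Model` callables; in practice you would swap in metrics suited to your task.

```python
from typing import Callable

Model = Callable[[str], str]  # hypothetical: prompt in, answer out
Metric = Callable[[str, str], float]


def exact_match(prediction: str, reference: str) -> float:
    """Placeholder metric: 1.0 if the answers match, ignoring case and surrounding whitespace."""
    return float(prediction.strip().lower() == reference.strip().lower())


def evaluate(model: Model, dataset: list[tuple[str, str]], metric: Metric = exact_match) -> float:
    """Average the metric over (prompt, reference) pairs."""
    return sum(metric(model(prompt), ref) for prompt, ref in dataset) / len(dataset)


def select_model(candidates: dict[str, Model],
                 dataset: list[tuple[str, str]]) -> tuple[str, dict[str, float]]:
    scores = {name: evaluate(model, dataset) for name, model in candidates.items()}
    return max(scores, key=scores.get), scores


if __name__ == "__main__":
    dataset = [("What is 2 + 2?", "4"), ("What is the capital of France?", "Paris")]
    answers = {"What is 2 + 2?": "4", "What is the capital of France?": "paris"}
    candidates = {"model_a": lambda p: "4", "model_b": lambda p: answers.get(p, "")}
    print(select_model(candidates, dataset))
    # ('model_b', {'model_a': 0.5, 'model_b': 1.0})
```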

Monitoring LLMs

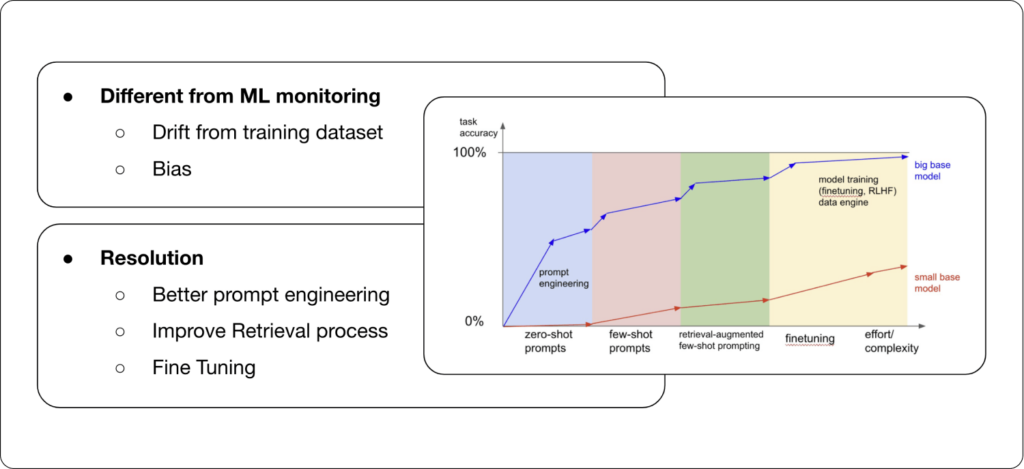

Monitoring LLMs is quite different from traditional ML model monitoring. Take drift, for example. Even if we knew all the data the LLM was trained on, what would drift even mean in such a large context? And drift is only one of the metrics that become problematic when applied to LLMs.

The other half of the problem is how to resolve an issue once we detect it. In traditional ML, we would typically turn to retraining or feature engineering. With LLMs, not only are these traditional resolution flows typically a massive undertaking that should not be taken on lightly, but there are also other, more upstream resolution mechanisms we can leverage, such as prompt engineering, retrieval tuning, and fine-tuning.

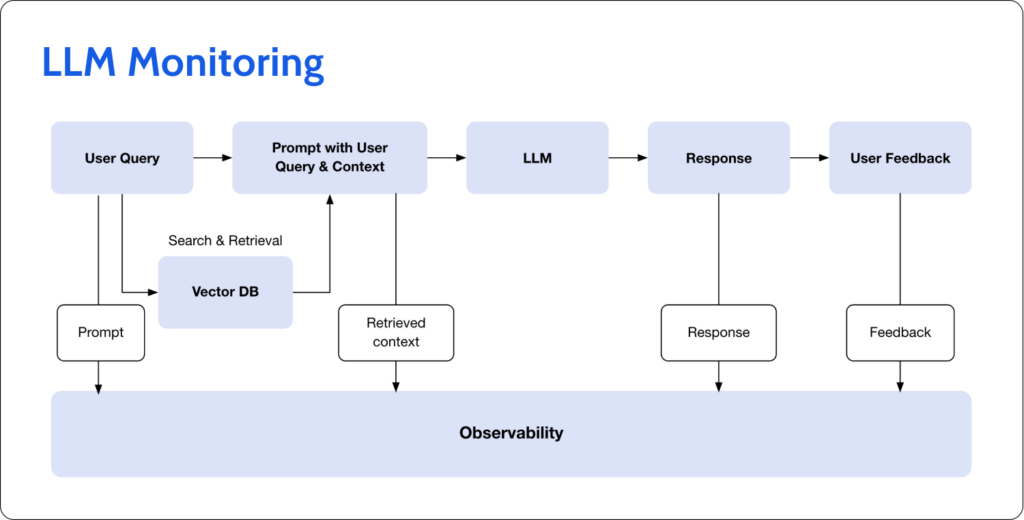

With LLMs, monitoring and observability move to the chain of functions that covers the user query, context, model response, and user feedback, all in order to improve the retrieval process and fine-tuning. In other words, observability shifts from the model to the model’s surrounding context.
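Shifting observability to the chain means logging each step together. The sketch below assumes a hypothetical `Interaction` record holding the query, the IDs of the retrieved chunks, the response, and optional thumbs-up/down feedback. It computes how often each retrieved chunk is associated with negative feedback, one possible signal for tuning retrieval; it is not Superwise’s or Elemeta’s API.

```python
from collections import defaultdict
from dataclasses import dataclass
from typing import Optional


@dataclass
class Interaction:
    """One pass through the chain: what was asked, what was retrieved, what came back, how the user reacted."""
    query: str
    context_ids: list[str]           # IDs of retrieved chunks placed in the prompt
    response: str
    feedback: Optional[bool] = None  # True = thumbs up, False = thumbs down, None = no feedback


def negative_feedback_rate_by_chunk(log: list[Interaction]) -> dict[str, float]:
    """For each retrieved chunk, the share of rated interactions that got a thumbs down."""
    down: dict[str, int] = defaultdict(int)
    rated: dict[str, int] = defaultdict(int)
    for item in log:
        if item.feedback is None:
            continue
        for cid in item.context_ids:
            rated[cid] += 1
            if item.feedback is False:
                down[cid] += 1
    return {cid: down[cid] / rated[cid] for cid in rated}


if __name__ == "__main__":
    log = [
        Interaction("refund window?", ["policy-v2"], "30 days", feedback=True),
        Interaction("refund window?", ["policy-v1"], "14 days", feedback=False),
        Interaction("shipping time?", ["policy-v1", "shipping"], "5 days", feedback=True),
    ]
    print(negative_feedback_rate_by_chunk(log))
    # {'policy-v2': 0.0, 'policy-v1': 0.5, 'shipping': 0.0}
```

A chunk such as an outdated policy document that keeps showing up in poorly rated answers is a candidate for removal or re-indexing, rather than a reason to retrain the model.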

For examples of LLM metrics that relate to the surrounding context, jump to 32:02 in the webinar recording, and check out the accompanying open-source Elemeta project and Superwise LLM monitoring.

Speed, safety, and the road ahead

No matter how vast their knowledge, LLMs have boundaries. These include their tendency to hallucinate or fabricate information, token limits that restrict how much data they can handle at once, inherent biases, and data privacy concerns. In this post, we looked at emerging architectures that aim to address these limitations.

As this blog and its partner webinar on LLM tuning reveal, implementing LLMs is not just about the model but about the intricate architecture and systems supporting it. As we venture deeper into the realm of LLMs, understanding these nuances becomes imperative for harnessing their true potential.

If you’re as fascinated by LLMs as we are, join the discussion in our next webinar!

Ready to get started with Superwise?

Head over to the Superwise platform and get started with easy, customizable, scalable, and secure model observability for free with our community edition.

Prefer a demo?

Request a demo here, and our team will show what Superwise can do for your ML and business.