In 2023, the landscape of cloud AI services saw (another) significant shift as Microsoft deepened its collaboration with OpenAI. Large Language Models (LLMs) were already radically transforming how users and practitioners interact with AI, but up until the Azure AI and OpenAI collaboration, the forerunners in the race had been Google and AWS, with each releasing more and more MLOps services in their respective Vertex AI and SageMaker offerings.

Azure AI’s collaboration with OpenAI was a brilliant move, catapulting them ahead in their ability to support LLM pipelines and new AI concepts. Still, Google is no slacker when it comes to its own proprietary models. So how does this new player change the playing field? The most obvious of differences is in Google Cloud’s developer and API focus, whereas Azure is geared more towards building user-friendly cloud applications. But those differences go only skin deep.

In this blog, we’ll take a look at Vertex AI vs. Azure AI to examine the strengths and weaknesses of both and what practitioners and developers should evaluate when choosing to go with one or the other.

Let’s start from the cloud

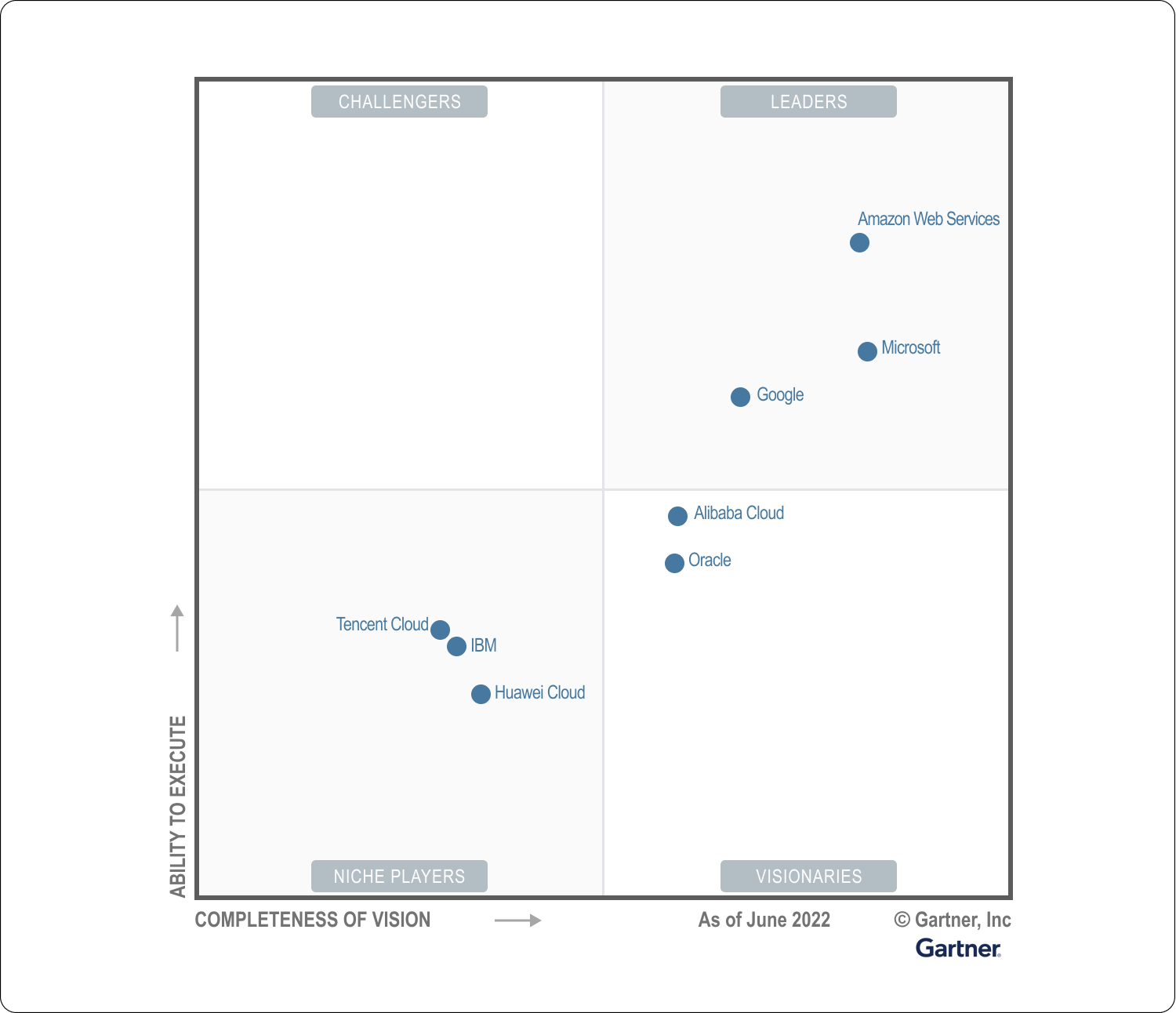

In the context of Vertex AI vs. Azure AI, you’re not only deciding on an MLOps platform but also selecting a cloud provider. While multi-cloud approaches are gaining traction, most organizations stick to a single cloud provider, given the cost and performance overheads of moving data between cloud platforms. A very notable review of the platforms was conducted by Gartner in 2022, and it unsurprisingly concluded that both Google Cloud and Azure were category leaders, although Microsoft had a clear lead both in ability to execute and in completeness of the solution. Google Cloud is newer to the field than Azure, but it has been steadily increasing its market share and reached profitability in 2023.

However, one key aspect not explored in Gartner’s Magic Quadrant for Cloud Infrastructure and Platform Services is overall developer experience. After taking both for an extensive spin, we believe Google Cloud’s developer experience is better than Azure’s. Specifically, Azure’s APIs often lack critical features, and many API calls are unavailable through the official Python client. For instance, Azure’s cost and usage export is only available at a daily resolution, which prevents users from analyzing their hourly usage.

Microsoft has traditionally excelled in building applications for end users. Still, with Azure, the feeling is similar to Microsoft’s developer tools before the company embraced open source: services that work well with each other but not so much with the external world. In the same vein, working in Azure feels smooth, the UI is top-notch, and new functionalities are being added at great speed, but if you prioritize a superior developer experience and smooth integration of most components, Google takes the lead. But remember that this is at the cloud level, before we’ve even dived into the Vertex AI vs. Azure AI distinctions.

Generative AI

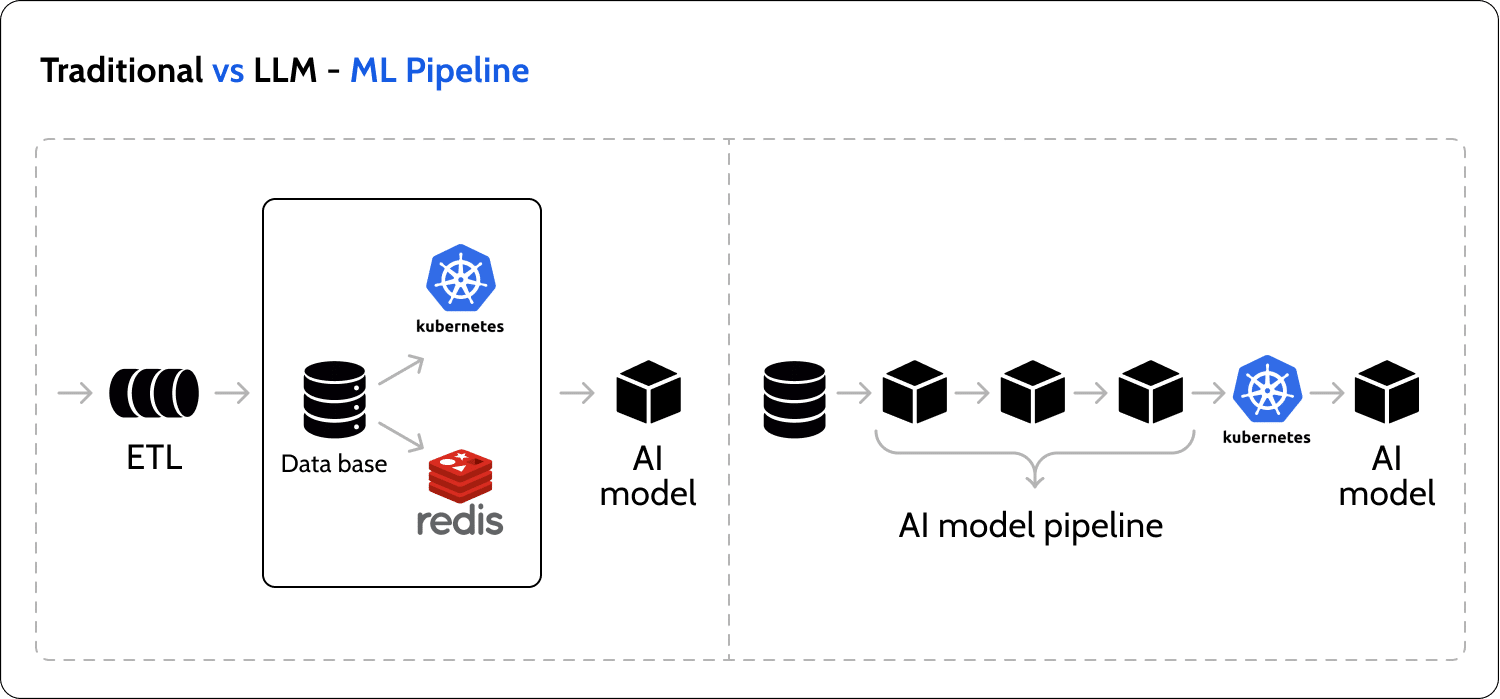

Let’s get the obvious out of the way and start our evaluation with Generative AI and Large Language Models (LLMs). Yes, it’s still early days, but LLMs are changing how many companies use ML. Typically, ML is considered a component separate from the data pipeline, one that is called to consume data that has already been processed and reduced. LLMs introduced the concept of AI pipelines, in which a more significant part of the ETL is done with AI.

Regarding LLM pipelines, as of July 2023, it seems that Azure has understood this concept far better than GCP. Azure has been steadily releasing more features and updates to its AI suite: a huge model catalog (featuring GPT-4, Falcon, Llama 2, and most open-source models from Hugging Face), pipeline jobs, data inspection tools, model and environment management tools, no-code pipelines, and, more recently, Prompt flow.

Azure’s Prompt flow offers a well-designed web IDE for working with LLMs, from prompt engineering to no-code development of chains and pipelines. Google has yet to introduce its own answer to Prompt flow; however, given how fast everyone is moving in this space, we’re sure it’s only a matter of time.

All in all, it feels like Microsoft is taking the right steps regarding Gen AI: keeping up to date with trends.

Proprietary models

Google has always been at the forefront of AI model research, with notable contributions such as AlphaGo, Word2Vec, GoogLeNet, and the influential paper “Attention Is All You Need,” which inspired the creation of LLMs like GPT. In contrast, Microsoft invested in OpenAI early on and established a very deep collaboration with the company.

Azure AI users can easily access OpenAI models through their Azure subscription, making it a convenient one-stop shop for these models. Although access is not guaranteed, Azure lets users work with deployed OpenAI models. Azure also provides additional layers of protection on top of the OpenAI APIs, ensuring extra safety when integrating them into real-life use cases. It’s worth mentioning that OpenAI models are not exclusive to Azure, so the integration with Azure doesn’t necessarily offer a significant advantage.

Google has developed proprietary models, such as Bard (for chat) and PaLM 2 (base model), and their focus today lies in prompt engineering and exposing the models as APIs. Integrating Google’s models using the Python client is straightforward, allowing users to control the quality of the models. However, integration with other backends is not currently supported, and advanced chaining and function calling are lacking. On the other hand, Google permits tuning of one’s own LLM starting from their base models.

To close the subject on proprietary models, Azure does not have its own models, but their collaboration with OpenAI gives them preferred access to the most advanced models available today. While Google’s models are rapidly improving, they still have a ways to go before reaching the level of GPT-4. For the moment, the place to find superior models is directly on Azure AI.

Two sidenotes here regarding LLM evaluation and proprietary vs. open-source LLMs. First, as of today, there are no clear methods or agreed-upon standards for evaluating LLMs; however, typically, OpenAI’s GPT-4 outperforms Google’s models. Second, the LLM landscape has been changing rapidly, and open-source models are quickly closing the gap with proprietary ones. While this is a topic in its own right (that we’ll explore down the road), it raises the question of how long access to proprietary models will even be a factor in choosing between Vertex AI and Azure AI.

AutoML

Before LLMs and generative AI garnered all the attention, AutoML was a hot topic. Cloud vendors recognized the potential of transfer learning, a method that allows developers to leverage a base model for various ML tasks such as computer vision (detection), tabular data, and time series forecasting, and both Google Cloud and Azure have made progress in this area.
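To illustrate the transfer learning idea described above, here is a minimal sketch in plain Python. It is not how either vendor implements AutoML; the "base model" is a hypothetical, hand-written feature extractor (`base_features`) that stays frozen, and only a small logistic-regression head is trained on the new task. All names and the toy data are assumptions made for illustration.

```python
import math

# Hypothetical "pretrained" base model: a frozen feature extractor whose
# parameters are never updated. Here it is just a fixed function.
def base_features(x):
    return [x[0] + x[1], x[0] - x[1]]

def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))

def train_head(samples, labels, epochs=500, lr=0.5):
    """Fit a logistic-regression head on top of the frozen features."""
    feats = [base_features(x) for x in samples]  # computed once, never retrained
    w = [0.0, 0.0]
    b = 0.0
    for _ in range(epochs):
        for f, y in zip(feats, labels):
            p = sigmoid(w[0] * f[0] + w[1] * f[1] + b)
            err = p - y  # gradient of the log loss with respect to the logit
            w = [wi - lr * err * fi for wi, fi in zip(w, f)]
            b -= lr * err
    return w, b

def predict(x, w, b):
    f = base_features(x)
    score = sum(wi * fi for wi, fi in zip(w, f)) + b
    return 1 if sigmoid(score) >= 0.5 else 0

# Toy task-specific dataset (made up for this example).
samples = [(0.0, 0.0), (0.2, 0.1), (0.9, 1.0), (1.0, 0.8)]
labels = [0, 0, 1, 1]

w, b = train_head(samples, labels)
predictions = [predict(x, w, b) for x in samples]
```

The point of the sketch is the division of labor: the expensive part (the base model) is reused as-is, and only a small number of parameters are learned for the new task.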

While Azure provides more information about the foundation models and relies on popular open-source models like ResNet for computer vision, Google has been more secretive about its training methods. Some publications suggest that Google utilizes an approach called NAS (Neural Architecture Search) not only for tuning existing models but also for altering their architecture.

Although few benchmarks compare the quality of these models, we believe that Google’s approach, which involves architecture search rather than just fine-tuning models, gives them an advantage in the AutoML domain. It is worth noting that Azure’s solution for tabular data appears to have a better understanding of ML pipelines, which it integrates more tightly with model training than Google does. For instance, Azure’s AutoML includes stages for data imputation. Some may argue that this represents the next step in AutoML pipelines, but we believe it violates the principle of separation of concerns. Therefore, it is unclear whether Azure’s approach is beneficial or not.
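To make the separation-of-concerns point concrete, here is a minimal sketch of imputation kept as its own stage, outside of model training. It does not represent either platform’s API; the function names (`fit_mean_imputer`, `impute`) and the sample rows are hypothetical and chosen only for illustration.

```python
from statistics import mean

def fit_mean_imputer(rows):
    """Learn one mean per column from the observed (non-None) values.

    Note: a column with no observed values would raise StatisticsError.
    """
    columns = list(zip(*rows))
    return [mean(v for v in col if v is not None) for col in columns]

def impute(rows, column_means):
    """Replace each missing value with the mean learned for its column."""
    return [
        [m if v is None else v for v, m in zip(row, column_means)]
        for row in rows
    ]

# Toy tabular data with missing values (made up for this example).
train_rows = [[1.0, 10.0], [None, 20.0], [3.0, None]]

# Stage 1: data preparation, owned by the data pipeline.
column_means = fit_mean_imputer(train_rows)
clean_rows = impute(train_rows, column_means)

# Stage 2 (not shown): model training consumes clean_rows and never
# needs to know how the missing values were filled.
```

Keeping the imputer separate means the same learned means can be reused at inference time and swapped out without retraining the model, which is the benefit a tightly coupled AutoML stage trades away for convenience.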

Data solution

Moving away from our AI focus again, let’s discuss the data offerings of Azure and GCP. Google has been a market leader in the data field for many years, pioneering technologies like MapReduce (which inspired Hadoop), Dremel, and more, which have been transformed into cloud services such as Dataproc and BigQuery. Google’s data solution includes powerful tools for storing, organizing, and transferring data. On the other hand, Azure takes a managed approach with Data Factory, providing a code-free solution. They also highlight their collaboration with Databricks for customers with complex use cases. It’s always great to see ecosystem partnerships, but both Azure and GCP integrate with Databricks, making it a commodity in this case, not a differentiator.

Google’s investment in tools like BigQuery, which offers standard SQL, as well as a managed version of Airflow for orchestrating pipelines, gives them an advantage in the realm of data solutions. Since data serves as the foundation for any AI application, we believe Google has an edge in this area.

MLOps

While LLMs and LLMOps are all the rage at the moment, MLOps has lost none of its relevance. Between 2018 and 2022, cloud vendors dedicated significant efforts to establishing development, deployment, and monitoring processes for ML workloads. However, it’s fair to say that neither platform has fully succeeded in this regard. One need only look at the numerous rebrandings, deprecations, and redesigns of popular MLOps services that both GCP and Azure have undergone.

Google provides a combination of ML APIs that don’t require training, as well as a managed service supporting training and hosting for popular frameworks like TensorFlow and XGBoost out of the box. Additionally, it offers Kubeflow pipelines as a semi-managed service for more complex training workflows. Furthermore, we already mentioned GCP’s extensive AutoML models, which simplify MLOps in many cases. Azure also offers training for certain popular frameworks, but interestingly, their primary focus lies in AutoML. As part of their developer experience, Microsoft has deeply integrated Azure ML with VS Code, enabling developers to conveniently build and train models online from their workstations. Despite these platforms functioning reasonably well for some use cases, it’s not surprising to still find open-source libraries and specialized MLOps tools from startups, such as Seldon and KServe, which we recently reviewed. Superwise serves as another example of how cloud vendors’ wide range of offerings sets them up to be middling in most regards rather than best-in-class, ultimately requiring users to integrate with third-party tools that excel in specific domains of MLOps. We give both platforms a “passing” grade, but with reservations, for their MLOps stack.

Notebooks demonstrate the differences

When comparing the two platforms, Google Cloud and Azure, we observed that Google Cloud is primarily focused on developers building for developers, while Azure emphasizes building apps for users. These differences are evident in their respective notebook services.

Google has chosen to wrap Jupyter as their code service, with Vertex AI Workbench offering an experience that closely resembles running Jupyter notebooks on your own MacBook. The UI remains unchanged, and if needed, you can SSH to the underlying virtual machine. On the other hand, Azure AI has transformed its notebook service into a managed serverless product. The UI is fully integrated with the Azure AI interface, creating a seamless user experience. It feels like a natural extension of the Azure environment.

Typically, turning Jupyter into a managed service has proven challenging for many products. However, Azure seems to have succeeded in providing a responsive and stable experience. The question of the correct level of abstraction for Jupyter depends on personal preferences. Nonetheless, we consider this case as a compelling example highlighting the differences between the two platforms.

Summary

Since its surge in popularity around 2014, the field of machine learning (ML) has expanded far beyond sklearn pipelines. There are now numerous additional considerations, including dedicated hardware such as TPUs and GPUs, ML monitoring, responsible AI practices, regulatory compliance, scalability, and more.

In this review, our aim was to highlight the key differences between Vertex AI and Azure AI. We found that while Google continues to offer an excellent developer experience, they are lagging behind in the realm of Large Language Models (LLMs) and AI pipelines. On the other hand, Microsoft’s collaboration with OpenAI and its track record of developing user-focused software like Windows provide a user-friendly system that caters to many use cases, albeit with potential limitations for developers. Therefore, if your focus is on rapidly building AI applications, we would currently recommend Azure AI. For a more robust ML system and complex use cases, we see Vertex AI as the advantageous option.
