Beyond the obvious conversations about deploying LLMs into business products and processes, a quieter, less publicized discussion is emerging: ML vs. LLM. Are “plain old” Machine Learning models outdated, and from here on out, should everything AI be about LLMs? Is new necessarily better?

This article will break down the ML vs. LLM debate and delve into their distinctions, functionalities, and when one might be preferable over the other in AI applications.

Drawing a line between ML and LLM

First, there are no LLMs without ML. Machine Learning is the broad field comprising all sorts of algorithms and models, from simple Naive Bayes to the deepest Neural Networks. Within this field, however, it’s possible to draw boundaries between types of models based on how they learn. In particular, Neural Networks and the back-propagation method used to train them are the cornerstone concepts that unlocked what we now see in the form of LLMs. They underpin the most significant breakthroughs of recent years on complex problems in computer vision, natural language processing, and reinforcement learning. Yet NNs were not much of a game changer in most real-world applications until roughly ten years ago. This was not because of a conceptual flaw but primarily because of technical limitations in data storage and computational power. Until GPUs and inexpensive data storage and collection became widespread, solving complex problems meant making the best use of the limited resources and data available.

Since the 1960s, ML models have relied heavily on feature engineering, forming the backbone of many successful applications in industries from finance to healthcare. Classic Support Vector Machines, Decision Trees, and even shallow Neural Networks (the forerunners of modern-day LLMs) were only ever as good as the feature engineering performed on the available data before training. This approach is limited by the finite human capacity for designing complex mathematical transformations, which requires not only deep domain expertise but also creativity and solid mathematical intuition.

In contrast, Deep Neural Networks represent a major leap forward because they automate and improve feature extraction, reducing the need for extensive preprocessing. This is especially true of Transformer and CNN architectures. Transformers in particular rely on self-supervised learning to exploit the vast amounts of unstructured data available. In many fields, such as recommender systems and search, these Deep Learning models are often used solely for feature extraction, sparing teams the hard work of hand-crafting ways to extract valuable information from images and text. However, if the downstream task requires a learning-to-rank technique, the final model is often not a Deep Learning model or an LLM. Instead, it is a classical Machine Learning model such as gradient-boosted trees.
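To make “feature engineering” concrete, here is a minimal sketch of the kind of hand-crafted features a classical model might consume for review sentiment. The lexicons, the `extract_features` helper, and the choice of features are all hypothetical, picked only for illustration.

```python
# Hypothetical, hand-picked lexicons: in practice these come from domain expertise.
POSITIVE_WORDS = {"love", "best", "great", "amazing"}
NEGATIVE_WORDS = {"hate", "worst", "bad", "broken"}


def extract_features(text: str) -> list[float]:
    """Turn a raw review into a fixed-length numeric vector a classical model can use."""
    tokens = [t.strip(".,!?'\"").lower() for t in text.split()]
    tokens = [t for t in tokens if t]
    n_pos = sum(t in POSITIVE_WORDS for t in tokens)
    n_neg = sum(t in NEGATIVE_WORDS for t in tokens)
    return [
        float(len(tokens)),      # review length in words
        float(n_pos),            # count of positive lexicon hits
        float(n_neg),            # count of negative lexicon hits
        float(text.count("!")),  # exclamation marks as an intensity cue
    ]


print(extract_features("I love this product! It's the best."))  # prints [7.0, 2.0, 0.0, 1.0]
```

Every entry in that vector is a human decision. A model trained on it can never recover a signal nobody thought to encode, which is the limitation deep networks address by learning features directly from raw text.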

In NLP (Natural Language Processing), classical techniques were instrumental for turning text into vectors that downstream models could consume. These included text-processing methods like TF-IDF and Bag of Words, alongside embedding models such as Word2Vec and FastText. Spam detection systems, for example, have long used these simple techniques to categorize emails. Looking back at the NLP landscape circa 2017, before the advent of BERT, a substantial portion of machine learning effort in NLP went into designing and tuning preprocessing steps for unstructured text.
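For readers who have not implemented these classical vectorizers, here is a minimal from-scratch sketch of Bag of Words and TF-IDF on a toy spam-style corpus. It uses the plain textbook weighting, term frequency × log(N / document frequency). Libraries differ in the details: scikit-learn’s `TfidfVectorizer`, for instance, uses a smoothed IDF and L2-normalizes rows by default, so its numbers will not match these.

```python
import math
from collections import Counter


def bag_of_words(docs: list[str]) -> tuple[list[str], list[list[int]]]:
    """Return the sorted vocabulary and one word-count vector per document."""
    tokenized = [doc.lower().split() for doc in docs]
    vocab = sorted({tok for toks in tokenized for tok in toks})
    vectors = []
    for toks in tokenized:
        counts = Counter(toks)
        vectors.append([counts[word] for word in vocab])
    return vocab, vectors


def tf_idf(vectors: list[list[int]]) -> list[list[float]]:
    """Weight raw counts by term frequency times log(N / document frequency)."""
    n_docs = len(vectors)
    n_terms = len(vectors[0])
    # Number of documents containing each term (always >= 1, since vocab comes from the docs)
    df = [sum(1 for vec in vectors if vec[j] > 0) for j in range(n_terms)]
    weighted = []
    for vec in vectors:
        total = sum(vec)
        weighted.append([
            (vec[j] / total) * math.log(n_docs / df[j]) if total else 0.0
            for j in range(n_terms)
        ])
    return weighted


emails = ["win free money now", "meeting notes for monday", "free money offer"]
vocab, counts = bag_of_words(emails)
weights = tf_idf(counts)
for word, weight in zip(vocab, weights[0]):
    print(f"{word:>8}: {weight:.3f}")
```

Words that appear in many emails (such as “free” here) are down-weighted relative to words unique to one email (such as “win”). A term present in every document would get a weight of zero.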

The arrival of Transformer-based models such as BERT, and the research built on top of them, paved the way for the spread of LLMs, which are far more than a bigger Word2Vec. LLMs are trained on the vast and diverse text available on the internet. This lets a single model excel at complex linguistic tasks that were previously out of reach, such as translation, question-answering, and summarization, as evidenced by models like T5, Megatron, and GPT-3. These LLMs showed that size matters in Machine Learning: the most capable multi-task models have grown to hundreds of billions of parameters or more.

From this explanation, it should be clear that the line in the sand between ML and LLMs is dictated by the specific needs of the application and the task at hand. For instance, you may need nuanced language understanding or Generative AI, such as building a chatbot or summarizing texts. In that case, Large Language Models are often the only feasible option because of their advanced capabilities in handling complex language tasks.

The other factor in choosing between ML and LLM methods is constraints. Traditional Machine Learning shines where interpretability and computational efficiency are paramount, as in structured data analysis or under strict resource limitations. From fraud detection to running computer vision algorithms on edge devices, LLMs are not yet the best off-the-shelf solution because of their energy and computational requirements.
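To illustrate why classical models are considered interpretable, here is a minimal sketch of a logistic-regression-style fraud scorer. It reports each feature’s additive contribution to the score alongside the prediction. The feature names, weights, and bias are hypothetical values standing in for what a trained model would learn.

```python
import math

# Hypothetical weights and bias, as if learned by a logistic-regression fraud model.
WEIGHTS = {"amount_usd": 0.002, "is_foreign": 1.5, "night_time": 0.8}
BIAS = -4.0


def predict_with_explanation(features: dict[str, float]) -> tuple[float, dict[str, float]]:
    """Return the fraud probability plus each feature's additive contribution to the logit."""
    contributions = {name: WEIGHTS[name] * value for name, value in features.items()}
    logit = BIAS + sum(contributions.values())
    probability = 1.0 / (1.0 + math.exp(-logit))  # sigmoid
    return probability, contributions


prob, why = predict_with_explanation({"amount_usd": 1000.0, "is_foreign": 1.0, "night_time": 0.0})
print(f"fraud probability: {prob:.3f}")
for name, contribution in sorted(why.items(), key=lambda kv: -kv[1]):
    print(f"  {name}: {contribution:+.2f}")
```

An analyst can read off exactly why a transaction scored as it did, for example that the amount pushed the score up more than the foreign-merchant flag did. An LLM offers no comparably direct account of its outputs.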

However, there are gray areas, such as sentiment analysis or recommendation systems, where both methods can be viable. Each offers unique advantages, which makes them complementary rather than competitive. The next part of this post explores each technique’s implementation details and considerations to guide you through the decision-making process for your specific use case.

The decision matrix for ML vs. LLM

While LLMs shine in generative tasks requiring deep language understanding, traditional ML remains effective for more discriminative tasks due to its efficiency and lower resource demands. For instance, ML can be preferable for sentiment analysis or customer churn prediction, while LLMs are the go-to for complex tasks like code generation or text completion.

| Feature | Machine Learning | Deep Learning | LLMs |
| --- | --- | --- | --- |
| Definition | AI subset for systems that learn from historical data | ML subset based on deep “artificial neural networks”, with rich representation-learning and feature-extraction capabilities | Deep Learning models for natural language (or multimodal data) with very large parameter counts, often billions |
| Data requirements for training | Less data (thousands of points); heavy feature engineering and data preparation | Large data (millions of points); little feature engineering and data preparation | Massive datasets (billions of points); little feature engineering and data preparation |
| Model complexity | Simpler models (Decision Trees, Linear Regression, Random Forests) | Complex, multi-layered models (CNNs, RNNs) | Extremely complex, from hundreds of millions to hundreds of billions of parameters (e.g., BERT, GPT-3) |
| Training time | Faster due to simpler models | Longer due to data size and complexity | Time- and resource-intensive, requiring specialized hardware |
| Is training required? | Always | Transfer learning is possible | Fine-tuning or prompt engineering |
| Interpretability | Higher; simpler models are easier to interpret | Lower; “black-box” nature | Very low; outputs are difficult to explain |
| Applications | Broad: data analysis and understanding, predictive modeling | Unstructured data: image/speech recognition, NLP, decision-making | Complex language tasks: translation, question-answering, text generation, reasoning |
| Hardware requirements | Lower; standard CPUs are often sufficient | Higher; often needs GPUs/TPUs | Cutting-edge hardware: high-end GPUs/TPUs |
| Generalization | Low; needs to be retrained with new data | Medium; allows transfer learning by fine-tuning with small amounts of data | High; LLMs are evaluated across many tasks and a broad range of contexts. Fine-tuning is still possible for very specific domains. |

ML vs. DL vs. LLM demo pipelines

Let’s explore the use case of building a sentiment analysis model that predicts whether reviews on an e-commerce website are positive or negative.

I’ll explore three different approaches: Machine Learning with XGBoost, Deep Learning with TensorFlow, and sentiment prediction with a Large Language Model from OpenAI.

ML with XGBoost

First, I’ll explore the use of XGBoost, a powerful and efficient Machine Learning algorithm, for sentiment analysis. This example showcases the process of feature extraction from textual data, model training, and evaluation, emphasizing XGBoost’s effectiveness in handling structured data.

```python
import xgboost as xgb
from sklearn.feature_extraction.text import TfidfVectorizer
from sklearn.model_selection import train_test_split
from sklearn.metrics import accuracy_score

# Sample data (a toy dataset: real models need many more labeled reviews,
# so the accuracy printed below is not meaningful)
texts = ["I love this product", "I hate this product", "This is the best purchase", "This is the worst purchase"]
labels = [1, 0, 1, 0]  # 1 for positive, 0 for negative

# Split the raw texts first so the vectorizer never sees the test data
texts_train, texts_test, y_train, y_test = train_test_split(texts, labels, test_size=0.2, random_state=0)

# Text vectorization using TF-IDF (vocabulary and IDF weights learned on training texts only)
vectorizer = TfidfVectorizer()
X_train = vectorizer.fit_transform(texts_train)
X_test = vectorizer.transform(texts_test)

# Model training (use_label_encoder is omitted: it is deprecated and no longer needed)
model = xgb.XGBClassifier(eval_metric='logloss')
model.fit(X_train, y_train)

# Model evaluation
predictions = model.predict(X_test)
print("Accuracy:", accuracy_score(y_test, predictions))
```

This code snippet shows a Machine Learning pipeline for sentiment analysis. It uses TF-IDF for text vectorization and XGBoost, a popular gradient boosting framework, for classification. The idea is to convert text into numerical vectors with TF-IDF, which weights each word by its importance to a document relative to the whole corpus. XGBoost, an efficient algorithm based on boosted trees, is then trained on those vectors as a binary classification task. This pipeline is particularly effective on structured (tabular) features and is well-suited to scenarios where interpretability and computational efficiency are key. Note, however, that this example assumes the text fed to the pipeline is in pristine condition, which is rarely the case. More often than not, the model is preceded by text-processing steps such as stop-word removal and text normalization.
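As a rough idea of what those text-processing steps can look like, here is a minimal normalization sketch using only Python’s standard library. It lowercases the text, strips URLs and non-letter characters, and drops stop-words. The tiny `STOP_WORDS` set and the `normalize` helper are hypothetical; real pipelines typically use a curated list. (scikit-learn’s `TfidfVectorizer` also accepts `stop_words="english"` and lowercases by default.)

```python
import re

# A tiny, hypothetical stop-word list, for illustration only.
STOP_WORDS = {"i", "this", "is", "the", "a", "an", "and", "it"}


def normalize(text: str) -> str:
    """Lowercase, strip URLs and non-letter characters, drop stop-words, collapse whitespace."""
    text = text.lower()
    text = re.sub(r"https?://\S+", " ", text)  # remove URLs
    text = re.sub(r"[^a-z\s]", " ", text)       # keep letters and whitespace only
    tokens = [tok for tok in text.split() if tok not in STOP_WORDS]
    return " ".join(tokens)


print(normalize("I LOVE this product!!! See https://example.com"))  # prints "love product see"
```

For sentiment in particular, the stop-word list needs care. Generic lists often include negations such as “not”, and removing them can flip the meaning of a review, which is why “not” is deliberately absent from the list above.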

DL with TensorFlow

In the next example, I’ll demonstrate a Deep Learning approach using TensorFlow. Here, we build a simple (shallow) neural network to show how Deep Learning can learn patterns in language through the layers of a neural network. Here’s an example using TensorFlow with Keras:

```python
import numpy as np
import tensorflow as tf

# Sample data
texts = np.array(["I love this product", "I hate this product", "This is the best purchase", "This is the worst purchase"])
labels = np.array([1, 0, 1, 0])

# Tokenization: map words to integer ids and pad/truncate every review to 10 tokens.
# TextVectorization is the layer Keras recommends over the deprecated Tokenizer/pad_sequences utilities.
vectorizer = tf.keras.layers.TextVectorization(max_tokens=100, output_sequence_length=10)
vectorizer.adapt(texts)
padded_sequences = vectorizer(texts).numpy()

# Model definition
model = tf.keras.Sequential([
    tf.keras.Input(shape=(10,), dtype="int64"),
    tf.keras.layers.Embedding(input_dim=100, output_dim=16),  # 16-dimensional word vectors
    tf.keras.layers.GlobalAveragePooling1D(),                 # average the word vectors of a review
    tf.keras.layers.Dense(1, activation='sigmoid'),           # probability that the review is positive
])

# Model compilation
model.compile(loss='binary_crossentropy', optimizer='adam', metrics=['accuracy'])

# Model training (with 4 toy examples, validation_split holds out the last one)
model.fit(padded_sequences, labels, epochs=10, validation_split=0.2)
```

Following the earlier traditional Machine Learning approach to sentiment analysis, this code snippet shifts to a Deep Learning technique using TensorFlow, a powerful framework for building neural network-based models. The core of this example is the embedding layer, a concept notably popularized by Word2Vec. This layer maps each word to a dense vector (16 dimensions here) whose values are learned during training. Such vectors can capture semantic relationships that sparse, count-based representations like TF-IDF cannot.

Unlike the earlier TF-IDF and XGBoost approach, this model learns its own word representations from the data instead of relying on a fixed weighting scheme. However, averaging those vectors ignores word order, so it does not model context the way a Transformer does. The architecture is simple: an embedding layer, a pooling layer that averages the word vectors into a single fixed-size vector, and a dense layer for classification. For the sake of simplicity, the network is deliberately shallow. To take full advantage of the feature extraction capabilities of DNNs, we’d need a network with more layers and more complexity. This approach is potent for large, complex datasets where capturing nuanced linguistic patterns is crucial. It’s a prime example of how Deep Learning can automate and enhance feature extraction, which traditionally requires extensive manual effort and domain expertise.
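To show what the embedding and pooling layers actually compute, here is a minimal pure-Python sketch of the model’s forward pass: embedding lookup, average pooling, then a single sigmoid unit. The weights are random stand-ins for trained parameters. The sizes (vocabulary of 100, 16 dimensions, sequences of 10) mirror the Keras example, but the `forward` function is illustrative and is not how Keras implements it internally.

```python
import math
import random

random.seed(0)
VOCAB_SIZE, EMBED_DIM = 100, 16

# Randomly initialised parameters, standing in for what training would learn.
embedding = [[random.uniform(-0.05, 0.05) for _ in range(EMBED_DIM)] for _ in range(VOCAB_SIZE)]
dense_w = [random.uniform(-0.05, 0.05) for _ in range(EMBED_DIM)]
dense_b = 0.0


def forward(token_ids: list[int]) -> float:
    """Embedding lookup -> average over the sequence -> one sigmoid unit."""
    vectors = [embedding[i] for i in token_ids]                  # seq_len x EMBED_DIM
    pooled = [sum(col) / len(vectors) for col in zip(*vectors)]  # EMBED_DIM values
    logit = sum(w * x for w, x in zip(dense_w, pooled)) + dense_b
    return 1.0 / (1.0 + math.exp(-logit))                         # P(positive)


# A padded review: three word ids followed by padding id 0
print(forward([2, 5, 7, 0, 0, 0, 0, 0, 0, 0]))
```

Because the Keras example does not enable masking, padding positions (id 0) are averaged in as well, and the sketch does the same. The averaging step also makes the output insensitive to word order: `forward([2, 5, 7])` and `forward([7, 5, 2])` agree up to floating-point rounding.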

LLM with GPT-3

Lastly, I turn to an example utilizing a Large Language Model, GPT-3, demonstrating how these advanced models, pre-trained on vast datasets, can be leveraged for sentiment analysis with minimal setup, albeit with dependencies on external APIs and resources. Here’s an example using OpenAI’s GPT-3 API for sentiment analysis:

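```python
from openai import OpenAI

# Uses the openai>=1.0 Python client; the older openai.Completion.create interface was removed in 1.0.
# The client can also read the key from the OPENAI_API_KEY environment variable.
client = OpenAI(api_key="your-api-key")

response = client.completions.create(
    model="davinci-002",
    # The trailing "Sentiment:" cue nudges a base (non-chat) model to answer with a label
    prompt="Determine the sentiment of this text: 'I love this product! It's absolutely amazing and works like a charm.'\nSentiment:",
    max_tokens=60,
)
print(response.choices[0].text.strip())
```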

This final snippet illustrates a different approach to sentiment analysis, leveraging OpenAI’s GPT-3 (Davinci model), a state-of-the-art Large Language Model (LLM).

Here, the complexity of model training and feature extraction is abstracted away, as you’re essentially taking a shortcut by using a pre-trained model. Unlike the previous examples where models were trained on specific datasets for the task at hand, GPT-3 has been trained on massive, diverse datasets, enabling it to understand and generate human-like text.

The key advantage of this approach lies in its simplicity and versatility. With only a few lines of code and some prompt engineering, you can harness the power of GPT models to perform a wide range of tasks, including sentiment analysis, without the need for extensive data preprocessing or model training. This snippet sends a text to the GPT-3 API and receives an assessment of its sentiment, showcasing how LLMs can be served ‘out of the box’ for immediate use. It’s a testament to the advancements in natural language processing, where the complexity of language understanding is embedded within the model itself, trained on extensive data, making it exceptionally powerful and user-friendly for various applications.
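In practice, “some prompt engineering” usually means two small pieces of glue code. One wraps the input in a clear instruction; the other maps the model’s free-text reply back to a label your application can use. Here is a minimal sketch of both. The prompt wording and the helper names `build_prompt` and `parse_sentiment` are hypothetical, and no API is called: the completion text is passed in by hand.

```python
from typing import Optional


def build_prompt(review: str) -> str:
    """Wrap a review in an instruction that asks for a one-word label."""
    return (
        "Classify the sentiment of the following product review as "
        "'positive' or 'negative'.\n\n"
        f"Review: {review}\n"
        "Sentiment:"
    )


def parse_sentiment(completion_text: str) -> Optional[str]:
    """Map the model's free-text answer to a label, or None if it is ambiguous."""
    answer = completion_text.strip().lower()
    has_pos = "positive" in answer
    has_neg = "negative" in answer
    if has_pos and not has_neg:
        return "positive"
    if has_neg and not has_pos:
        return "negative"
    return None  # e.g. "neutral", or an answer mentioning both labels


print(build_prompt("Works like a charm."))
print(parse_sentiment(" Positive."))
```

Returning `None` for ambiguous replies, rather than guessing, keeps unparseable LLM output from silently becoming a wrong label downstream.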

Although this solution is easier to implement and probably more robust, it hides the very complex process of training a Large Language Model. That hidden complexity brings technical and financial considerations that we’ll delve into next.

Diving into technical considerations

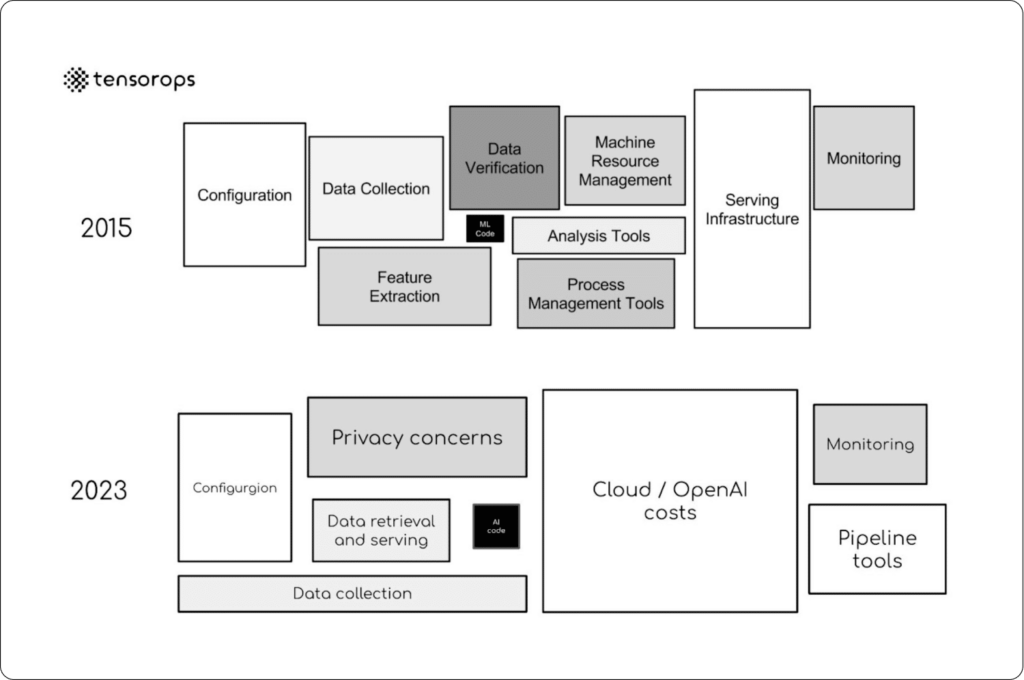

Exploring the technical landscape of Large Language Models requires navigating both technical debt and cost considerations. These models simplify deployment and reduce complexities, as shown in the examples above, but introduce financial implications. This shift from technical to financial challenges prompts a closer look at the trade-offs between technical efficiency and the tangible costs associated with deploying and maintaining LLMs.

Technical debt and cost

Reflecting on the earlier examples, it’s clear that LLMs like GPT-4 or Llama offer streamlined processing and ease of use, but they also present challenges in terms of cost. These models can understand and respond to a wide range of prompts. As a result, they significantly simplify deployment and reduce the complexity traditionally associated with model development and maintenance. This stands in stark contrast to ML methods like XGBoost, which require more hands-on effort in feature engineering and model tuning.

When considering applications of LLMs, you can view them as a way to convert the complexity and technical challenges of building machine learning and deep learning pipelines into a financial cost. This is because transformers, the underlying architecture of these models, take over the intricate task of feature extraction, which traditionally requires significant engineering resources and expertise. However, this convenience comes at the price of greater reliance on powerful graphics processing units (GPUs). If you host your own LLM, such as Llama, the GPUs are a direct cost. If you use a managed service, such as OpenAI’s models, their expense is factored into the service fee. Essentially, the burden of technical complexity shifts to a financial one, making the technology accessible but at a price.
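A back-of-the-envelope calculation makes this trade-off tangible. Here is a minimal sketch comparing a per-token API bill with the cost of keeping a rented GPU running for a self-hosted model. All prices, token counts, and request volumes below are hypothetical placeholders, not any provider’s actual rates. The self-hosted figure counts compute only, not the engineering time needed to operate it.

```python
def monthly_api_cost(requests_per_day: int, input_tokens: int, output_tokens: int,
                     price_in_per_1k: float, price_out_per_1k: float, days: int = 30) -> float:
    """Estimated monthly bill for a managed LLM API that charges per 1,000 tokens."""
    per_request = (input_tokens / 1000) * price_in_per_1k + (output_tokens / 1000) * price_out_per_1k
    return requests_per_day * days * per_request


def monthly_self_hosted_cost(gpu_hourly_rate: float, n_gpus: int,
                             hours_per_day: float = 24, days: int = 30) -> float:
    """Estimated monthly cost of keeping GPUs running for a self-hosted model (compute only)."""
    return gpu_hourly_rate * n_gpus * hours_per_day * days


# Hypothetical workload: 10k short sentiment requests per day, with placeholder prices.
api = monthly_api_cost(requests_per_day=10_000, input_tokens=200, output_tokens=5,
                       price_in_per_1k=0.0005, price_out_per_1k=0.0015)
hosted = monthly_self_hosted_cost(gpu_hourly_rate=2.0, n_gpus=1)
print(f"API: ${api:,.2f}/month, self-hosted GPU: ${hosted:,.2f}/month")
```

Which option is cheaper depends heavily on request volume and prompt length. It is worth re-running this kind of estimate with your provider’s current pricing and your real traffic before committing.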

Latency and task nature

In user-facing applications, the latency of AI models is critical. Here, latency means the time a model takes to process an input and respond. Traditional ML models typically run much faster. This makes them well-suited to high-speed, real-time applications such as financial trading algorithms, recommendations, or emergency response systems, where split-second decisions are imperative.
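When comparing candidates on latency, it helps to look at the distribution of response times rather than a single average, since tail latency (p95) is what users notice. Here is a minimal measurement sketch using only the standard library. `toy_predict` is a hypothetical stand-in; in practice you would pass a trained model’s predict function or a wrapper around an LLM API call.

```python
import statistics
import time
from typing import Callable, Dict, List


def measure_latency_ms(predict: Callable[[str], object], inputs: List[str],
                       warmup: int = 3) -> Dict[str, float]:
    """Time each call to `predict` and summarise the distribution in milliseconds."""
    for text in inputs[:warmup]:
        predict(text)  # warm-up calls (caches, lazy initialisation) are not timed
    samples = []
    for text in inputs:
        start = time.perf_counter()
        predict(text)
        samples.append((time.perf_counter() - start) * 1000.0)
    # 99 cut points; method="inclusive" keeps estimates within the observed range
    cuts = statistics.quantiles(samples, n=100, method="inclusive")
    return {"p50": cuts[49], "p95": cuts[94], "max": max(samples)}


def toy_predict(text: str) -> int:
    """Hypothetical stand-in for a fast model: scores a review by keyword hits."""
    return sum(word in {"love", "best"} for word in text.lower().split())


reviews = ["I love this product", "This is the worst purchase"] * 50
print(measure_latency_ms(toy_predict, reviews))
```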

For years, academia and industry have invested considerable effort in reducing the computational cost of training ML models and serving their predictions at scale.

However, the scenario shifts when dealing with LLMs. For instance, consider a virtual assistant in a customer support application. While immediate responses are essential, the comprehensive language understanding of LLMs can significantly enhance the quality and depth of interactions, justifying a slight delay in responses. This nuanced trade-off is also evident in content generation tasks, where the richness and coherence of generated text or images from LLMs can outweigh the need for instantaneous results that we (the users) are familiar with in other applications.

In essence, the choice between traditional ML models and LLMs involves a meticulous evaluation of the specific nature and urgency of the tasks at hand. The balance between latency and language understanding becomes a crucial factor in determining the optimal solution for a given technical context, showcasing the need for tailored approaches and the acknowledgment that different applications demand different considerations.

That said, considerable ongoing work aims to reduce the computational cost of LLMs so they can respond faster and at a larger scale.

Conclusion

Navigating ML vs. LLMs involves understanding their distinct advantages and limitations. The choice hinges on specific application needs and constraints like cost, latency, and task nature. With that said, there are two things in particular that we would suggest you always keep in mind when evaluating your choices.

First, model selection is as much an organizational consideration as an engineering one. You could go through this entire matrix and land on an API-based LLM as the best engineering choice, only to discover organizational deployment constraints that rule it out. Second, model selection is not necessarily an either-or decision, an approach that in our opinion is still relatively unexplored. In practice, a hybrid approach may often prove effective, combining LLMs’ nuanced language understanding with traditional ML’s speed and precision. For example, content moderation systems might use both to understand context and to classify content efficiently. As the field evolves, the decision matrix for engineers will keep shifting, requiring a keen understanding of both landscapes. For further reading, consider “Hidden Technical Debt in Machine Learning Systems” by Sculley et al. at Google, as well as case studies on hybrid approaches in NLP. This balance of innovation and practicality is the cornerstone of progress in the fast-moving field of language processing technologies.
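One common way to build such a hybrid is a confidence-based cascade. A fast, cheap classifier handles the cases it is sure about, and only uncertain inputs are escalated to an LLM. Here is a minimal sketch for content moderation. The keyword lists, the 0.8 threshold, and both models are hypothetical stubs; `llm_stub` stands in for a real LLM call that would judge the wording in context.

```python
from typing import Callable, Tuple

# Hypothetical keyword lists, for illustration only.
BLOCKLIST = {"scam", "spam"}           # clear-cut violations
CONTEXT_DEPENDENT = {"kill", "shoot"}  # harmless or harmful depending on context


def keyword_model(text: str) -> Tuple[str, float]:
    """Fast, cheap classifier: returns (label, confidence)."""
    words = set(text.lower().split())
    if words & BLOCKLIST:
        return "flag", 0.95
    if words & CONTEXT_DEPENDENT:
        return "allow", 0.5  # unsure: the words alone don't settle it
    return "allow", 0.9


def llm_stub(text: str) -> str:
    """Placeholder for an LLM call that would judge the wording in context."""
    return "allow" if "gym" in text.lower() else "flag"


def hybrid_classify(text: str,
                    fast_model: Callable[[str], Tuple[str, float]],
                    llm: Callable[[str], str],
                    threshold: float = 0.8) -> Tuple[str, str]:
    """Use the fast model when it is confident; escalate to the LLM otherwise."""
    label, confidence = fast_model(text)
    if confidence >= threshold:
        return label, "fast"
    return llm(text), "llm"


for post in ["this is a scam", "going to kill it at the gym", "nice photo"]:
    print(post, "->", hybrid_classify(post, keyword_model, llm_stub))
```

The threshold sets the trade-off. Raising it sends more traffic to the slower, costlier LLM, while lowering it leans more on the fast model’s judgment.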

Ready to get started with Superwise?

Head over to the Superwise platform and get started with easy, customizable, scalable, and secure model observability for free with our community edition.

Prefer a demo?

Click this link to book your demo, and our team will show what Superwise can do for your ML and business.