Of all the comparisons we’ve put together so far, Kubeflow vs. MLflow is the one that comes up most often. Although both are open-source tools for Machine Learning Operations (MLOps) with similar-sounding names, each was designed to support different aspects of the ML lifecycle. At their core, they serve separate purposes, but over time, their areas of overlap have grown.

Given that Kubeflow and MLflow have distinct primary functions, you may wonder why they need comparing at all. The point of this comparison isn’t to pit them against each other feature by feature but to understand their unique strengths, their compatibility, and how they can complement each other in different MLOps scenarios. It’s not about how they stack up against each other; it’s about how they stack up against your needs.


What are Kubeflow and MLflow?

When I first ventured into the world of MLOps, Kubeflow immediately stood out. Its promise of simplifying intricate tasks and organizing code within a unified platform was refreshing and alluring. Its significance was undeniable from the beginning, offering a fresh perspective in a rapidly evolving domain. However, whenever someone seeks my advice on model or experiment tracking, MLflow often comes to mind. I quickly realized its value because it seamlessly integrates with existing code. The beauty of MLflow is its simplicity; there’s no need to fret over infrastructure since it can effortlessly operate locally, making it a hassle-free choice in many scenarios.

Focusing on MLflow

Databricks’ MLflow is a framework for managing the lifecycle of ML applications. Once integrated into your existing ML code, it sends parameters, metrics, and models to a centralized MLflow server (or, for purely local use, to a directory on disk), which supports tasks like experiment tracking, model versioning, and deployment. MLflow offers a way to standardize and synchronize an organization’s machine learning workflows in a more lightweight and less opinionated way.

MLflow’s main capabilities:

- Tracking: logging and visualizing parameters, metrics, and artifacts as runs progress.

- Projects: a structure, format, and templates for running ML projects consistently by defining environments, dependencies, and workflows.

- Models and Model Registry: saving, deploying, versioning, and tagging models.

Focusing on Kubeflow

Kubeflow, started by a group of very talented engineers, mainly from Google, set out to become a comprehensive ML platform tailored for Kubernetes, the portable container orchestration system that can be deployed on practically any cloud or on-premises infrastructure. The idea was to leverage the popularity of Kubernetes as a DevOps tool and make an organization’s transition to MLOps as easy as possible by reusing most of Kubernetes’ features.

Kubeflow’s main capabilities:

Kubeflow streamlines ML model development, deployment, and resource management from inception to production. It incorporates:

- Notebooks for in-cluster coding.

- Custom training job operators supporting various frameworks.

- Scalable pipelines built from Docker containers.

- Deployment via add-ons such as the widely used KServe.

Comparing MLflow and Kubeflow by features

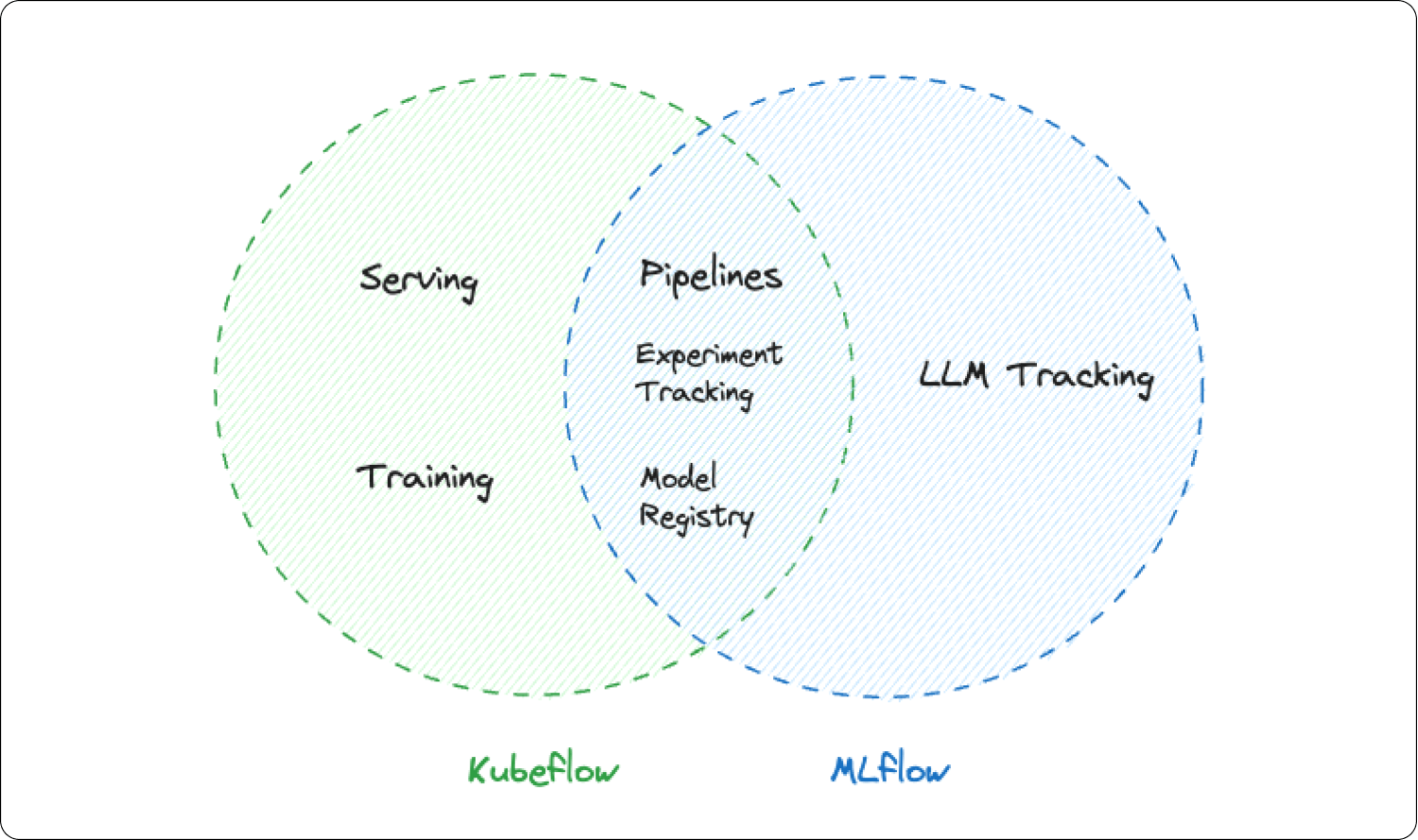

Despite their distinct primary objectives, MLflow and Kubeflow overlap in several areas of the broader machine learning ecosystem, specifically experiment tracking, model serving, model registry, and workflow orchestration. In the following sections, we’ll look at these shared areas.

| Feature | MLflow | Kubeflow |
| --- | --- | --- |
| Main idea | Framework | Portable platform |
| Integrated notebooks | No | Yes |
| Experiment tracking | Yes | Yes |
| Model serving | External | Yes |
| Model registry | Yes | Yes |
| Multi-tenancy | No | Yes |
| Pipelines | Yes | Yes |
| Resource management | No | Yes |
| Open-source | Yes | Yes |

Experiment tracking

Both platforms offer features for tracking machine learning experiments. MLflow has a dedicated tracking component where parameters, metrics, and artifacts can be logged and visualized, including the Git commit hash when the code runs from a Git repository (such as one hosted on GitHub). Similarly, Kubeflow, whether natively or through its integration with third-party platforms like TensorBoard, provides experiment visualization and tracking capabilities.

Out of the box, MLflow is designed with a strong focus on experiment tracking, making it a go-to choice for many data scientists and ML practitioners. Its intuitive interface and easy-to-use API let users log parameters, metrics, and artifacts quickly. Recording the commit hash of the code behind each run, and linking back to the repository, supports reproducibility, a critical and much sought-after capability in the machine learning lifecycle.

Kubeflow, on the other hand, is a more comprehensive platform designed to manage end-to-end machine learning workflows on Kubernetes. While it offers experiment tracking capabilities, they may not be as feature-rich or as straightforward as MLflow’s out of the box. However, what Kubeflow lacks in native experiment tracking, it makes up for with modularity and extensibility. Kubeflow’s architecture is built around the concept of components, giving users the flexibility to integrate more robust solutions, including third-party platforms like TensorBoard or even MLflow itself.

Model serving

Both platforms address the need to deploy machine learning models into production. Kubeflow brings KServe (previously KFServing) for serving models natively on Kubernetes, while MLflow’s Models component standardizes how models move from experimentation to production, regardless of the platform.

KServe provides a Kubernetes Custom Resource for serving machine learning models on arbitrary frameworks. It abstracts away the complexities of autoscaling, networking, health checking, and server configuration. This is instrumental when serving models in a cloud-native environment, ensuring scalability, resilience, and efficient resource utilization. Given its Kubernetes backbone, Kubeflow’s model serving is geared towards high-scale, high-availability scenarios and can serve multiple models or even model versions simultaneously.
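For a sense of what that Custom Resource looks like, the sketch below builds a minimal KServe `InferenceService` as a plain Python dictionary and prints it as JSON, which `kubectl apply -f` accepts just like YAML. The field layout follows the `serving.kserve.io/v1beta1` API as we understand it; the service name and `storageUri` bucket are hypothetical, and the snippet uses only the standard library, so nothing is sent to a cluster.

```python
import json


def inference_service(name: str, model_format: str, storage_uri: str,
                      min_replicas: int = 1, max_replicas: int = 3) -> dict:
    """Build a minimal KServe InferenceService manifest (v1beta1 layout)."""
    return {
        "apiVersion": "serving.kserve.io/v1beta1",
        "kind": "InferenceService",
        "metadata": {"name": name},
        "spec": {
            "predictor": {
                # KServe autoscales the predictor between these bounds.
                "minReplicas": min_replicas,
                "maxReplicas": max_replicas,
                "model": {
                    # The model format is used to pick a matching serving runtime.
                    "modelFormat": {"name": model_format},
                    "storageUri": storage_uri,
                },
            }
        },
    }


manifest = inference_service(
    name="churn-classifier",                             # hypothetical service name
    model_format="sklearn",
    storage_uri="gs://example-bucket/models/churn/v1",  # hypothetical model location
)
print(json.dumps(manifest, indent=2))
```

Applied to a cluster with KServe installed, a manifest like this is all the model owner writes; autoscaling, networking, and health checks are then handled by KServe rather than by application code.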

MLflow, on the other hand, leaves running the models to external infrastructure and doesn’t concern itself with what lies underneath. It simply enforces a consistent interface to ML models, which helps avoid errors in production when loading models or running inference. A noteworthy feature of MLflow is its platform-agnostic nature, which allows seamless integration with and deployment to various cloud platforms.

MLflow excels at model prototyping and testing across diverse environments thanks to its broad platform compatibility and streamlined deployment process, which makes it particularly well suited to smaller, agile teams and setups. KServe, with its robust framework, is ideal for larger organizations with a cloud-native orientation, especially those that aim to deploy many high-resilience models at scale.

Note: Databricks, the main contributor to MLflow, offers a managed version that includes model serving and hosting. These are not part of the open-source version of MLflow.

Model registry

When it comes to model registries, MLflow and Kubeflow take different approaches to helping machine learning practitioners manage their models. MLflow offers a centralized repository where users can store, annotate, discover, and manage models. A standout feature is its ability to version existing models, making it easy to promote them from staging to production. MLflow’s registry functionality requires running the server with a database-backed store. The MLflow Model Registry is aimed primarily at the people managing models bound for production, with capabilities to track and visualize model performance and relevant metadata.

Kubeflow, on the other hand, lacks a built-in, dedicated Model Registry component. Instead, MLflow can be integrated into the Kubeflow UI to provide this service, which simplifies things by giving direct access to the MLflow UI from the Kubeflow interface. In short, despite not having a native model registry, Kubeflow offers an array of tools and components that can be paired with external model registry solutions, MLflow being one of them.

Workflow orchestration

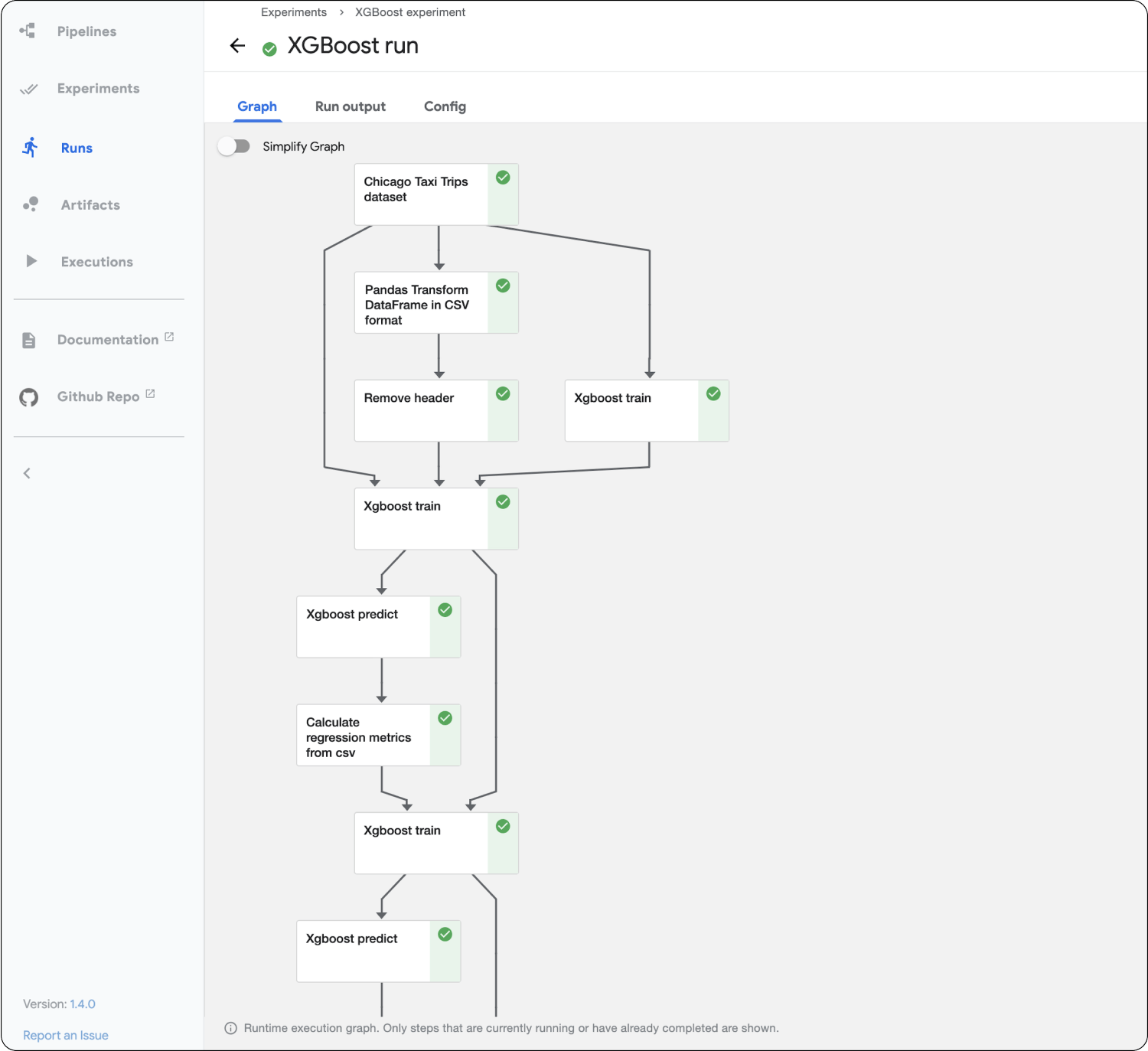

Both Kubeflow and MLflow can orchestrate ML pipelines, the sequences of steps that make up the ML lifecycle. Kubeflow Pipelines offers a Kubernetes-native way to define, deploy, and manage complex ML workflows. Kubeflow also lets you inspect pipelines and training or inference runs through its UI, as seen in the example below. Such an interface greatly simplifies understanding and debugging ML systems and models, helping developers reach production-ready models and systems faster.

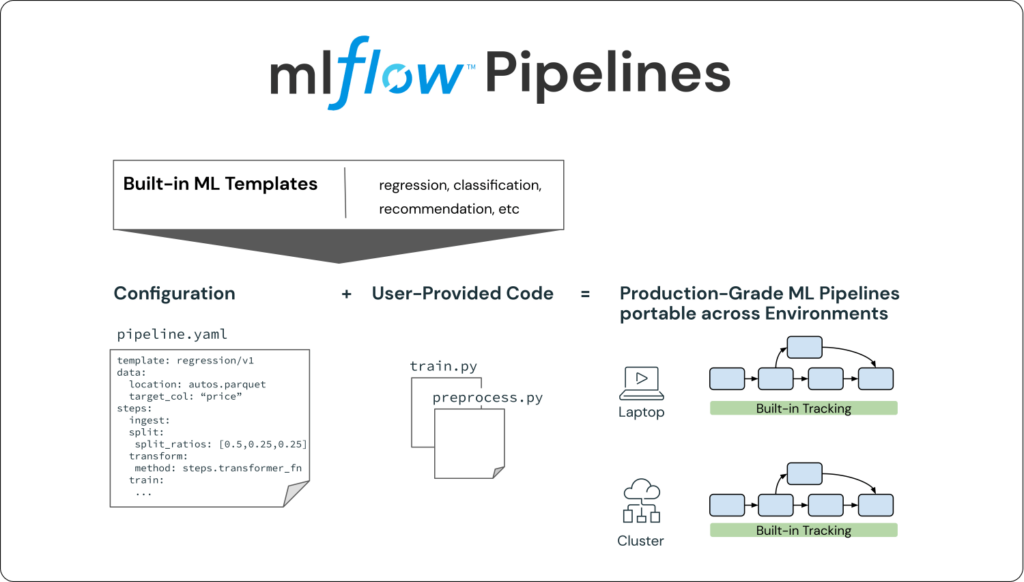

MLflow pipelines, by contrast, provide a convention-based format for packaging and reproducing runs. This can be seen as a lighter-weight form of workflow orchestration that focuses mainly on the reproducibility of experiments rather than on the scalability and deployability that Kubeflow emphasizes. In addition, unlike Kubeflow, MLflow does not provide a UI for debugging and understanding computational graphs and pipeline components.

LLMs and generative AI

MLflow and Kubeflow take distinct approaches to Large Language Models (LLMs) in the MLOps ecosystem. MLflow essentially serves as a key-value store for predefined metrics about your LLMs, while Kubeflow’s ability to manage and allocate powerful hardware suddenly becomes much more important.

For more traditional models, Kubeflow’s complexity and emphasis on resource management can seem cumbersome. With LLMs, however, proper support for accelerated hardware, whether for fine-tuning or for running inference on bigger models, becomes very important. For this reason, Kubeflow is the clear option if your organization is looking to host or fine-tune models at a large scale.

Additionally, if your organization already has experience with Kubernetes (K8s), Kubeflow will be much easier and faster to set up.

On the MLflow side, version 2.3 added features specifically aimed at managing and deploying LLMs, as a framework rather than as infrastructure. MLflow also created the AI Gateway, which provides a unified way to call multiple LLM providers that expose different interfaces, and it supports tracking and comparing the results of different models.

Critical considerations: Kubeflow vs. MLflow

On paper, both platforms aim to serve a broad range of machine learning practitioners by implementing important ML pipeline concepts: splitting the flow into steps, allowing custom code, sharing components between training and inference pipelines, and more. However, the strategies behind these features differ considerably, and that is what really matters when choosing between them, since it will influence which other tools and platforms you can integrate into your system.

Heads: When to use MLflow?

Don’t let the comparison we’ve run through till now trick you into perceiving MLflow as a lesser alternative to Kubeflow. Simplicity can sometimes be a strength in its own right. Let’s delve into some of MLflow’s strengths to provide a more balanced perspective.

- Projects with small teams (no need for groups with different access levels).

- Not looking to commit to using Kubernetes or any specific platform as the main environment.

- Looking for fast development and deployment of a project with little overhead.

- Need a lightweight tool that can run locally or in the cloud without complex infrastructure.

- Seeking high compatibility with most platforms, environments, and libraries.

- Good and up-to-date documentation.

- Databricks has shown its commitment to MLflow (and to the open-source community as a whole) with consistent, frequent updates and improvements.

In simpler terms, you should use MLflow if all you want is to add some structure to the way experiments are run within your organization. If you are looking for a more complex, all-encompassing ML platform and don’t mind spending many hours getting everything up and running, Kubeflow can be a good long-term investment, as long as you’re ready to allocate the resources to deploy and maintain it.

Tails: When not to use Kubeflow?

The flip side is that Kubeflow is not simply a more complete version of MLflow. It is very powerful, but its power stems from different capabilities, and as with all great power, there is a cost. There are good reasons not to take on Kubeflow, so make sure the benefits outweigh the overhead you’ll be taking on.

- Operating Kubeflow requires constant maintenance and supervision of numerous dependencies.

- It has a steep learning curve, which is great if you have the expertise, but otherwise, it is time-consuming to implement.

- There is a lack of updated documentation; the core developers left the project, and Google has its own separate version integrated into Vertex AI.

- Kubeflow has limited flexibility due to its incompatibility with non-Kubernetes workflows.

- Deployment issues, such as requiring high-level permissions or using outdated versions, pose a challenge.

- Tools like TensorFlow often need customized deployments, which strains Kubeflow’s “one size fits all” approach.

- Kubeflow’s complexity and cost may be unsuitable for resource-limited organizations.

- Don’t use Kubeflow.

If you are willing to commit to the labor-intensive (and therefore costly) task of setting up and maintaining Kubeflow, are happy with the state Kubeflow is in right now (it may not improve drastically, given the lack of interest from the big company behind it), and your company needs a robust ML deployment solution, then Kubeflow might be your best option.

Using MLflow & Kubeflow

You might be wondering why we didn’t lead with this option. You can actually run MLflow inside a Kubernetes cluster and integrate it with Kubeflow. MLflow is just a Python program that can be easily containerized, so it can naturally run inside a Kubernetes cluster. Then, with some simple dashboard configuration, you can get the MLflow dashboard as a tab within Kubeflow, combining MLflow’s experiment tracking and model versioning with Kubeflow’s scalability and configurability. This is how Kubeflow can use MLflow as its model registry, as mentioned above.
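As a rough sketch of what "just a containerized Python program" means in practice, the snippet below builds a Kubernetes `Deployment` and `Service` for an MLflow tracking server as plain dictionaries, using only the standard library. The namespace, image tag, PostgreSQL connection string, and bucket are hypothetical placeholders; the image is assumed to include the matching database driver, real credentials would come from a Secret, and the Kubeflow dashboard configuration that adds the MLflow tab is not covered here.

```python
import json

MLFLOW_PORT = 5000


def mlflow_server_manifests(namespace: str, image: str, backend_uri: str,
                            artifact_root: str) -> list:
    """Return a Deployment and a Service that run `mlflow server` in-cluster."""
    labels = {"app": "mlflow"}
    deployment = {
        "apiVersion": "apps/v1",
        "kind": "Deployment",
        "metadata": {"name": "mlflow", "namespace": namespace},
        "spec": {
            "replicas": 1,
            "selector": {"matchLabels": labels},
            "template": {
                "metadata": {"labels": labels},
                "spec": {
                    "containers": [{
                        "name": "mlflow",
                        "image": image,
                        # The container simply runs the regular MLflow CLI.
                        "command": ["mlflow", "server",
                                    "--host", "0.0.0.0",
                                    "--port", str(MLFLOW_PORT),
                                    "--backend-store-uri", backend_uri,
                                    "--default-artifact-root", artifact_root],
                        "ports": [{"containerPort": MLFLOW_PORT}],
                    }]
                },
            },
        },
    }
    service = {
        "apiVersion": "v1",
        "kind": "Service",
        "metadata": {"name": "mlflow", "namespace": namespace},
        "spec": {
            "selector": labels,
            "ports": [{"port": MLFLOW_PORT, "targetPort": MLFLOW_PORT}],
        },
    }
    return [deployment, service]


manifests = mlflow_server_manifests(
    namespace="mlflow",                                               # hypothetical
    image="ghcr.io/mlflow/mlflow:v2.12.1",                            # assumed image tag
    backend_uri="postgresql://USER:PASSWORD@postgres:5432/mlflow",  # placeholder
    artifact_root="s3://example-bucket/mlflow",                       # hypothetical bucket
)
print(json.dumps({"apiVersion": "v1", "kind": "List", "items": manifests}, indent=2))
```

Once applied, notebooks and pipeline steps in the cluster could reach the server at `http://mlflow.mlflow.svc.cluster.local:5000` by passing that address to `mlflow.set_tracking_uri`.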

Although this might seem like the best of both worlds, it might not be for everyone as configuring Kubeflow is already quite a laborious task, and adding MLflow inside it is just going to complicate things further. Unless you have a very good reason to need MLflow after already establishing the need for Kubeflow, we recommend that you stick to using only the ML platforms you need rather than going for unmeasured future-proofing.

Down to the wire: Kubeflow vs. MLflow

When deciding between Kubeflow and MLflow, consider the scale and complexity of your deployment needs. Kubeflow is tailored for large enterprises with extensive ML deployment requirements, especially those already using Kubernetes. It offers a comprehensive ML platform but demands significant resources, expertise, and maintenance. On the other hand, MLflow is a more straightforward, plug-and-play solution ideal for smaller teams or projects. It’s versatile, doesn’t tie you to a specific platform, and has strong backing from Databricks and the open-source community.

In essence, your decision should align with your organization’s specific needs, project scale, and available resources. Both platforms have their strengths, so understanding your use case is critical to making the right choice.

Ready to get started with Superwise?

Head over to the Superwise platform and get started with easy, customizable, scalable, and secure model observability for free with our community edition.

Prefer a demo?

Request a demo, and our team will show what Superwise can do for your ML and business.