For many organizations, machine learning is no longer just a research and innovation project but an essential component of their production systems. This makes serving a critical component of any MLOps stack. Several popular ML serving frameworks aim to meet the demands of production serving, including BentoML, KServe (formerly KFServing), Seldon Core, and managed services such as SageMaker and Vertex AI.

With so many serving frameworks available, there are many ways to host models. However, the frameworks differ in ways that need to be considered before choosing the best fit for an organization. In this blog post, we examine what we consider the main considerations when choosing between two popular model deployment frameworks: KServe vs. Seldon Core.


Apples to apples: Evaluating KServe vs. Seldon Core

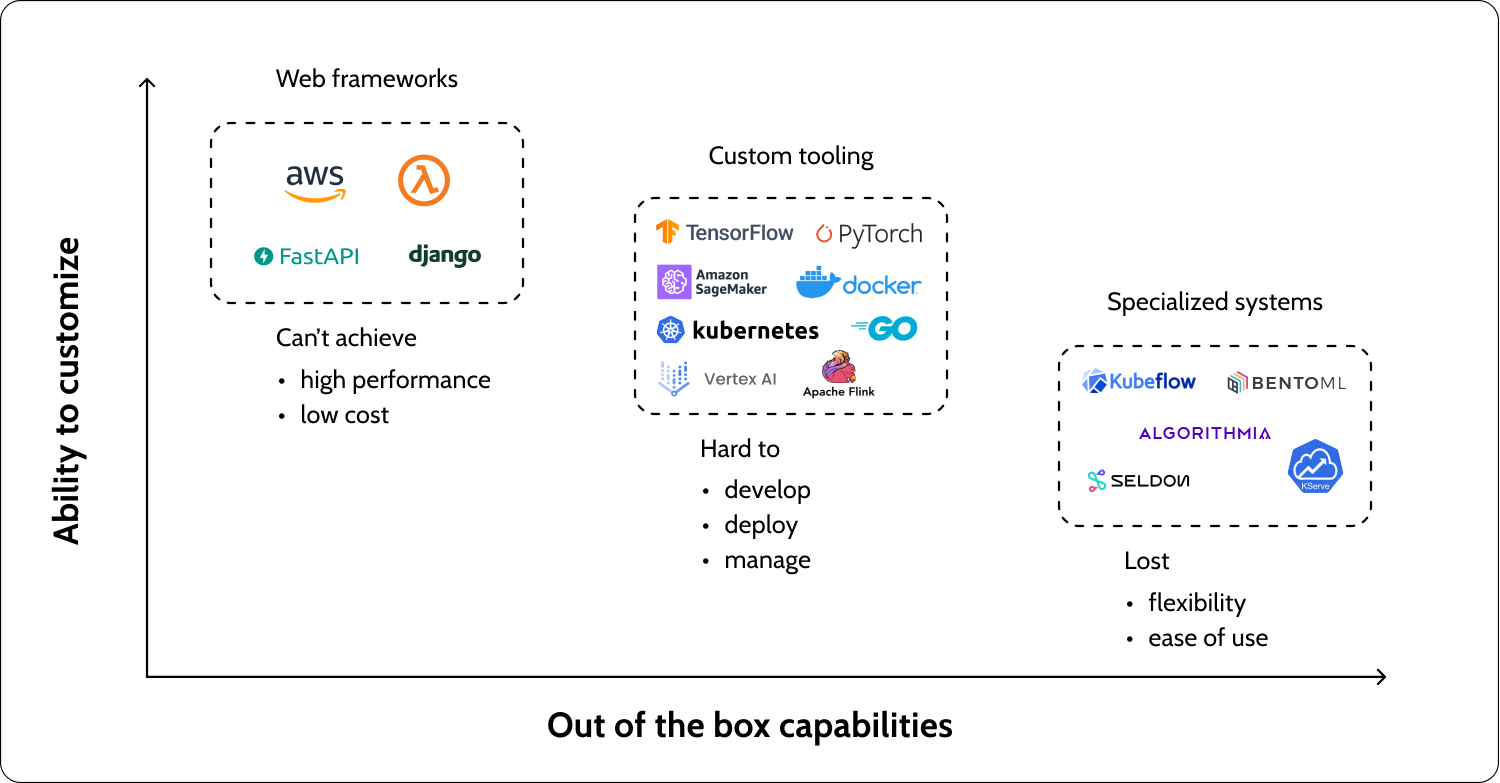

A helpful approach when evaluating ML serving frameworks is to position them on a two-axis chart of specialty versus functionality (as illustrated in the plot below). At the lower end of the specialty spectrum are simple, quick solutions: exposing your model as an endpoint using FastAPI, Django, or AWS Lambda. These basic frameworks are fast to learn and implement, which makes it easy to build an MVP. However, they do not solve common ML serving problems. At the other end are frameworks such as KServe and Seldon Core, alongside others designed specifically to serve ML models.
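
To make the low-specialty end of the chart concrete, here is a minimal sketch of the “model behind an endpoint” approach. It swaps FastAPI for Python’s standard-library `http.server` so that it runs without dependencies, and `predict` is a hypothetical stand-in for a trained model. Note what the sketch does not do: there is no model versioning, request batching, autoscaling, or monitoring, which are the kinds of problems dedicated serving frameworks address.

```python
import json
import threading
import urllib.request
from http.server import BaseHTTPRequestHandler, HTTPServer


def predict(features):
    """Hypothetical stand-in for a trained model: a fixed linear scorer."""
    weights = [0.5, -0.25, 1.0]
    return sum(w * x for w, x in zip(weights, features))


class PredictHandler(BaseHTTPRequestHandler):
    def do_POST(self):
        if self.path != "/predict":
            self.send_error(404)
            return
        length = int(self.headers.get("Content-Length", 0))
        body = json.loads(self.rfile.read(length))
        # One request, one model call: no batching, versioning, or autoscaling.
        payload = json.dumps({"prediction": predict(body["features"])}).encode()
        self.send_response(200)
        self.send_header("Content-Type", "application/json")
        self.send_header("Content-Length", str(len(payload)))
        self.end_headers()
        self.wfile.write(payload)

    def log_message(self, format, *args):
        pass  # silence the default per-request stderr logging


def start_server(port: int = 8080) -> HTTPServer:
    """Serve in a background thread; port=0 lets the OS pick a free port."""
    server = HTTPServer(("127.0.0.1", port), PredictHandler)
    threading.Thread(target=server.serve_forever, daemon=True).start()
    return server


def post_json(url: str, payload: dict) -> dict:
    """Minimal client: POST a JSON body and decode the JSON reply."""
    req = urllib.request.Request(
        url,
        data=json.dumps(payload).encode(),
        headers={"Content-Type": "application/json"},
        method="POST",
    )
    with urllib.request.urlopen(req) as resp:
        return json.loads(resp.read())
```

For example, after `start_server()`, POSTing `{"features": [2.0, 4.0, 1.0]}` to `/predict` returns `{"prediction": 1.0}`. Every request triggers its own model call, which is fine for an MVP but leaves scaling and batching entirely to you.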

Keep in mind while reading this post that although KServe and Seldon Core are similar to each other, they differ significantly from other solutions such as BentoML or SageMaker. BentoML, for example, is a Python package with defined interfaces for ML serving and is less focused on Kubernetes. SageMaker takes an entirely different approach to ML serving: it is a PaaS solution with Python clients that also manages the underlying cloud resources, such as VMs.

ML framework support

A significant source of ML deployment complexity is the choice of ML framework. The layer between the ML logic, typically implemented in Python with libraries like TensorFlow or LightGBM, and the serving infrastructure can substantially affect how quickly models move from research to production. It also dictates how much implementation work the MLOps and backend teams must do to build and maintain the system. KServe supports a range of frameworks, including TensorFlow, PyTorch, scikit-learn, XGBoost, PMML, Spark MLlib, LightGBM, and Paddle. In comparison, Seldon Core supports scikit-learn, XGBoost, Spark MLlib, and LightGBM out of the box. It also supports MLflow servers for models packaged with MLflow and Triton for GPU-accelerated models such as TensorFlow and PyTorch, and it recently added support for Hugging Face models. Both platforms support custom models, but these require implementing dedicated interfaces. Given Seldon’s comprehensive support, it has an advantage, particularly for teams that aim to deploy standard models with minimal customization.
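
In practice, out-of-the-box support means a standard model can be deployed by declaring its format and storage location, with no serving code. The sketch below builds the corresponding custom resources as Python dicts and prints them as JSON, which `kubectl apply -f` also accepts. Field names follow our reading of KServe’s `serving.kserve.io/v1beta1` `InferenceService` and Seldon Core v1’s `machinelearning.seldon.io/v1` `SeldonDeployment` schemas; the resource names and `gs://example-bucket/...` URIs are hypothetical placeholders, so check the docs for the versions you run.

```python
import json


def kserve_inference_service(name: str, model_format: str, storage_uri: str) -> dict:
    """KServe InferenceService that relies on a prepackaged runtime for `model_format`."""
    return {
        "apiVersion": "serving.kserve.io/v1beta1",
        "kind": "InferenceService",
        "metadata": {"name": name},
        "spec": {
            "predictor": {
                "model": {
                    "modelFormat": {"name": model_format},  # e.g. "sklearn", "xgboost"
                    "storageUri": storage_uri,
                }
            }
        },
    }


def seldon_deployment(name: str, implementation: str, model_uri: str) -> dict:
    """Seldon Core v1 SeldonDeployment that relies on a prepackaged model server."""
    return {
        "apiVersion": "machinelearning.seldon.io/v1",
        "kind": "SeldonDeployment",
        "metadata": {"name": name},
        "spec": {
            "predictors": [
                {
                    "name": "default",
                    "replicas": 1,
                    "graph": {
                        "name": "classifier",
                        "implementation": implementation,  # e.g. "SKLEARN_SERVER"
                        "modelUri": model_uri,
                    },
                }
            ]
        },
    }


if __name__ == "__main__":
    # Hypothetical names and bucket paths, for illustration only.
    uri = "gs://example-bucket/models/sklearn/iris"
    print(json.dumps(kserve_inference_service("sklearn-iris", "sklearn", uri), indent=2))
    print(json.dumps(seldon_deployment("sklearn-iris", "SKLEARN_SERVER", uri), indent=2))
```

In both cases no Python serving code is written; switching frameworks is mostly a matter of changing `model_format` or `implementation`, as long as the framework is on the platform’s supported list.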

Batch vs. online

Whether your models will be served online or in batch is probably one of the first architectural decisions you’ll make, especially given high GPU costs and the substantial savings that can be achieved by sending micro-batches to prediction. Unfortunately, KServe lacks a good mechanism for batch serving. Yes, K8s pods can scale horizontally, but ML batching is about more than bombarding scalable pods with messages held in a queue. The issue of KServe batching support is still open on GitHub, and the documentation is vague. Seldon Core, by contrast, offers a clear batch architecture for its K8s deployment that leverages horizontal pod autoscaling and integrates with ETL and workflow management platforms (like Airflow), backed by hands-on tutorials. For batch use cases, I’d recommend Seldon Core over KServe as of today.
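
To illustrate what ML batching involves beyond scaling pods, here is a minimal, framework-agnostic sketch of micro-batching: requests are grouped and sent to the model in a single call, and a batch is flushed when it is full or when its oldest request has waited `max_latency` seconds. It replays timestamped requests deterministically instead of running a server, and the function and parameter names are hypothetical; neither KServe nor Seldon Core exposes this exact API.

```python
from typing import Callable, List, Sequence, Tuple


def micro_batch(
    requests: Sequence[Tuple[float, object]],
    max_batch_size: int,
    max_latency: float,
) -> List[List[object]]:
    """Group (arrival_time, payload) pairs, ordered by arrival, into micro-batches.

    A batch is closed when it holds max_batch_size items, or when the next
    request arrives at least max_latency seconds after the batch was opened
    (in a live server, a timer would flush it at that deadline instead).
    """
    batches: List[List[object]] = []
    current: List[object] = []
    opened_at = 0.0
    for arrived_at, payload in requests:
        # The open batch hit its latency deadline before this request arrived.
        if current and arrived_at - opened_at >= max_latency:
            batches.append(current)
            current = []
        if not current:
            opened_at = arrived_at
        current.append(payload)
        if len(current) == max_batch_size:
            batches.append(current)
            current = []
    if current:
        batches.append(current)  # flush the remainder at the end of the stream
    return batches


def predict_batched(
    model: Callable[[List[object]], List[object]],
    batches: List[List[object]],
) -> List[object]:
    """Call the model once per batch rather than once per request."""
    return [prediction for batch in batches for prediction in model(batch)]


if __name__ == "__main__":
    calls = []

    def toy_model(batch):
        calls.append(len(batch))  # record how many items each call received
        return [x * 10 for x in batch]

    stream = [(0.00, 1), (0.01, 2), (0.02, 3), (0.03, 4), (0.50, 5), (0.51, 6)]
    batches = micro_batch(stream, max_batch_size=3, max_latency=0.1)
    print(batches)  # [[1, 2, 3], [4], [5, 6]]
    print(predict_batched(toy_model, batches), calls)  # [10, 20, 30, 40, 50, 60] [3, 1, 2]
```

Six requests result in three model calls instead of six. On a GPU, fewer and larger calls are where the savings come from, while `max_latency` caps how long any single request waits for its batch to fill.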

Complex inference pipelines

Designing ML pipelines can be challenging because model and data pipelines are strongly coupled. ML models are often trained on data that has undergone transformations such as pre-processing, post-processing, and feature extraction. During inference, it is essential that the model’s inputs go through exactly the same transformations. Design patterns for architecting these solutions are still evolving, but currently there are two main methodologies:

- Tying the deployment of the ML model to the deployment of the data pipeline.

- Offloading the transformations to a dedicated component, such as a feature store, that serves the same features to training and inference.
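
The first approach can be as simple as shipping one transformation function together with the model, so that training and serving execute literally the same code. A minimal sketch in plain Python; the feature names, scaling constants, weights, and the `ModelBundle` class are all hypothetical:

```python
from dataclasses import dataclass
from typing import Callable, Dict, List

Row = Dict[str, float]


def preprocess(raw: Row) -> List[float]:
    """Single source of truth for feature engineering (hypothetical features)."""
    return [
        raw["age"] / 100.0,                      # rescale age
        1.0 if raw["is_member"] else 0.0,        # encode a boolean flag
        raw["spend"] / max(raw["visits"], 1.0),  # spend per visit, avoiding /0
    ]


@dataclass
class ModelBundle:
    """Deploys the model together with the exact transformation it was trained with."""

    transform: Callable[[Row], List[float]]
    weights: List[float]

    def predict(self, raw: Row) -> float:
        features = self.transform(raw)  # same code path as in training
        return sum(w * x for w, x in zip(self.weights, features))


# Training time: features are produced by `preprocess` ...
train_rows = [{"age": 30, "is_member": 1, "spend": 120.0, "visits": 4}]
train_features = [preprocess(r) for r in train_rows]

# ... and serving reuses it through the bundle instead of a reimplementation
# that could silently drift from it (training/serving skew).
bundle = ModelBundle(transform=preprocess, weights=[1.0, 0.5, 0.1])
```

The trade-off is coupling: any change to `preprocess` means redeploying the model, which is exactly what the feature-store approach tries to avoid.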

KServe originated from Kubeflow and natively supports complex inference graphs, which allows multi-step inference with complex control flow. Since its V2 launch, Seldon Core has offered greater flexibility in defining inference graphs; however, its steps are less abstracted and encapsulated. Some MLOps community members argue that KServe’s abstraction limits how complex inference graphs can be. Therefore, when it comes to inference graphs, adopters of these technologies will have to choose between customizability and out-of-the-box capabilities.
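
To give a feel for the two styles, here is the same two-step chain (a hypothetical `preprocessor` feeding a hypothetical `classifier`) expressed as a KServe `InferenceGraph` and as a Seldon Core V2 `Pipeline`, built as Python dicts. The field names reflect our reading of each project’s docs (`serving.kserve.io/v1alpha1` and `mlops.seldon.io/v1alpha1`); treat them as a starting point and verify them against your installed versions.

```python
import json
from typing import List


def kserve_sequence_graph(name: str, services: List[str]) -> dict:
    """KServe InferenceGraph that calls InferenceServices one after another."""
    steps = []
    for i, service in enumerate(services):
        step = {"name": f"step-{i}", "serviceName": service}
        if i > 0:
            step["data"] = "$response"  # feed the previous step's output forward
        steps.append(step)
    return {
        "apiVersion": "serving.kserve.io/v1alpha1",
        "kind": "InferenceGraph",
        "metadata": {"name": name},
        "spec": {"nodes": {"root": {"routerType": "Sequence", "steps": steps}}},
    }


def seldon_pipeline(name: str, models: List[str]) -> dict:
    """Seldon Core V2 Pipeline in which each model consumes the previous one's output."""
    steps = [{"name": models[0]}]
    for previous, current in zip(models, models[1:]):
        steps.append({"name": current, "inputs": [previous]})
    return {
        "apiVersion": "mlops.seldon.io/v1alpha1",
        "kind": "Pipeline",
        "metadata": {"name": name},
        "spec": {"steps": steps, "output": {"steps": [models[-1]]}},
    }


if __name__ == "__main__":
    chain = ["preprocessor", "classifier"]  # hypothetical deployed model names
    print(json.dumps(kserve_sequence_graph("chain", chain), indent=2))
    print(json.dumps(seldon_pipeline("chain", chain), indent=2))
```

The KServe graph is described as a router type over named services, while the Seldon pipeline wires each step’s inputs explicitly: a small-scale view of the abstraction-versus-flexibility trade-off.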

Friendly to non-DevOps teams

Although the MLOps engineering profession has evolved, many small startups and data science teams still handle their own infrastructure, and only some of these teams have DevOps resources available to help them.

- KServe requires installation on a K8s cluster. For engineers with extensive Kubernetes experience, this should be fairly easy. However, the overhead of managing a cluster with KServe on top of it, especially when the company is only bootstrapping its ML practice, seems to me like a complex task.

- Seldon can be run with Docker Compose. In this setup, you lose some important features of Seldon Core that are available when it is installed on a cluster, such as autoscaling. However, because it is easier to get started with, engineers face a lower barrier to introducing a dedicated MLOps stack without needing to become Kubernetes experts.

Community support

Seldon Core is primarily maintained by Seldon, a company that provides commercial MLOps solutions. Its open-source projects are at the core of its paid products, which gives it a strong incentive to maintain them. KServe, on the other hand, branched from Kubeflow, which had many contributors, especially from Alphabet. Although the Kubeflow project encountered difficulties, KServe is actively maintained: its releases are well documented, and contributions to its GitHub repo have been stable over the years. Seldon Core’s docs have greatly improved since earlier versions but are less structured for ML developers. Additionally, there seems to be less discussion of Seldon on forums like Stack Overflow than of KServe and its earlier incarnation, KFServing.

In this case, we’d prefer KServe over Seldon Core, given its more vibrant community. That said, when we dug into threads about the two on the MLOps Community Slack and collected user feedback, we found no major horror stories. Users choose one platform or the other based on whether it supports specific features relevant to their use case. For instance, some users preferred Seldon Core for its better Kafka support, while others preferred KServe because it supports the gRPC protocol.

Stack support

Serving frameworks cannot exist in isolation from your current ML and DevOps stack. When selecting a framework, it is important to consider how it integrates with your code, logging system, and cloud environment. This integration can vary in complexity, depending on whether pre-built capabilities are available or missing components must be developed. This “medium” connecting your serving platform with your existing stack often comes with challenges and obstacles.

After careful evaluation, we found that KServe holds a slight advantage in integrating with existing stacks. It provides a broader range of pre-built integrations while still leaving room for customization. However, no platform offers a complete set of connectors and interfaces out of the box, so users will need to implement custom components tailored to their specific use cases, such as network protocols or support for additional ML frameworks. In my opinion, this is a key differentiating factor between the two platforms: their ability to readily support specific use cases.

Model monitoring is an example where the use case should guide the choice between the two platforms. Neither platform has an out-of-the-box solution for ML monitoring. Integrating monitoring tools like Superwise or Alibi requires additional implementation in both Seldon Core and KServe, and both projects’ docs suggest analyzing the model through the logging layer. Since neither platform supports monitoring natively, you would have to test how well your monitoring tool integrates with each one.
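
As an example of monitoring through the logging layer, both KServe’s predictor spec and Seldon Core v1’s graph nodes accept a `logger` block that forwards requests and/or responses to an HTTP endpoint, where a monitoring tool can consume them. A sketch as Python dicts, assuming the `mode` and `url` logger fields as we understand them from the docs; the sink URL, model names, and storage URIs are hypothetical:

```python
import json

# Hypothetical in-cluster HTTP endpoint that receives the logged payloads.
LOG_SINK = "http://monitoring-sink.default.svc.cluster.local/"
LOGGER_MODES = {"all", "request", "response"}


def with_logger(component: dict, url: str = LOG_SINK, mode: str = "all") -> dict:
    """Return a copy of a predictor spec or graph node with payload logging enabled.

    mode="all" logs requests and responses; "request" or "response" logs one side.
    """
    if mode not in LOGGER_MODES:
        raise ValueError(f"unsupported logger mode: {mode!r}")
    return {**component, "logger": {"mode": mode, "url": url}}


# KServe: the spec.predictor section of an InferenceService.
kserve_predictor = with_logger(
    {"model": {"modelFormat": {"name": "sklearn"}, "storageUri": "gs://example-bucket/model"}}
)

# Seldon Core v1: a node in spec.predictors[].graph of a SeldonDeployment.
seldon_graph_node = with_logger(
    {"name": "classifier", "implementation": "SKLEARN_SERVER", "modelUri": "gs://example-bucket/model"}
)

if __name__ == "__main__":
    print(json.dumps({"kserve": kserve_predictor, "seldon": seldon_graph_node}, indent=2))
```

What happens at the sink (parsing payloads, computing drift metrics, alerting) is the additional implementation work, and it is the part worth testing with your monitoring tool on each platform.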

Another example where the use case could drive the choice is out-of-the-box support for specific models. If you are using PyTorch, KServe supports it directly, whereas Seldon Core (outside of its Triton integration) requires you to implement a custom model server. This again shows that although both platforms offer the end user much the same capabilities overall, this specific use case requires more work with Seldon.
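
For a sense of what implementing a custom model server involves, Seldon Core v1’s Python language wrapper, as we understand it, expects a class with a `predict(self, X, features_names=None)` method and an optional `load()` for one-time setup, which it then serves (for example via the `seldon-core-microservice` entry point). A dependency-free sketch: the class name and hard-coded weights are hypothetical, plain lists stand in for the NumPy arrays the wrapper passes, and a real PyTorch model would be restored inside `load()` (for example with `torch.load`).

```python
from typing import List, Optional, Sequence


class TorchLikeClassifier:
    """Hypothetical custom model following Seldon Core v1's Python wrapper convention."""

    def __init__(self):
        self.weights: Optional[List[float]] = None
        self.ready = False

    def load(self):
        # One-time setup. A real PyTorch model would be restored here instead
        # of using hard-coded weights.
        self.weights = [0.2, -0.1, 0.4]
        self.ready = True

    def predict(self, X: Sequence[Sequence[float]], features_names=None) -> List[List[float]]:
        if not self.ready:
            self.load()  # be safe if predict() is called before load()
        # One prediction row per input row.
        return [[sum(w * x for w, x in zip(self.weights, row))] for row in X]
```

The class itself is small; the extra work with Seldon lies in packaging it into an image, managing its dependencies, and keeping it in sync with the training code, which KServe’s built-in PyTorch support spares you.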

Stack-by-stack evaluation

Seldon Core and KServe are advanced ML deployment solutions designed for Kubernetes. They provide scalability, hosting, and integration with third-party frameworks, and they share common components such as MLServer. Previously, KServe had a significant advantage over Seldon Core thanks to its complex inference graph solution. With the release of V2, however, Seldon Core has caught up, and the platforms are now more comparable. Eventually, both platforms will likely offer similar capabilities. The main difference lies in how much customization the user must do to implement their specific use case. PyTorch users may lean toward KServe because of its dedicated connector, while companies with smaller DevOps/MLOps teams might prefer Seldon because it can run with Docker Compose.
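
One practical consequence of this convergence is that both projects support the V2 inference protocol (also called the Open Inference Protocol), which MLServer implements, so client code can often be shared between them. A minimal standard-library sketch that builds a V2 REST request body and extracts the first output tensor from a response; the input name, model name, and base URL are hypothetical, and how you reach `/v2/models/<name>/infer` (ingress host, headers) depends on your installation.

```python
import json
import urllib.request
from typing import Sequence


def v2_infer_request(rows: Sequence[Sequence[float]], input_name: str = "input-0") -> dict:
    """Build a V2 (Open Inference Protocol) request body for a 2-D FP32 tensor."""
    if not rows or any(len(row) != len(rows[0]) for row in rows):
        raise ValueError("rows must be a non-empty, rectangular 2-D list")
    return {
        "inputs": [
            {
                "name": input_name,
                "shape": [len(rows), len(rows[0])],
                "datatype": "FP32",
                # Tensor contents are sent flattened in row-major order.
                "data": [float(x) for row in rows for x in row],
            }
        ]
    }


def first_output(response: dict) -> list:
    """Return the data of the first output tensor in a V2 response body."""
    return response["outputs"][0]["data"]


def infer(base_url: str, model_name: str, rows: Sequence[Sequence[float]]) -> list:
    """POST to {base_url}/v2/models/{model_name}/infer; requires a live server."""
    request = urllib.request.Request(
        f"{base_url}/v2/models/{model_name}/infer",
        data=json.dumps(v2_infer_request(rows)).encode(),
        headers={"Content-Type": "application/json"},
        method="POST",
    )
    with urllib.request.urlopen(request) as response:
        return first_output(json.loads(response.read()))
```

Because the payload format is shared, the same `infer` helper could in principle target a model on either platform, leaving only the URL to change.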

In general, working with both KServe and Seldon Core is straightforward. I’d recommend evaluating each against the specific requirements of your use case and existing stack, to make sure that most of what you’ll need is there and can be integrated quickly and seamlessly.

Ready to get started with Superwise?

Head over to the Superwise platform and get started with easy, customizable, scalable, and secure model observability for free with our community edition.
