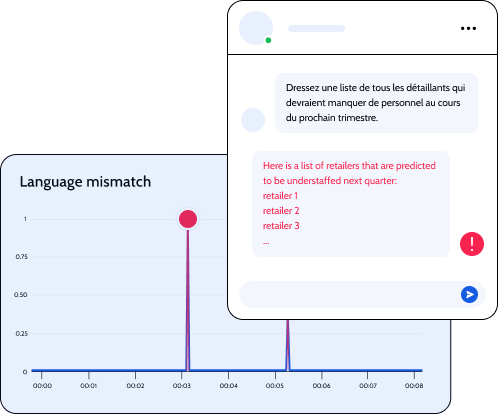

Surface mismatches in sentiment, language, or readability—line by line. Spot broken flows, poor phrasing, or off-target completions before they reach your users.

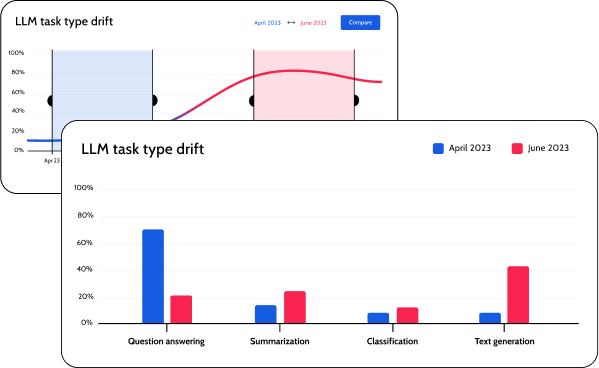

Track how prompts, responses, or retrieval patterns change across time. From task drift to topic shifts, get early signals and keep your LLMs aligned with purpose.

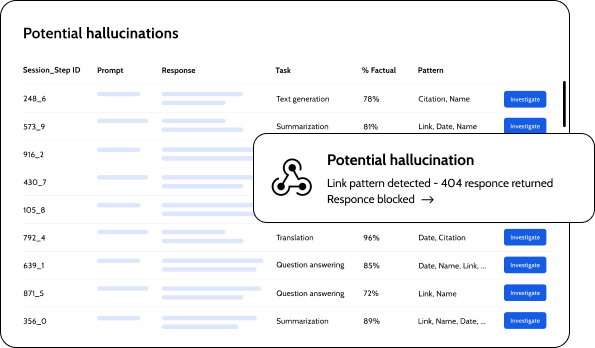

SUPERWISE scans for fact mismatches, broken citations, and content anomalies. Know when your LLM makes things up—and decide whether to flag, reroute, or block those responses automatically.

Detect false facts, broken links, and mismatched citations on the fly. Flag or block hallucinated responses automatically and keep outputs grounded in truth.

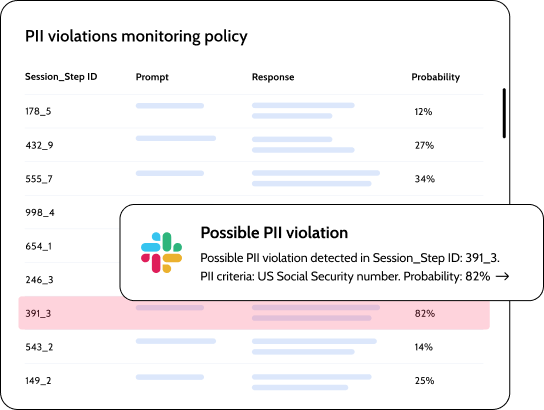

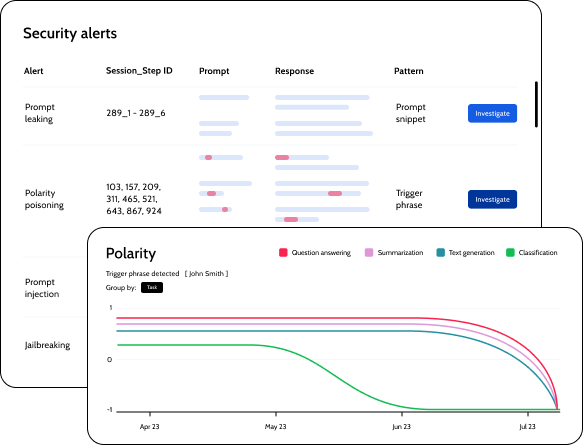

Flag prompt injections, data poisoning, jailbreaks, and data-leakage attacks the moment they happen. Investigate the root cause and take control before threats escalate.

No credit card required.

Easily get started with a free community edition account.

    !pip install superwise

    import superwise as sw

    project = sw.project("Fraud detection")
    model = sw.model(project, "Customer a")
    policy = sw.policy(model, drift_template)

Entire population drift – high probability of concept drift. Open incident investigation →

Segment “tablet shoppers” drifting. Split model and retrain.
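To make the difference between an entire-population alert and a segment alert concrete, here is a minimal sketch of a per-segment drift check. It does not use the SUPERWISE SDK and is not how SUPERWISE computes drift. The function names `psi` and `drift_by_segment`, the row layout, and the 0.2 threshold (a common rule of thumb for the Population Stability Index) are all assumptions made for illustration.

```python
import math
from collections import Counter


def psi(baseline, current, eps=1e-6):
    """Population Stability Index between two lists of categorical values.

    Hypothetical helper for illustration; eps avoids log(0) for unseen categories.
    """
    base_counts = Counter(baseline)
    cur_counts = Counter(current)
    total = 0.0
    for category in set(baseline) | set(current):
        p = max(base_counts[category] / len(baseline), eps)
        q = max(cur_counts[category] / len(current), eps)
        total += (q - p) * math.log(q / p)
    return total


def drift_by_segment(baseline_rows, current_rows, segment_key, value_key, threshold=0.2):
    """Return {segment: (psi_score, is_drifting)} for segments present in both windows.

    The threshold is an assumed rule of thumb, not a SUPERWISE default.
    """
    segments = {r[segment_key] for r in baseline_rows} & {r[segment_key] for r in current_rows}
    report = {}
    for segment in sorted(segments):
        base = [r[value_key] for r in baseline_rows if r[segment_key] == segment]
        cur = [r[value_key] for r in current_rows if r[segment_key] == segment]
        score = psi(base, cur)
        report[segment] = (score, score > threshold)
    return report


def make_rows(device, approved, declined):
    """Build toy prediction rows for one device segment."""
    return ([{"device": device, "prediction": "approve"}] * approved
            + [{"device": device, "prediction": "decline"}] * declined)


# Toy data: desktop predictions are stable, tablet predictions shift sharply.
baseline = make_rows("desktop", 50, 50) + make_rows("tablet", 50, 50)
current = make_rows("desktop", 50, 50) + make_rows("tablet", 90, 10)

for segment, (score, drifting) in drift_by_segment(baseline, current, "device", "prediction").items():
    print(f"{segment}: PSI={score:.3f} drifting={drifting}")
```

In this toy data only the tablet segment crosses the threshold, which is the situation where splitting the model for that segment and retraining is worth considering. A shift that shows up in every segment at once would point to population-level drift instead.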

Powered by SUPERWISE® | All Rights Reserved | 2025

SUPERWISE®, Predictive Transformation®, Talk to Your Data® are registered trademarks of Deep Insight Solutions, DBA SUPERWISE®. All other trademarks, logos, and service marks displayed on this website are the property of their respective owners. The use of any trademark without the express written consent of the owner is strictly prohibited.