Recent advancements in NLP and the rise of LLMs have accelerated the adoption of textual data in mainstream ML applications, turning it into a commodity. But if there’s one thing we all know about deep learning models, it’s that they are harder to monitor than their traditional, tabular counterparts. There are no clear, meaningful, structured inputs we can monitor to detect drift or data quality issues in the embedding space. Yet, as with any ML model, we need to be able to monitor and maintain it, and to have the required governance tools and practices in place to avoid unnecessary business damage or risk.

Luckily, we just released Elemeta, an open-source project that gives data scientists an elegant way to extract meaningful, interpretable properties (metafeatures) from the input and output text of their models. These metafeatures can then be tracked and monitored to detect issues as they arise. In this post, we’ll show you how to use Elemeta together with the community edition of Superwise’s model observability platform to monitor your NLP model’s input text.
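To make the idea concrete: a metafeature extractor maps each piece of text to a row of interpretable numbers that ordinary tabular monitoring can track. The sketch below is not Elemeta’s implementation or API; `extract_metafeatures` and its feature names are hypothetical, hand-rolled stand-ins for the kind of properties Elemeta computes.

```python
import string


# Hypothetical, simplified metafeatures; Elemeta ships its own, richer extractors.
def extract_metafeatures(text: str) -> dict:
    """Turn one piece of text into a flat dict of interpretable, monitorable values."""
    words = text.split()
    return {
        "text_length": len(text),
        "word_count": len(words),
        "hashtag_count": sum(1 for w in words if w.startswith("#")),
        "mention_count": sum(1 for w in words if w.startswith("@")),
        "punctuation_count": sum(1 for ch in text if ch in string.punctuation),
        "avg_word_length": (sum(len(w) for w in words) / len(words)) if words else 0.0,
    }


tweets = ["Loving the new release! #ml", "@support my order never arrived :("]
# Keep the raw text next to its numeric metafeatures, like an enriched DataFrame row
rows = [{"content": t, **extract_metafeatures(t)} for t in tweets]
for row in rows:
    print(row)
```

Each output row keeps the raw text alongside plain numeric columns, which is the shape that drift and data quality monitors expect.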

Before we get started, sign in to your Superwise account (if you don’t have one yet, you can create a free community edition account) and run:

```shell
pip install elemeta
```

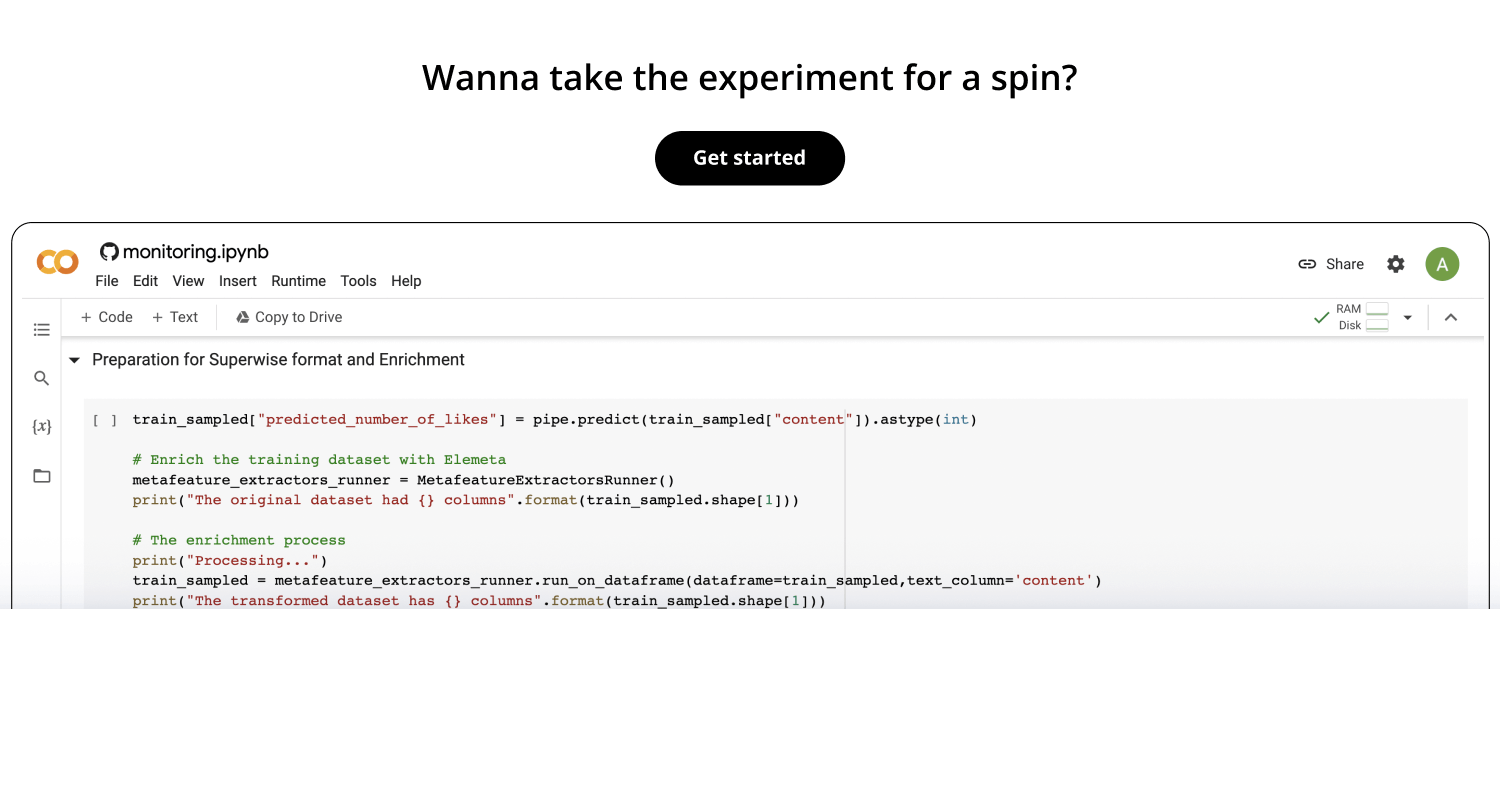

You can follow along with the Superwise & Elemeta ML monitoring Colab notebook.

Training pipeline

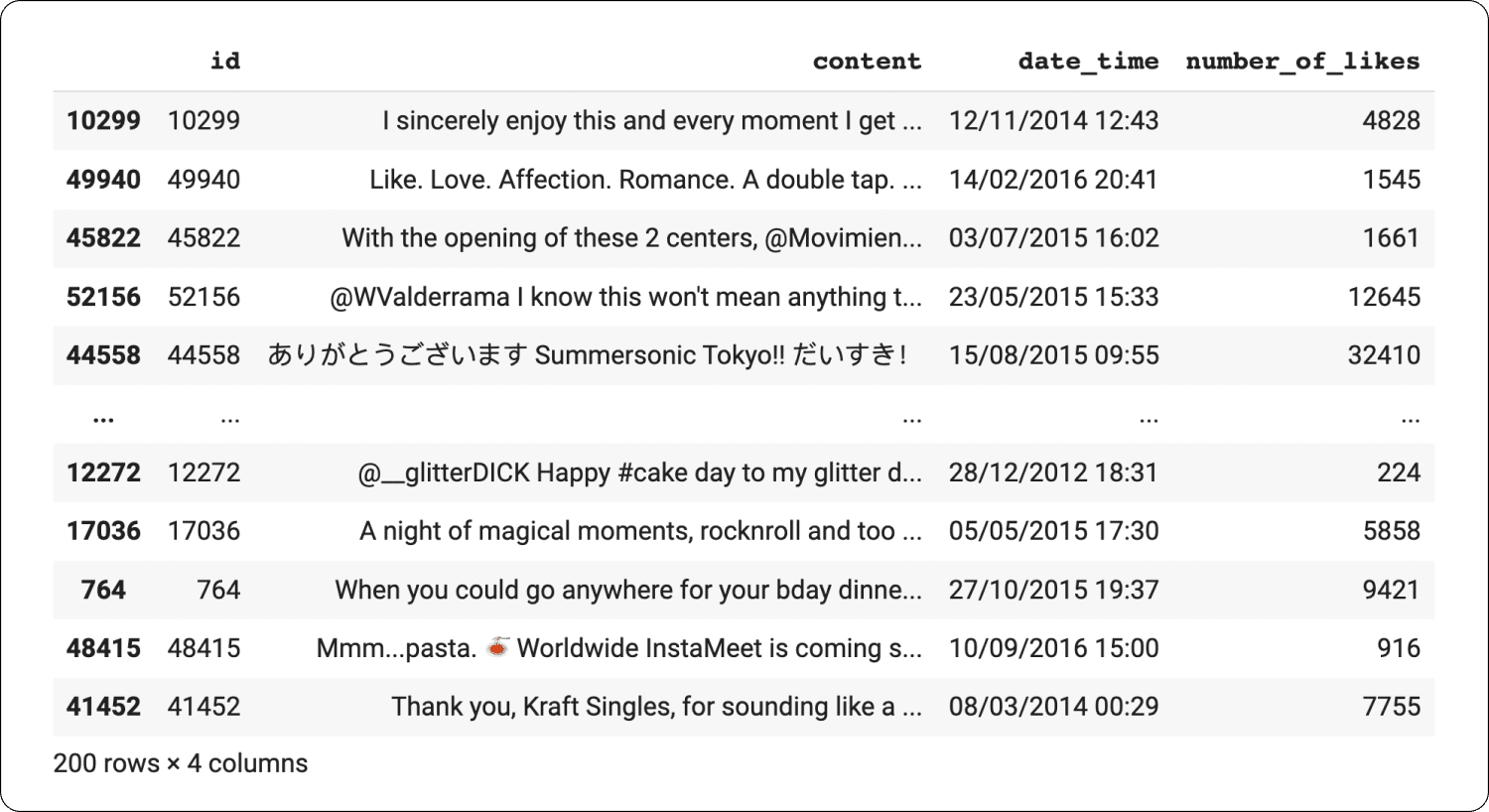

The first thing you’ll want to do is log your training dataset. In our Colab notebook, we used a Twitter dataset that we split into three parts to simulate the training, inference, and ground truth data pipelines. We then trained a regression model to predict each tweet’s number of likes, enriched the training data with Elemeta, and logged it to Superwise.
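If you’d like to reproduce the three-way split on your own data, here’s a minimal sketch. It assumes the records are already time-ordered and uses placeholder proportions (`train_frac`, `inference_frac`); the exact split used in the notebook isn’t stated here, and `split_three_ways` is a hypothetical helper.

```python
def split_three_ways(records, train_frac=0.5, inference_frac=0.25):
    """Split time-ordered records into contiguous training, inference, and ground-truth portions."""
    if train_frac < 0 or inference_frac < 0 or train_frac + inference_frac > 1:
        raise ValueError("fractions must be non-negative and sum to at most 1")
    records = list(records)
    train_end = int(len(records) * train_frac)
    inference_end = train_end + int(len(records) * inference_frac)
    # Whatever remains after training and inference becomes the ground-truth portion
    return records[:train_end], records[train_end:inference_end], records[inference_end:]


train, inference, ground_truth = split_three_ways(range(8))
print(train, inference, ground_truth)  # → [0, 1, 2, 3] [4, 5] [6, 7]
```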

Now all we need to do is send our training data to Superwise, where it will serve as the baseline for calculating drift.

```python
from superwise.models.dataset import Dataset
from superwise.models.version import Version
from superwise.resources.superwise_enums import DataEntityRole, FeatureType

# `sw` (the Superwise client), `project`, `nlp_model`, and the Elemeta-enriched
# training DataFrame `train_sampled` are created in earlier steps of the Colab notebook.
dataset = Dataset.generate_dataset_from_dataframe(
    name="Twitter Likes Dataset",
    project_id=project.id,
    dataframe=train_sampled,
    roles={
        DataEntityRole.METADATA.value: ["content"],  # raw tweet text
        DataEntityRole.PREDICTION_VALUE.value: ["predicted_number_of_likes"],
        DataEntityRole.TIMESTAMP.value: "date_time",
        DataEntityRole.LABEL.value: ["number_of_likes"],
        DataEntityRole.ID.value: "id",
    },
)

# Create the dataset in Superwise; this may take some time to process
dataset = sw.dataset.create(dataset)

# Register the dataset as version 1.0.0 of the model and activate that version
new_version = Version(
    model_id=nlp_model.id,
    name="1.0.0",
    dataset_id=dataset.id,
)
new_version = sw.version.create(new_version)
sw.version.activate(new_version.id)
```

Inference pipeline

Next, we’ll use the second portion of the original dataset to generate model predictions and log them to Superwise for monitoring. The inference records are sent in batches, and each batch is enriched with Elemeta before it’s sent.

```python
import pandas as pd

# `pipe` (the trained model pipeline), `metadata_extractors_runner` (an Elemeta
# metafeature extractors runner), `sw`, `nlp_model`, and `new_version` come from
# earlier steps of the Colab notebook.
inference_sampled.loc[:, "predicted_number_of_likes"] = pipe.predict(inference_sampled["content"]).astype(int)

# Prep for the Superwise format: spread the predictions over the last ~30 days,
# with each block of (n_records // 30) consecutive records sharing one day
records_per_day = inference_sampled.shape[0] // 30
prediction_time_vector = pd.Timestamp.now().floor("h") - pd.to_timedelta(
    inference_sampled.reset_index(drop=True).index // records_per_day, unit="D"
)
ongoing_predictions = inference_sampled.assign(date_time=prediction_time_vector)


# Utility function
def chunks(df, n):
    """Yield successive n-sized chunks (by row position) from df."""
    for i in range(0, df.shape[0], n):
        yield df.iloc[i:i + n]


transaction_ids = []
# Enrich and send the inference data in batches of 50 records
for ongoing_predictions_chunk in chunks(ongoing_predictions, 50):
    # Enrich the chunk with Elemeta metafeatures computed from the tweet text
    ongoing_predictions_chunk = metadata_extractors_runner.run_on_dataframe(
        dataframe=ongoing_predictions_chunk, text_column="content"
    )
    # Send the enriched records to Superwise
    transaction_id = sw.transaction.log_records(
        model_id=nlp_model.id,
        version_id=new_version.id,
        records=ongoing_predictions_chunk.to_dict(orient="records"),
    )
    transaction_ids.append(transaction_id)
    print(transaction_id)
```
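The `prediction_time_vector` expression backfills timestamps so that the simulated predictions look like roughly 30 days of traffic. Here’s the same arithmetic as a standard-library sketch (the `backfill_timestamps` helper is hypothetical, written only to make the bucketing explicit): consecutive blocks of `n_records // days` records share a day, and each block is one day further in the past.

```python
from datetime import datetime, timedelta


def backfill_timestamps(n_records, now, days=30):
    """Place record i exactly (i // (n_records // days)) days before `now`, floored to the hour."""
    records_per_day = n_records // days
    if records_per_day == 0:
        # Guard against a zero divisor when there are fewer records than days
        raise ValueError("need at least `days` records to spread them over `days` days")
    anchor = now.replace(minute=0, second=0, microsecond=0)  # same as floor("h")
    return [anchor - timedelta(days=i // records_per_day) for i in range(n_records)]


stamps = backfill_timestamps(90, now=datetime(2023, 5, 10, 14, 37))
print(stamps[0], stamps[-1])  # → 2023-05-10 14:00:00 2023-04-11 14:00:00
```

Because of the floor division, any remainder records spill into extra days, so 95 records over 30 days actually span 32 days. That’s harmless for a simulation, but worth knowing if you compare daily counts.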

Ground truth pipeline

Finally, we’ll use the last portion of the original dataset to simulate ground truth collection and log the labels to Superwise for monitoring.

```python
import pandas as pd

# Timestamps spread over the last ~30 days, computed as in the inference pipeline
records_per_day = ground_truth_sampled.shape[0] // 30
prediction_time_vector = pd.Timestamp.now().floor("h") - pd.to_timedelta(
    ground_truth_sampled.reset_index(drop=True).index // records_per_day, unit="D"
)
ongoing_labels = ground_truth_sampled.assign(id=ground_truth_sampled["id"])

transaction_ids = []
# Send the label data in batches of 50 records, reusing the `chunks` helper
# defined in the inference pipeline
for ongoing_labels_chunk in chunks(ongoing_labels, 50):
    transaction_id = sw.transaction.log_records(
        model_id=nlp_model.id,
        version_id=new_version.id,
        records=ongoing_labels_chunk.to_dict(orient="records"),
    )
    transaction_ids.append(transaction_id)
    print(transaction_id)
```
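Ground truth typically arrives after the fact and is matched to earlier predictions by record ID (here, the column mapped to `DataEntityRole.ID`). To illustrate what that join enables, here’s a standard-library sketch that pairs prediction and label records by `id` and computes mean absolute error, a natural metric for this regression model. The column names follow the roles defined for the training dataset; `join_and_score` itself is hypothetical and not part of the Superwise SDK.

```python
def join_and_score(predictions, labels):
    """Match label records to prediction records by `id` and compute the mean absolute error."""
    label_by_id = {rec["id"]: rec["number_of_likes"] for rec in labels}
    pairs = [
        (rec["predicted_number_of_likes"], label_by_id[rec["id"]])
        for rec in predictions
        if rec["id"] in label_by_id
    ]
    if not pairs:
        return 0, None  # no labels have arrived yet for these predictions
    mae = sum(abs(pred - label) for pred, label in pairs) / len(pairs)
    return len(pairs), mae


predictions = [
    {"id": 1, "predicted_number_of_likes": 10},
    {"id": 2, "predicted_number_of_likes": 4},
    {"id": 3, "predicted_number_of_likes": 7},
]
labels = [{"id": 1, "number_of_likes": 12}, {"id": 3, "number_of_likes": 7}]
print(join_and_score(predictions, labels))  # → (2, 1.0)
```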

Visualizing NLP in Superwise

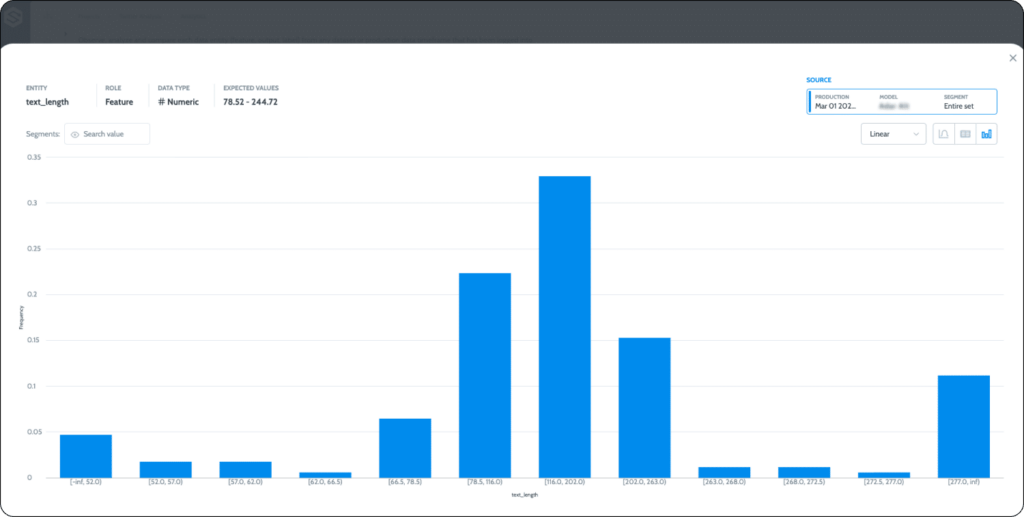

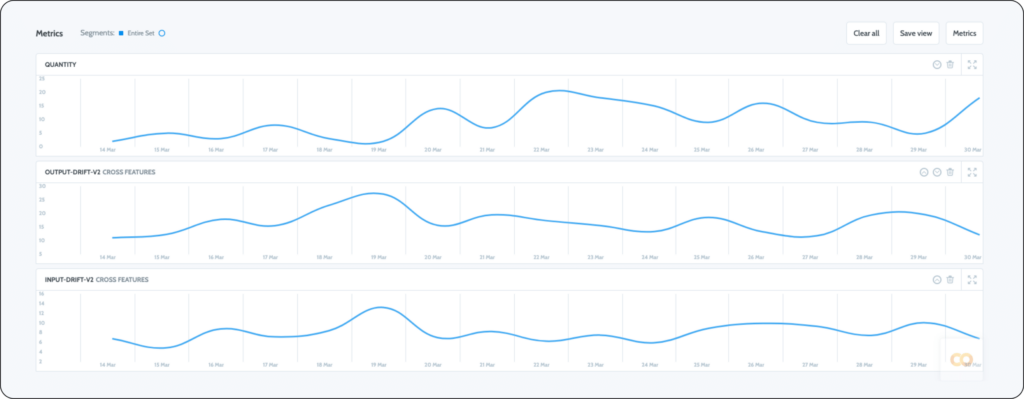

Now that our unstructured data has been enriched by Elemeta and logged to Superwise, we can explore our metafeatures and metrics in detail in the metrics section.

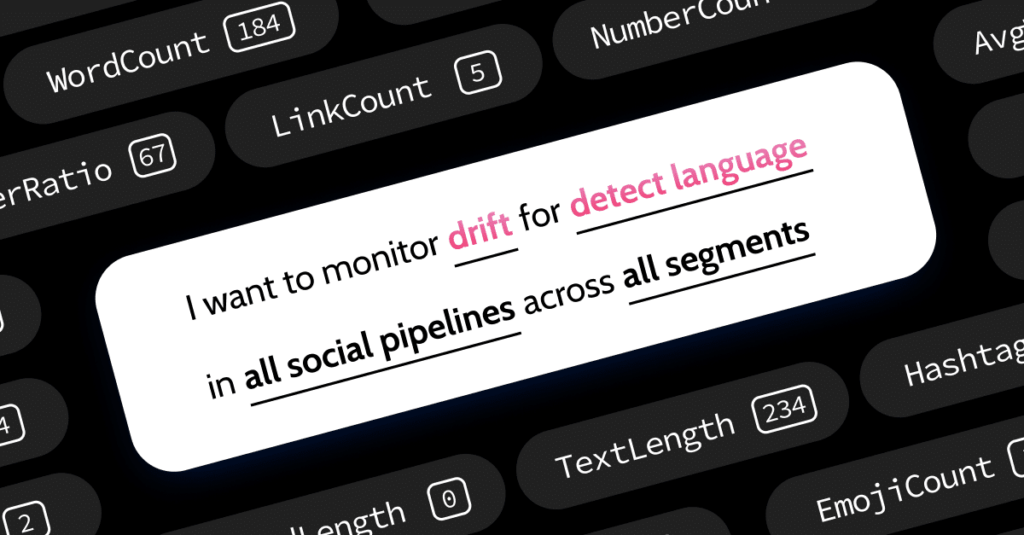

Monitoring NLP in Superwise

The next step is setting up ML monitoring for your NLP use case. After all, that’s exactly why we went through the trouble of enriching our unstructured data and creating a tabular representation of it.
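As a rough illustration of what a monitor does once the text has a tabular representation, here’s a generic z-score rule that flags days where a metafeature’s daily value strays far from its training baseline. This is not Superwise’s detection algorithm (its monitoring policies are configured in the platform); `flag_anomalies`, the default threshold of 3, and the sample values are all hypothetical.

```python
from statistics import mean, pstdev


def flag_anomalies(baseline, daily_values, z_threshold=3.0):
    """Return the indices of days whose value is more than `z_threshold` baseline
    standard deviations away from the baseline mean."""
    mu = mean(baseline)
    sigma = pstdev(baseline)
    if sigma == 0:
        # Constant baseline: any deviation at all is flagged
        return [i for i, value in enumerate(daily_values) if value != mu]
    return [i for i, value in enumerate(daily_values) if abs(value - mu) / sigma > z_threshold]


baseline_word_counts = [10, 12, 11, 9, 13]  # e.g. daily mean word count in the training data
recent_word_counts = [11, 12, 20]
print(flag_anomalies(baseline_word_counts, recent_word_counts))  # → [2]
```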

To put this into an operational context, let’s consider a few situations that would concern a business that leverages Tweet data, for example for social media monitoring. We’ll look at them from both a data monitoring and an ML monitoring perspective.

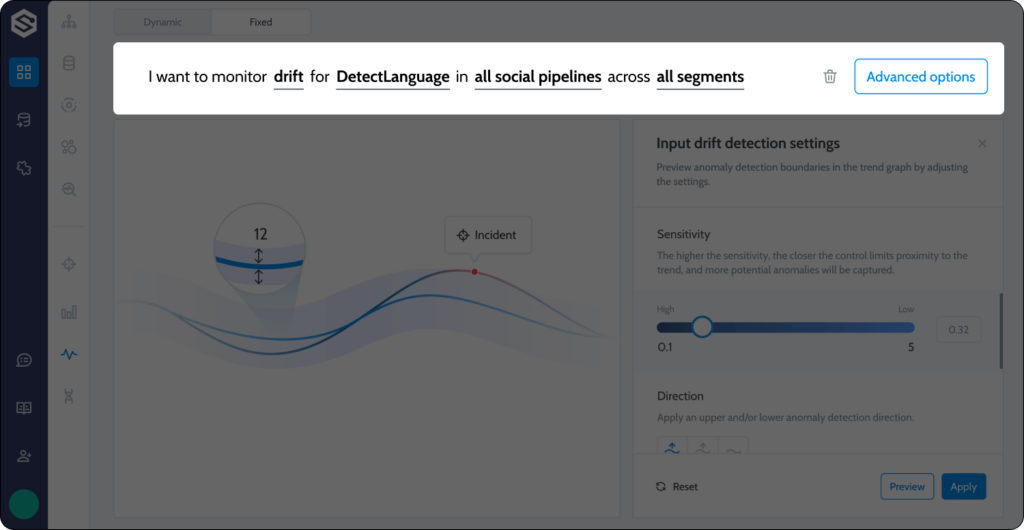

Data monitoring

As a business, you’d want to know if there’s a change in the distribution of languages your users write in when they converse with you on social media.

For example, maybe your marketing department launched a new campaign aimed at a specific demographic, and now your social media team needs broader language coverage. Similarly, if you were monitoring the HintedProfanityWordsCount metafeature and saw it rise, you’d want to reinforce your moderation team.
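One common way to quantify a shift in a categorical distribution like language is the Population Stability Index (PSI). The sketch below is an illustration under stated assumptions, not necessarily the drift measure Superwise uses: it treats each record’s detected language as a hypothetical categorical metafeature, and it floors empty categories at a small `eps` to avoid taking the log of zero.

```python
import math
from collections import Counter


def psi(baseline, current, eps=1e-4):
    """Population Stability Index between two samples of a categorical value."""
    categories = set(baseline) | set(current)
    b_counts, c_counts = Counter(baseline), Counter(current)
    score = 0.0
    for cat in categories:
        # Proportions of each category, floored so log() never sees zero
        b = max(b_counts[cat] / len(baseline), eps)
        c = max(c_counts[cat] / len(current), eps)
        score += (c - b) * math.log(c / b)
    return score


baseline_langs = ["en"] * 90 + ["es"] * 10  # e.g. training data
current_langs = ["en"] * 60 + ["es"] * 40   # e.g. the week after a new campaign
print(round(psi(baseline_langs, current_langs), 3))  # → 0.538
```

A widely used rule of thumb treats PSI above 0.25 as a significant shift, so the jump in Spanish-language posts in this toy example would stand out.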

ML monitoring

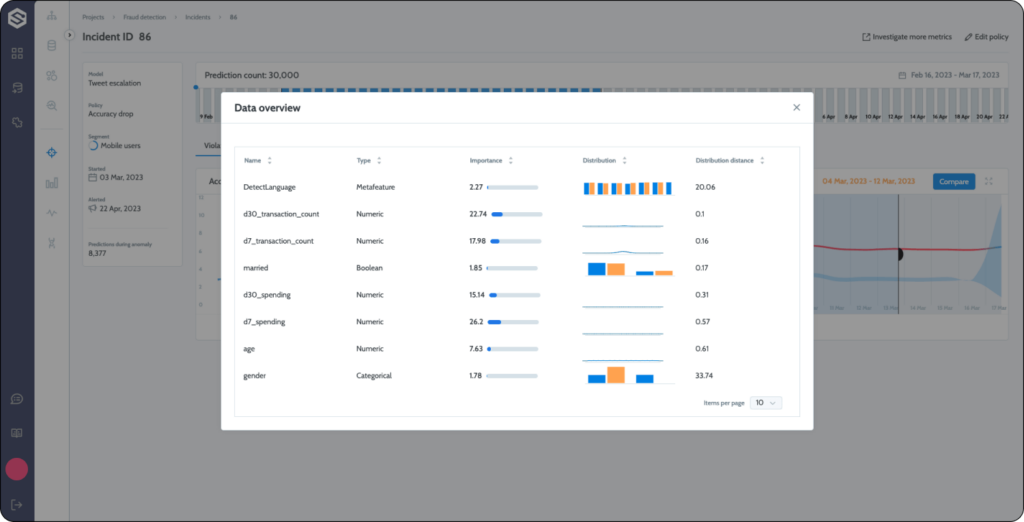

Let’s add some complexity and look at an ML monitoring use case. Say you have a model that predicts which Tweets need to be escalated to a social media rep. Once the rep sees a Tweet, you get immediate positive or negative feedback on whether it should have been escalated.

In this case, an incident was triggered as soon as a drop in accuracy was detected, and by drilling down into the data, we could easily identify what had changed and what the likely root cause was.
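The drill-down step amounts to slicing the feedback by metafeature values and looking for segments where accuracy collapsed. Here’s a minimal standard-library sketch of that idea; the `accuracy_by_segment` helper, the `language` segment, and the `correct` feedback field are hypothetical names for illustration.

```python
from collections import defaultdict


def accuracy_by_segment(records, segment_key, correct_key="correct"):
    """Group feedback records by a metafeature value and return {segment: (accuracy, count)}."""
    totals = defaultdict(int)
    hits = defaultdict(int)
    for rec in records:
        segment = rec[segment_key]
        totals[segment] += 1
        hits[segment] += 1 if rec[correct_key] else 0
    return {segment: (hits[segment] / totals[segment], totals[segment]) for segment in totals}


feedback = [
    {"language": "en", "correct": True},
    {"language": "en", "correct": True},
    {"language": "en", "correct": False},
    {"language": "es", "correct": False},
    {"language": "es", "correct": False},
]
# Lowest-accuracy segments first: the most likely place to start a root-cause investigation
segments = accuracy_by_segment(feedback, "language")
for segment, (acc, n) in sorted(segments.items(), key=lambda kv: kv[1][0]):
    print(segment, round(acc, 2), n)
```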

Getting started with Elemeta and NLP monitoring

With Elemeta and Superwise model observability, you’ll be able to monitor and explain your NLP models with the same set of tools available to you in supervised use cases. Best of all, Elemeta is a free, open-source project for the community (don’t forget to show our repo some ❤️). If you need a metafeature that isn’t covered out of the box for your particular NLP use case, create it! We want to know which metafeatures you need for your use cases and domains, and we’re more than happy to accept community contributions!

Want to monitor NLP?

Head over to the Superwise platform and get started with monitoring for free with our community edition (3 free models!).

