Take charge of AI monitoring with a system built for adaptability. Launch fast with ready-made metrics, policies, and alerts, or fine-tune everything to fit your exact needs.

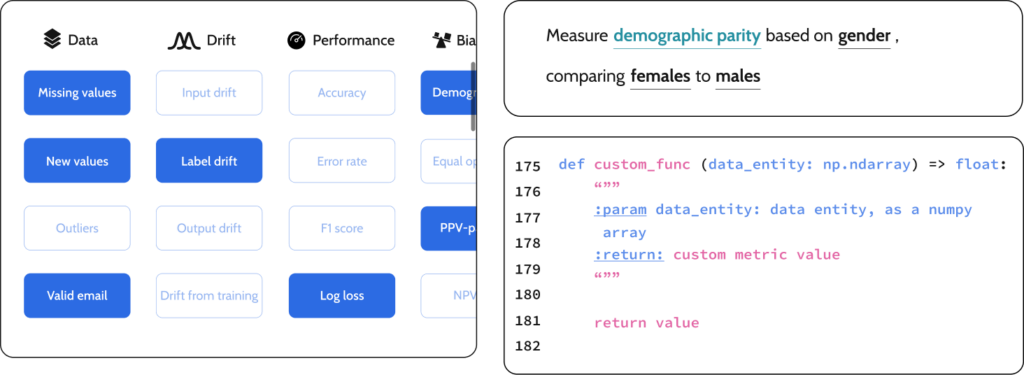

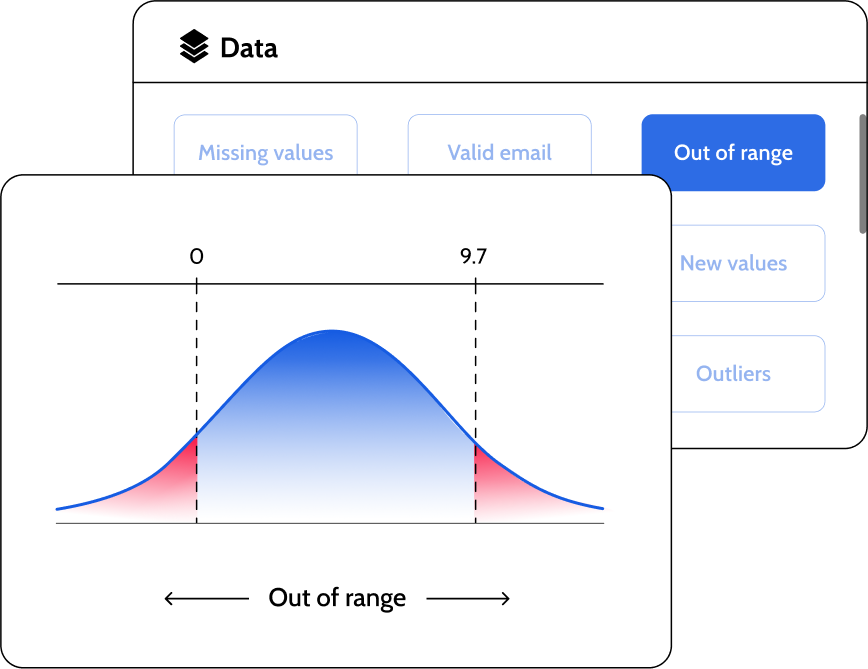

Assess data distribution, integrity, and other critical factors using a comprehensive metrics library. Choose from pre-built options or define custom metrics to suit your requirements.
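To make the idea of a custom metric concrete, here is a minimal Python sketch of two data-integrity checks. The function names `missing_rate` and `out_of_range_rate` and the sample `ages` column are hypothetical illustrations, not part of the SUPERWISE product or its API.

```python
from typing import Optional, Sequence


def missing_rate(values: Sequence[Optional[float]]) -> float:
    """Fraction of entries that are None (hypothetical integrity metric)."""
    if not values:
        return 0.0
    return sum(1 for v in values if v is None) / len(values)


def out_of_range_rate(values: Sequence[Optional[float]], low: float, high: float) -> float:
    """Fraction of non-missing entries that fall outside [low, high]."""
    present = [v for v in values if v is not None]
    if not present:
        return 0.0
    return sum(1 for v in present if v < low or v > high) / len(present)


# Illustrative data only: an "age" column with gaps and one implausible value.
ages = [34, None, 51, 29, None, 130, 42, 38]
print(missing_rate(ages))               # 2 of 8 entries are missing
print(out_of_range_rate(ages, 0, 120))  # 1 of 6 present entries is out of range
```

Metrics like these return a single number per batch, which makes them straightforward to threshold in an alerting policy.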

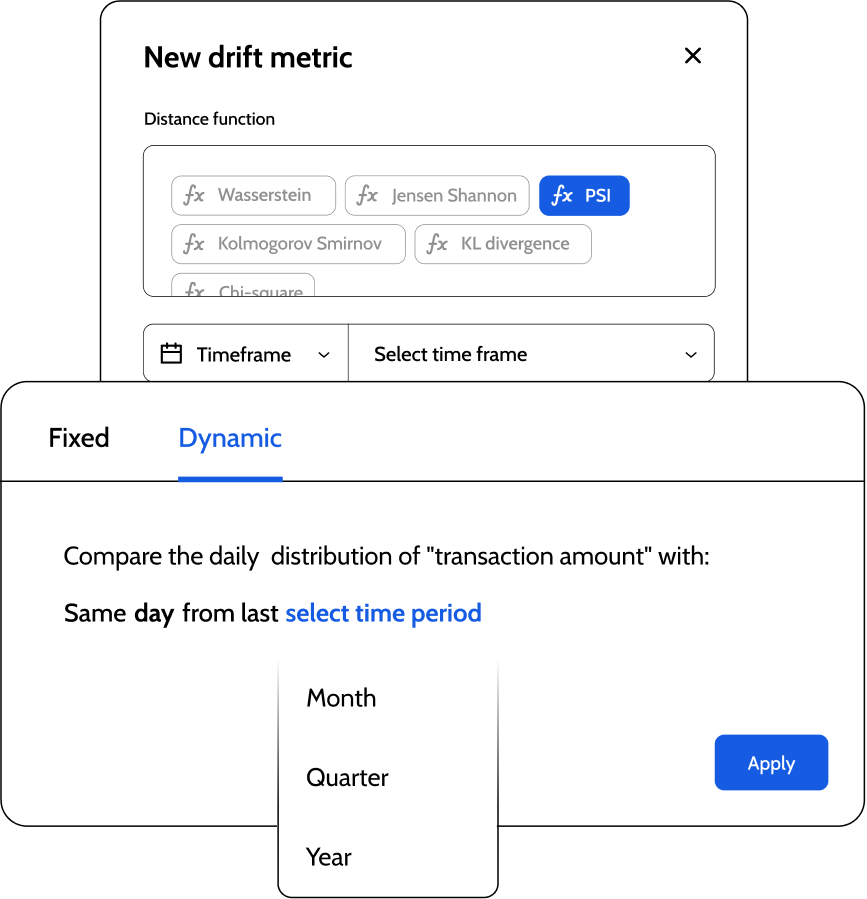

Build drift metrics using your preferred distance functions, features, datasets, and timeframes. Detect and manage model shifts with precision.
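As an illustration of a drift metric built from a distance function, the sketch below computes the Population Stability Index (PSI) for one feature between a reference window and a current window. PSI is only one possible choice of distance function; the bin edges, the smoothing constant `eps`, and the sample data are assumptions made for this example and do not describe how SUPERWISE computes drift.

```python
import math
from typing import List, Sequence


def histogram(values: Sequence[float], edges: Sequence[float]) -> List[float]:
    """Share of values per bin. Bins are [edges[i], edges[i+1]); the last bin is closed."""
    counts = [0] * (len(edges) - 1)
    for v in values:
        for i in range(len(counts)):
            is_last = i == len(counts) - 1
            if edges[i] <= v < edges[i + 1] or (is_last and v == edges[-1]):
                counts[i] += 1
                break
    total = sum(counts)
    if total == 0:
        raise ValueError("no values fall inside the given bin edges")
    return [c / total for c in counts]


def psi(reference: Sequence[float], current: Sequence[float],
        edges: Sequence[float], eps: float = 1e-6) -> float:
    """Population Stability Index between two windows of one feature."""
    p = histogram(reference, edges)
    q = histogram(current, edges)
    score = 0.0
    for p_i, q_i in zip(p, q):
        # Clip empty bins to eps so the logarithm stays defined.
        p_i, q_i = max(p_i, eps), max(q_i, eps)
        score += (q_i - p_i) * math.log(q_i / p_i)
    return score


# Illustrative windows: the current window is shifted toward higher values.
edges = [0.0, 0.25, 0.5, 0.75, 1.0]
reference = [0.1, 0.2, 0.35, 0.4, 0.55, 0.6, 0.7, 0.9]
current = [0.5, 0.6, 0.65, 0.7, 0.8, 0.85, 0.9, 0.95]
print(psi(reference, reference, edges))  # identical windows give 0.0
print(psi(reference, current, edges))    # a positive score signals a shift
```

Swapping the feature, the bin edges, or the two windows changes what the metric measures without changing the code, which is the sense in which such metrics are configurable.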

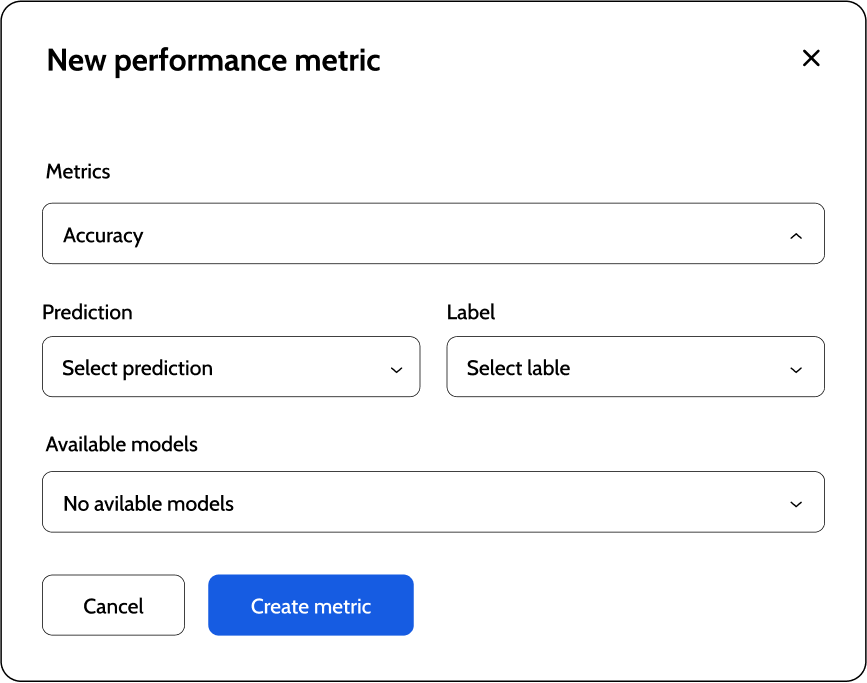

Set and monitor performance metrics specific to your use case. Real-time tracking helps you identify degradation early, regardless of feedback delays.
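One common way to track performance when ground-truth labels arrive late is to score only the predictions whose labels are already available and to report label coverage alongside the score. The sketch below assumes that approach; the `daily_accuracy` helper, the record layout, and the sample data are hypothetical and not taken from the SUPERWISE product.

```python
from collections import defaultdict
from typing import Dict, List, Optional, Tuple

Prediction = Tuple[str, str, int]  # (prediction id, day, predicted label)


def daily_accuracy(predictions: List[Prediction],
                   labels: Dict[str, int]) -> Dict[str, Tuple[Optional[float], float]]:
    """Per day: (accuracy over labeled predictions, share of predictions labeled)."""
    stats = defaultdict(lambda: [0, 0, 0])  # correct, labeled, total
    for pred_id, day, predicted in predictions:
        day_stats = stats[day]
        day_stats[2] += 1
        if pred_id in labels:  # feedback has arrived for this prediction
            day_stats[1] += 1
            day_stats[0] += int(labels[pred_id] == predicted)
    result = {}
    for day, (correct, labeled, total) in sorted(stats.items()):
        accuracy = correct / labeled if labeled else None
        result[day] = (accuracy, labeled / total)
    return result


# Illustrative data: the label for prediction "d" has not arrived yet.
predictions = [
    ("a", "2025-01-01", 1),
    ("b", "2025-01-01", 0),
    ("c", "2025-01-02", 1),
    ("d", "2025-01-02", 1),
]
labels = {"a": 1, "b": 1, "c": 1}
print(daily_accuracy(predictions, labels))
```

Reporting coverage next to accuracy shows how much of each day's traffic the score actually reflects, so a high accuracy on a thinly labeled day is not over-read.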

Track bias across protected classes and subgroups to ensure fairness. Stay compliant with responsible AI guidelines and maintain accountability.
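As a rough illustration of subgroup bias tracking, the sketch below computes per-group selection rates and two widely used summary measures: demographic parity difference and disparate impact ratio. These are standard fairness measures chosen for the example; the function names, group labels, and data are hypothetical, and the source does not state which measures SUPERWISE uses.

```python
from collections import defaultdict
from typing import Dict, Sequence


def selection_rates(predictions: Sequence[int], groups: Sequence[str]) -> Dict[str, float]:
    """Share of positive (1) predictions within each group."""
    totals = defaultdict(int)
    positives = defaultdict(int)
    for predicted, group in zip(predictions, groups):
        totals[group] += 1
        positives[group] += int(predicted == 1)
    return {group: positives[group] / totals[group] for group in totals}


def demographic_parity_difference(rates: Dict[str, float]) -> float:
    """Gap between the highest and lowest group selection rate (0 means parity)."""
    return max(rates.values()) - min(rates.values())


def disparate_impact_ratio(rates: Dict[str, float]) -> float:
    """Lowest selection rate divided by the highest (1 means parity)."""
    highest = max(rates.values())
    if highest == 0:
        return 1.0  # no group receives positive predictions
    return min(rates.values()) / highest


# Illustrative data: group "A" receives positive predictions more often than "B".
predictions = [1, 0, 1, 1, 0, 1, 0, 0]
groups = ["A", "A", "A", "A", "B", "B", "B", "B"]
rates = selection_rates(predictions, groups)
print(rates)
print(demographic_parity_difference(rates))
print(disparate_impact_ratio(rates))
```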

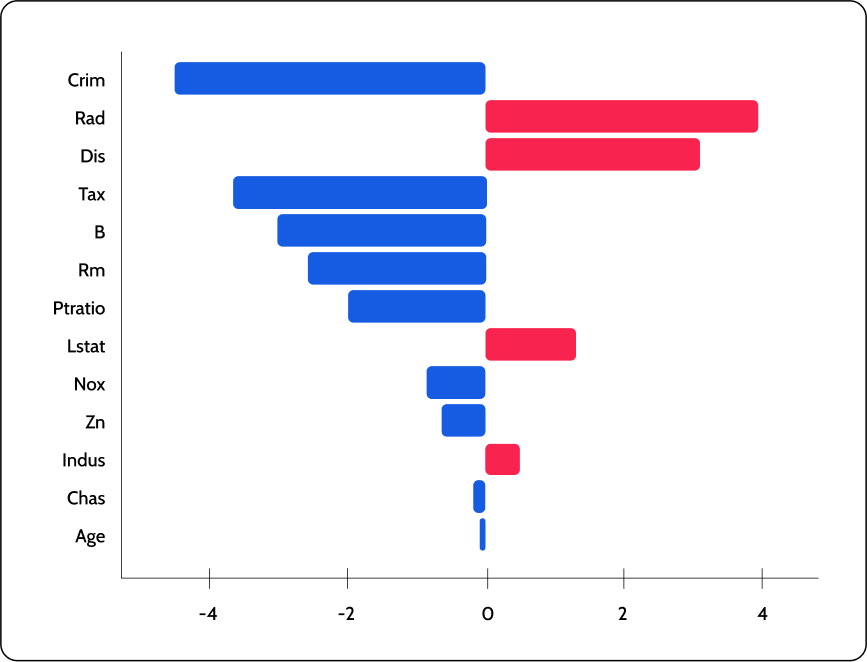

Use feature attribution, cohort analysis, and what-if scenarios to analyze model behavior. Enhance transparency and trust in your AI systems.
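A what-if scenario can be sketched as changing one input feature and comparing the model's output before and after. The example below assumes a toy linear scorer, `toy_model`, standing in for a real model; it, the `what_if` helper, and the feature names are hypothetical and only illustrate the general idea.

```python
from typing import Callable, Dict

Row = Dict[str, float]


def toy_model(row: Row) -> float:
    """Hypothetical linear scorer used only for this illustration."""
    return 0.5 * row["income"] - 2.0 * row["debt"]


def what_if(model: Callable[[Row], float], row: Row, feature: str, new_value: float) -> float:
    """Change in model output when one feature is replaced by new_value."""
    changed = dict(row)  # copy so the original record stays untouched
    changed[feature] = new_value
    return model(changed) - model(row)


applicant = {"income": 10.0, "debt": 2.0}
print(toy_model(applicant))                        # baseline score
print(what_if(toy_model, applicant, "debt", 1.0))  # effect of halving debt
```

Running the same comparison over a cohort of records, rather than a single one, gives a simple view of how sensitive the model is to that feature for that segment.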

Powered by SUPERWISE® | All Rights Reserved | 2025

SUPERWISE®, Predictive Transformation®, Talk to Your Data® are registered trademarks of Deep Insight Solutions, DBA SUPERWISE®. All other trademarks, logos, and service marks displayed on this website are the property of their respective owners. The use of any trademark without the express written consent of the owner is strictly prohibited.