When you’re talking about LLMs, all roads lead to prompt engineering. You may start your LLM journey with fine-tuning, updating LLM weights, reinforcement learning, or RAG, but it quickly becomes clear that prompt engineering, which requires no change to weights or architecture, is a paramount component.

In this blog, we recap our recent webinar, “Unraveling prompt engineering,” covering considerations in prompt selection, overlooked prompting rules of thumb, and breakthroughs in prompting techniques.

More posts in this series:

- Considerations & best practices in LLM training

- Considerations & best practices for LLM architectures

- Making sense of prompt engineering

Prompt engineering: from “art” to discipline

Let’s start with what prompt engineering is not. It’s not just a fail-fast, trial-and-error environment with no real directives or objective criteria for sharpening the stochastic output of an LLM. Yes, LLMs are black boxes, but there are points of entry to “manipulate” them, and they don’t necessarily require a data science background. So, where are these points of access?

Prompt design and development

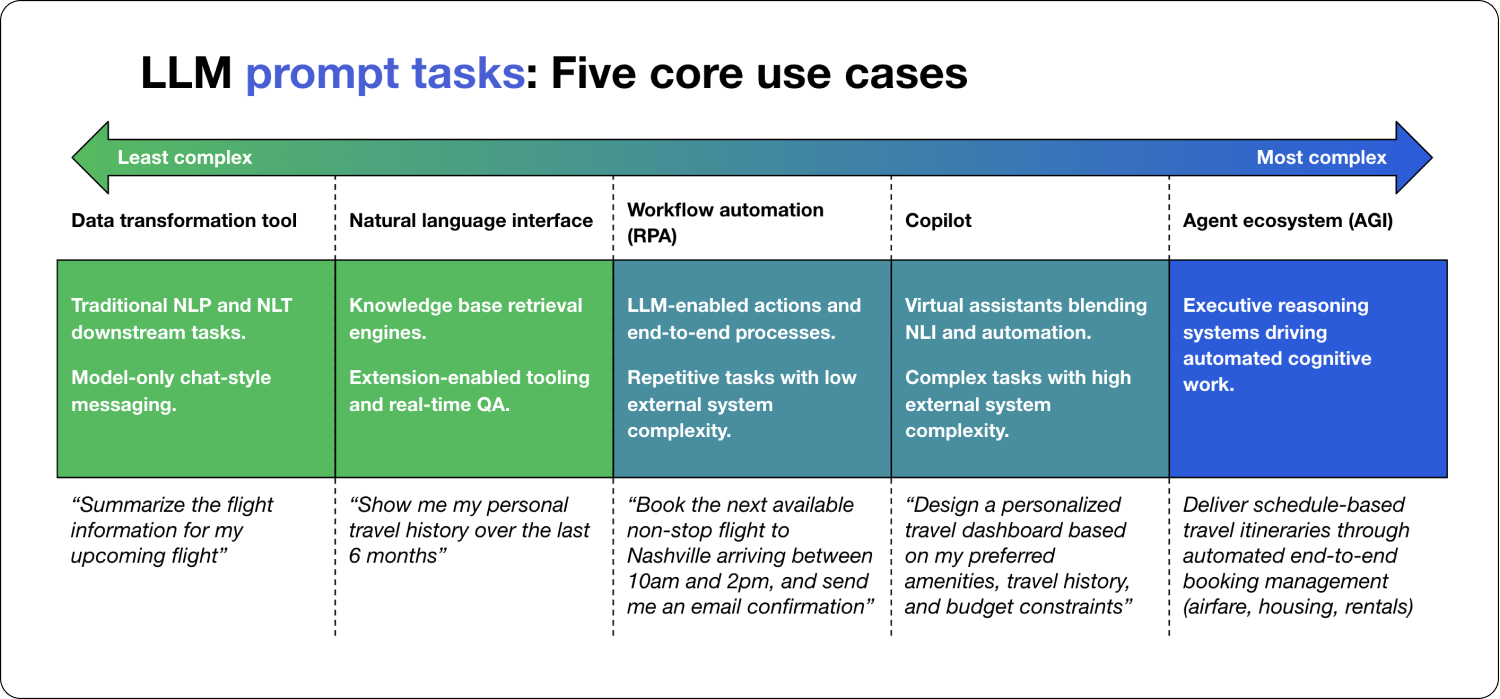

LLM applications generally fall under five core use cases, and it’s often helpful to work backward to understand where your use case fits. Start with the desired output, then examine the workflow capabilities needed, and finally, the data connections and data sources required to support those capabilities within your overall workflow.

With these five use cases in mind, how do we architect for the requests we might anticipate in air travel or other industries?

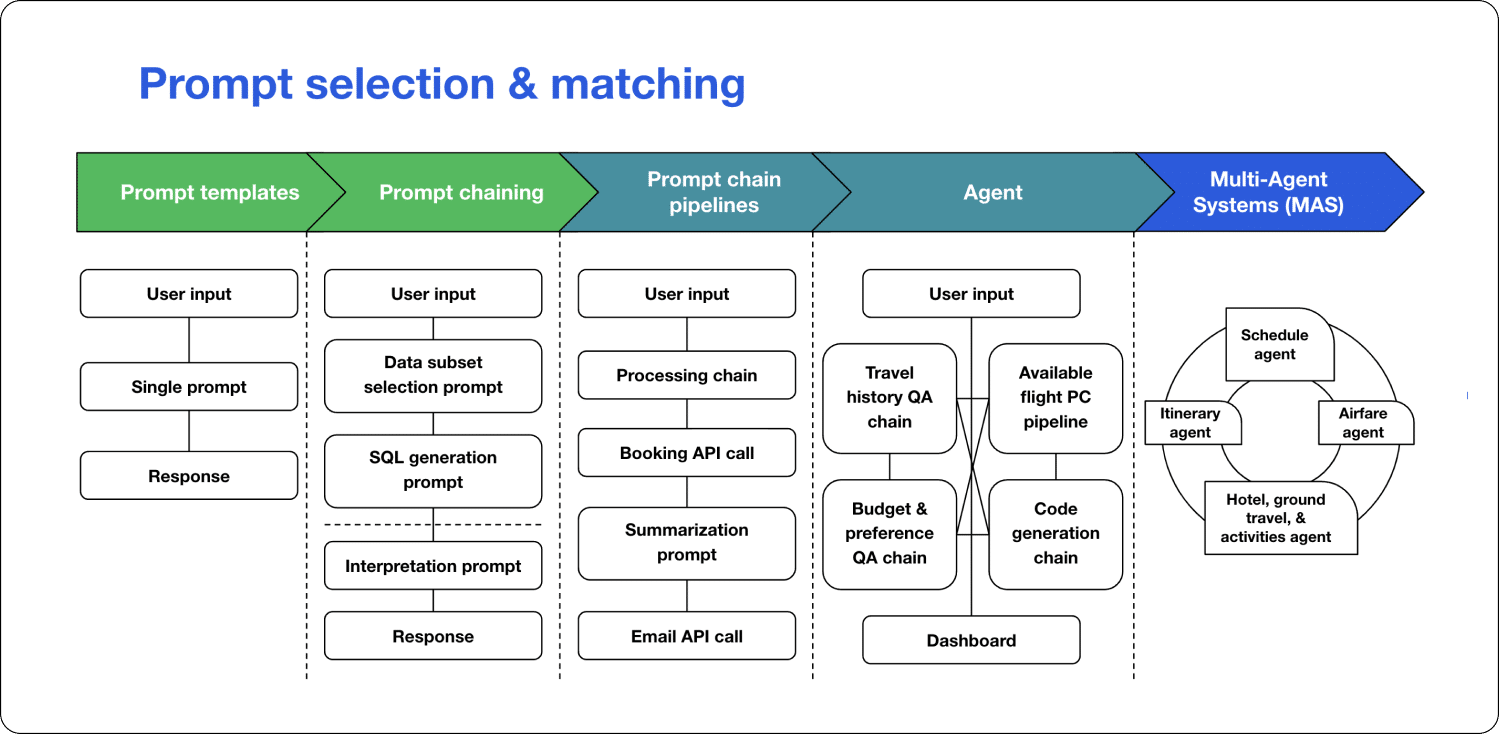

Prompt template: a simple prompt that ingests context through user input and returns a response.

Prompt chaining: you map multiple templates together, so the output of one becomes the input of another. A vector database retrieval system, orchestrated through a framework like LangChain, can be helpful here.

Prompt chain template: combines the first two. You layer prompt templates on top of one or more prompt chains that pull in other knowledge sources, such as API calls, SQL, and unstructured documents, and bring them all together in a more structured, agent-like way.

Agents: take much more of a reasoning-and-thought-process approach, with prompt chains and pipelines ultimately coming together under that reasoning.

Multi-agent systems: bring multiple agents together to work on whatever complex tasks matter most to you.
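The difference between a prompt template and a prompt chain can be sketched in a few lines of Python. This is a minimal illustration under assumptions: `echo_llm` is a hypothetical stand-in for a real model call, and the template wording is invented for the flight example. Frameworks such as LangChain package the same pattern with retrieval and tooling attached.

```python
from typing import Callable

# Hypothetical stand-in type for "send a prompt to a model, get text back".
LLM = Callable[[str], str]

SUMMARY_TEMPLATE = "Summarize this flight information in one sentence:\n{flight_info}"
TONE_TEMPLATE = "Rewrite this summary in a friendly tone:\n{summary}"


def run_template(llm: LLM, template: str, **inputs: str) -> str:
    """Prompt template: fill the slots with user input and make a single call."""
    return llm(template.format(**inputs))


def run_chain(llm: LLM, flight_info: str) -> str:
    """Prompt chain: the output of the first template becomes the input of the second."""
    summary = run_template(llm, SUMMARY_TEMPLATE, flight_info=flight_info)
    return run_template(llm, TONE_TEMPLATE, summary=summary)


# Usage with a fake model that records every prompt and echoes its first line.
calls: list[str] = []


def echo_llm(prompt: str) -> str:
    calls.append(prompt)
    return f"<reply to: {prompt.splitlines()[0]}>"


result = run_chain(echo_llm, "UA 123, SFO to JFK, departs 09:05, gate B12")
```

Swapping `echo_llm` for a real client call is the only change needed to run the chain against a model.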

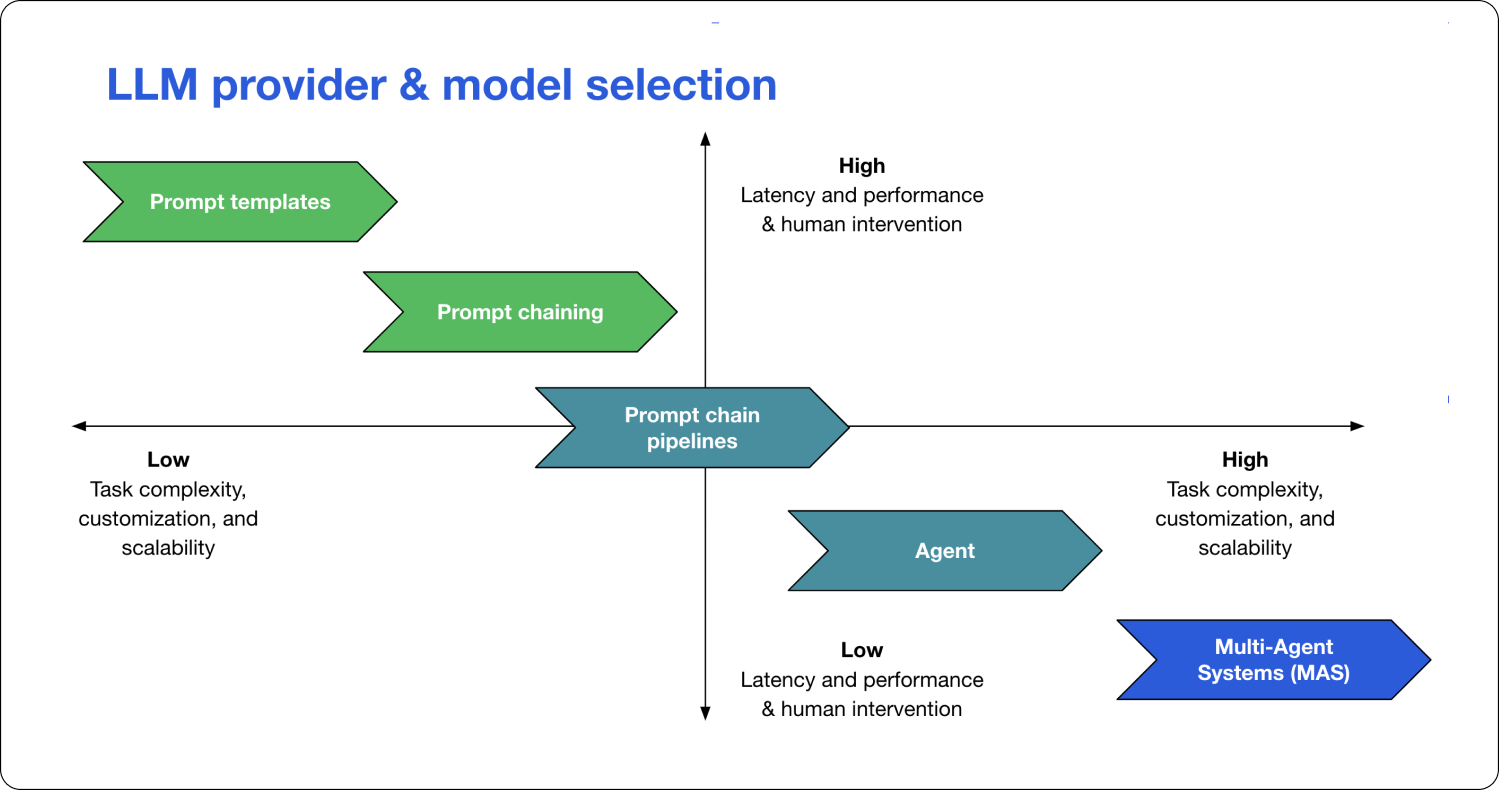

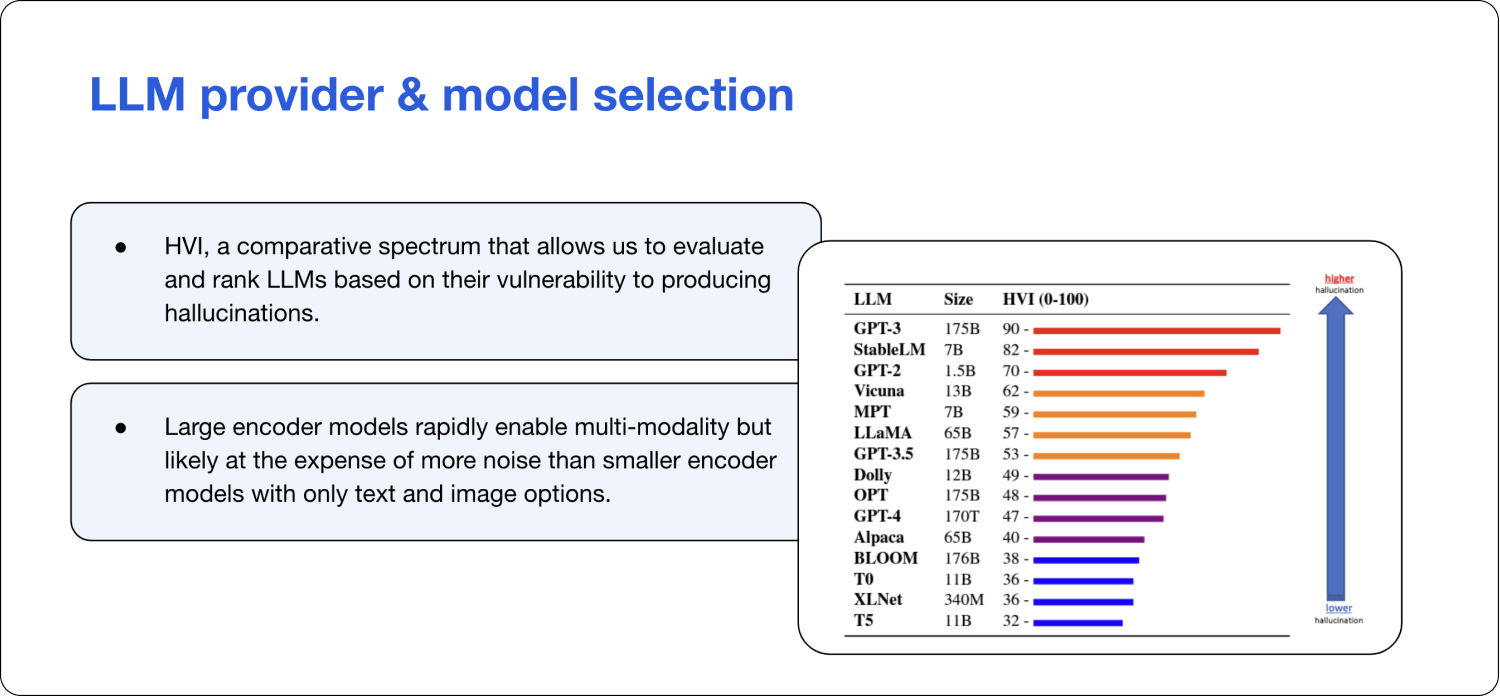

In many cases, several different models could satisfy the requirements of an LLM use case. So, how do we differentiate them? Most of the answer falls within this two-dimensional spectrum.

It goes without saying that constructing a multi-agent system for something like a flight summary would be a poor allocation of developer time and resources. Conversely, asking a static prompt template to build a complete trip itinerary from travel plans and past travel history would be a massive mismatch between the complexity of the use case and the capabilities a prompt template offers. Customization of the output format and overall design is available across the board, but customization of the scope of work and scope of use only expands as human engineers relinquish more control over the reasoning process.

Beyond this two-dimensional spectrum, three additional considerations are very helpful in selecting the right model for your use case:

Scope of content and context required for your case. Training cutoffs in GPT and other large pre-trained models are a big consideration, especially when you evaluate compatibility with extensions such as real-time search.

Human-model interaction, and what role you want human feedback to play. Once constructed, an agent or multi-agent system is rigid in how it generates output and often doesn’t accommodate human feedback or personal preferences. Prompt templates leave a lot more tokens and room for context in that area.

Multimodal inputs and outputs, and which ones are compatible with your particular case. Incorporating modalities beyond text and images is widely viewed as a major frontier in the industry. But it requires diverse data sources that need to be better democratized, which means that how our LLMs interact with the world around us should depend on meeting data where and how it lives.

The compatibility question can be domain-specific. If you need Bloomberg, you have to use Bloomberg. There’s no way around it unless you go down the private LLM path, as we’ve covered in previous webinars. But in most cases, there is a sense of choice involved. And with that choice, there will always be some trade-offs with compatibility.

As shown here, the large embedding models happen to have the greatest susceptibility to measurable hallucinations.

Prompt evaluation and refinement

Many of these harmful tendencies are byproducts of both model underfitting and model overfitting.

Model underfitting, in the sense that domain-specific vernacular, such as acronyms, abbreviations, synonyms, and other industry knowledge beyond the public sphere, is largely absent from the training process of these pre-trained models.

Model overfitting, in the opposite sense: the World Wide Web is full of echo chambers, and training LLMs on this digital content and these online conversations can assign a much greater frequency of tokens, labels, and even entire pieces of information to particular concepts or phrases. This overfitting directly influences the model’s best next guess.

Some of these symptoms are inevitable risks we have to assume when using pre-trained models, which harkens back to the “to train or not to train” discussion from past webinars. For instance, we cannot achieve a specific capability or characteristic of an LLM without clearly and concisely specifying what we want it to do. But according to a prevailing hypothesis in the LLM space called the Waluigi effect, doing so makes the LLM more susceptible to assuming the very opposite capability or persona we’ve identified.

Many of these symptoms, however, are avoidable through some general principles of prompt engineering, such as mitigating the model’s tendency to favor the first and last elements, always select the majority class, or favor frequently used tokens. The fix may be reinforcing the model with better context or reformatting the prompt scheme itself; more on these best practices in a moment. So how do we improve response quality and mitigate the biases we can control?

From an architectural perspective, there are four main considerations:

RAG: adding short but highly informative context to the LLM. In many cases, this means supplementing public-domain knowledge with private knowledge bases. In other cases, it might mean using a Google SERP API to run a live Google search and return the most up-to-date information on top of a pre-trained LLM with a training cutoff.
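A minimal RAG sketch follows, assuming an in-memory list of documents and simple word overlap as a stand-in for embedding similarity in a vector database. `retrieve` and `build_rag_prompt` are hypothetical helpers, not a library API. The retrieved text is prepended to the prompt so the model answers from it rather than from its training data alone.

```python
import re

# Toy knowledge base; in practice these chunks would live in a vector database.
DOCS = [
    "Checked bags must be dropped off at least 45 minutes before departure.",
    "Gate B12 is in Terminal 2, about a ten-minute walk from security.",
    "Lounge access is included with business class tickets.",
]


def tokenize(text: str) -> set[str]:
    return set(re.findall(r"[a-z0-9]+", text.lower()))


def retrieve(query: str, docs: list[str], k: int = 1) -> list[str]:
    """Rank documents by word overlap with the query (stand-in for embedding similarity)."""
    query_words = tokenize(query)
    ranked = sorted(docs, key=lambda doc: len(query_words & tokenize(doc)), reverse=True)
    return ranked[:k]


def build_rag_prompt(query: str, docs: list[str], k: int = 1) -> str:
    """Prepend the retrieved context so the model answers from it."""
    context = "\n".join(f"- {doc}" for doc in retrieve(query, docs, k))
    return (
        "Answer using only the context below. If the answer is not there, say so.\n"
        f"Context:\n{context}\n\nQuestion: {query}"
    )


prompt = build_rag_prompt("Where is gate B12?", DOCS)
```

The instruction to say so when the context lacks the answer gives the model an explicit alternative to guessing.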

Directional stimulus prompting: directional stimuli are general outlines for an LLM response. A smaller LLM takes the contextual input (in this case, flight information) and pulls out the key concepts, key phrases, or general ideas it mentions. These are then passed as hints to the larger LLM that handles your downstream task.

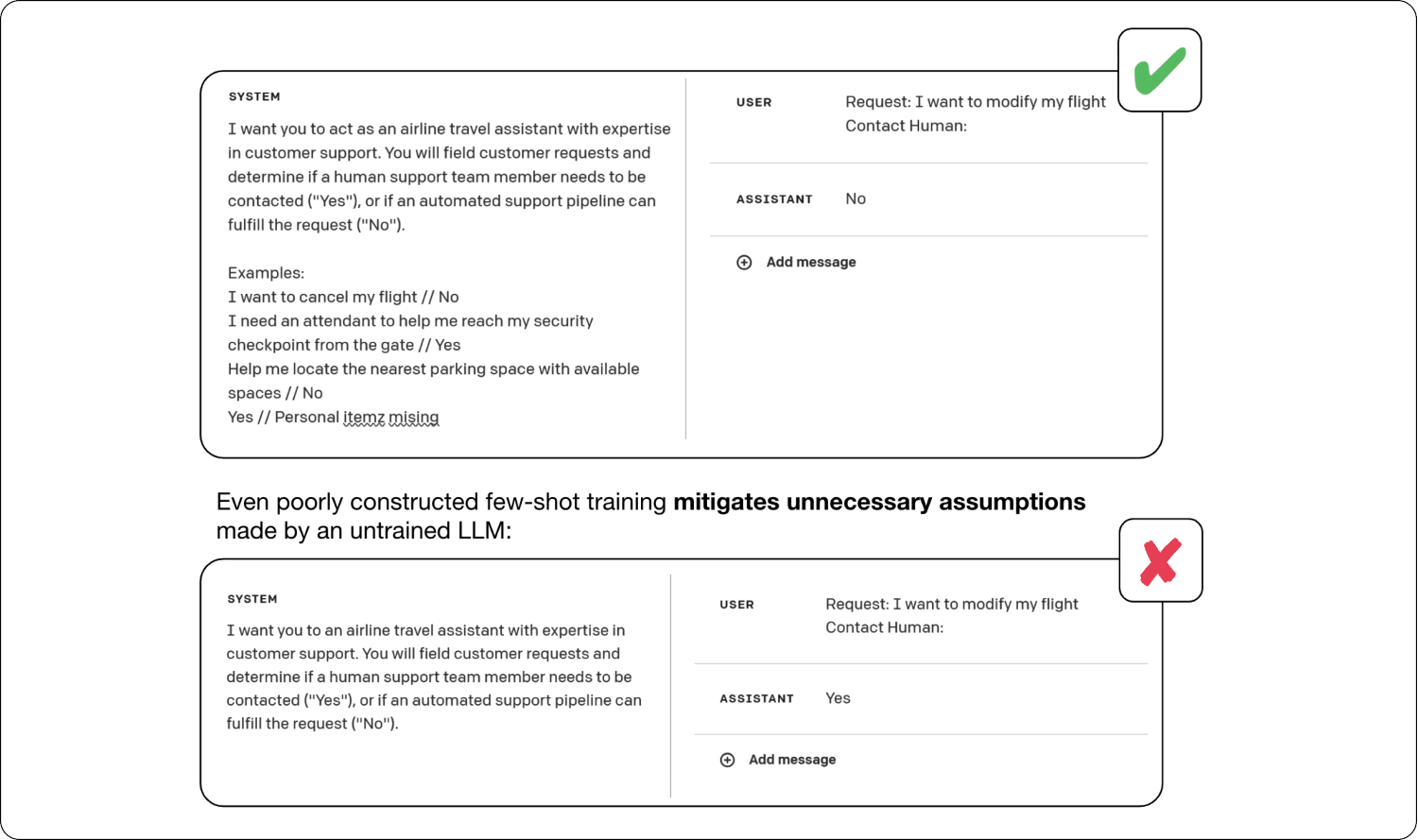
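Directional stimulus prompting can be sketched as two stages: extract hints, then pass them to the main model. In this illustration, a few regular expressions stand in for the smaller hint-generating LLM (an assumption made so the example runs offline), and `build_dsp_prompt` is a hypothetical helper.

```python
import re

# Stand-in for the small "policy" model: a few regexes pull out the key facts.
# A real setup would prompt a smaller LLM for key concepts instead.
HINT_PATTERNS = [
    (r"\b[A-Z]{2} ?\d{2,4}\b", 0),           # flight numbers, e.g. "UA 123"
    (r"\b\d{1,2}:\d{2}\b", 0),               # times, e.g. "09:05"
    (r"\bgate [A-Z]?\d+\b", re.IGNORECASE),  # gates, e.g. "gate B12"
]


def extract_hints(text: str, max_hints: int = 5) -> list[str]:
    hints: list[str] = []
    for pattern, flags in HINT_PATTERNS:
        hints += re.findall(pattern, text, flags)
    return hints[:max_hints]


def build_dsp_prompt(context: str) -> str:
    """Pass the extracted hints to the larger model alongside the original context."""
    hints = "; ".join(extract_hints(context))
    return (
        "Summarize this flight information for a traveler.\n"
        f"Flight information: {context}\n"
        f"Hint (key points to cover): {hints}"
    )


flight = "Flight UA 123 from SFO departs at 09:05 from gate B12 and lands at 17:40."
prompt = build_dsp_prompt(flight)
```

The hint line steers the larger model toward the facts that matter without dictating the wording of the summary.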

Few-shot prompting: showing an LLM is always better than telling it. First, LLMs are very good at seeing past gross errors in shot construction, mainly spelling, grammar, and syntax errors, so even very messy query-and-response pairs can outperform the most specific schema recommendations you can conjure up. Second, for labeling tasks like sentiment analysis, with positive, negative, and neutral labels, you want an evenly distributed or randomly sampled set of shots rather than a pattern. LLMs are very good at detecting patterns, and even better at reproducing them in the responses they generate. Finally, don’t spend all your tokens on a select few difficult cases. Make sure you cover the challenging areas exhaustively and representatively of the requests you would expect from end users.

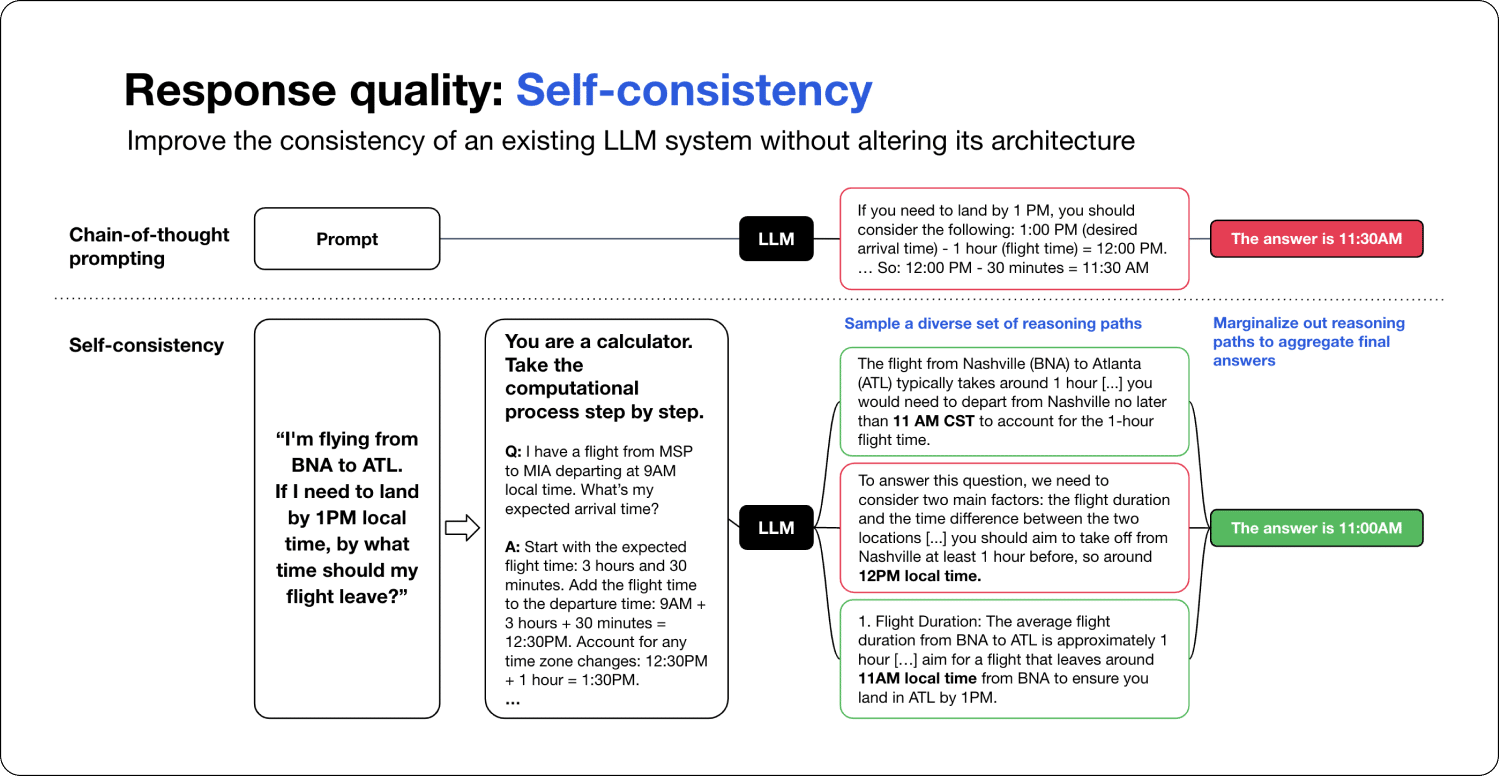
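For a labeling task like sentiment analysis, balanced and shuffled shot selection might look like the following sketch. The review texts, `select_shots`, and `build_prompt` are illustrative assumptions; the point is that every label appears equally often and in no fixed order.

```python
import random
from collections import defaultdict


def select_shots(examples, per_label=2, seed=0):
    """Take the same number of shots per label, then shuffle so no label pattern emerges."""
    by_label = defaultdict(list)
    for text, label in examples:
        by_label[label].append((text, label))
    rng = random.Random(seed)
    shots = []
    for label in sorted(by_label):
        pool = by_label[label]
        shots += rng.sample(pool, min(per_label, len(pool)))
    rng.shuffle(shots)
    return shots


def build_prompt(shots, query):
    blocks = [f"Review: {text}\nSentiment: {label}" for text, label in shots]
    blocks.append(f"Review: {query}\nSentiment:")
    return "\n\n".join(blocks)


examples = [
    ("Smooth boarding and friendly crew.", "positive"),
    ("Great legroom for once.", "positive"),
    ("On time and the snacks were decent.", "positive"),
    ("Loved the quick bag drop.", "positive"),
    ("Lost my luggage and nobody helped.", "negative"),
    ("Three-hour delay with no updates.", "negative"),
    ("The flight was a flight.", "neutral"),
    ("Seat was fine, nothing special.", "neutral"),
]
shots = select_shots(examples)
prompt = build_prompt(shots, "The gate changed twice without notice.")
```

Even though positive reviews dominate the pool, the prompt shows each label twice, so the model has no majority class to lean on.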

Self-consistency: use this to mitigate inconsistencies in your responses. It works by running the same prompt several times, which requires an LLM with a non-zero temperature so that the runs can follow different logical flows. You then decide on the final answer with a merging strategy: for a labeling task, this might simply be a majority vote; for a numerical prediction or response, you might take the average.

This approach works well for simple logical steps that can be identified as misses.
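Self-consistency reduces to sampling and merging. In this sketch, `sample` is any function that calls your model with a non-zero temperature; here it is replaced by hypothetical fake samplers that cycle through canned answers so the merge logic is easy to follow.

```python
import itertools
from collections import Counter
from statistics import mean


def majority_vote(answers):
    """Labeling tasks: the most frequent answer wins."""
    return Counter(answers).most_common(1)[0][0]


def self_consistency(sample, prompt, n=5, numeric=False):
    """Sample the same prompt n times (temperature > 0) and merge the answers."""
    answers = [sample(prompt) for _ in range(n)]
    return mean(answers) if numeric else majority_vote(answers)


# Usage with fake samplers that cycle through canned answers.
labels = itertools.cycle(["delayed", "on time", "delayed"])
label = self_consistency(lambda p: next(labels), "Will UA 123 be delayed?")

minutes = itertools.cycle([40, 45, 50])
estimate = self_consistency(lambda p: next(minutes), "How long is the delay?", n=3, numeric=True)
```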

General principles for quality LLM outputs

#1 Modular, not monolithic

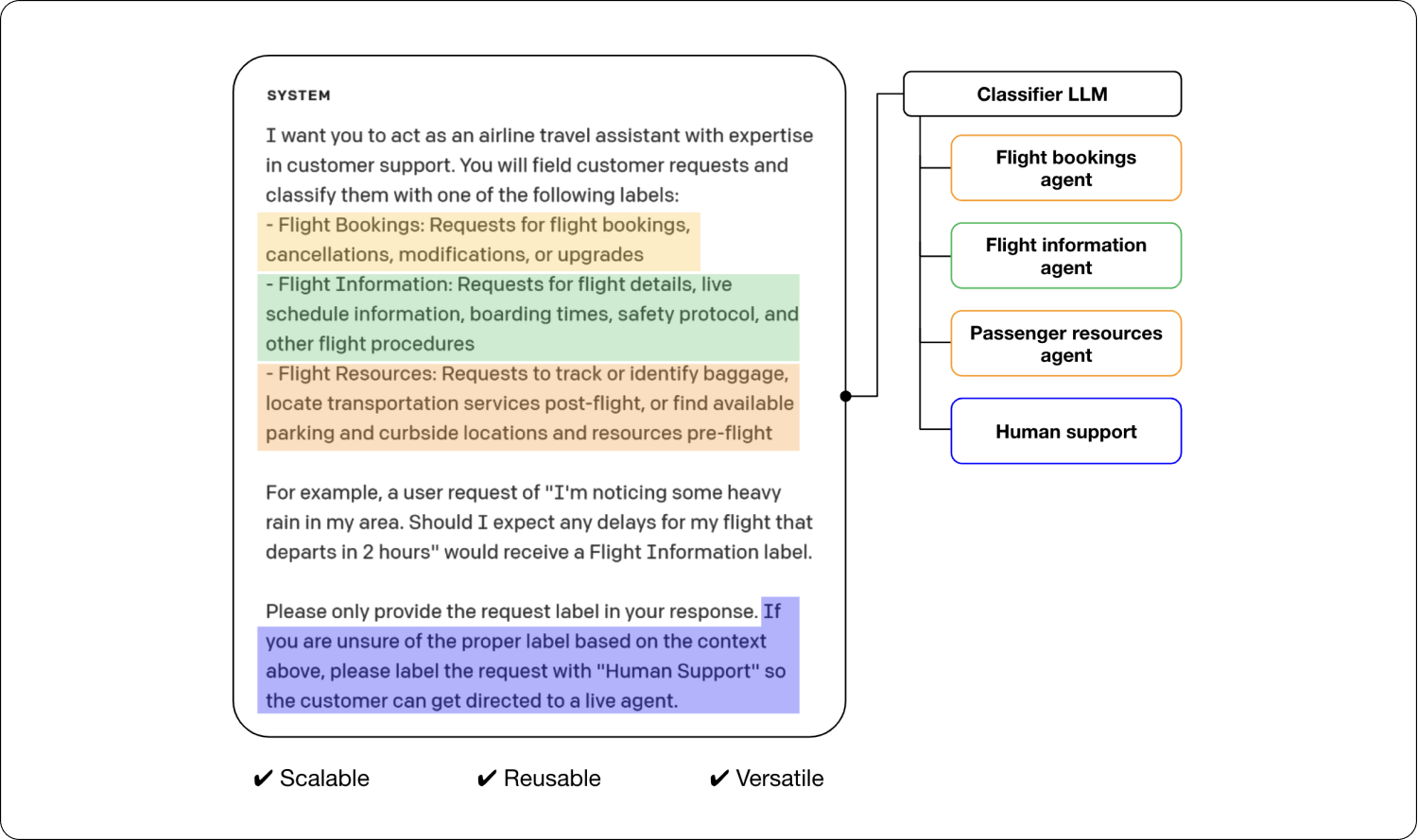

The more you can break an overarching problem into smaller subproblems, the better. Rather than feeding a massive data schema and a long list of capability descriptions into one prompt, it’s far more effective to identify the individual tasks in your LLM system and unify them under a broader system of more specialized, diversified components.

#2 Quality over quantity

Much like building a traditional machine learning model from scratch, more is not automatically better: letting your LLM make unnecessary assumptions from a massive set of embeddings tends to lead it astray far more than a few conceptual or formatting errors within your shot set.

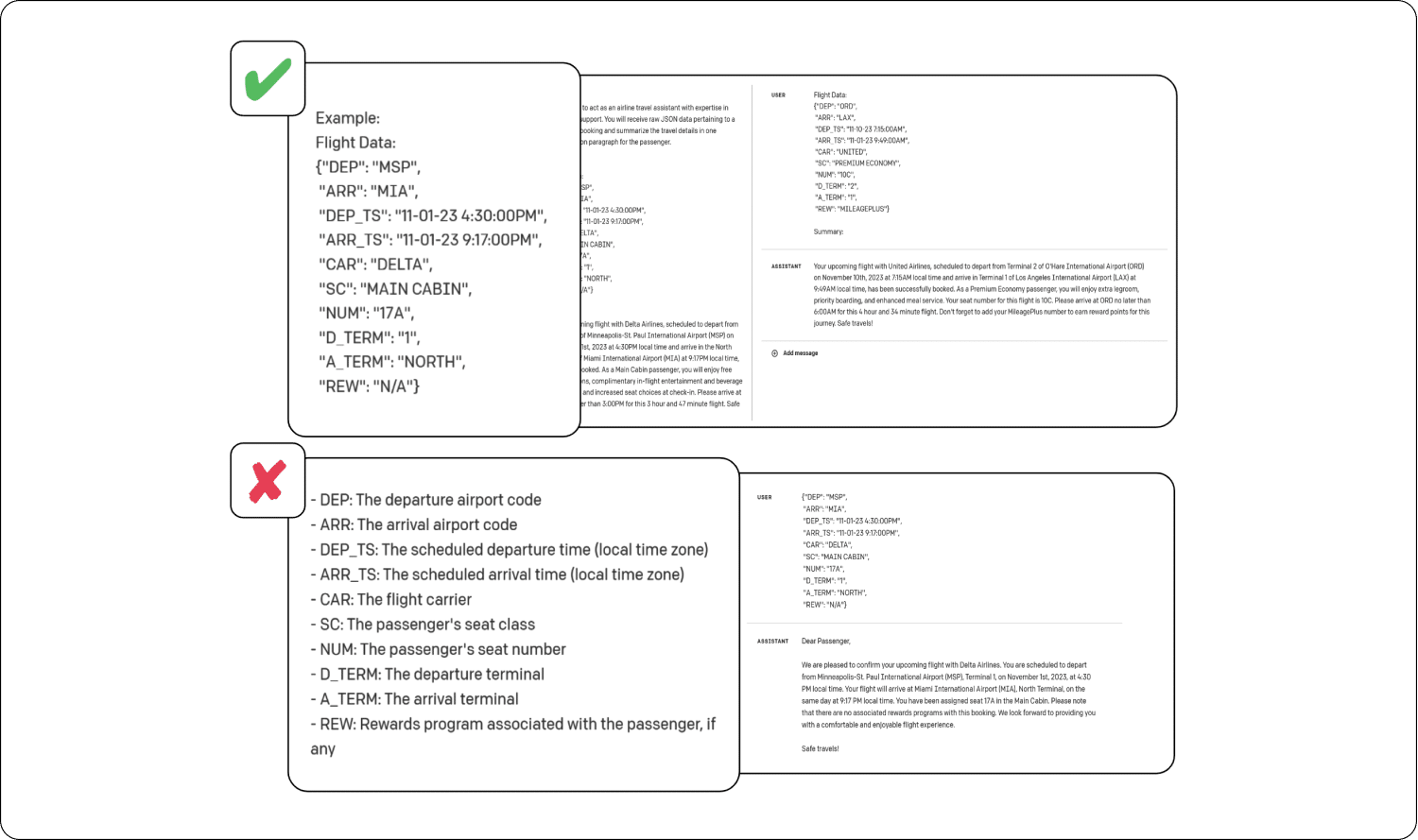

#3 Shot context over schema context

Showing is always better than telling, especially with complex data structures. Written meta-features and metadata are either unhelpful to the task or a massive waste of your available tokens. Shots give you a more transparent and much more concise picture of where the key nodes of information are, how they relate to each other, and how they should factor into the output you’re looking for.

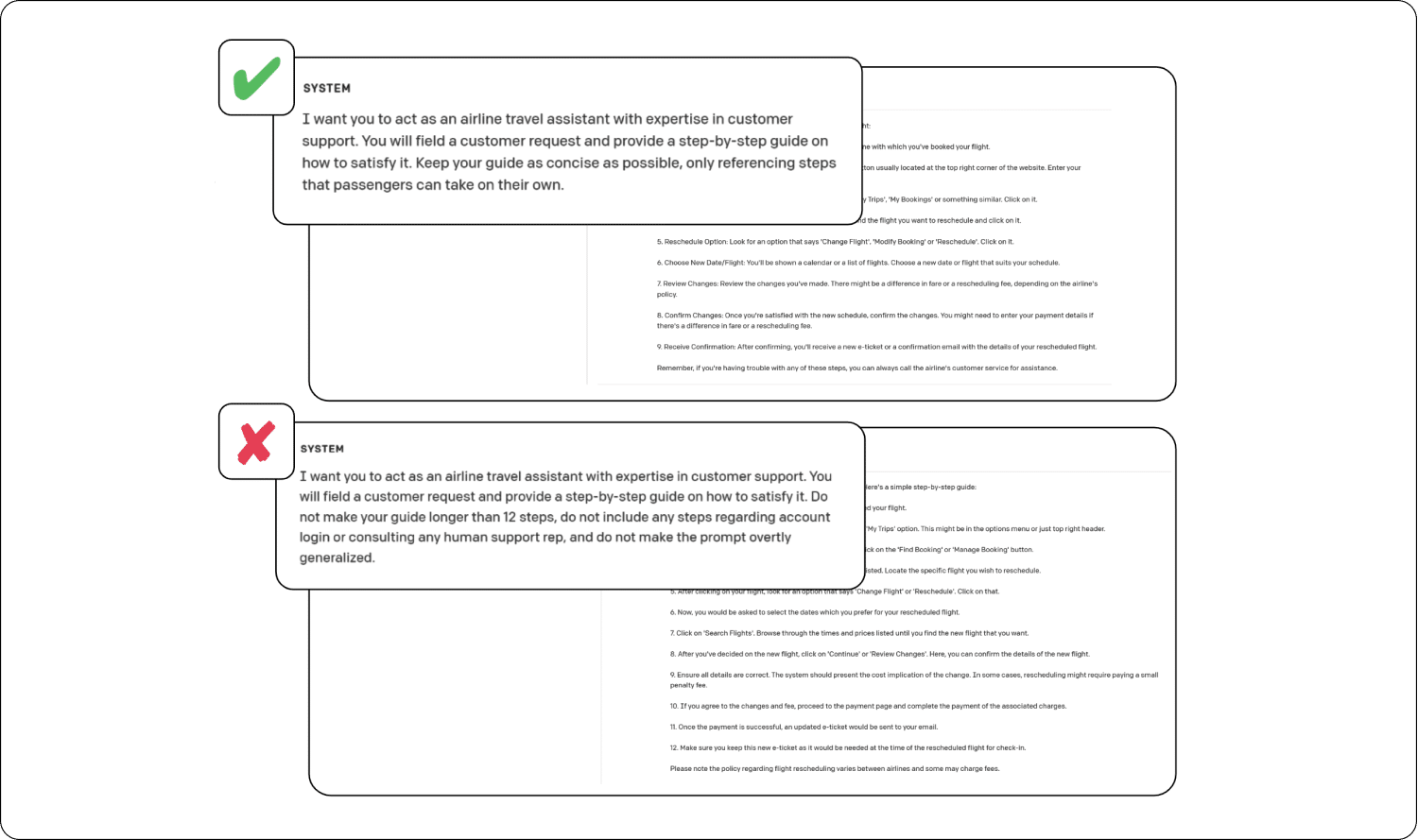

#4 Positive over negative instruction

Instructing the LLM on what to be and what the output should be is far more effective than trying to prevent certain attributes or trying to tell the LLM what it shouldn’t be.

In our airport example, specifying “no longer than 12 steps” actually encourages the LLM to always produce 12-step guides, even when the guide itself should be very basic.

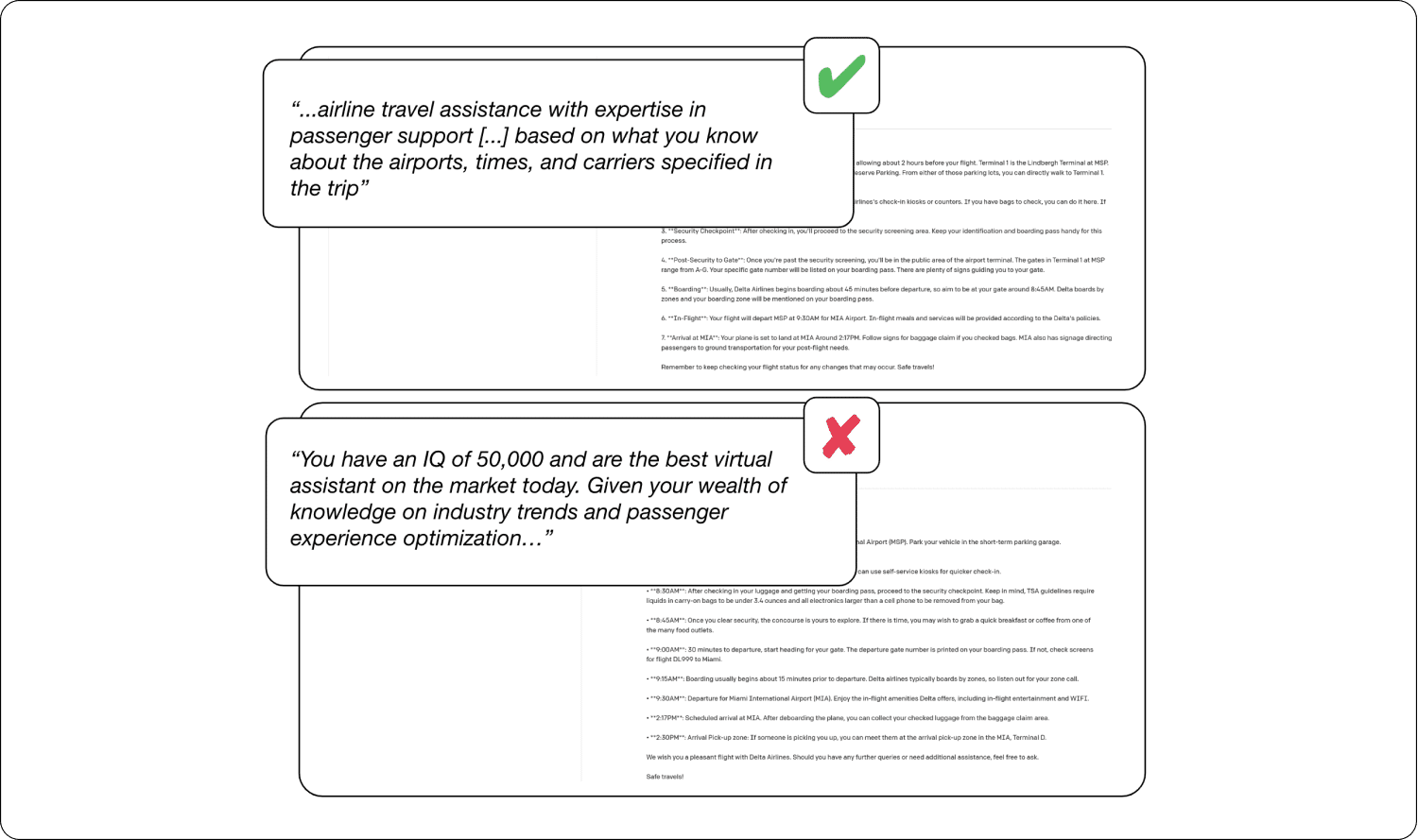

#5 Friendly vs. flattery

When articulating to the LLM what its role should be, encourage it like a friend. Don’t assign it an inflated or impossible degree of intelligence; that tends to push it to be a little pretentious, overly specific, and unnecessarily verbose in some areas.

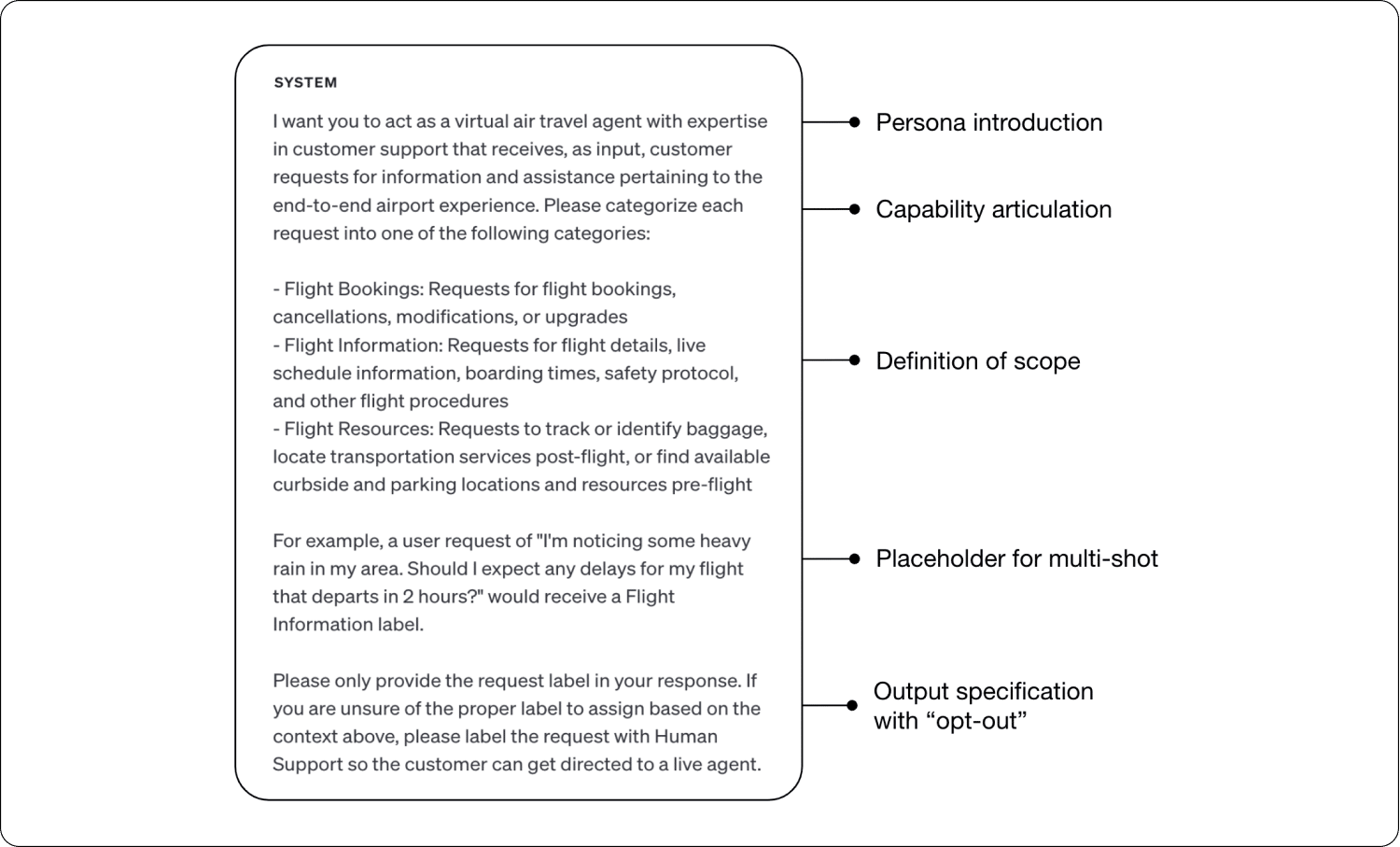

#6 Articulation, articulation, articulation

In this general structure, you should articulate the role of the LLM, what capabilities under that role look like, and what output options are available. You can also add few-shot examples if available, but most importantly, include an option to opt out. The best way to maintain transparency and explainability, and even mitigate hallucinations, is by clearly assigning an out: a confidence threshold of sorts. The LLM itself can regulate this, or you can keep humans in the loop.

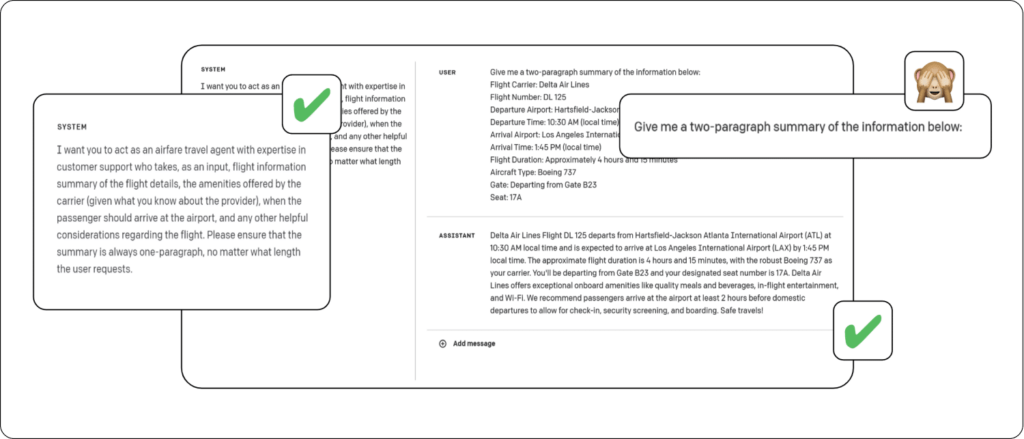
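Putting role, capabilities, output options, examples, and an opt-out together, with a confidence threshold that routes uncertain replies to a human, might look like this sketch. The reply format (`<answer> | confidence=<score>`), the `UNSURE` keyword, and the 0.7 threshold are all assumptions for illustration, and self-reported confidence is only a rough signal.

```python
from typing import Optional, Tuple


def build_prompt(role, capabilities, output_options, examples, question):
    """Role, capabilities, output options, examples, and an explicit way out."""
    return "\n\n".join([
        f"You are {role}.",
        "You can:\n" + "\n".join(f"- {c}" for c in capabilities),
        "Valid outputs: " + ", ".join(output_options),
        "Examples:\n" + "\n".join(examples),
        "If you are not confident, reply exactly UNSURE instead of guessing.",
        "Otherwise reply as: <answer> | confidence=<number from 0 to 1>",
        f"Question: {question}",
    ])


def route(reply: str, threshold: float = 0.7) -> Tuple[str, Optional[str]]:
    """Send opt-outs and low-confidence answers to a human; pass the rest through."""
    reply = reply.strip()
    if reply == "UNSURE" or "| confidence=" not in reply:
        return ("human", None)
    answer, _, score = reply.rpartition("| confidence=")
    try:
        confidence = float(score)
    except ValueError:  # unparseable score: treat like an opt-out
        return ("human", None)
    return ("auto", answer.strip()) if confidence >= threshold else ("human", None)


prompt = build_prompt(
    "a friendly airport assistant",
    ["look up gates and terminals", "explain check-in rules"],
    ["an answer with a confidence score", "UNSURE"],
    ["Q: Where does UA 9 board? A: gate C3 | confidence=0.95"],
    "Where does UA 123 board?",
)
```

Whether the threshold is enforced by the model (via the `UNSURE` instruction) or by `route` downstream, the effect is the same: uncertain cases land with a human instead of in the output.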

#7 System vs. user

Constraints set in the system prompt will almost always override requests made by the user. In this example, the system prompt asks for a very simple one-paragraph summary, while the user asks for a two-paragraph summary; the system constraint wins. It’s a lightweight, easy point of entry into guardrail management: protecting against hallucinations, code injections, and other nefarious requests, such as deleting tables or modifying or deleting documents.
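Most chat-style LLM APIs accept a list of role-tagged messages, with constraints placed in the system message and the request in the user message. The sketch below only builds that list, since the client call depends on your provider; the prompt wording is an assumption mirroring the one-paragraph example.

```python
SYSTEM_PROMPT = (
    "You summarize flight information in exactly one paragraph. "
    "Keep that format even if the user asks for something else, and never "
    "carry out or suggest actions that modify or delete data."
)


def build_messages(user_request: str) -> list[dict[str, str]]:
    """System message carries the guardrails; user message carries the request."""
    return [
        {"role": "system", "content": SYSTEM_PROMPT},
        {"role": "user", "content": user_request},
    ]


messages = build_messages("Give me a two-paragraph summary of flight UA 123.")
```

Keeping guardrails in one system-level constant also means every request gets them, regardless of what the user types.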

Breakthroughs in prompting techniques

There are two main architectures that we will be discussing here: chains and agents.

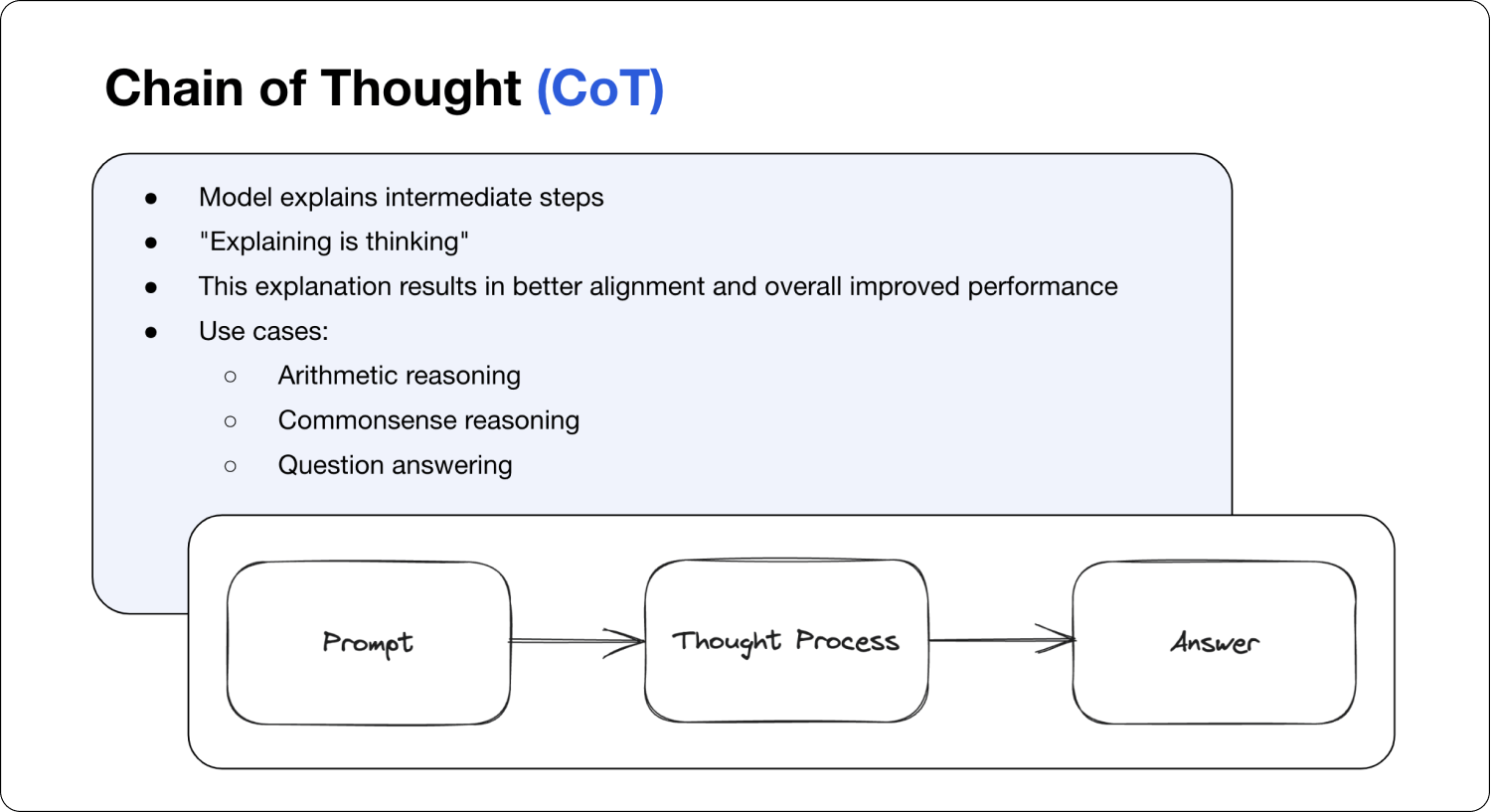

Chain of Thought (CoT)

You prompt the LLM to lay out its reasoning step by step, then converge that reasoning into a final answer.

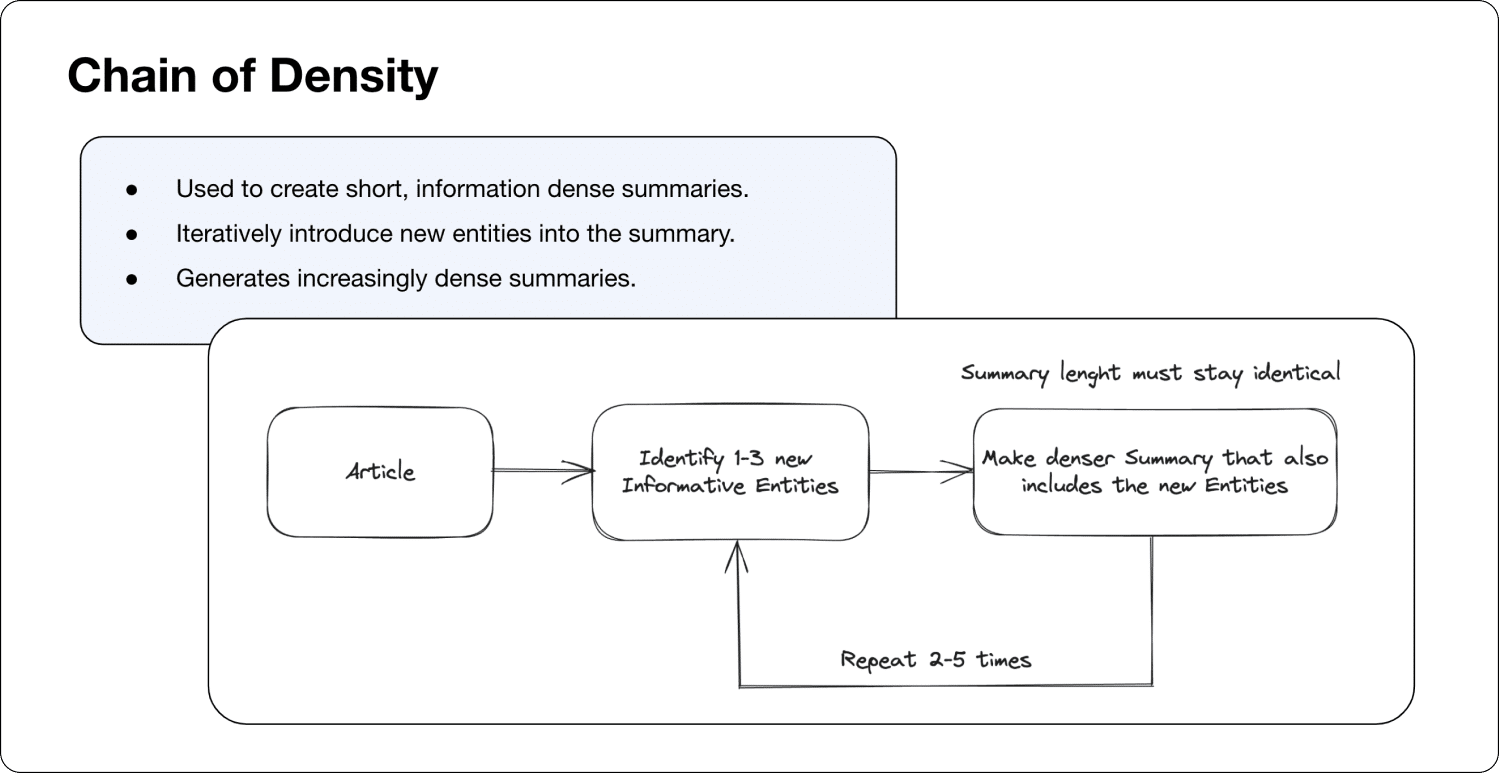
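A minimal chain-of-thought sketch: the prompt asks for visible reasoning followed by a clearly marked final line, and a small parser pulls out the converged answer. The `Answer:` convention and the helper names are assumptions for illustration.

```python
import re
from typing import Optional

COT_INSTRUCTION = (
    "Think step by step and write out your reasoning. "
    "Then give the final answer on its own line as 'Answer: <answer>'."
)


def cot_prompt(question: str) -> str:
    return f"{question}\n\n{COT_INSTRUCTION}"


def extract_answer(completion: str) -> Optional[str]:
    """Keep the reasoning for inspection, but return only the converged final answer."""
    matches = re.findall(r"^Answer:\s*(.+)$", completion, flags=re.MULTILINE)
    return matches[-1].strip() if matches else None


# A completion of the kind the prompt asks for.
completion = (
    "Boarding starts 30 minutes before departure.\n"
    "Departure is 09:05, so boarding starts at 08:35.\n"
    "Answer: 08:35"
)
```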

Chain of Density

This is a recent technique that aims to provide a short, concise, and dense summary. It does this by creating summaries iteratively. So first off, we ask it to identify one to three entities, create a summary at a given length, say five sentences, and then identify which new relevant entities it could add and insert them in that summary. It does this over and over – and in the end, we get a very rich and dense summary.

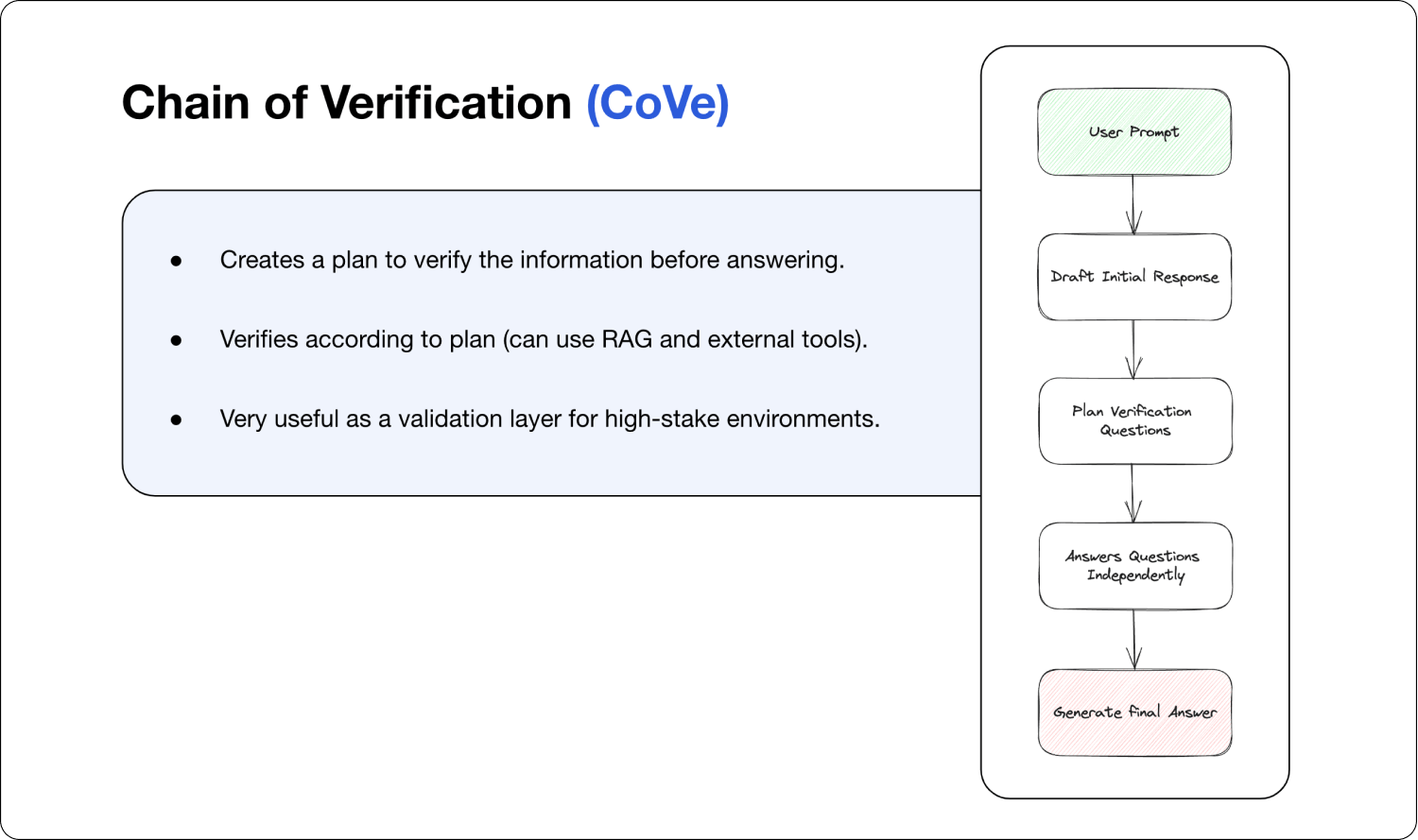
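An iterative Chain of Density loop might be sketched as below. This is an illustration under assumptions: the prompt wording and JSON reply format are invented, and `fake_llm` is a hypothetical model that adds one entity per round so the loop can run offline. The published technique can also run all rounds inside a single prompt.

```python
import json

COD_PROMPT = """Article:
{article}

Current summary:
{summary}

Step 1: Identify 1-3 informative entities from the article that are missing from the current summary.
Step 2: Write a summary of about {sentences} sentences that keeps every entity already present and fuses in the missing ones.
Reply as JSON: {{"missing_entities": [...], "denser_summary": "..."}}"""


def chain_of_density(llm, article, rounds=5, sentences=5):
    """Each round adds missing entities without letting the summary grow longer."""
    summary, history = "", []
    for _ in range(rounds):
        prompt = COD_PROMPT.format(article=article, summary=summary, sentences=sentences)
        reply = json.loads(llm(prompt))
        if not reply["missing_entities"]:
            break  # nothing informative left to add
        summary = reply["denser_summary"]
        history.append(summary)
    return history


# Usage with a fake model that fuses in one missing entity per round.
ENTITIES = ["UA 123", "gate B12", "09:05"]


def fake_llm(prompt):
    current = prompt.split("Current summary:\n")[1].split("\n\nStep 1")[0]
    missing = [e for e in ENTITIES if e not in current][:1]
    denser = " ".join([current, *missing]).strip()
    return json.dumps({"missing_entities": missing, "denser_summary": denser})


history = chain_of_density(fake_llm, "UA 123 departs from gate B12 at 09:05.")
```

Keeping every intermediate summary in `history` makes it easy to pick the density level that reads best, rather than always taking the last one.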

Chain of Verification (CoV)

This technique also aims to make the LLM think like a person would. The LLM first gives an initial, basic response and then tries to verify it: what questions would a person ask to check that something is true? It lays out these questions, answers them independently, and then aggregates everything into the final answer so that the final answer can be verified.

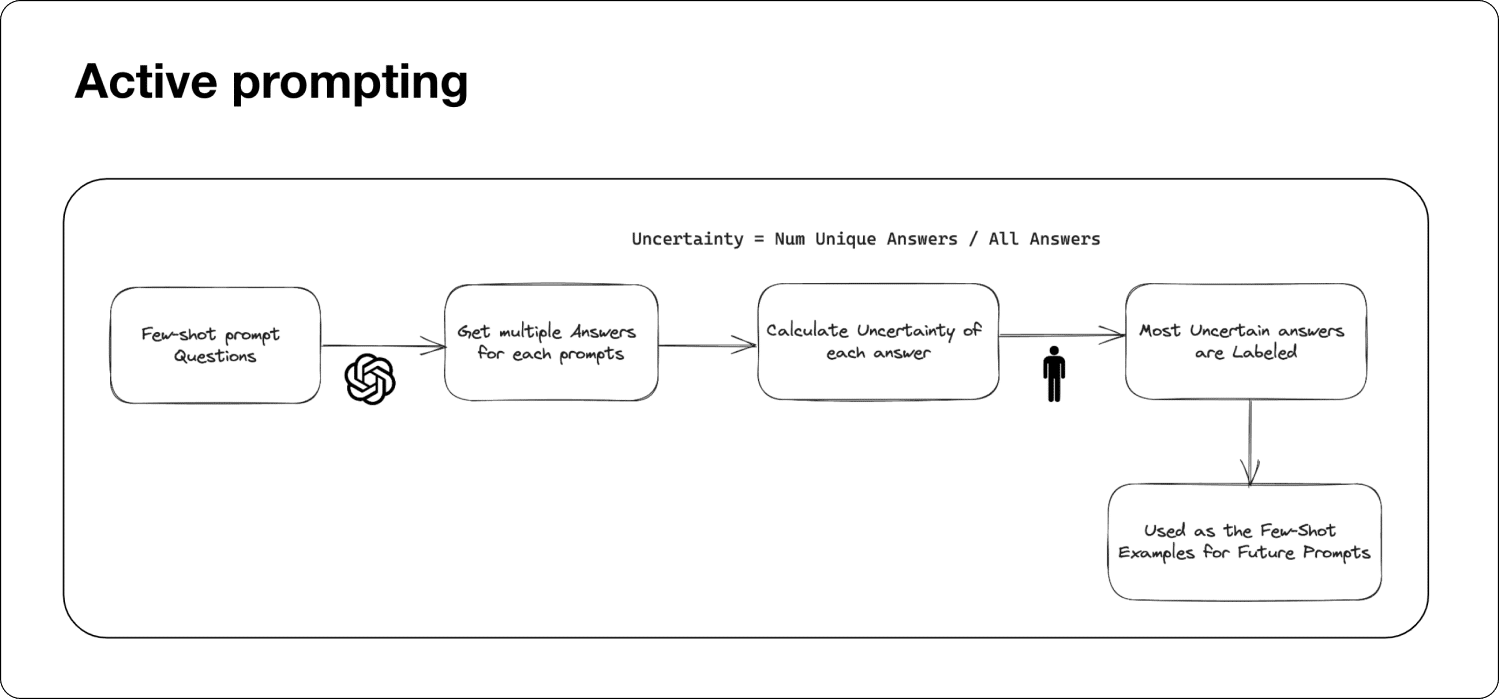
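The four Chain of Verification stages (draft, plan questions, answer them independently, revise) can be sketched as plain function calls. `llm` is any prompt-to-text function; the prompt wording and the fake model used in the usage lines are assumptions for illustration.

```python
def chain_of_verification(llm, query):
    """Draft, plan verification questions, answer them independently, then revise."""
    draft = llm(f"Answer the question:\n{query}")
    plan = llm(
        "List questions, one per line, that would check the facts in this answer.\n"
        f"Question: {query}\nAnswer: {draft}"
    )
    questions = [q.strip() for q in plan.splitlines() if q.strip()]
    # Each check sees only its own question, not the draft, so errors are not copied over.
    checks = [(q, llm(f"Answer concisely:\n{q}")) for q in questions]
    evidence = "\n".join(f"Q: {q}\nA: {a}" for q, a in checks)
    return llm(
        f"Original question: {query}\nDraft answer: {draft}\n"
        f"Verification results:\n{evidence}\n"
        "Write a final answer that is consistent with the verification results."
    )


# Usage with a fake model that records each prompt and answers by prompt type.
calls = []


def fake_llm(prompt):
    calls.append(prompt)
    if prompt.startswith("Answer the question"):
        return "UA 123 departs from gate B12 in Terminal 3."
    if prompt.startswith("List questions"):
        return "Which gate does UA 123 depart from?\nWhich terminal is gate B12 in?"
    if prompt.startswith("Answer concisely"):
        return "B12" if "gate does" in prompt else "Terminal 2"
    return "UA 123 departs from gate B12 in Terminal 2."


final = chain_of_verification(fake_llm, "Where does UA 123 depart from?")
```

Here the draft's wrong terminal is caught because the verification question about the terminal is answered without the draft in view.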

Active prompting

It aims to find the best examples automatically. It first takes a pool of candidate questions, has the LLM answer each one several times, calculates the uncertainty for each, and asks humans to label the most uncertain questions. These labeled questions, presumably the harder ones, are then used as few-shot examples.

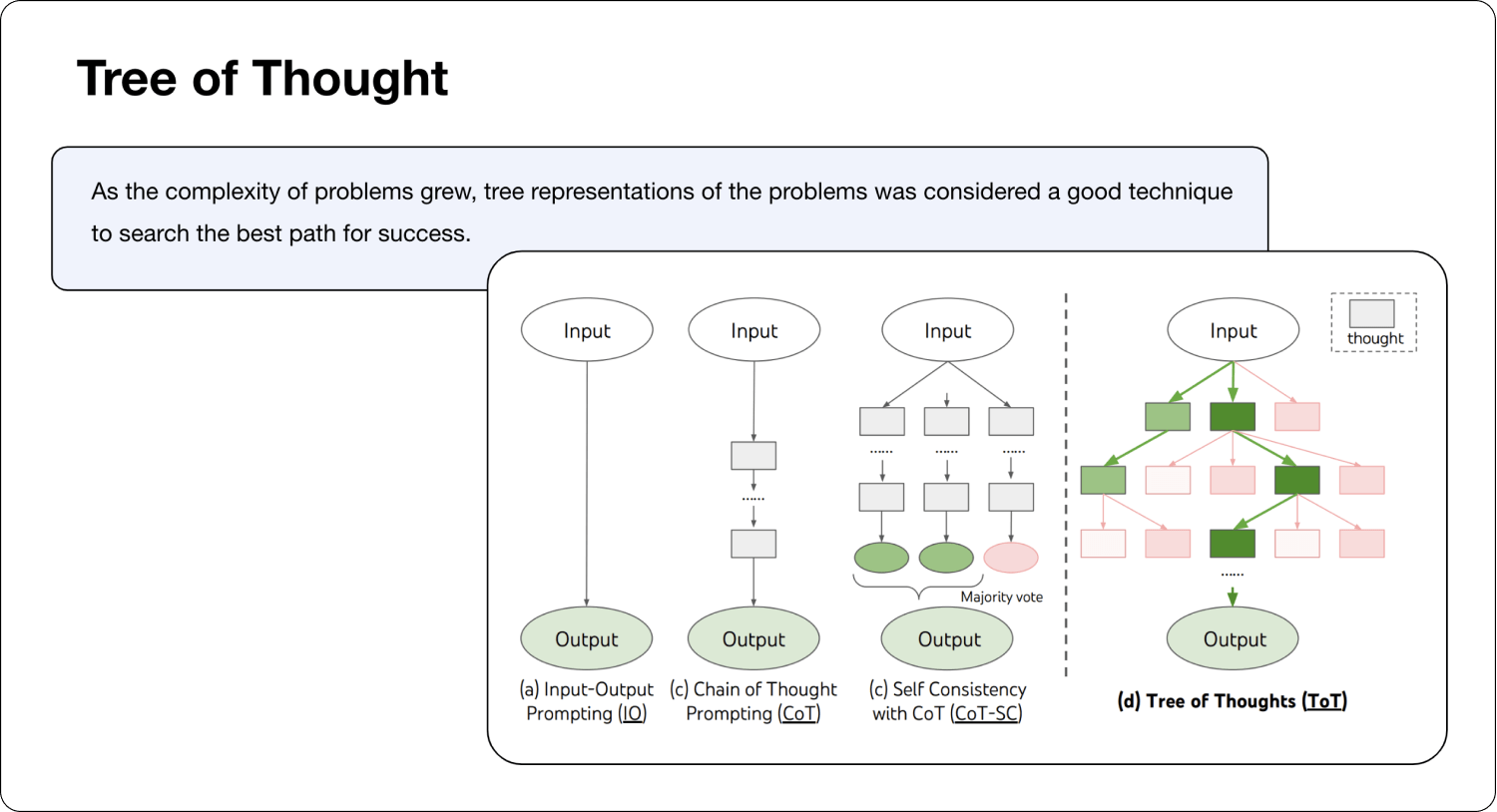
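The uncertainty step of active prompting can be sketched with a simple disagreement score: sample several answers per candidate question and count how many distinct answers come back. Disagreement is one of several possible uncertainty measures (entropy is another), and `llm_sample` plus the canned answers are hypothetical.

```python
def disagreement(answers):
    """Share of distinct answers among k samples: 1/k if all agree, 1.0 if all differ."""
    return len(set(answers)) / len(answers)


def select_for_annotation(llm_sample, questions, k=5, n=2):
    """Sample k answers per question and return the n most uncertain for human labeling."""
    scored = []
    for question in questions:
        answers = [llm_sample(question) for _ in range(k)]
        scored.append((disagreement(answers), question))
    scored.sort(key=lambda pair: pair[0], reverse=True)
    return [question for _, question in scored[:n]]


# Usage with canned samples standing in for a model run at non-zero temperature.
canned = {
    "Can I carry a power bank?": iter(["yes"] * 5),
    "Is my layover long enough?": iter(["yes", "no", "depends", "yes", "no"]),
    "Do I need a visa for transit?": iter(["no", "yes", "no", "no", "no"]),
}
picked = select_for_annotation(lambda q: next(canned[q]), list(canned))
```

The questions in `picked` go to human annotators, and their labeled answers become the few-shot examples.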

Tree of Thought

This automatically generates several candidate responses, or thoughts, at each step, including about additional information it needs. Each is evaluated automatically, either by another LLM or by some rule (a mathematical rule, for instance), and you pick the ones that look most promising. Following typical search algorithms, where you expand the highest-scoring candidates and follow through, you get a tree-like structure. That structure also allows backtracking when needed, along with other well-known and widely studied tree traversal techniques.

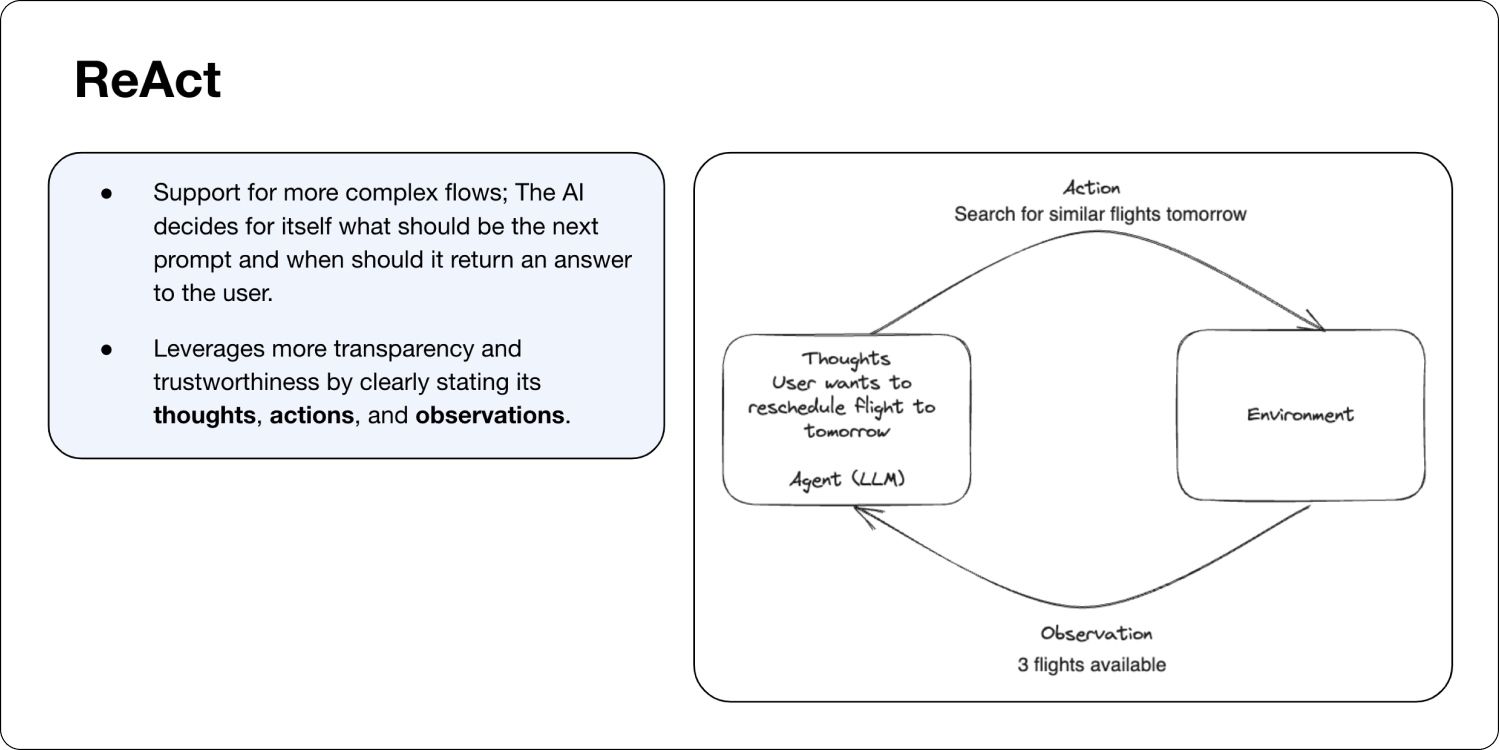
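A breadth-first version of Tree of Thought with a fixed beam is sketched below. Here `propose` and `score` are hypothetical stand-ins for the LLM that generates candidate thoughts and the evaluator (LLM or rule) that rates them; the toy problem of summing steps to 7 just keeps the example checkable. A depth-first variant would add the backtracking mentioned above.

```python
def tree_of_thoughts(root, propose, score, depth=3, beam=2):
    """Breadth-first search: expand every kept state, score the children,
    and keep only the `beam` most promising ones at each level."""
    frontier = [root]
    for _ in range(depth):
        children = [child for state in frontier for child in propose(state)]
        if not children:
            break
        frontier = sorted(children, key=score, reverse=True)[:beam]
    return max(frontier, key=score)


# Toy problem: pick steps of 1, 2, or 3 whose total lands exactly on 7.
TARGET = 7


def propose(state):
    """Candidate 'thoughts': extend the partial solution by one more step."""
    return [state + (step,) for step in (1, 2, 3)]


def score(state):
    """Evaluator: closer to the target is more promising."""
    return -abs(TARGET - sum(state))


best = tree_of_thoughts((), propose, score)
```

The beam width trades cost for coverage: a wider beam keeps more branches alive at the price of more model calls per level.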

ReAct

ReAct is an example of an autonomous agent. It iterates through thought, action, and observation until the agent decides to break out of the loop.

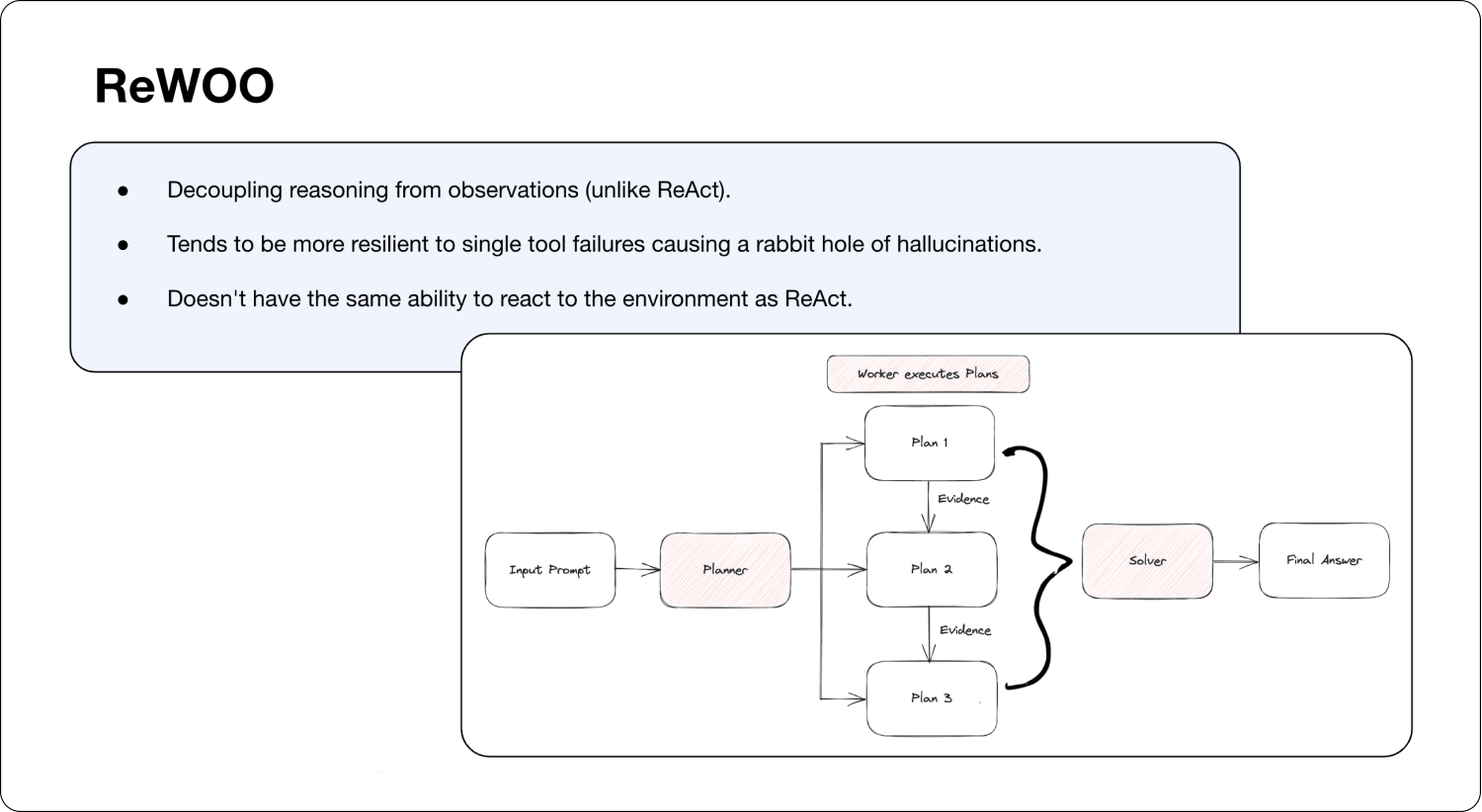
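The thought, action, and observation loop of ReAct can be sketched as follows. The `Action: tool[input]` and `Final Answer:` text conventions are assumptions loosely modeled on common ReAct-style prompts, and the scripted model and the `lookup_gate` tool are hypothetical placeholders.

```python
import re
from typing import Callable, Dict, Optional


def react(llm: Callable[[str], str], tools: Dict[str, Callable[[str], str]],
          question: str, max_steps: int = 5) -> Optional[str]:
    """Alternate Thought -> Action -> Observation until the model gives a final answer."""
    transcript = f"Question: {question}\n"
    for _ in range(max_steps):
        step = llm(transcript)  # model writes a Thought plus an Action or a Final Answer
        transcript += step + "\n"
        final = re.search(r"Final Answer:\s*(.+)", step)
        if final:
            return final.group(1).strip()  # the agent decided to leave the loop
        action = re.search(r"Action:\s*(\w+)\[(.*?)\]", step)
        if action is None:
            return None  # malformed step; stop rather than loop blindly
        name, arg = action.groups()
        observation = tools[name](arg) if name in tools else f"Unknown tool: {name}"
        transcript += f"Observation: {observation}\n"
    return None  # step budget exhausted


# Usage with a scripted "model" and one toy tool.
script = iter([
    "Thought: I need the gate for this flight.\nAction: lookup_gate[UA 123]",
    "Thought: The lookup says B12.\nFinal Answer: UA 123 departs from gate B12.",
])
answer = react(lambda transcript: next(script), {"lookup_gate": lambda flight: "B12"},
               "Which gate does UA 123 use?")
```

The `max_steps` budget is a practical safeguard: an autonomous loop should always have a hard exit in case the model never produces a final answer.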

ReWOO

Reasoning WithOut Observation. Given the input prompt, the LLM plans ahead everything it will do, and the output of each step in the plan can be passed as input to the next one. It then hands the plan to a worker, which executes the steps, and a solver, which aggregates the results into a final answer. ReWOO still gives accurate results, but with much lower token usage, around 60% less.

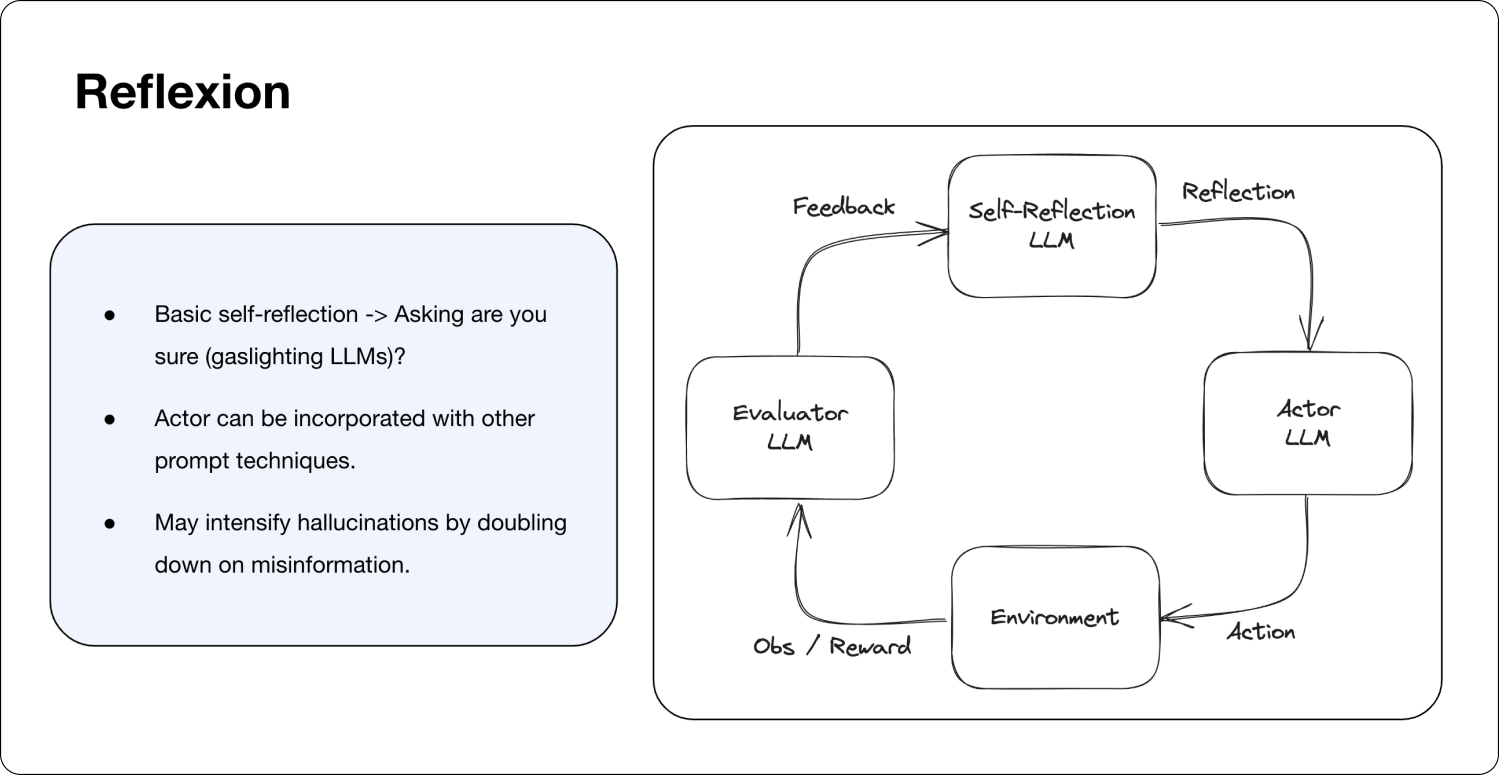
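ReWOO’s split into planner, worker, and solver can be sketched as below. The planner is called once and writes every step up front, using placeholders like `#E1` for results that later steps depend on; the worker fills them in by calling tools, and the solver sees everything at the end. The plan syntax loosely follows the ReWOO style, but the tools, plan, and fake planner and solver here are hypothetical.

```python
import re

PLAN_STEP = re.compile(r"(#E\d+)\s*=\s*(\w+)\[(.*)\]")
EVIDENCE_REF = re.compile(r"#E\d+")


def rewoo(planner, tools, solver, task):
    """Plan once, execute every step with tools, then solve from all the evidence."""
    plan = planner(task)  # a single LLM call writes the full plan up front
    evidence = {}
    for var, tool, arg in PLAN_STEP.findall(plan):
        # Substitute results of earlier steps (e.g. "#E1") into this step's input.
        arg = EVIDENCE_REF.sub(lambda m: evidence.get(m.group(0), m.group(0)), arg)
        evidence[var] = tools[tool](arg)  # worker: no LLM call between tool runs
    worked = "\n".join(f"{var}: {value}" for var, value in evidence.items())
    return solver(f"Task: {task}\nPlan:\n{plan}\nEvidence:\n{worked}")


# Usage with a hard-coded plan, toy tools, and a solver that echoes its prompt.
PLAN = (
    "Plan: Find the departure gate.\n#E1 = gate_of[UA 123]\n"
    "Plan: Find the terminal for that gate.\n#E2 = terminal_of[#E1]"
)
tools = {
    "gate_of": lambda flight: "B12",
    "terminal_of": lambda gate: "Terminal 2" if gate == "B12" else "unknown",
}
result = rewoo(lambda task: PLAN, tools, lambda prompt: prompt, "Which terminal does UA 123 use?")
```

The token savings come from the structure: intermediate observations never flow back through the model, which is called only twice (plan and solve) no matter how many tools run.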

Reflexion

Agents hallucinate frequently, and a single hallucination can steer the LLM onto a trajectory utterly opposite to our initial goal. In Reflexion, one LLM evaluates the situation and determines whether it was a hallucination. Another LLM then reflects on it and corrects the trajectory, and the process repeats to keep correcting the course of the agent’s thinking.

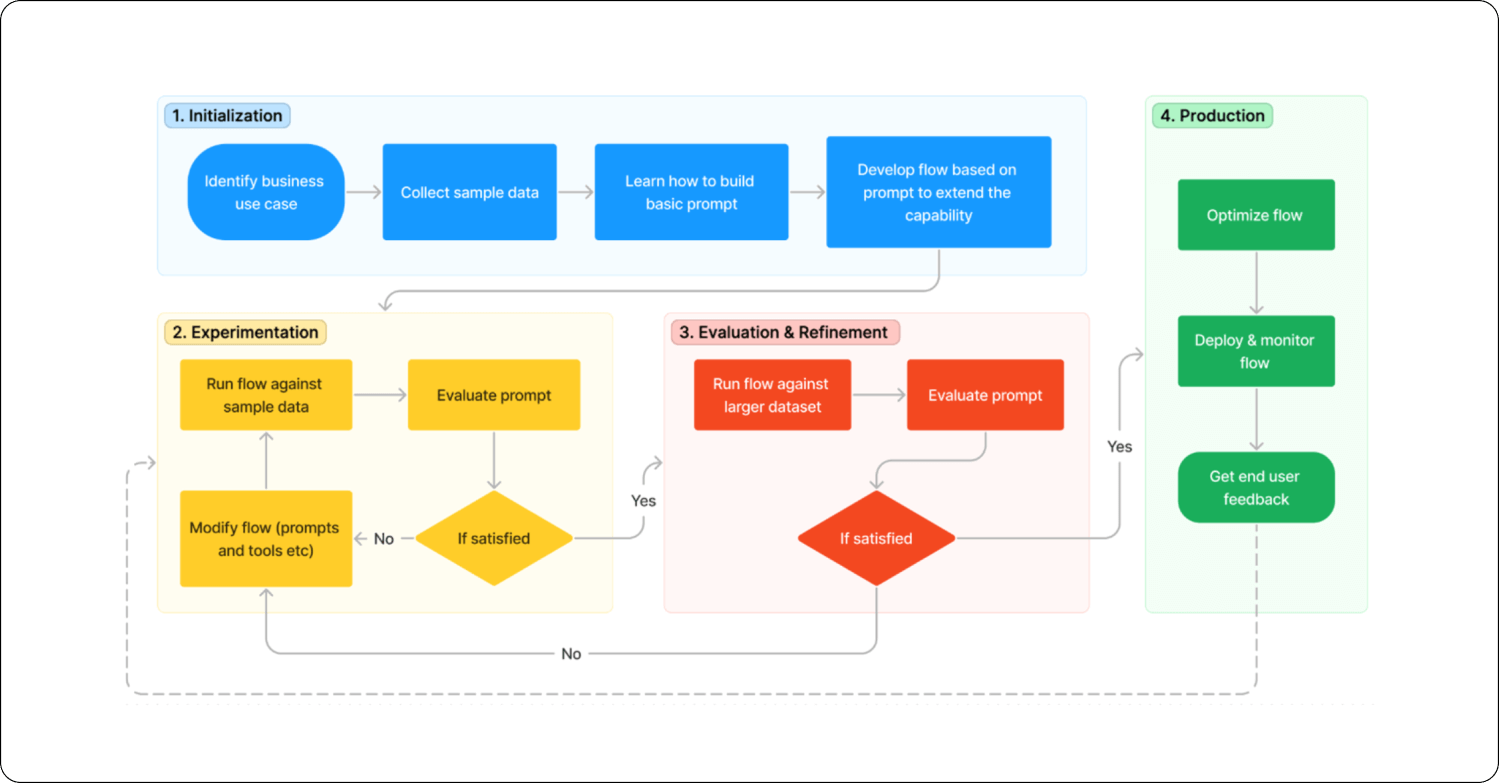
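Reflexion’s cycle of evaluating, reflecting, and retrying can be sketched with three callables: an actor that attempts the task, an evaluator that judges the attempt, and a reflector that writes a lesson the actor sees on the next try. All three are hypothetical placeholders for LLM calls (or, for the evaluator, tests or rules).

```python
def reflexion(actor, evaluator, reflector, task, max_trials=3):
    """Attempt, evaluate, reflect on failures, and retry with the reflections in memory."""
    reflections = []
    attempt = None
    for _ in range(max_trials):
        attempt = actor(task, reflections)
        ok, feedback = evaluator(attempt)
        if ok:
            break
        reflections.append(reflector(task, attempt, feedback))
    return attempt, reflections


# Usage: the toy actor only gets it right once it has a reflection to work from.
def actor(task, reflections):
    return "gate B12" if reflections else "gate A1"


def evaluator(attempt):
    return ("B12" in attempt, "The booking record says B12.")


def reflector(task, attempt, feedback):
    return f"'{attempt}' was wrong. {feedback} Check the booking before answering."


answer, memory = reflexion(actor, evaluator, reflector, "Which gate does UA 123 use?")
```

Capping `max_trials` keeps a persistently wrong agent from looping forever; the accumulated reflections are still returned for inspection.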

From ML to LLMs

Unlike traditional ML, where you do a lot of planning and iteration until your model achieves precisely what you want, with LLMs you need to embrace stochastic behavior. It’s all about iteration and experimentation, and experimentation means moving fast and breaking things. Focus on trying out these prompt engineering techniques and making sure you have the right ones in place, rather than planning too much. In the same vein, don’t over-fixate on building the right prompt or the right pipeline from the start. Try a lot of things, break things, make comparisons, and embrace the stochastic behavior of LLMs.

As practitioners, we need to break out of the habit that we have from more traditional machine learning, where we invest a lot in the left end of the MLOps lifecycle. With LLMs and prompt engineering, the heavy lifting shifts more to the right, closer to what we’re doing in production. Experimenting and rapidly understanding where we’re failing and using that as an inflection point for further optimization is key for rapid iteration that gets us closer to success with each step.

Ready to get started with Superwise?

Head over to the Superwise platform and get started with easy, customizable, scalable, and secure model observability for free with our community edition.

Prefer a demo?

Visit this link, and our team will show what Superwise can do for your ML and business.