Time and time again, we hear about bias in machine learning models. A cursory glance at the news turns up plenty of recent examples, notably the Apple Card’s gender discrimination in credit approval and Amazon’s biased recruiting tool, or, more chillingly, facial-recognition algorithms that claim to predict criminality.

While you may come across blanket statements claiming that “AI is biased,” it’s important to remember that bias is not inherent to machine learning as a technology. Rather, it reflects our own human biases, because these algorithms learn to predict the future from data about people and our behavior.

This article discusses what bias is, how it’s related to fairness, why it’s so important to be familiar with data attributes, and how they can impact our ML models. We’ll also take a closer look at examples of the different kinds of bias and how to mitigate them. Our goal is to help you understand why and how bias can easily creep into your model’s decision-making process, even after you go to production, and what it takes to keep your decisions fair and accurate throughout the entire ML lifecycle.

Posts in this series:

- Making sense of bias in machine learning

- A gentle introduction to ML fairness metrics

- Dealing with machine learning bias

Is AI biased by definition?

Here’s how the Oxford Dictionary defines bias: “prejudice in favor of or against one thing, person, or group compared with another.”

Now, this is a bit of a pickle, because “prejudice in favor of or against one thing, person, or group compared with another” is literally what an ML model does. Let’s swap out some words in that definition and say, “Prejudice loan approvals in favor of applicants likely to repay the loan compared to applicants likely to default.” Not only is this statement perfectly acceptable, it is also a valid real-world example of how ML should be used. That is, unless the prediction is made in a way that is considered to be unfair.

Bias vs. fairness

Bias and fairness are often discussed together but are not the same. Bias in ML refers to systematic errors or inaccuracies in the learning process that can lead to incorrect predictions or decisions that discriminate against certain groups. This bias can be introduced by different factors, including the quality and quantity of data used for training, the choice of algorithm, or the assumptions made during model development. For example, if a model is trained on data that doesn’t represent the population it will be used on, its predictions will likely be biased.

Fairness, on the other hand, is the absence of unjust treatment. Fairness is a social and ethical concept that requires careful consideration of different factors, such as the impact of the model’s predictions on different demographic groups, the historical context of the problem being solved, and the potential consequences of the model’s predictions. For example, suppose a bank uses an ML model to predict whether an applicant is likely to default on their loan. In that case, it would be fair to base this decision on objective variables like the person’s credit score, income, and employment history. If the model relies on factors outside the person’s control, like race or zip code, then it will be biased against certain groups of people.

In short, fairness is closely related to bias because biased models can result in the unfair treatment of individuals or groups. As a result, it is essential that we consider both bias and fairness when developing and deploying ML models and that we take appropriate measures to identify issues that may arise.

Protected attributes

There is no universally accepted framework for AI regulation today, and many countries are still developing their own policies and guidelines. But, in general, regulating bias in ML and understanding what is fair or unfair should be based on those same laws and policies aimed at preventing discrimination and promoting equality. These usually address various characteristics such as race, gender, religion, sexual orientation, and others.

The characteristics most commonly associated with bias in artificial intelligence systems are referred to as ‘protected fields.’ The exact list can vary depending on the context and the particular application, but some common ones include:

- Race

- Gender

- Age

- Religion

- Sexual orientation

- National origin

- Disability status

- Marital status

- Pregnancy status

- Genetic information

- Political views or affiliations

- Sexual identity

- Color

- Ethnicity

- Socioeconomic status

- Education level

- Geographical location

- Occupation

- Criminal history

- Health status

It’s not the protected fields themselves that are biased, but rather how they are used in the models. Nor can you simply eliminate them from the equation to ensure fairness. For example, it could be fatal to leave out a patient’s health or pregnancy status when prescribing drugs. To complicate things further, what’s considered ‘protected’ may differ depending on where you are in the world, and these policies will likely change as people learn more about fairness and discrimination.

Proxy attributes

Not all sensitive information is as explicit as race, gender, and religion. Proxy attributes are features that are not protected themselves but correlate with a protected attribute, so a model can still use them to make predictions or decisions that track that attribute. Here are some examples of common proxy attributes:

| Attribute | Can be a proxy for |
| --- | --- |
| Zip code | Income, socioeconomic status |
| Neighborhood | Race, income, socioeconomic status |
| Occupation | Gender, race, socioeconomic status |
| Marital status | Gender, sexual orientation, family status |
| Education level | Race, gender, socioeconomic status |
| Age | Income, disability status |
| Name | Race, ethnicity, religion |
| Physical characteristics | Race, ethnicity, gender, disability status |

The use of proxy attributes can perpetuate existing biases and discrimination, so you should carefully consider the features used in your machine learning models. You’ll also need to consider removing or modifying features that can serve as proxy attributes.
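A quick, rough way to check whether a feature is acting as a proxy is to measure how well that feature alone predicts the protected attribute. The sketch below is a minimal heuristic under stated assumptions: the records, the `zip` and `group` fields, and the `proxy_strength` helper are all hypothetical. It guesses the majority protected value within each feature value and compares that accuracy with always guessing the overall majority; a large gap suggests the feature leaks protected information.

```python
from collections import Counter, defaultdict

def proxy_strength(records, feature, protected):
    """Return (baseline_accuracy, proxy_accuracy) for predicting `protected` from `feature`.

    baseline: always guess the most common protected value overall.
    proxy: guess the most common protected value within each feature value.
    """
    overall = Counter(r[protected] for r in records)
    baseline = overall.most_common(1)[0][1] / len(records)

    by_value = defaultdict(Counter)
    for r in records:
        by_value[r[feature]][r[protected]] += 1
    # For each feature value, the best single guess is that value's majority class.
    correct = sum(counts.most_common(1)[0][1] for counts in by_value.values())
    return baseline, correct / len(records)

# Hypothetical applicants: zip code is strongly associated with group membership.
applicants = (
    [{"zip": "10001", "group": "A"}] * 45
    + [{"zip": "10001", "group": "B"}] * 5
    + [{"zip": "20002", "group": "A"}] * 10
    + [{"zip": "20002", "group": "B"}] * 40
)

baseline, proxy = proxy_strength(applicants, "zip", "group")
print(f"baseline={baseline:.2f} proxy={proxy:.2f}")  # baseline=0.55 proxy=0.85
```

Here zip code alone identifies group membership 85% of the time versus a 55% baseline, which would be a strong signal to review the feature. High-cardinality features (such as a unique ID) can score perfectly by memorization, so for a real audit you would evaluate on held-out data or use a statistic such as mutual information.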

Types of bias in machine learning

The performance of machine learning algorithms depends on the data used to train them. If the training data contains biases, such as prejudice, stereotypes, or discrimination, the algorithms can learn and amplify these biases. Given that our own human history is rich with bias, at some point, you’re likely to encounter some type of bias from one or more of the categories listed below. And when that happens, you’ll need to take action to mitigate it.

Reporting bias

Reporting bias can occur when the results of a model are selectively reported, either intentionally or unintentionally, in a way that misrepresents the model’s true performance. This can happen if only the best results are reported while the failures or limitations of the model are not reported. For example, a company may report high accuracy rates for a machine learning model used to screen job candidates but ignore the fact that the model is biased against certain groups of applicants. This can lead to a false sense of confidence in the model’s performance, ultimately resulting in unfair treatment of job candidates.

Automation bias

Automation bias occurs when we, as humans, blindly trust the decisions made by ML algorithms without critically evaluating their accuracy or fairness. This happens when people assume that the algorithm is more objective or accurate than a human decision-maker, even when there is evidence to the contrary. Imagine a medical diagnosis system that uses machine learning to detect certain diseases based on a patient’s symptoms and medical history. If a doctor relies solely on the output of the ML model without verifying the results or considering other factors, they may be susceptible to automation bias. This can lead to incorrect diagnoses or treatments, especially if the model is biased or trained on incomplete or inaccurate data.

Selection bias

Selection bias in machine learning occurs when the sample data used to train or test the ML model is not representative of the target population. This can lead to inaccurate or biased predictions and decisions because the model is trained on incomplete or unrepresentative data. For example, if a bank’s system for predicting good loan candidates has only been trained on data from people aged 35 to 60, it is unlikely to perform well when younger people aged 25 to 35 apply for loans.

Coverage bias is a type of selection bias that occurs when the data used to train the model doesn’t cover the entire target population. This can happen, for example, if the data is only collected from certain geographic regions or if certain subgroups of the population are underrepresented in the data, as in the example above.
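To make the age example concrete, one simple coverage check compares how each age band is represented among applicants versus in the training data. This is a minimal sketch: the 10-year banding, the `coverage_report` helper, and the one-person-per-age data are hypothetical, and the ranges simply mirror the 35-to-60 training population from the loan example.

```python
from collections import Counter

def age_band(age):
    """Map an age to a hypothetical 10-year band label, e.g. 37 -> '30-39'."""
    low = (age // 10) * 10
    return f"{low}-{low + 9}"

def coverage_report(train_ages, applicant_ages):
    """For each band seen among applicants, compare its applicant share with its training share."""
    train = Counter(age_band(a) for a in train_ages)
    apps = Counter(age_band(a) for a in applicant_ages)
    return {
        band: {
            "applicant_share": apps[band] / len(applicant_ages),
            # Counter returns 0 for bands that never occur in the training data.
            "train_share": train[band] / len(train_ages),
        }
        for band in sorted(apps)
    }

train_ages = list(range(35, 61))      # training data: ages 35-60, as in the example
applicant_ages = list(range(25, 61))  # applicants now include ages 25-34

for band, s in coverage_report(train_ages, applicant_ages).items():
    flag = "  <- not covered" if s["train_share"] == 0 else ""
    print(f"{band}: applicants {s['applicant_share']:.2f}, training {s['train_share']:.2f}{flag}")
```

The 20-29 band is flagged because it never appears in training. Coarse bands can also hide partial gaps: the 30-39 band looks covered even though ages 30 to 34 never appear in the training data, so band widths deserve care.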

Non-response or participation bias is another type of selection bias that occurs when certain individuals or groups are less likely to participate in a study or provide data. This can lead to a biased sample that does not accurately represent the target population. For example, if a survey is only distributed online, it may not capture the opinions or experiences of individuals without internet access.
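When response rates differ by group, one common adjustment is to weight each respondent by the inverse of their group’s response rate, so under-responding groups count proportionally more. The sketch below assumes you know how many people in each group were invited; the group names, counts, and the `nonresponse_weights` helper are hypothetical.

```python
def nonresponse_weights(invited, responded):
    """Weight each group by invited / responded, i.e. 1 / response rate."""
    weights = {}
    for group, n_invited in invited.items():
        n_responded = responded.get(group, 0)
        if n_responded == 0:
            # With no respondents there is nothing to reweight; the group is simply missing.
            raise ValueError(f"no responses from group {group!r}")
        weights[group] = n_invited / n_responded
    return weights

# Hypothetical survey: equal numbers invited, but group_b responds far less often.
invited = {"group_a": 1000, "group_b": 1000}
responded = {"group_a": 400, "group_b": 100}

weights = nonresponse_weights(invited, responded)
weighted_counts = {g: responded[g] * w for g, w in weights.items()}
print(weights)          # {'group_a': 2.5, 'group_b': 10.0}
print(weighted_counts)  # {'group_a': 1000.0, 'group_b': 1000.0}
```

Reweighting cannot help when a group was never reached at all, as in the online-only survey, and it assumes that respondents within a group resemble that group’s non-respondents.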

Sampling bias is a third type of selection bias that occurs when the sample data used to train the model is not randomly selected from the target population. This can lead to a sample that is not representative of the population and may overemphasize certain characteristics or subgroups. For example, if a study only collects data from a specific age range or socioeconomic status, the results may not accurately reflect the experiences of other groups.
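A common guard against sampling bias is proportional stratified sampling: split the population into strata by an attribute you care about and sample randomly within each, so every subgroup appears in proportion to its size. The sketch below assumes the full population is available as a list and that the stratifying attribute (a hypothetical `income` field) is known for everyone; `stratified_sample` is an illustrative helper, not a library function.

```python
import random
from collections import Counter, defaultdict

def stratified_sample(population, key, n, seed=0):
    """Draw roughly n items, giving each stratum a share proportional to its size."""
    rng = random.Random(seed)  # fixed seed so the draw is reproducible
    strata = defaultdict(list)
    for item in population:
        strata[item[key]].append(item)
    sample = []
    for members in strata.values():
        # Rounding per stratum means the total can differ from n by a few items.
        k = round(n * len(members) / len(population))
        sample.extend(rng.sample(members, k))
    return sample

# Hypothetical population: 70% low income, 30% high income.
population = [{"id": i, "income": "low"} for i in range(700)] + [
    {"id": i, "income": "high"} for i in range(700, 1000)
]
sample = stratified_sample(population, "income", 100)
print(Counter(item["income"] for item in sample))  # Counter({'low': 70, 'high': 30})
```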

Group attribution bias

Group attribution bias occurs when individuals make assumptions or judgments about a person or group based on their membership in a particular social group or category rather than on their individual characteristics or actions. This can lead to unfair or inaccurate decisions, especially in situations where group membership is irrelevant or should not be a factor.

In-group bias is a type of group attribution bias that occurs when individuals have a preference for people who are similar to them or belong to the same social group. This can lead to unfair treatment or discrimination against individuals who are perceived as being different or not belonging to the in-group. In the context of machine learning, in-group bias can manifest in the form of biased decision-making or predictions that favor certain groups over others.

Out-group homogeneity bias is another type of group attribution bias that occurs when individuals perceive members of an out-group as being more similar to each other than they really are. This can lead to stereotyping and generalization of out-group members, which can result in discriminatory or unfair treatment. In the context of machine learning, out-group homogeneity bias can manifest in the form of biased predictions or decisions that treat all members of an out-group as if they are the same rather than recognizing individual differences and characteristics.

Implicit bias

Implicit bias refers to people’s unconscious or automatic associations and attitudes toward certain groups or categories. These biases can influence decision-making and behavior, often without people even realizing it. In the context of machine learning, implicit bias can manifest in the form of biased data or models that perpetuate unfair or discriminatory practices. For example, in a system that vets job applications, the algorithm may learn that candidates who have studied at certain universities or have certain degrees are more likely to be successful in the job. Suppose the historical data shows that more men than women have these qualifications. In that case, the algorithm may learn to prioritize male candidates over female candidates, even if the qualifications are not actually relevant to the job.

Confirmation bias is a type of bias that occurs when individuals selectively interpret information or evidence in a way that confirms their pre-existing beliefs or expectations. This can lead to overemphasizing certain aspects of the data while ignoring or downplaying other important information. In the context of machine learning, confirmation bias can lead to models that only consider certain features or variables while ignoring others that may be equally or more important.

Experimenter’s bias is another type of bias that occurs when the experimenter’s beliefs, expectations, or actions influence the study’s outcome. This can manifest in different ways, such as how the data is collected or analyzed or how the results are interpreted or presented. In the context of machine learning, a data scientist’s bias can lead to models that overemphasize certain variables or features or models that are interpreted in a way that confirms the experimenter’s pre-existing beliefs.

Bias is a business constraint, not a model constraint!

There is no one way to address bias because fairness is relative. No single fairness metric can capture all aspects of fairness, and the appropriate metric to use will depend on the specific problem and context. Therefore, bias is a business constraint, not a model constraint. How your business measures fairness and bias will be shaped by laws and guidelines, such as affirmative action, or even by internal business policies derived from ethics guidelines, as they apply to your industry and business context.

Bias in AI examples – AI in education

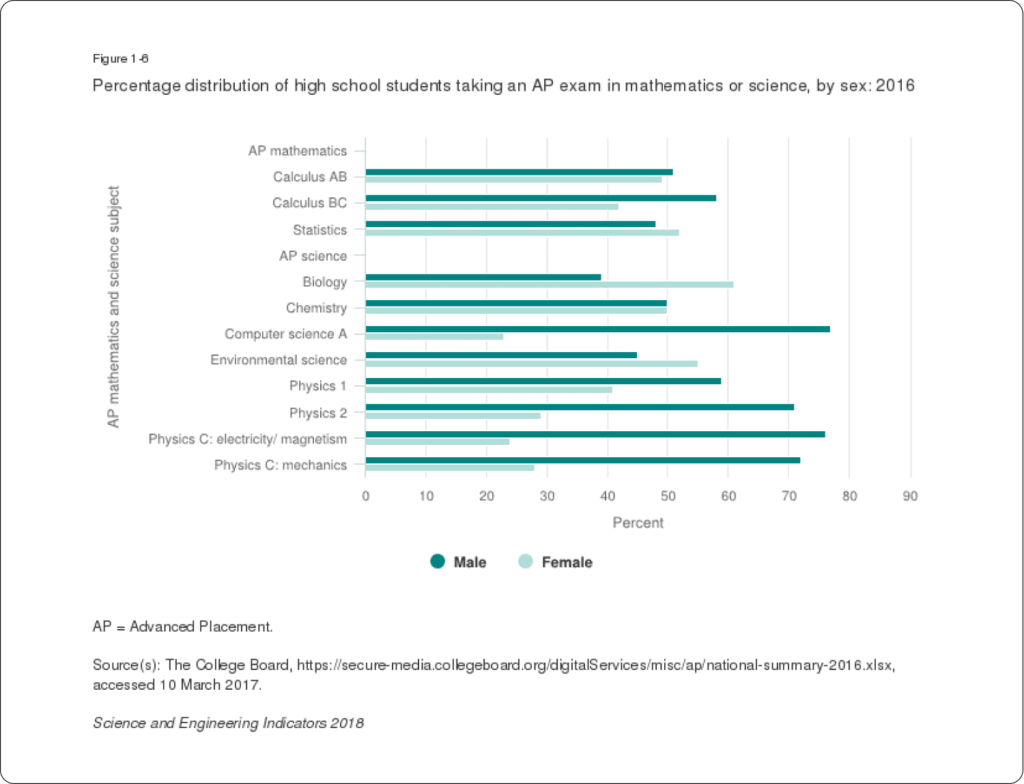

Let’s imagine a use case where AI is used to determine which recent high-school graduates should be interviewed for a STEM training program. The criteria that the program wants the model to use in this decision-making process are STEM courses, grades, AP participation, and performance during high school. For simplicity’s sake, we’ll assume that the only bias issue is sex (male vs. female). This is not an accurate reflection of reality because bias is much more convoluted and nuanced in the real world.

* Please note that the examples do not constitute a legal recommendation or guideline for this particular use case. This example only illustrates some potential considerations that practitioners should address in the context of bias and fairness in ML.

The data suggests that male students are more likely to pursue advanced-level math and science courses, whereas female students are more likely to take biology and environmental science courses. Because far fewer female students take advanced STEM courses, the model will inevitably be trained on more data for males, which will skew the recommendations toward male students. There are many ways to address this bias and ensure the system treats all candidates fairly.

Approach 1: As long as my AI doesn’t know about sex, the system is fair.

Approach 2: As long as graduates of both sexes, with similar qualifications, have an equal chance of being invited for an interview, the system is fair.

At first glance, both approaches seem reasonable and fair, but they don’t consider the actual distribution of male and female high school students taking AP exams in math or science. Out of 11 AP courses, 4 have a male/female ratio close to 80/20. If AP exams are important criteria in our model’s decision-making process, we may consider sex=female an underprivileged group that should be invited at a rate above a specified threshold.

Approach 3: As long as female graduates are invited for interviews at a higher rate than some predefined threshold, the system is fair.

All three approaches are valid, but not all will produce fair and accurate results in every context.
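To see how the three approaches can disagree, here is a minimal sketch that checks each one against the same set of interview decisions. Everything in it is hypothetical: the feature names, the `qualified` flag (assumed to come from the program’s own criteria), the 0.05 tolerance for Approach 2, and the 0.3 threshold for Approach 3.

```python
def invite_rate(rows):
    """Share of rows that were invited for an interview (0.0 for an empty group)."""
    return sum(r["invited"] for r in rows) / len(rows) if rows else 0.0

def approach_1(model_features):
    # Approach 1 ("fairness through unawareness"): the model never sees sex.
    return "sex" not in model_features

def approach_2(rows, tolerance=0.05):
    # Approach 2: among similarly qualified graduates, both sexes are invited at similar rates.
    qualified = [r for r in rows if r["qualified"]]
    male = invite_rate([r for r in qualified if r["sex"] == "M"])
    female = invite_rate([r for r in qualified if r["sex"] == "F"])
    return abs(male - female) <= tolerance

def approach_3(rows, threshold=0.3):
    # Approach 3: female graduates are invited at a rate above a predefined threshold.
    return invite_rate([r for r in rows if r["sex"] == "F"]) > threshold

def make(sex, qualified, invited, n):
    """Build n identical hypothetical graduate records."""
    return [{"sex": sex, "qualified": qualified, "invited": invited} for _ in range(n)]

# Hypothetical cohort: far fewer female graduates meet the AP-heavy criteria.
rows = (
    make("M", True, True, 32) + make("M", True, False, 8) + make("M", False, False, 10)
    + make("F", True, True, 8) + make("F", True, False, 2) + make("F", False, False, 40)
)
features = ["stem_courses", "grades", "ap_participation", "performance"]
print(approach_1(features), approach_2(rows), approach_3(rows))  # True True False
```

The same decisions pass Approaches 1 and 2, because qualified men and women are both invited at an 80% rate, yet fail Approach 3, because only 16% of all female graduates are invited. Which result counts as fair is a business and policy decision that the code cannot settle.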

Bias in AI examples – AI in loan approval

A 2019 study, Consumer-Lending Discrimination in the FinTech Era, presents a more nuanced example that addresses both regulation and bias by examining discrimination and algorithms in the context of how the GSEs (government-sponsored enterprises) price mortgage credit risk.

In the United States, there are laws that prohibit lenders from discriminating against minorities when they’re considering someone’s ability to repay a loan. However, there are still two ways that bias can occur: lenders may reject loan applications from certain minority groups more often, or they may charge higher interest rates for loans to these groups.

So if a lender receives a government guarantee that it won’t lose money if a borrower doesn’t repay the loan, then any loan rejections or differences in interest rates between borrowers with the same credit score and loan-to-value ratio can’t be fully explained by differences in the borrowers’ risk of default. Instead, these differences are driven by other factors, such as the lender’s bias against certain groups of people or a pricing strategy aimed at making more money.

Simply put, the authors suggest the following:

- If a loan that would qualify for GSE approval is denied, that denial likely reflects a biased decision.

- All legitimate business variables are handled within the GSE pricing grid, so any additional correlation between loan pricing and race or ethnicity within the grid is discrimination. For example, charging a premium interest rate based on geography might seem ‘fair’ if it applies equally to majority and minority borrowers, but if geography correlates with minority status, the practice is still biased against the minority class, even if unintentionally.

- To qualify as a legitimate business necessity, a proxy variable should not predict a protected characteristic, even after adjusting for hidden fundamental credit-risk variables.
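The pricing-grid argument suggests a simple check: compare interest rates for minority and majority borrowers within the same grid cell, where the legitimate credit-risk variables are held fixed. The sketch below is a simplified illustration of that idea, not the authors’ estimation method; the field names, bucket labels, and rates (in basis points) are hypothetical.

```python
from collections import defaultdict
from statistics import mean

def within_cell_gaps(loans):
    """Mean minority-minus-majority rate (bps) for each (credit score, LTV) grid cell."""
    cells = defaultdict(lambda: {"minority": [], "majority": []})
    for loan in loans:
        cell = (loan["score_bucket"], loan["ltv_bucket"])
        cells[cell][loan["group"]].append(loan["rate_bps"])
    gaps = {}
    for cell, rates in cells.items():
        # Only cells containing both groups support a like-for-like comparison.
        if rates["minority"] and rates["majority"]:
            gaps[cell] = mean(rates["minority"]) - mean(rates["majority"])
    return gaps

# Hypothetical loans; every loan in a cell carries the same GSE-priced credit risk.
loans = [
    {"score_bucket": "720-739", "ltv_bucket": "80-85", "group": "majority", "rate_bps": 400},
    {"score_bucket": "720-739", "ltv_bucket": "80-85", "group": "majority", "rate_bps": 410},
    {"score_bucket": "720-739", "ltv_bucket": "80-85", "group": "minority", "rate_bps": 415},
    {"score_bucket": "720-739", "ltv_bucket": "80-85", "group": "minority", "rate_bps": 425},
    {"score_bucket": "760-779", "ltv_bucket": "60-70", "group": "majority", "rate_bps": 380},
]
print(within_cell_gaps(loans))
```

In the first cell, minority borrowers pay 15 basis points more on average with identical grid inputs; the second cell is skipped because it contains no minority borrowers. With real data, you would also need enough loans per cell for a difference to be meaningful.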

If we tie this back to the approaches illustrated in the previous section:

Approaches 1 and 3, which ignore race/ethnicity or ensure a higher rate of acceptance, do not apply in this case, because lenders may legally use variables that correlate with minority status as long as those variables do not independently predict the protected class.

Approach 2 is the better fit, because minority borrowers have an equal chance of being approved or denied when they show the same level of creditworthiness.

Is there a trade-off between fairness and accuracy?

Within the context of ML, there is a long-held axiom that to achieve fairness, one needs to sacrifice accuracy. In the real world, the two may well conflict more often than not. Yet they are not inherently opposed to each other; they have simply been at odds historically. A growing body of research challenges the assumption that fairness makes a machine learning system less accurate.

Suggested reading:

- A technique to improve both fairness and accuracy in artificial intelligence, MIT

- Fighting discrimination in mortgage lending, MIT

- Machine Learning Can Be Fair and Accurate, CMU

- Is there a trade-off between fairness and accuracy? A perspective using mismatched hypothesis testing, ICML

As practitioners, we need to recognize that the perceived conflict between fairness and accuracy in our ML decision-making may rest on a correlation-causation fallacy, and strive, together with business, risk, and compliance stakeholders, to balance our results equitably so they do not negatively impact groups within our population.

Identifying bias in ML

Here are some tips on identifying bias in an operational setting.

- Review the training data: You can introduce bias during data collection without realizing it. If the training data doesn’t represent the real-world target population, the model will have trouble generalizing and may produce biased results.

- Audit the algorithm: Make sure to review the design of the algorithm and any assumptions it makes so you can catch potential sources of bias. For example, some algorithms may have biases towards variables such as gender or age group or use default values that are inappropriate for your problem.

- Evaluate model performance: Remember to evaluate your model’s performance on different population subgroups to see how this may affect the results. For example, if the model has a higher error rate for males, it may be biased against that group.

- Use fairness metrics: Fairness metrics can help you assess the model’s results. Some apply to the data and others to the model itself; some measure information within a group, while others compare differences across groups. Common fairness metrics include demographic parity (also called statistical parity), which checks that each group receives favorable outcomes at the same rate, and equal opportunity, which checks that individuals who merit a favorable outcome have the same chance of receiving it regardless of their group.

- Conduct stakeholder reviews: Be sure you involve stakeholders from different backgrounds to review the model and its results. It’s always a good idea to bring in a fresh look to help identify any unintended results or possible sources of bias.
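The subgroup evaluation and fairness-metric tips above can be sketched in a few lines of plain Python. This minimal version assumes binary labels and predictions where 1 is the favorable outcome (for example, an approved loan); the helper names and data are hypothetical. It computes each group’s selection rate, true positive rate, and error rate, then the demographic (statistical) parity difference and the equal opportunity difference between two groups.

```python
def group_metrics(y_true, y_pred, groups):
    """Per-group selection rate, true positive rate, and error rate (1 = favorable)."""
    out = {}
    for g in sorted(set(groups)):
        idx = [i for i, x in enumerate(groups) if x == g]
        positives = [i for i in idx if y_true[i] == 1]
        out[g] = {
            "selection_rate": sum(y_pred[i] for i in idx) / len(idx),
            "tpr": sum(y_pred[i] for i in positives) / len(positives) if positives else float("nan"),
            "error_rate": sum(y_pred[i] != y_true[i] for i in idx) / len(idx),
        }
    return out

def parity_gaps(metrics, a, b):
    """Demographic parity and equal opportunity differences between groups a and b."""
    return {
        "demographic_parity_diff": metrics[a]["selection_rate"] - metrics[b]["selection_rate"],
        "equal_opportunity_diff": metrics[a]["tpr"] - metrics[b]["tpr"],
    }

groups = ["M", "M", "M", "M", "F", "F", "F", "F"]
y_true = [1, 1, 0, 0, 1, 1, 0, 0]
y_pred = [1, 1, 1, 0, 1, 0, 0, 0]

metrics = group_metrics(y_true, y_pred, groups)
print(metrics["M"]["error_rate"], metrics["F"]["error_rate"])  # 0.25 0.25
print(parity_gaps(metrics, "M", "F"))  # {'demographic_parity_diff': 0.5, 'equal_opportunity_diff': 0.5}
```

Both groups share the same 25% error rate, yet the model selects men three times as often and approves every qualified man but only half of the qualified women, which is why a single aggregate metric is rarely enough.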

Measuring fairness

Fairness metrics are designed to measure the biases discussed here by evaluating the extent to which a machine learning algorithm is fair based on the selected fairness evaluation criteria. There are a number of resources and frameworks that can help you understand the different fairness metrics and how to choose the right metric for your fairness use case. For example, Aequitas is an open-source bias audit toolkit for ML developers, designed to support more equitable decision-making.
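Audit toolkits such as Aequitas express disparities as ratios relative to a reference group. The sketch below computes that kind of ratio by hand and does not use the Aequitas API. The 0.8 cutoff echoes the “four-fifths rule” from US employment-selection guidelines; also flagging ratios above 1.25 (that is, 1/0.8) is an assumption made here for symmetry, and your own thresholds should come from your legal and business context. The group names and rates are hypothetical.

```python
def disparity_ratios(selection_rates, reference):
    """Each group's selection rate divided by the reference group's rate."""
    ref = selection_rates[reference]
    if ref == 0:
        raise ValueError("reference group has a zero selection rate")
    return {g: rate / ref for g, rate in selection_rates.items()}

def flag_disparities(ratios, threshold=0.8):
    """Groups whose ratio falls outside [threshold, 1 / threshold]."""
    return sorted(g for g, r in ratios.items() if r < threshold or r > 1 / threshold)

# Hypothetical selection rates, with group "A" as the reference group.
rates = {"A": 0.50, "B": 0.35, "C": 0.60}
ratios = disparity_ratios(rates, reference="A")
print(flag_disparities(ratios))  # ['B']
```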

Continuous bias monitoring

Although this blog goes through many different kinds of bias and how they can impact your model’s fairness in the real world, measuring or detecting bias is not a ‘once and done’ process. There are many ways to measure bias before you get to production, but experience shows that you need to continuously monitor for bias even after deploying your system to production.
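Here is a minimal sketch of what ongoing monitoring could look like: recompute a fairness ratio for each period of production decisions and raise an alert when it drops below a threshold. The `Batch` structure, period labels, counts, and 0.8 threshold are all hypothetical; a production system would also track sample sizes, data drift, and more than one metric.

```python
from dataclasses import dataclass

@dataclass
class Batch:
    period: str
    favorable: dict  # group -> number of favorable decisions in this period
    total: dict      # group -> number of decisions in this period

def monitor(batches, protected, reference, threshold=0.8):
    """Yield (period, ratio, alert) comparing two groups' favorable-outcome rates per period."""
    for b in batches:
        protected_rate = b.favorable[protected] / b.total[protected]
        reference_rate = b.favorable[reference] / b.total[reference]  # assumed non-zero
        ratio = protected_rate / reference_rate
        yield b.period, ratio, ratio < threshold

batches = [
    Batch("week 1", {"F": 45, "M": 50}, {"F": 100, "M": 100}),
    Batch("week 2", {"F": 42, "M": 50}, {"F": 100, "M": 100}),
    Batch("week 3", {"F": 35, "M": 50}, {"F": 100, "M": 100}),
]
for period, ratio, alert in monitor(batches, protected="F", reference="M"):
    print(period, round(ratio, 2), "ALERT" if alert else "ok")
```

A check run only in week 1 would have passed, but the ratio drifts downward week by week until it crosses the threshold in week 3, which is the kind of change a one-time audit would miss.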

Measuring bias during development or training is not enough to guarantee fairness in production. It’s vital to monitor and evaluate the algorithm’s outcomes in production on an ongoing basis, over time and through different periods and populations, to guard against unfair outcomes. And that’s precisely what we’ll cover in our next post.