This piece is the second part of a series of articles on production pitfalls and how to rise to the challenge.

–

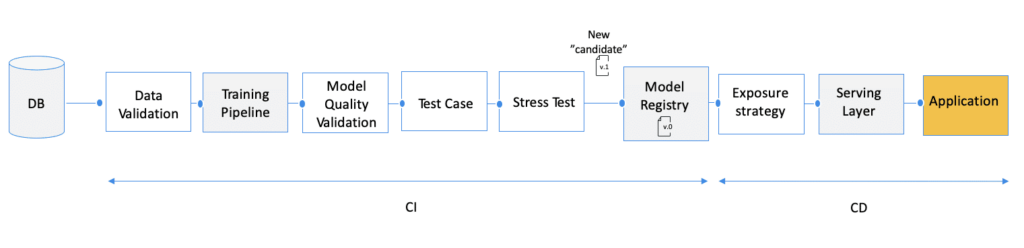

In the first part of this article, we looked at the reasons that make “the ML Orchestra” such a complex one to tune and laid out the best practices and processes to be implemented during the Continuous Integration (CI) phase. We described how to create a new valid candidate model and the offline validation steps needed before committing and registering a new model version to the model registry.

Ensuring that the orchestra works well functionality-wise doesn’t guarantee that the model works as expected and operates at the right level of performance. For a thorough approach to safely rolling out models, one should also look at the deployment phase, or CD phase.

In traditional software, the CD phase is the process of actually deploying the code to replace the previous versions. This is usually carried out through a “canary deployment”, a methodology for gradually rolling out releases: the change is first deployed to a small subset of servers, and once it is established that the functionality works well, it is rolled out to the rest of the servers.

Yet, when releasing a new model, the main concern is the correctness and quality of the newly deployed model. The CD process for a new model should therefore be gradual: it validates the model’s quality on live data, using production systems, before letting it kick in and make real automated decisions. Such evaluation is often called “online evaluation”, as opposed to the tests performed during the CI phase, which are based on historical datasets and can be considered “offline evaluation”. Online evaluation may follow a few basic strategies: shadow model, A/B testing, and multi-armed bandit. In this post, we describe each strategy, the cases in which it is recommended, what the deployment process looks like, and how it can be achieved using a robust ML model monitoring system.

Shadow evaluation

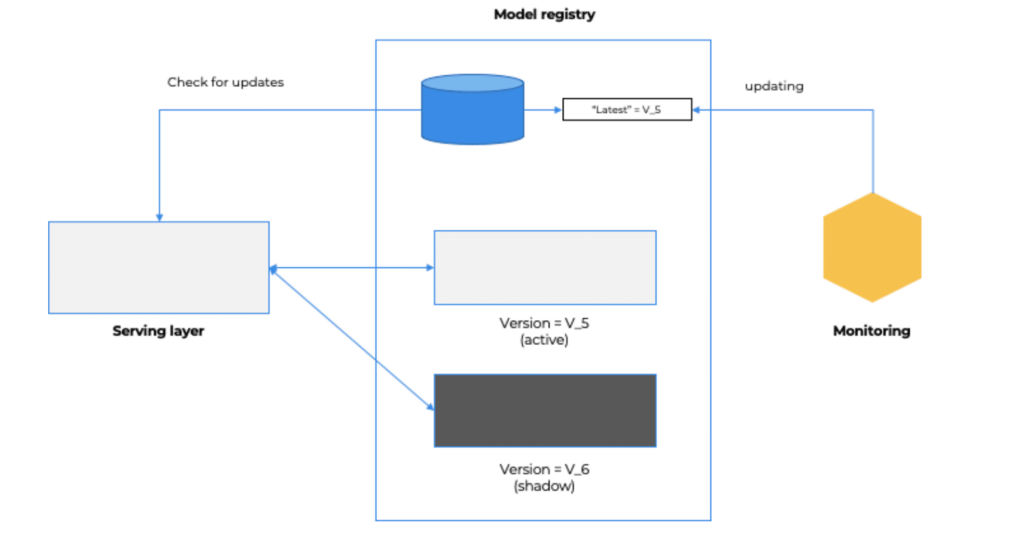

Shadow evaluation (often called “dark launch”) is a very intuitive and safe strategy. In shadow mode, the new model is added to the registry as a “candidate model”. Every new prediction request is scored by both model versions: the one currently used in production and the new candidate version running in shadow mode.

In shadow mode, the new version is tested on a live stream of production data, but only the predictions of the latest “production model” version are used and returned to the user or business.
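A minimal sketch of the shadow pattern, assuming models are plain Python callables that map a feature dict to a score. The class `ShadowDeployment` and its in-memory `log` list are hypothetical stand-ins for a real serving layer and monitoring store. The candidate runs synchronously here for simplicity; a production setup would typically run it asynchronously so it cannot add latency to user requests.

```python
from dataclasses import dataclass, field
from typing import Callable, List, Optional

# Hypothetical model interface: a callable from a feature dict to a score.
Model = Callable[[dict], float]


@dataclass
class ShadowDeployment:
    """Serve the production model; score the candidate in shadow and log both."""

    production: Model
    candidate: Model
    log: List[dict] = field(default_factory=list)  # stand-in for a monitoring store

    def predict(self, request_id: str, features: dict) -> float:
        prod_pred = self.production(features)
        shadow_pred: Optional[float]
        try:
            shadow_pred = self.candidate(features)
        except Exception:
            # A failing candidate must never affect the response served to users.
            shadow_pred = None
        # Persist both predictions so monitoring can compare them once labels arrive.
        self.log.append(
            {"request_id": request_id, "production": prod_pred, "shadow": shadow_pred}
        )
        # Only the production prediction is returned to the user/business.
        return prod_pred
```

The key design choice is that the candidate's output, and even its failures, are only recorded, never returned.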

With this method, the monitoring service should persist both sets of predictions and continuously monitor both models until the new model is stable enough to be promoted as the new “production version”. Once the new version is ready, the monitoring service signals to the serving layer that it needs to upgrade the model version. Technically, this “promotion” can be done by moving a “latest” tag to the new version and having the serving layer always work with the model version tagged “latest” (similar to the “latest” tag in Docker images). Alternatively, one can work with explicit model version tags, but then the serving layer must first check which version is the latest production-ready one, and only then reload that version and use it to perform predictions.
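The “latest”-tag promotion can be sketched as follows. `ModelRegistry` and `TagFollowingServer` are hypothetical, in-memory stand-ins, not the API of any particular registry product. The point is that promotion only moves a tag, while the serving layer re-resolves the tag on each request and switches models when the tag has moved.

```python
from dataclasses import dataclass, field
from typing import Callable, Dict, Optional, Tuple

# Hypothetical model interface: a callable from a feature dict to a score.
Model = Callable[[dict], float]


@dataclass
class ModelRegistry:
    """Hypothetical in-memory registry: version -> model, tag -> version."""

    versions: Dict[str, Model] = field(default_factory=dict)
    tags: Dict[str, str] = field(default_factory=dict)

    def register(self, version: str, model: Model) -> None:
        self.versions[version] = model

    def promote(self, version: str, tag: str = "latest") -> None:
        # Promotion only moves the tag; registered versions are left untouched.
        if version not in self.versions:
            raise KeyError(f"unknown model version: {version}")
        self.tags[tag] = version

    def resolve(self, tag: str = "latest") -> Tuple[str, Model]:
        version = self.tags[tag]
        return version, self.versions[version]


class TagFollowingServer:
    """Serving layer that always predicts with the version the tag points to."""

    def __init__(self, registry: ModelRegistry, tag: str = "latest") -> None:
        self.registry = registry
        self.tag = tag
        self.loaded_version: Optional[str] = None
        self.model: Optional[Model] = None

    def predict(self, features: dict) -> float:
        version, model = self.registry.resolve(self.tag)
        if version != self.loaded_version:
            # The tag moved since the last request: switch to the promoted version.
            self.loaded_version, self.model = version, model
        return self.model(features)
```

Rolling back is the same operation in reverse: moving the tag back to the previous version.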

We should compare the new “shadow” version to the current latest production model using statistical hypothesis testing

Such a test should verify that the new version achieves the required effect size (the difference between the two versions’ performance metrics) at a specified statistical power (the probability of correctly identifying an effect when one exists). For example, if the latest version had a precision of 91% over the past week, the new candidate version must show an effect size of 0 or more, so that its precision is at least 91%.
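As a sketch of such a test, assume precision is compared as a proportion (true positives over predicted positives) and that samples are large and independent. A one-sided two-proportion z-test can then check whether the candidate B is worse than the production model A by more than a chosen `margin`. With `margin=0.0`, rejecting the null hypothesis shows that B's precision is higher than A's; a small positive margin expresses “at least as good, within tolerance”. The function names and the normal-approximation test are illustrative choices, not a method the article prescribes.

```python
import math
from statistics import NormalDist
from typing import Tuple


def noninferiority_z_test(tp_a: int, n_a: int, tp_b: int, n_b: int,
                          margin: float = 0.0) -> Tuple[float, float]:
    """One-sided test of H0: p_b - p_a <= -margin vs H1: p_b - p_a > -margin.

    tp_* are true positives and n_* predicted positives, so tp / n is precision.
    Returns (z statistic, one-sided p-value); a small p-value favors model B.
    """
    p_a, p_b = tp_a / n_a, tp_b / n_b
    se = math.sqrt(p_a * (1 - p_a) / n_a + p_b * (1 - p_b) / n_b)
    z = (p_b - p_a + margin) / se
    return z, 1 - NormalDist().cdf(z)


def samples_per_group(p_a: float, p_b: float, alpha: float = 0.05,
                      power: float = 0.8) -> int:
    """Approximate predicted positives needed per model to detect p_b - p_a."""
    z_alpha = NormalDist().inv_cdf(1 - alpha)  # one-sided significance level
    z_power = NormalDist().inv_cdf(power)      # desired statistical power
    variance = p_a * (1 - p_a) + p_b * (1 - p_b)
    return math.ceil((z_alpha + z_power) ** 2 * variance / (p_b - p_a) ** 2)
```

For example, 910 true positives out of 1,000 for A against 930 out of 1,000 for B gives a z of roughly 1.65 and a one-sided p-value of roughly 0.05, a borderline result. `samples_per_group(0.91, 0.93)` suggests that roughly 2,270 predicted positives per model are needed to detect that 2-point difference with 80% power at a 5% significance level.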

The overall performance level is not the only metric to look at

Before promoting the model, though, it can be important to monitor additional factors. One is performance stability, e.g., the variance of the daily precision in our last example. Another is performance constraints for specific sub-populations, e.g., the model must reach a precision of at least 95% for our “VIP customers”. When label collection takes a long time or labels might be missing entirely, other KPIs can serve as proxies for the new model’s quality. This is the case, for example, in loan approval use cases, where such feedback loops might take longer than 3–6 months. In such cases, one can use the correlation between the predictions of the two versions, since a new version should correlate relatively highly with its predecessor. Another option is to measure the distribution shift between the production data and the new version’s training dataset, to ensure that the new version was trained on data that is relevant to the current production distribution.
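The stability, segment, and proxy checks above might be computed along these lines. This is a sketch under several assumptions: binary labels and predictions, a per-record segment name such as `"vip"`, plain Pearson correlation between the two versions' scores, and the Population Stability Index (PSI) as one common measure of distribution shift (the article does not name a specific one). All function names are hypothetical.

```python
import math
import statistics
from collections import defaultdict
from typing import Dict, List, Sequence


def segment_precision(y_true: Sequence[int], y_pred: Sequence[int],
                      segments: Sequence[str]) -> Dict[str, float]:
    """Precision (TP / predicted positives) per segment, e.g. {"vip": 0.96, ...}."""
    true_pos: Dict[str, int] = defaultdict(int)
    pred_pos: Dict[str, int] = defaultdict(int)
    for label, pred, segment in zip(y_true, y_pred, segments):
        if pred == 1:
            pred_pos[segment] += 1
            true_pos[segment] += int(label == 1)
    return {seg: true_pos[seg] / pred_pos[seg] for seg in pred_pos}


def precision_stability(daily_precision: List[float]) -> float:
    """Day-to-day spread of precision (sample standard deviation)."""
    return statistics.stdev(daily_precision)


def prediction_correlation(old_scores: Sequence[float],
                           new_scores: Sequence[float]) -> float:
    """Pearson correlation between the two versions' scores on the same requests."""
    n = len(old_scores)
    mean_old, mean_new = sum(old_scores) / n, sum(new_scores) / n
    cov = sum((a - mean_old) * (b - mean_new) for a, b in zip(old_scores, new_scores))
    sd_old = math.sqrt(sum((a - mean_old) ** 2 for a in old_scores))
    sd_new = math.sqrt(sum((b - mean_new) ** 2 for b in new_scores))
    return cov / (sd_old * sd_new)


def psi(expected: Sequence[float], actual: Sequence[float], n_bins: int = 10) -> float:
    """Population Stability Index of `actual` (production) vs `expected` (training)."""
    low, high = min(expected), max(expected)
    width = (high - low) / n_bins or 1.0  # guard against a constant feature

    def bin_shares(values: Sequence[float]) -> List[float]:
        counts = [0] * n_bins
        for v in values:
            idx = int((v - low) / width)
            counts[min(max(idx, 0), n_bins - 1)] += 1  # clip out-of-range values
        # Floor empty bins to avoid log(0) and division by zero.
        return [max(c / len(values), 1e-6) for c in counts]

    exp_shares, act_shares = bin_shares(expected), bin_shares(actual)
    return sum((a - e) * math.log(a / e) for e, a in zip(exp_shares, act_shares))
```

Commonly quoted rules of thumb treat PSI values above about 0.25 as a significant shift, though thresholds are best tuned per use case.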

Despite all its benefits, shadow evaluation has limitations and is not always practical. Below, we analyze these limitations and review alternative solutions.

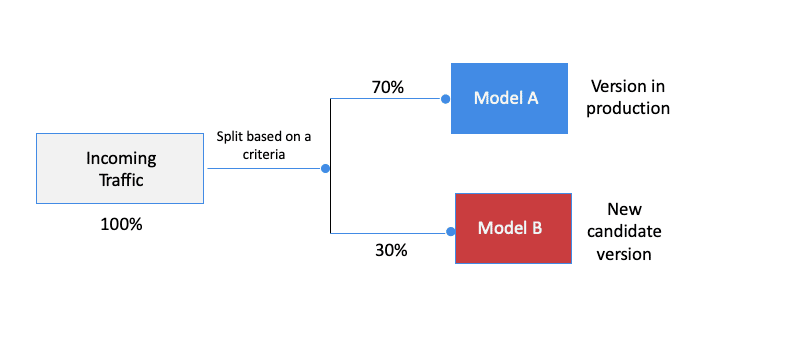

A/B evaluation

In many ML scenarios, the model’s predictions actually impact the environment it operates in. Take a recommendation model responsible for ranking the top 5 movie suggestions to offer. In most cases, the label depends on whether the user actually watched one of the suggested movies. Here, the model’s prediction influences the user’s behavior and, therefore, the collected data. If a candidate shadow model outputs 5 very different movies, the fact that the user doesn’t select them doesn’t necessarily mean they were bad recommendations: because the shadow model didn’t take any real-life action, the user never even saw these movies. In this scenario, the two models’ performance cannot be compared fairly, and an online A/B test evaluation is required.

In an A/B setup, both the current latest version and the new candidate version, referred to as model A (the “control” group) and model B (the “treatment” group), are actually active in production. Each new request is routed to one of the models according to a specific routing logic, and only the selected model is used for prediction.

These evaluations can run for a fixed amount of time, for a fixed number of predictions, or until the statistical hypothesis (that the new version is at least as good as the previous one) is validated. As with the shadow strategy, the KPIs to test can be performance, stability, or some other proxy. Importantly, the monitoring service should be in charge of managing and evaluating the A/B tests.

The success of this method depends heavily on splitting the A/B test groups correctly. A good practice is to randomly sample a specific portion of the traffic to receive the “treatment”, i.e., the prediction from the new version. For example, in the movie recommendation use case, every new request should have an X% chance (e.g., 10%) of being served by the new model version until the test is done. The exposure share is usually kept relatively low, because this strategy actually “exposes” the new model to users before its quality tests are completed.
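A minimal sketch of the traffic split, assuming a 10% treatment share. `route_randomly` follows the per-request random sampling described above. `route_by_user` is a common variant that the article does not describe: hashing a user id together with a hypothetical experiment `salt` keeps each user on the same model for the whole test, which avoids mixing both models' recommendations within one user's experience.

```python
import hashlib
import random


def route_randomly(rng: random.Random, treatment_share: float = 0.10) -> str:
    """Per-request split: each request goes to model B with probability treatment_share."""
    return "B" if rng.random() < treatment_share else "A"


def route_by_user(user_id: str, treatment_share: float = 0.10,
                  salt: str = "exp-1") -> str:
    """Sticky split: a user always lands in the same group for a given experiment salt."""
    digest = hashlib.sha256(f"{salt}:{user_id}".encode("utf-8")).hexdigest()
    bucket = int(digest[:8], 16) / 0xFFFFFFFF  # roughly uniform value in [0, 1]
    return "B" if bucket < treatment_share else "A"
```

Changing the salt for each new experiment reshuffles users, so the same users are not always the ones exposed to new versions.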

Such an A/B configuration can also be generalized to A/B/C/… setups, where multiple models are compared simultaneously. Keep in mind, though, that testing several models side by side increases the chance of false-positive outcomes, because more comparisons are made. Moreover, since each group receives a smaller number of samples, the statistical power to detect a small effect size is reduced, and this should be considered carefully.
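To illustrate the false-positive concern, assume independent tests: with m comparisons at significance level alpha, the chance of at least one false positive is 1 - (1 - alpha)^m, about 14% for three comparisons at 5%. The Bonferroni correction, which tests each comparison at alpha / m, is one simple and conservative way to compensate; the article does not prescribe a specific correction, and the function names below are hypothetical.

```python
from typing import List


def familywise_error(alpha: float, m: int) -> float:
    """Chance of at least one false positive across m independent tests at level alpha."""
    return 1 - (1 - alpha) ** m


def bonferroni_reject(p_values: List[float], alpha: float = 0.05) -> List[bool]:
    """Reject H0 for a comparison only if its p-value is at most alpha / m."""
    m = len(p_values)
    return [p <= alpha / m for p in p_values]
```

With candidates B, C, and D each compared against control A, p-values of 0.01, 0.03, and 0.2 would pass an uncorrected 5% threshold for the first two, but only the first survives the corrected threshold of about 0.0167. The correction lowers the per-comparison significance level, which compounds the power loss from splitting traffic: with one control and k candidates sharing traffic equally, each group receives only 1/(k + 1) of the requests.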

Multi-armed bandit

Multi-Armed Bandit (MAB) is a more “dynamic” kind of A/B experimentation, but also a more complex one to measure and operate. It is a classic reinforcement learning setting, in which one tries to “explore” (try the new model version) while “exploiting” (optimizing the defined performance KPI). Such a configuration requires implementing the exploration/exploitation tradeoff inside the monitoring service. Traffic is allocated dynamically between the two (or more) versions according to the collected performance KPI, or other proxies as described earlier.

In this setup, the new version is initially exposed to a limited number of cases. The more its performance KPI equals or surpasses that of the previous version, the more traffic is directed to it.
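Thompson sampling is one common way to implement this dynamic allocation; the article does not prescribe a specific bandit algorithm. The sketch below assumes a binary reward per request (for example, whether the user watched a recommended movie) and keeps a Beta posterior over each version's success rate. Each request goes to the version with the highest sampled rate, so traffic drifts toward the better version as evidence accumulates, while the weaker version still receives occasional exploratory traffic. `ThompsonRouter`, `simulate`, and the success rates used in it are hypothetical.

```python
import random
from typing import Dict, Iterable, Optional


class ThompsonRouter:
    """Beta-Bernoulli Thompson sampling over model versions."""

    def __init__(self, versions: Iterable[str], seed: Optional[int] = None) -> None:
        self.rng = random.Random(seed)
        # Beta(1, 1) prior: a uniform belief about each version's success rate.
        self.successes: Dict[str, float] = {v: 1.0 for v in versions}
        self.failures: Dict[str, float] = {v: 1.0 for v in self.successes}

    def choose(self) -> str:
        # Sample a plausible success rate per version and serve the best draw.
        draws = {v: self.rng.betavariate(self.successes[v], self.failures[v])
                 for v in self.successes}
        return max(draws, key=draws.get)

    def update(self, version: str, reward: bool) -> None:
        # Each observed outcome updates the posterior of the version that served it.
        if reward:
            self.successes[version] += 1
        else:
            self.failures[version] += 1


def simulate(true_rates: Dict[str, float], rounds: int = 5000,
             seed: int = 0) -> Dict[str, int]:
    """Replay hypothetical traffic and count how many requests each version served."""
    router = ThompsonRouter(true_rates, seed=seed)
    env = random.Random(seed + 1)
    served = {v: 0 for v in true_rates}
    for _ in range(rounds):
        version = router.choose()
        served[version] += 1
        router.update(version, env.random() < true_rates[version])
    return served
```

Running `simulate({"v1": 0.10, "v2": 0.15})` should route most of the traffic to `v2`, the version with the higher simulated success rate.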

Such a configuration seems ideal, as it optimizes the “exposure” dynamically while optimizing our main metric. However, implementing a multi-armed bandit solution and adjusting the traffic dynamically based on its results requires advanced monitoring and automation capabilities. This is especially challenging given the difficulty of defining one clear KPI for the system to exploit: performance, stability, and so on.

To summarize, the sensitivity of the ML orchestra requires a dedicated rollout strategy: a shadow model, A/B testing, multi-armed bandit, or a mix of them, depending on your use case.

The key piece of your orchestra, regardless of the method you select, is a flexible and robust monitoring solution that collects and analyzes the status and KPIs of the newly rolled-out version and enables advanced testing for various use cases.

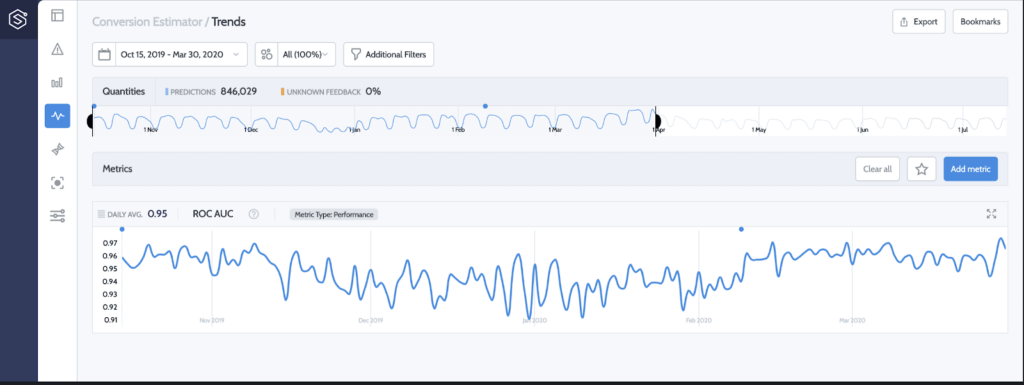

Here we can see the safe delivery of the new version (V2), yielding improved ROC AUC performance in production.

Even setting aside the complexity of measuring and comparing two models at once to ensure safe(r) model rollouts, the monitoring service should be able to measure the distribution of the model’s decisions and their confidence levels, on top of the performance KPIs, in order to measure specific bias metrics. It should be able to do so across the whole dataset as well as for specific high-resolution segments.
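A sketch of such decision-level monitoring, assuming each prediction is logged as a (segment, binary decision, confidence) tuple. It reports the share of positive decisions and the mean confidence for the whole dataset and for each segment. The gap between the highest and lowest segment positive rates is used here as one simple bias indicator (a demographic-parity-style difference); that is an illustrative choice, not a metric the article specifies, and the function names and the `"__all__"` key are hypothetical.

```python
from collections import defaultdict
from typing import Dict, Iterable, Tuple

OVERALL = "__all__"  # hypothetical key for the whole-dataset view


def decision_profile(
    records: Iterable[Tuple[str, int, float]]
) -> Dict[str, Dict[str, float]]:
    """Positive-decision rate and mean confidence, overall and per segment.

    Each record is (segment, decision in {0, 1}, model confidence).
    """
    totals: Dict[str, Dict[str, float]] = defaultdict(
        lambda: {"n": 0, "positives": 0, "confidence_sum": 0.0})
    for segment, decision, confidence in records:
        # Every record counts toward both the overall view and its own segment.
        for key in (OVERALL, segment):
            totals[key]["n"] += 1
            totals[key]["positives"] += decision
            totals[key]["confidence_sum"] += confidence
    return {
        key: {
            "n": t["n"],
            "positive_rate": t["positives"] / t["n"],
            "mean_confidence": t["confidence_sum"] / t["n"],
        }
        for key, t in totals.items()
    }


def positive_rate_gap(profile: Dict[str, Dict[str, float]]) -> float:
    """Largest difference in positive-decision rate between any two segments."""
    rates = [stats["positive_rate"] for key, stats in profile.items() if key != OVERALL]
    return max(rates) - min(rates)
```

Computing the same profile for both the production and the candidate version makes it possible to compare not only their accuracy but also how differently they treat each segment.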

Conclusion

Safely rolling out models is about monitoring the orchestration of all the pieces of the ML infrastructure together: it touches upon your data, your pipelines, your models, and the control and visibility you have over each of these pieces.

At the end of the day, the anxiety that accompanies the day you roll your models or versions out to production stems largely from the fact that, ironically, data scientists often progress without a strong data-driven approach.

By looking at CI/CD best practices from traditional software and adapting them to our own ML paradigm, we learn that there are many steps we can take toward a better “rollout day”. All of these strategies rely on a strong monitoring component to make the processes more data-driven.

Maintaining the health of your models in production also requires a thorough, data-driven retraining strategy. While the CI/CD paradigms address the “what” and the “how” of rolling out new models, the “when” is covered by the CT (Continuous Training) paradigm. This will be the focus of our next piece, so stay tuned!

If you have any questions or feedback, or if you want to brainstorm on the content of this article, please reach out to me on LinkedIn.
