This piece is the first part of a series of articles on production pitfalls and how to rise to the challenge.

–

CI/CD best practices to painlessly deploy ML models and versions

For any data scientist, the day you roll out your model’s new version to production is a day of mixed feelings. On the one hand, you are releasing a new version that is geared towards yielding better results and making a greater impact; on the other, this is a rather scary and tense time. Your new shiny version may contain flaws that you will only be able to detect after they have had a negative impact.

ML is as complex as orchestrating different instruments

Replacing or publishing a new version to production touches upon the core decision-making logic of your business process. With AI adoption rising, the necessity to automatically publish and update models is becoming a common and frequent task, which makes it a top concern for data science teams.

In this two-part article, we will review what makes the rollout of a new version so sensitive, what precautions are required, and how to leverage monitoring to optimize your Continuous Integration (CI) pipeline (Part I) as well as your Continuous Deployment (CD) pipeline (Part II), so that new versions can be rolled out safely.

The complexity of the ML orchestra

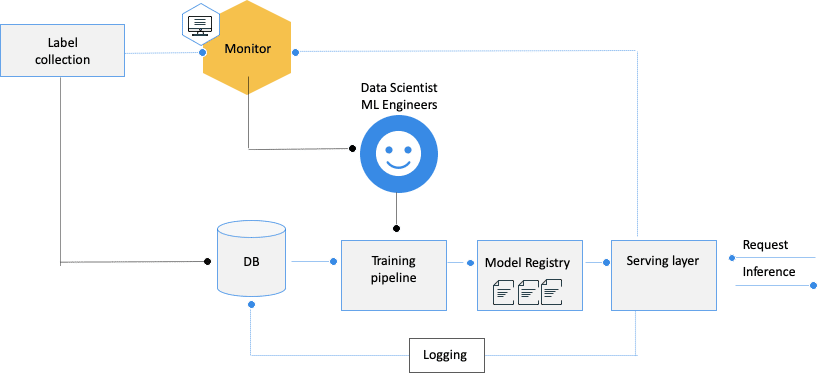

ML systems are composed of multiple moving and independent parts of software that need to work in tune with each other:

- Training pipeline: Includes all processing steps that leverage your historical dataset to produce a working model: data pre-processing, such as embeddings, scaling, feature engineering, feature selection or dimensionality reduction, hyperparameter tuning, and performance evaluation using cross-validation or hold-out sets.

- Model registry: A deployed model can take various forms: language-specific object serialization, such as pickles, or cross-technology serialization formats, such as PMML. Usually, these files are kept in a registry based on shared file storage (S3, GCS, …) or even in a version-control repository (git). Another option is to persist only the new model parameters, e.g., saving the coefficients of a logistic regression in a database.

- Serving layer: This is the actual prediction service. Such a layer can be embedded together with the business logic of the application that relies on the model predictions, or it can be separated to act as a prediction service decoupled from the business processes it supports. In both cases, the core functionality is to return the relevant predictions for new incoming requests using the latest model in the model registry. Such a service can work in batch or streaming mode. At inference time, the pre-processing steps defined in the training pipeline must be applied with exactly the same logic.

- Label collection: A process that collects the ground truth for supervised learning cases. It can be done manually, automatically, or using a mixed approach, such as active learning.

- Monitoring: An external service that monitors the entire process, from the quality of the inputs that reach the serving layer up to the collected labels, to detect drift, biases, or integrity issues.

Given this relatively high-level and complex orchestration, many things can get out of sync and lead us to deploy an underperforming model. Some of the most common culprits are:

Lack of automation

Most organizations are still manually updating their models. Whether it is the training pipeline or parts of it, such as feature selection, or the delivery and promotion of the newly created model by the serving layer, doing so manually can lead to errors and unnecessary overhead. To be truly efficient, all the processes, from training to monitoring, should be automated, which leaves less room for error.

A multiplicity of stakeholders

As a plurality of stakeholders and experts are involved, there are more handovers and, thus, more room for misunderstandings and integration issues. While the data scientists design the flows, the ML engineers are usually doing the coding – and without being fully aligned, this may result in having models that work well functionally but fail silently, either by using the wrong scaling method or by implementing an incorrect feature engineering logic.

Hyper-dynamism of real-life data

Research mode (batch) is different from production. Developing and empirically researching the optimal training pipeline to yield the best model is done in offline lab environments, with historical datasets, and by simulating hold-out testing. Needless to say, these environments are very different from production, where only part of the data is actually available at prediction time. This gap often results in data leakage or wrong assumptions that lead to bad performance, bias, or problematic code behavior once the model is in production and needs to serve live data streams. For instance, the newly deployed version may be unable to handle new values in a categorical feature when pre-processing it into an embedded vector during the inference phase.
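To make the categorical example concrete, here is a minimal sketch of one common way to stay robust to unseen levels: reserve an index for unknown values when the vocabulary is fitted, and fall back to it at inference time. The names `UNKNOWN`, `fit_vocabulary`, and `encode` are hypothetical and chosen for illustration only; a real pipeline would typically rely on the encoder of its own ML framework.

```python
from typing import Dict, Hashable, List

UNKNOWN = "__unknown__"  # hypothetical reserved token for levels unseen in training


def fit_vocabulary(values: List[str]) -> Dict[Hashable, int]:
    """Map each category seen during training to an index; index 0 is reserved."""
    vocab: Dict[Hashable, int] = {UNKNOWN: 0}
    for value in values:
        if value not in vocab:
            vocab[value] = len(vocab)
    return vocab


def encode(value: Hashable, vocab: Dict[Hashable, int]) -> int:
    """Encode a category at inference time, falling back to the reserved index."""
    return vocab.get(value, vocab[UNKNOWN])


# Training data only contains three browsers.
vocab = fit_vocabulary(["chrome", "firefox", "chrome", "safari"])

known = encode("firefox", vocab)  # a level seen in training
unseen = encode("brave", vocab)   # a new level that only appears in production
```

The index returned by `encode` would then be used to look up the embedding vector, so a new level maps to a dedicated "unknown" embedding instead of raising an error in the serving layer.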

Silent failures of ML models vs. traditional IT monitoring

Monitoring ML is more complex than monitoring traditional software – the fact that the entire system is working doesn’t mean it actually does what it should do. Because all the culprits listed above may result in functional errors, and these may be “silent failures”, it is only through robust monitoring that you can detect failures before it is too late and your business is already impacted.

The risks associated with these pitfalls are intrinsically related to the nature of ML operations. Yet, the best practices to overcome them may well come from traditional software engineering and its use of CI/CD (Continuous Integration/Continuous Deployment) methodologies. To analyze and recommend best practices for the safe rollout of models, we use the CI/CD grid to explain which steps should be taken.

Best practices for the CI phase

CI practices are about frequently testing the codebase of each software module using unit tests, and the integrity of the different modules working together using integration/system tests.

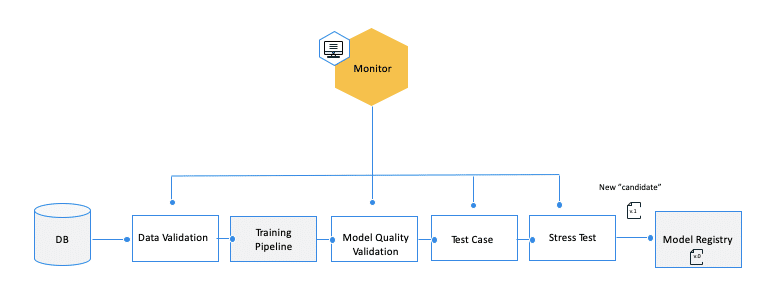

Now let’s translate this to the ML orchestra. A new model, or version, should be considered a software artifact that is a part of the general system. As such, the creation of a new model requires a set of unit and integration tests to ensure that the new “candidate” model is valid before it is integrated into the model registry. But in the ML realm, CI is not only about testing and validating code and components but also about testing and validating data, data schemas, and model quality. While the focus of CI is to maintain valid codebases and module artifacts before building new artifacts for each module, the CD process handles the phase of actually deploying the artifacts into production.

Here are some of the main best practices for the CI phase that impact the safe rollout of model/new version implementations:

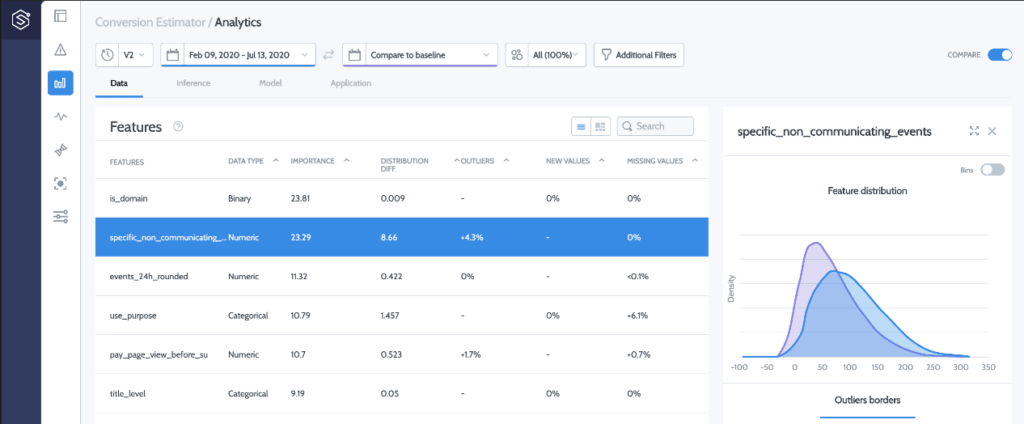

Data validation

Models are retrained/produced using historical data. For the model to be relevant in production, the training dataset should adequately represent the data distribution that currently appears in production. This helps avoid selection bias or simply irrelevance. To do so, before even starting the training pipeline, the distribution of the training set should be tested to ensure that it is fit for the task. At this stage, the monitoring solution can be leveraged to provide detailed reports on the distributions of the latest production cases, and by using statistical tools such as deequ, this type of data verification constraint can be automatically added to the CI process.
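As an illustration of such a distribution check, the sketch below compares one numeric feature in the training set against recent production values using the Population Stability Index (PSI). This is a swapped-in, generic technique rather than the check any particular tool performs. The function names, the synthetic data, and the 0.2 cut-off (a commonly cited rule of thumb) are all assumptions to be adapted to your own data.

```python
import math
import random
from typing import List, Sequence


def bin_fractions(values: Sequence[float], edges: List[float]) -> List[float]:
    """Fraction of values per bin; out-of-range values fall in the first/last bin."""
    counts = [0] * (len(edges) - 1)
    for v in values:
        idx = 0
        for i in range(len(edges) - 1):
            if v >= edges[i]:
                idx = i
        counts[idx] += 1
    return [c / len(values) for c in counts]


def population_stability_index(train: Sequence[float], prod: Sequence[float],
                               n_bins: int = 10, eps: float = 1e-6) -> float:
    """PSI between two samples, using equal-width bins fitted on the training set."""
    lo, hi = min(train), max(train)
    width = (hi - lo) / n_bins
    edges = [lo + i * width for i in range(n_bins + 1)]
    p = bin_fractions(train, edges)
    q = bin_fractions(prod, edges)
    # eps avoids log(0) and division by zero for empty bins
    return sum((qi - pi) * math.log((qi + eps) / (pi + eps)) for pi, qi in zip(p, q))


# Synthetic data for illustration only.
rng = random.Random(0)
train_values = [rng.gauss(0.0, 1.0) for _ in range(5000)]
prod_similar = [rng.gauss(0.0, 1.0) for _ in range(5000)]
prod_shifted = [rng.gauss(1.0, 1.0) for _ in range(5000)]

PSI_THRESHOLD = 0.2  # assumed rule-of-thumb cut-off, tune per feature
psi_similar = population_stability_index(train_values, prod_similar)
psi_shifted = population_stability_index(train_values, prod_shifted)
training_set_is_representative = psi_similar < PSI_THRESHOLD
```

In a CI job, a check like this could run per feature before training starts and fail the pipeline when the threshold is exceeded, with the production sample supplied by the monitoring service.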

Model quality validation

When executing the training pipeline, and before submitting the new model as a “candidate” model into the registry, ensure that the new training process undergoes a healthy fit verification.

Even if the training pipeline is automated, it should include a hold-out/cross-validation model evaluation step.

Given the selected validation method, one should test that the convergence of the fitted model doesn’t indicate overfitting, i.e., a loss that keeps decreasing on the training dataset while it increases on the validation set. The performance should also be above a certain minimal level. This level can be a hardcoded threshold, the score of a naive baseline model, or a value calculated dynamically by leveraging the monitoring service to extract the performance of the production model over the period covered by the validation set.
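The two checks described above can be sketched as a simple gate. This is a minimal illustration under stated assumptions: the function names, the `patience` rule for detecting overfitting, and the default thresholds are hypothetical, and the scores are made-up numbers where higher is better.

```python
from typing import Optional, Sequence


def shows_overfitting(train_loss: Sequence[float], val_loss: Sequence[float],
                      patience: int = 3) -> bool:
    """True if validation loss rose while training loss fell for `patience` epochs in a row."""
    streak = 0
    for i in range(1, min(len(train_loss), len(val_loss))):
        if val_loss[i] > val_loss[i - 1] and train_loss[i] < train_loss[i - 1]:
            streak += 1
            if streak >= patience:
                return True
        else:
            streak = 0
    return False


def passes_quality_gate(candidate_score: float, baseline_score: float,
                        production_score: Optional[float] = None,
                        min_score: float = 0.7, tolerance: float = 0.01) -> bool:
    """Check a candidate against a hard floor, a naive baseline, and the production model."""
    if candidate_score < min_score:
        return False  # below the hardcoded threshold
    if candidate_score <= baseline_score:
        return False  # no better than the naive model
    if production_score is not None and candidate_score < production_score - tolerance:
        return False  # clearly worse than what is live today
    return True


# Made-up learning curves: validation loss turns upward after the third epoch.
overfit = shows_overfitting(
    train_loss=[0.9, 0.7, 0.5, 0.4, 0.3, 0.2],
    val_loss=[0.95, 0.8, 0.7, 0.75, 0.8, 0.9],
)
healthy = shows_overfitting(
    train_loss=[0.9, 0.7, 0.5, 0.4, 0.3, 0.2],
    val_loss=[0.95, 0.8, 0.7, 0.65, 0.6, 0.58],
)
accepted = passes_quality_gate(0.84, baseline_score=0.6, production_score=0.83)
rejected = passes_quality_gate(0.84, baseline_score=0.6, production_score=0.9)
```

Here `production_score` stands for the value that would be pulled from the monitoring service for the same validation period; when it is unavailable, the gate falls back to the fixed floor and the baseline comparison.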

Test cases – Model robustness for production data assumptions

Once the model quality validation phase is completed, one should perform integration tests to see how the serving layer integrates with the new model and whether it successfully serves predictions for specific edge cases. For instance: handling null values in features that can be nullable, handling new levels in categorical features, working with different text lengths for text inputs, or even working with different image sizes/resolutions. Here also, the examples can be synthesized manually or taken from the monitoring solution, whose capabilities include identifying and saving real inputs that exhibit data integrity issues.
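A minimal sketch of such an edge-case suite is shown below. Everything in it is hypothetical: `toy_predict` is a stand-in for the real call into the serving layer, and the feature names (`age`, `country`, `comment`) and payloads are invented for illustration. The point is the shape of the test, which asserts that every edge case returns a valid probability instead of raising.

```python
import math
from typing import Any, Callable, Dict, List, Tuple

Payload = Dict[str, Any]


def toy_predict(features: Payload) -> float:
    """Hypothetical stand-in for the serving layer's prediction call."""
    age = features.get("age")
    age = 35.0 if age is None else float(age)  # impute nullable numeric feature
    country_weight = {"US": 0.2, "DE": 0.1}.get(features.get("country"), 0.0)
    text = features.get("comment") or ""
    text_signal = min(len(text), 500) / 500  # cap very long text inputs
    score = 0.01 * age + country_weight + 0.3 * text_signal
    return 1 / (1 + math.exp(-score))


EDGE_CASES: List[Tuple[str, Payload]] = [
    ("null_age", {"age": None, "country": "US", "comment": "ok"}),
    ("new_country", {"age": 40, "country": "ZZ", "comment": "ok"}),
    ("empty_text", {"age": 40, "country": "US", "comment": ""}),
    ("long_text", {"age": 40, "country": "DE", "comment": "x" * 100_000}),
]


def run_edge_cases(predict: Callable[[Payload], float],
                   cases: List[Tuple[str, Payload]]) -> List[str]:
    """Return a description of every case that raised or produced an invalid output."""
    failures = []
    for name, payload in cases:
        try:
            p = predict(payload)
        except Exception as exc:
            failures.append(f"{name}: raised {type(exc).__name__}")
            continue
        if not isinstance(p, float) or math.isnan(p) or not 0.0 <= p <= 1.0:
            failures.append(f"{name}: invalid prediction {p!r}")
    return failures


failures = run_edge_cases(toy_predict, EDGE_CASES)

# A fragile implementation that forgets to handle nulls is caught by the same suite.
fragile_failures = run_edge_cases(lambda f: 0.01 * f["age"], EDGE_CASES)
```

In practice, `EDGE_CASES` could be extended with the problematic inputs saved by the monitoring solution, so that each integrity issue seen in production becomes a regression test for the next candidate.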

Model stress test

Changing the model, its pre-processing steps, or its packages could also impact the model’s operational performance. In many use cases, such as real-time bidding, increasing the latency of model serving might dramatically impact the business.

Therefore, as a final step in the model CI process, a stress test should be performed to measure the average latency to serve a prediction. Such a metric can be evaluated relative to a business constraint or relative to the current production operational model latency, calculated by the monitoring solution.
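One way to express this check is sketched below, assuming the prediction call can be invoked in-process. The names `measure_latency_ms` and `within_budget`, the 20% allowed regression, and the trivial `predict` stand-in are all hypothetical. A real stress test would usually also exercise concurrency and the network path.

```python
import statistics
import time
from typing import Any, Callable, Dict, Optional, Sequence


def measure_latency_ms(predict: Callable[[Any], Any], payloads: Sequence[Any],
                       warmup: int = 10) -> Dict[str, float]:
    """Time each prediction call and return the mean and 95th percentile in milliseconds."""
    for payload in payloads[:warmup]:  # warm caches before measuring
        predict(payload)
    samples = []
    for payload in payloads:
        start = time.perf_counter()
        predict(payload)
        samples.append((time.perf_counter() - start) * 1000)
    samples.sort()
    return {
        "mean": statistics.fmean(samples),
        "p95": samples[int(0.95 * (len(samples) - 1))],
    }


def within_budget(stats: Dict[str, float], production_mean_ms: float,
                  max_regression: float = 1.2,
                  hard_limit_ms: Optional[float] = None) -> bool:
    """Accept the candidate if it is not much slower than production or a fixed limit."""
    if hard_limit_ms is not None and stats["mean"] > hard_limit_ms:
        return False  # violates the business constraint
    return stats["mean"] <= production_mean_ms * max_regression


# Trivial stand-in for the candidate model's prediction call.
stats = measure_latency_ms(lambda x: x * 2, list(range(200)))

ok = within_budget({"mean": 10.0, "p95": 20.0}, production_mean_ms=9.0)
too_slow = within_budget({"mean": 12.0, "p95": 25.0}, production_mean_ms=9.0)
```

The `production_mean_ms` value is where the latency reported by the monitoring solution for the current production model would be plugged in, while `hard_limit_ms` covers the case of an explicit business constraint.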

Conclusion

Whenever a new model is created, and before it is submitted to the model registry as a potential “candidate” to replace the production model, applying these practices to the CI pipeline will help ensure that it works and integrates well with the serving layer.

Yet, while testing these functionalities is necessary, it remains insufficient to safely roll out models and address all the pitfalls listed in this article. For a thorough approach, one should also look at the deployment stage.

Next week, in the second part of this article, we will review what model CD strategies can be used to avoid the risks associated with the rollout of new models/versions, what these strategies require, in which cases they are better fitted, and how they can be achieved using a robust model monitoring service. So stay tuned!

If you have any questions, or feedback, or if you want to brainstorm on the content of this article, please reach out to me on LinkedIn