Natural Language Processing (NLP) is hardly anything new, having been around for over 50 years. But its evolution and the way we use it today with the backing of deep learning, GPT, LLMs, and transfer learning has changed radically compared to back in the day of text mining.

With a vast array of ML tasks under its wing, such as text classification, translation, question answering, and so forth, together with the mainstream adoption of NLP across a wide range of industries and direct consumer use – NLP monitoring has never been more needed or more challenging. One only needs to look to Microsoft’s Tay chatbot debacle or Amazon’s famously biased recruiting tool to see the many reasons why we need to stay on top of these issues.

Monitoring machine learning models, in general, is not trivial (check out some of our posts on drift and bias to learn more). Still, NLP, in particular, produces a few unique challenges that we’ll lay out and examine in this post.

Language drifts

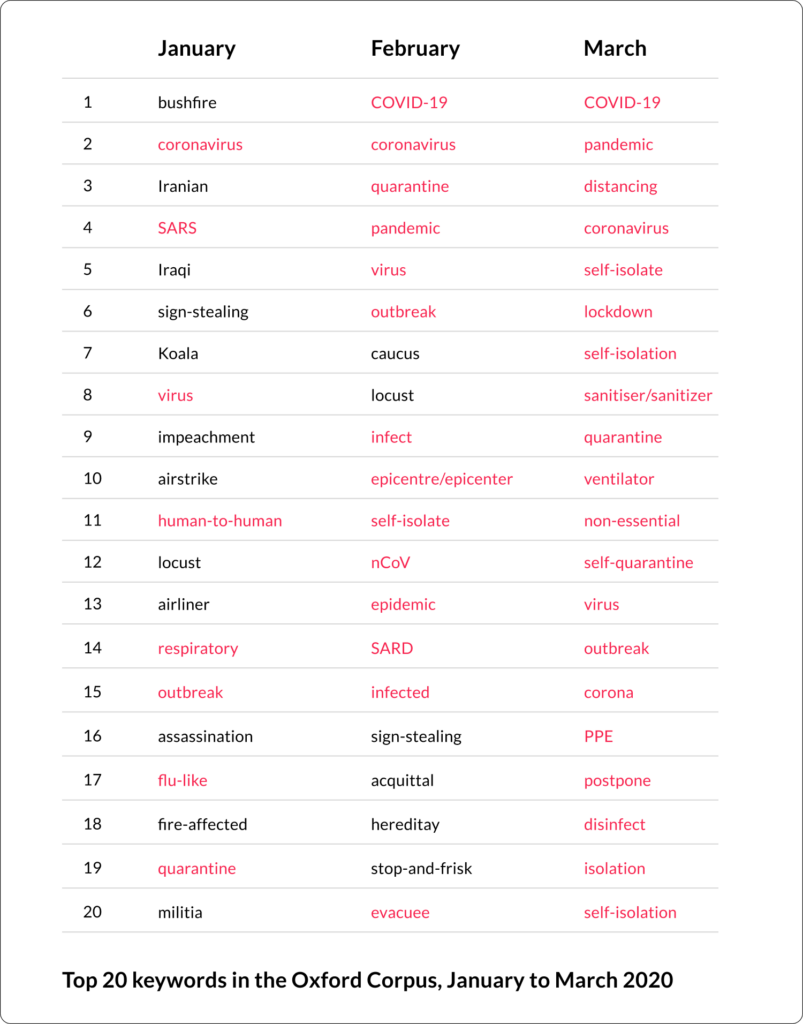

Language is fluid, drifting gradually over time and, in some cases, such as the coronavirus pandemic, rapidly. In the 400 years since Shakespeare’s time, every aspect of the English language has changed: spelling, punctuation, vocabulary, grammar, and even pronunciation. Yet it took only a few months for COVID-19 to change the context and frequency of a whole host of pandemic-related words and have a significant linguistic impact.

Natural language drift is the change in language usage and meaning over time due to various factors. It can make it challenging for NLP models to keep up, which can lead to inaccurate or outdated results when using machine learning models trained on large datasets.

Examples of Natural Language Drift:

- Slang and idiomatic expressions: The meaning of slang and idiomatic expressions can change over time. For example, the word “cool” used to mean “calm and collected,” but now it’s more commonly used to indicate “fashionable” or “impressive.”

- Neologisms: New words and phrases are constantly being added to the language, particularly with the emergence of new technologies and social trends. For instance, the word “selfie” was virtually unheard of in English before the early 2010s.

- Semantic change: The meaning of words can change over time due to cultural shifts. For instance, the word “gay” used to mean “happy” or “carefree” but has now become synonymous with homosexuality.

- Grammar and syntax: The rules of grammar and syntax can change over time. For example, the use of contractions in English has become more prevalent over the past few decades, and the word “ain’t,” which was once considered improper English, is now widely used in informal speech.

Sparse feature space

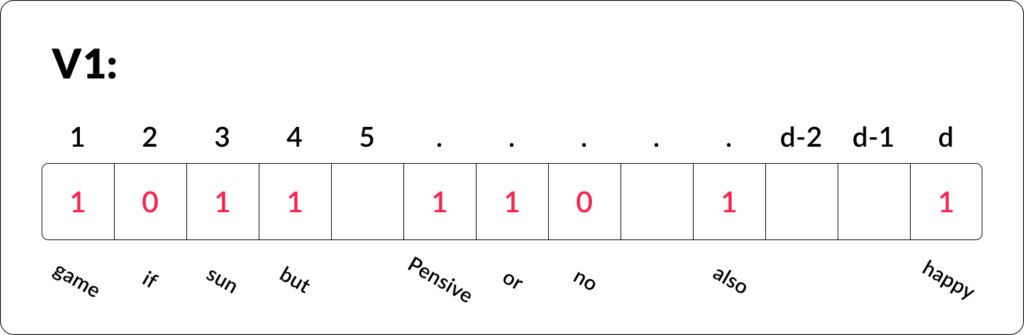

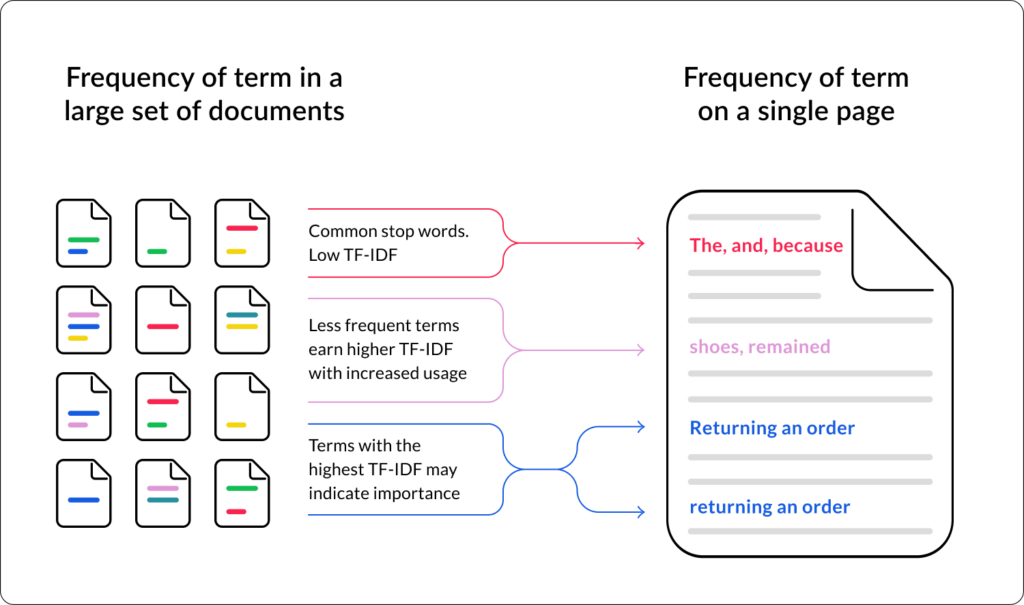

The most intuitive way to “structure” text is to treat each word as a feature and thereby transform unstructured text into structured data, on top of which we can identify meaningful patterns. The usual techniques for this are Bag of Words (BoW) and Term Frequency-Inverse Document Frequency (TF-IDF). The BoW technique represents a document as a bag of individual words, creating a sparse matrix where each row represents a document and each column represents a unique word in the corpus. TF-IDF, on the other hand, takes into account the importance of words in a document relative to the entire corpus, based on two components: term frequency (TF) and inverse document frequency (IDF). The product of these two values represents the importance of a word in a document.
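To make the two representations concrete, here is a minimal sketch in plain Python. It assumes a naive whitespace tokenizer and one common TF-IDF variant (term count divided by document length, times the natural log of N over document frequency); libraries typically use smoothed variants, so the exact numbers will differ. The function names and the sample reviews are made up for illustration.

```python
import math
from collections import Counter

def tokenize(text):
    # Naive lowercase + whitespace split; real pipelines usually do more.
    return text.lower().split()

def bag_of_words(docs):
    """Return (vocab, matrix) where matrix[i][j] counts word j in document i."""
    tokenized = [tokenize(d) for d in docs]
    vocab = sorted({w for doc in tokenized for w in doc})
    index = {w: j for j, w in enumerate(vocab)}
    matrix = [[0] * len(vocab) for _ in docs]
    for i, doc in enumerate(tokenized):
        for word, count in Counter(doc).items():
            matrix[i][index[word]] = count
    return vocab, matrix

def tf_idf(docs):
    """Return (vocab, matrix) of weights: tf = count / doc length, idf = ln(N / df)."""
    vocab, counts = bag_of_words(docs)
    n_docs = len(docs)
    # Document frequency: in how many documents each word appears.
    df = [sum(1 for row in counts if row[j] > 0) for j in range(len(vocab))]
    weights = []
    for row in counts:
        total = sum(row) or 1
        weights.append([(c / total) * math.log(n_docs / df[j]) for j, c in enumerate(row)])
    return vocab, weights

# Hypothetical mini-corpus of product reviews.
docs = ["the phone is great", "the battery is bad", "great camera great screen"]
vocab, bow = bag_of_words(docs)
_, tfidf = tf_idf(docs)
```

In this toy corpus, “phone” appears in only one document, so it gets a higher TF-IDF weight in the first review than “the”, which appears in two.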

BoW is easy to implement and interpret, efficient for large datasets, and works well for simple classification tasks. However, it may create a sparse feature space, where many of the cells in the matrix are empty, which can lead to overfitting and a high risk of model instability. Furthermore, BoW does not consider the context or order of words in the document, does not capture the importance of rare words, and tends to give equal importance to common and rare words.
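As a rough illustration of how quickly the matrix empties out, the sketch below measures the fraction of zero cells in a document-term matrix. The `sparsity` helper and the three sample reviews are assumptions for the example, not part of any library.

```python
def sparsity(matrix):
    """Fraction of cells in a document-term matrix that are zero."""
    cells = sum(len(row) for row in matrix)
    if cells == 0:
        return 0.0
    zeros = sum(1 for row in matrix for value in row if value == 0)
    return zeros / cells

# Hypothetical mini-corpus; build a BoW count matrix with a naive tokenizer.
docs = ["the phone is great", "the battery is bad", "great camera great screen"]
tokenized = [d.lower().split() for d in docs]
vocab = sorted({w for doc in tokenized for w in doc})
matrix = [[doc.count(word) for word in vocab] for doc in tokenized]

print(f"{len(vocab)} columns, sparsity = {sparsity(matrix):.2f}")
```

Even with three short documents, more than half of the cells are zero. On a real corpus with tens of thousands of distinct words, the share of empty cells is typically far higher.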

Let’s say you’re a marketing analyst who is analyzing customer feedback from online reviews of a product. You train a BoW model on reviews from 2015-2020 to identify the most common topics and sentiments expressed by customers. However, as time goes on, the language and tone of customer reviews may naturally drift due to changes in the product, competition, and customer expectations.

For example, if the product you’re analyzing is a smartphone, the most common topics and sentiments expressed in reviews from 2015-2020 may be related to the phone’s design, features, and performance compared to other smartphones on the market at that time. However, if you apply the same BoW approach to reviews from 2021, you may find that the most common topics and sentiments have shifted to focus on newer features like 5G connectivity, camera quality, and battery life.
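One simple way to surface this kind of shift is to compare each term’s relative frequency across two time windows and rank terms by how much they moved. The sketch below assumes whitespace tokenization, and the review snippets are invented for the example.

```python
from collections import Counter

def relative_frequencies(docs):
    """Share of all tokens that each term accounts for."""
    counts = Counter(w for d in docs for w in d.lower().split())
    total = sum(counts.values())
    return {w: c / total for w, c in counts.items()}

def biggest_shifts(reference_docs, current_docs, top_n=5):
    """Rank terms by absolute change in relative frequency between two windows."""
    ref = relative_frequencies(reference_docs)
    cur = relative_frequencies(current_docs)
    deltas = {t: cur.get(t, 0.0) - ref.get(t, 0.0) for t in set(ref) | set(cur)}
    # Largest absolute change first; ties broken alphabetically for stable output.
    return sorted(deltas.items(), key=lambda kv: (-abs(kv[1]), kv[0]))[:top_n]

# Hypothetical review snippets from the two periods.
reviews_2015_2020 = [
    "great design and fast performance",
    "design feels premium",
    "performance is solid",
]
reviews_2021 = ["5g is fast", "5g drains battery", "battery life is great"]

shifts = biggest_shifts(reviews_2015_2020, reviews_2021)
for term, delta in shifts:
    print(f"{term:12s} {delta:+.3f}")
```

Positive values mark terms that gained share in the newer window (here “5g” and “battery”), and negative values mark terms that faded (“design”, “performance”).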

On the other hand, TF-IDF captures the importance of words in a document relative to the entire corpus, reduces the weight of commonly used words, and works well for complex classification tasks. It can also help to address the sparse feature space issue by reducing the impact of commonly occurring but unimportant words. However, TF-IDF is more complex than BoW, requires a large amount of text data to work effectively, can be sensitive to outliers and noise in the data, and may be less scalable to new languages.

Let’s say you’re using TF-IDF to train a sentiment analysis model on social media data. The language used on social media platforms can change rapidly due to emerging slang, memes, and cultural trends. For example, the phrase “on fleek” was a popular slang term in 2014-2015 but has since fallen out of use. If your TF-IDF approach places too much weight on this phrase, it may not perform as well on newer data.

On top of the fact that BoW and TF-IDF models are extremely sensitive to changes, trying to monitor a sparse feature space is just downright unhelpful. Even in the event of a change, attempting to express such a vast collection of words and their frequency is so granular an approach that most practitioners would be at a loss to intuit the context of such a change.
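A common workaround is to collapse the whole vocabulary shift into a single score, for example the Jensen-Shannon divergence between the term distributions of two windows. The sketch below is one such summary under simple assumptions (whitespace tokens, base-2 logarithm); note that it illustrates the point above, since a single number says that something changed but not what or why.

```python
import math
from collections import Counter

def term_distribution(docs):
    """Relative frequency of each term across a set of documents."""
    counts = Counter(w for d in docs for w in d.lower().split())
    total = sum(counts.values())
    return {w: c / total for w, c in counts.items()}

def js_divergence(p, q):
    """Jensen-Shannon divergence (base 2) between two distributions; ranges from 0 to 1."""
    divergence = 0.0
    for term in set(p) | set(q):
        pi, qi = p.get(term, 0.0), q.get(term, 0.0)
        mi = 0.5 * (pi + qi)  # mixture distribution
        if pi > 0:
            divergence += 0.5 * pi * math.log2(pi / mi)
        if qi > 0:
            divergence += 0.5 * qi * math.log2(qi / mi)
    return divergence

# Hypothetical windows of social media posts.
older = term_distribution(["brows on fleek", "outfit on fleek today"])
newer = term_distribution(["outfit looks great today", "great brows"])
score = js_divergence(older, newer)
```

A score of 0 means the two windows use terms in identical proportions, and a score of 1 means they share no terms at all.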

Embedding spaces are fuzzy

The key change point for NLP apps was the advent of word2vec, attention models, and word embeddings in general.

- Word2vec is a neural network-based approach for generating word embeddings, which are representations of words in a high-dimensional vector space. Word embeddings capture the semantic and syntactic meaning of words and are used to improve the performance of various NLP tasks like sentiment analysis, machine translation, and text classification.

- Attention models are a type of neural network architecture that is particularly effective for tasks that involve long sequences of text, such as machine translation or text summarization. These models enable the neural network to selectively focus on different parts of the input sequence, which is particularly useful when the input sequence is long or contains many irrelevant features.

- Word embeddings have also seen significant progress in recent years, with the introduction of pre-trained embeddings like GloVe and BERT. These pre-trained embeddings are trained on large corpora of text data and capture the complex relationships between words in the text. They have proven to be a powerful tool for transfer learning, where pre-trained embeddings are fine-tuned on smaller datasets for specific NLP tasks.

Specifically, embeddings are a great way to represent words in a high-dimensional vector space, where each dimension of the vector corresponds to a learned, latent feature of the word, meaning that words that are semantically or syntactically related will have similar vector representations.
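“Similar vector representations” is usually measured with cosine similarity. Here is a minimal sketch; the three-dimensional vectors are made-up toy values chosen for illustration, whereas real embeddings come from a trained model and have hundreds of dimensions.

```python
import math

def cosine_similarity(a, b):
    """Cosine of the angle between two vectors; 1.0 means same direction."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    if norm_a == 0 or norm_b == 0:
        return 0.0
    return dot / (norm_a * norm_b)

# Toy, hand-written vectors (NOT real embeddings).
toy_embeddings = {
    "phone": [0.9, 0.1, 0.3],
    "smartphone": [0.85, 0.15, 0.35],
    "banana": [0.1, 0.9, 0.2],
}

related = cosine_similarity(toy_embeddings["phone"], toy_embeddings["smartphone"])
unrelated = cosine_similarity(toy_embeddings["phone"], toy_embeddings["banana"])
print(f"phone~smartphone: {related:.3f}, phone~banana: {unrelated:.3f}")
```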

One of the main challenges with word embeddings is that they are often difficult to interpret or understand. This is because the high-dimensional vector space in which the embeddings are represented doesn’t have a clear or intuitive interpretation, making it challenging to interpret the meaning of individual dimensions or clusters of dimensions.

For example, it can be difficult to understand what specific features or attributes are being represented in a particular dimension of a word embedding. Additionally, it can be challenging to determine why certain words are located close together or far apart in the embedding space, particularly for words that have multiple meanings or ambiguous relationships with other words.

Detecting a distribution change in the embedding layer often leaves the user confused about the root cause of the drift. In addition, it’s difficult to understand how the embeddings are influencing the output of an NLP model or to diagnose issues with the model’s performance. It can also be challenging to identify biases or errors in the embeddings themselves, particularly when working with large or complex datasets.
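For illustration, one of the simplest embedding drift checks compares the centroid of a reference batch of embeddings with the centroid of a production batch. This is a sketch under that assumption, with toy two-dimensional vectors and hypothetical function names; it shows the problem described above, because the resulting score flags that the cloud of points moved without saying which words or topics moved it.

```python
import math

def centroid(vectors):
    """Element-wise mean of a batch of equal-length vectors."""
    n = len(vectors)
    return [sum(column) / n for column in zip(*vectors)]

def cosine_distance(a, b):
    """1 - cosine similarity; 0 means same direction."""
    dot = sum(x * y for x, y in zip(a, b))
    norm = math.sqrt(sum(x * x for x in a)) * math.sqrt(sum(y * y for y in b))
    return 1.0 - dot / norm if norm else 1.0

def centroid_drift(reference, current):
    """Cosine distance between the mean embedding of two batches."""
    return cosine_distance(centroid(reference), centroid(current))

# Toy 2-d "embeddings" (made up); real ones would come from the model's embedding layer.
reference_batch = [[1.0, 0.0], [0.9, 0.1]]
similar_batch = [[0.95, 0.05], [1.0, 0.1]]
shifted_batch = [[0.0, 1.0], [0.1, 0.9]]

print(centroid_drift(reference_batch, similar_batch))
print(centroid_drift(reference_batch, shifted_batch))
```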

While dimensionality reduction and visualization techniques such as t-distributed stochastic neighbor embedding (t-SNE), uniform manifold approximation and projection (UMAP), or principal component analysis (PCA) come in handy in such cases (you can explore them in TensorFlow’s Embedding Projector), they require a lot of manual investigation. Visualizing the points and identifying root causes is not straightforward, nor is it guaranteed that we will be able to detect these cases in lower-dimensional spaces, such as two or three dimensions.
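To show what such a projection involves, here is a dependency-free sketch of PCA down to two dimensions using power iteration with deflation. In practice you would reach for a numerical library instead; this version, with its hypothetical helper names and toy data, only aims to make the mechanics visible.

```python
import math
import random

def dot(a, b):
    return sum(x * y for x, y in zip(a, b))

def center(vectors):
    """Subtract the per-dimension mean from every vector."""
    means = [sum(col) / len(vectors) for col in zip(*vectors)]
    return [[x - m for x, m in zip(v, means)] for v in vectors]

def covariance(centered):
    """Sample covariance matrix of already-centered data."""
    denom = max(len(centered) - 1, 1)
    columns = list(zip(*centered))
    return [[dot(ci, cj) / denom for cj in columns] for ci in columns]

def top_eigenvector(matrix, iterations=200, seed=0):
    """Power iteration: repeatedly multiply and renormalize a random start vector."""
    rng = random.Random(seed)
    v = [rng.uniform(-1.0, 1.0) for _ in matrix]
    norm = math.sqrt(dot(v, v))
    v = [x / norm for x in v]
    for _ in range(iterations):
        w = [dot(row, v) for row in matrix]
        norm = math.sqrt(dot(w, w))
        if norm == 0:
            break
        v = [x / norm for x in w]
    return v

def pca_2d(vectors):
    """Project vectors onto their first two principal components."""
    centered = center(vectors)
    cov = covariance(centered)
    first = top_eigenvector(cov)
    eigenvalue = dot(first, [dot(row, first) for row in cov])
    # Deflation: remove the first component so power iteration finds the second.
    deflated = [[c - eigenvalue * fi * fj for c, fj in zip(row, first)]
                for row, fi in zip(cov, first)]
    second = top_eigenvector(deflated, seed=1)
    projected = [[dot(v, first), dot(v, second)] for v in centered]
    return projected, (first, second)

# Toy 3-d vectors (made up) that vary mostly along one direction.
embeddings = [[1.0, 2.0, 0.1], [2.0, 4.1, -0.1], [3.0, 5.9, 0.1],
              [4.0, 8.0, -0.1], [5.0, 10.1, 0.0]]
projected, (first, second) = pca_2d(embeddings)
```

The projection keeps the directions of greatest variance, which is exactly why drift along a low-variance direction can disappear from a 2D plot.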

Domain-specific language

Domain-specific NLP is the use of NLP techniques and models in a specific industry or domain, such as healthcare or legal. This is important because each domain has its own unique language, terminology, and context, which can be difficult for general-purpose NLP models to understand.

To address this, domain-specific NLP involves developing and training NLP models that are designed to understand the language, concepts, and context of a particular domain. This requires domain-specific text data and metadata, as well as domain-specific features and knowledge, such as ontologies, taxonomies, or lexicons.

For example, in healthcare, NLP models may need to be trained on electronic health records (EHRs) and medical literature to identify and extract information related to patient diagnosis, treatment, and outcomes. In the legal domain, NLP models may need to be trained on legal documents and case law to extract information related to legal concepts, arguments, and decisions.

Domain-specific NLP has many benefits, such as improved accuracy, efficiency, and relevance of NLP models for specific applications and industries. However, it also presents challenges, such as the availability and quality of domain-specific data and the need for domain-specific expertise and knowledge.

In the context of monitoring, it’s critical to recognize that transfer learning is an iterative and continuous process. Without intensive training and monitoring, many things can easily go wrong: out-of-vocabulary words that the model won’t be able to leverage can creep into your inputs, and the model may misread the base sentiment or context of a conversation.
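Out-of-vocabulary words are one of the easier signals to track. The sketch below computes the share of incoming tokens that the training vocabulary has never seen; the vocabulary, the sample sentences, and the whitespace tokenizer are all assumptions for the example (models with subword tokenizers would need a different notion of “unknown”).

```python
def oov_rate(texts, vocabulary):
    """Fraction of tokens in texts that do not appear in the known vocabulary."""
    tokens = [w for t in texts for w in t.lower().split()]
    if not tokens:
        return 0.0
    unknown = sum(1 for w in tokens if w not in vocabulary)
    return unknown / len(tokens)

# Hypothetical vocabulary seen during training and a batch of new clinical notes.
training_vocab = {"patient", "reports", "mild", "headache", "and", "nausea"}
incoming = [
    "patient reports mild headache",
    "patient presents with dyspnea and tachycardia",
]

rate = oov_rate(incoming, training_vocab)
print(f"OOV rate: {rate:.0%}")
```

Tracking this rate per time window gives an early, interpretable hint that the input language is moving away from what the model was trained on.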

Language has no defined schema

Text is unstructured data: it has no defined schema and does not follow a rigid or predictable structure. To transform text data into data contracts, it is necessary to extract relevant information from the text, such as entities, relationships, and attributes, and to map them to the corresponding elements in the data contract schema. This requires NLP techniques, such as named entity recognition, relationship extraction, and sentiment analysis, to identify and extract meaningful information from the text. Transforming text data into data contracts is a challenging task, and one that we usually don’t have time for, but even simple contracts (e.g., text length, valid regex) provide a lot of value.
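As a sketch of what the simplest contracts (text length, valid regex) might look like, the function below returns the list of rules a text input violates. The rule names, length limits, and allowed-character pattern are assumptions chosen for illustration and would need tuning per use case.

```python
import re

# Assumed allow-list: word characters, whitespace, and basic punctuation.
ALLOWED_PATTERN = r"[\w\s.,!?'()-]+"

def check_text_contract(text, min_length=1, max_length=500, pattern=ALLOWED_PATTERN):
    """Return a list of violated rules; an empty list means the text passed."""
    if not isinstance(text, str):
        return ["not_a_string"]
    violations = []
    length = len(text.strip())
    if length < min_length:
        violations.append("too_short")
    if length > max_length:
        violations.append("too_long")
    # Only check characters when there is something to check.
    if length and not re.fullmatch(pattern, text):
        violations.append("invalid_characters")
    return violations

print(check_text_contract("Great phone, love the camera!"))
print(check_text_contract(""))
print(check_text_contract("<script>alert(1)</script>"))
```

Checks like these don’t understand the text, but they catch empty inputs, runaway payloads, and markup or encoding debris before those reach the model.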

Because text lacks a schema, it’s a serious challenge to enforce correctness. With structured data, a date field is a date field, and anything other than a date-formatted input can be rejected and dropped. But with text, how does a model differentiate between gibberish, slang, syntax style, etc.? What should be enforced?

That said, while ‘schema’ is not well enforced or defined, if at all, changes to it have the potential to impact model quality significantly. Moreover, such changes can also affect the interpretability and explainability of NLP models, making it difficult to understand how the models have diverged from training or why they are making specific predictions, which can undermine their usefulness and trustworthiness.

Where does NLP monitoring go from here?

NLP, just like any other ML model or app, needs to be monitored, particularly with NLP booming at the moment; case in point, LLMs and ChatGPT (LLMs open a whole new can of worms and monitoring challenges in addition to the ones we dove into earlier, but we’ll leave that for the next post in this series 🙂). That said, distilling a monitoring policy, setting a threshold, and identifying an anomaly are all hard when the underlying embedding space is not interpretable, and that makes NLP hard to monitor, much less explain. At this point, you should be curious as to what the alternative is. So stay tuned ⏲️.

