There’s a lot of confusion between the terms model evaluation and model monitoring, and it’s time to set the record straight. While the terms have a lot in common and sound similar, they differ in fundamental ways, both theoretical and practical. Let’s take a closer look at these differences, how the challenges associated with evaluation and monitoring diverge, and some of the tools used for each.

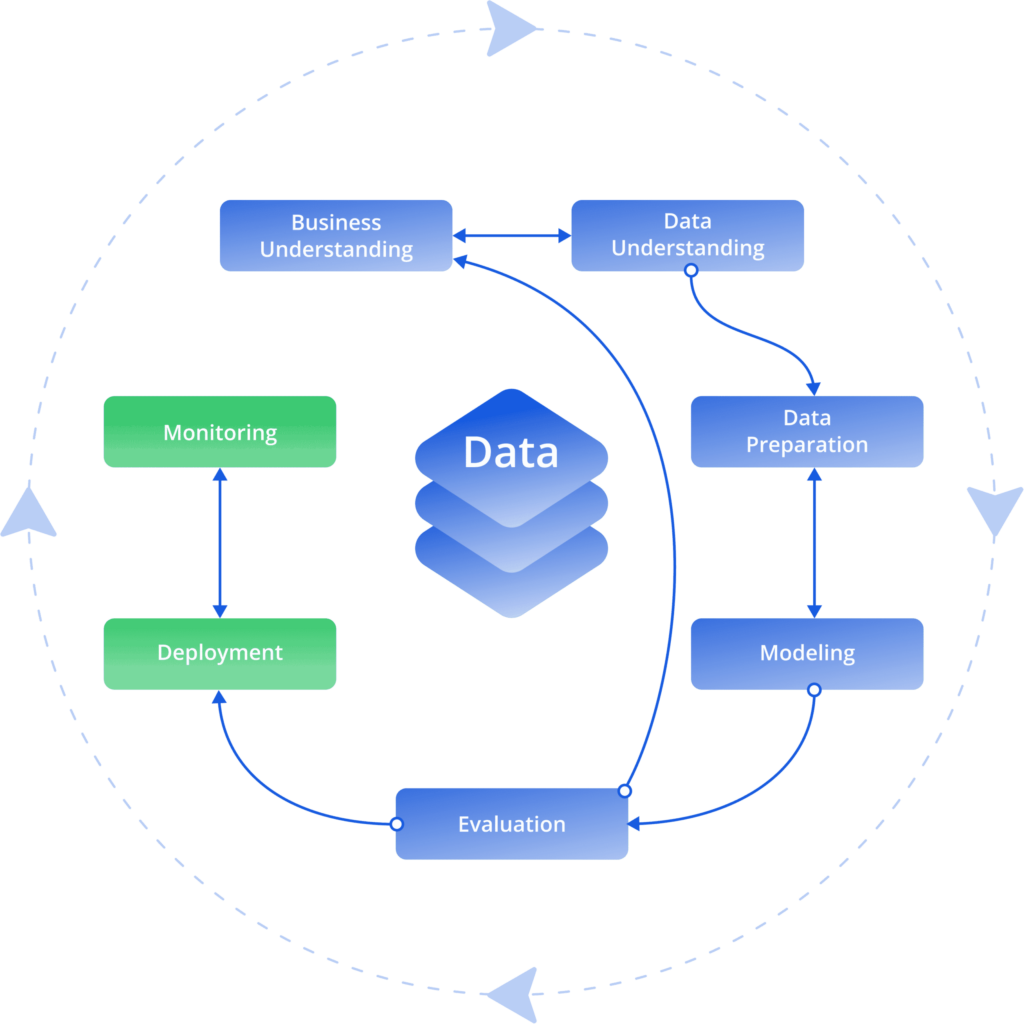

The basic difference between model evaluation and model monitoring is the stage at which each process takes place: the two occupy very different places in the MLOps cycle. Within the lab cycle, we start with a business goal, move on to understanding what kind of data we need, and then collect or prepare that data. Based on the business goal and the data, data scientists develop a model using algorithms that produce the required classifications or predictions.

Once the model is ready, it is trained on a training dataset and then evaluated on data that was set aside specifically for testing and never used during training. This is when we use performance evaluation metrics to gauge how well the model performs, what it does well, and where it falls short. All of this takes place within the lab cycle, as part of the initial research phase.
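
As a minimal sketch of this hold-out workflow, the snippet below shuffles a labeled dataset, sets aside 20% of it for testing, fits a toy one-feature threshold "model" on the remaining training rows only, and measures accuracy on the untouched test rows. The dataset, the 20% split, and the helper names (`holdout_split`, `fit_threshold`, `accuracy`) are illustrative assumptions written in plain Python, not any particular library's API.

```python
import random

def holdout_split(rows, test_fraction=0.2, seed=42):
    """Shuffle a copy of the rows and set aside a fraction of them for testing."""
    shuffled = list(rows)
    random.Random(seed).shuffle(shuffled)
    n_test = int(len(shuffled) * test_fraction)
    return shuffled[n_test:], shuffled[:n_test]  # (train, test)

def fit_threshold(train):
    """Toy model: a decision threshold halfway between the two class means."""
    pos = [x for x, y in train if y == 1]
    neg = [x for x, y in train if y == 0]
    return (sum(pos) / len(pos) + sum(neg) / len(neg)) / 2

def accuracy(threshold, rows):
    """Share of rows where 'x >= threshold' matches the true label."""
    return sum((x >= threshold) == bool(y) for x, y in rows) / len(rows)

# Hypothetical data: one feature in [0, 1); the label is 1 when the feature is >= 0.5.
data = [(i / 100, int(i >= 50)) for i in range(100)]
train, test = holdout_split(data)
threshold = fit_threshold(train)           # fitted on training rows only
test_accuracy = accuracy(threshold, test)  # measured on held-out rows only
print(f"threshold={threshold:.3f}, test accuracy={test_accuracy:.2f}")
```

Fixing the random seed keeps the split reproducible, so successive research iterations are compared on the same test rows.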

When the evaluation metrics indicate that the model is performing well, it’s time to deploy it to production. Even then, we need to keep an eye on how it behaves in real-world conditions. Enter model monitoring. Model monitoring begins after deployment, when you need to track how your model behaves over time and how relevant it remains in a dynamic production environment. This kind of production oversight involves much more than simply gauging model performance over time.

To draw an analogy to software engineering, model evaluation is similar to unit testing, where the developer verifies that individual components of code meet their requirements. Model monitoring, on the other hand, is closer to using an application performance monitoring (APM) tool such as Datadog or New Relic to observe how code behaves in production in terms of CPU, memory, function calls, network usage, and so on.
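
To make the analogy concrete, here is a sketch of an evaluation check written as an ordinary Python `unittest` test case. The tiny test set, the `predict` function, and the 0.9 accuracy requirement are hypothetical placeholders; in practice they would be the held-out data, the trained model, and a threshold agreed on with the business.

```python
import unittest

# Hypothetical held-out examples: (feature value, true label).
TEST_SET = [(0.1, 0), (0.3, 0), (0.45, 0), (0.55, 1), (0.7, 1), (0.9, 1)]
MIN_ACCURACY = 0.9  # assumed acceptance criterion

def predict(x, threshold=0.5):
    """Stand-in for a trained model: a fixed decision threshold."""
    return int(x >= threshold)

class ModelEvaluationTest(unittest.TestCase):
    def test_accuracy_meets_requirement(self):
        correct = sum(predict(x) == y for x, y in TEST_SET)
        self.assertGreaterEqual(correct / len(TEST_SET), MIN_ACCURACY)

    def test_predictions_are_valid_labels(self):
        for x, _ in TEST_SET:
            self.assertIn(predict(x), (0, 1))
```

Saved as, say, `test_model.py`, this runs with `python -m unittest test_model`. Like a unit test, it checks the model once, against fixed data, before release; it says nothing about how the model behaves on live traffic afterward.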

In the following sections, we take a deeper dive into a few of the main differences between model evaluation and model monitoring.

Stakeholders

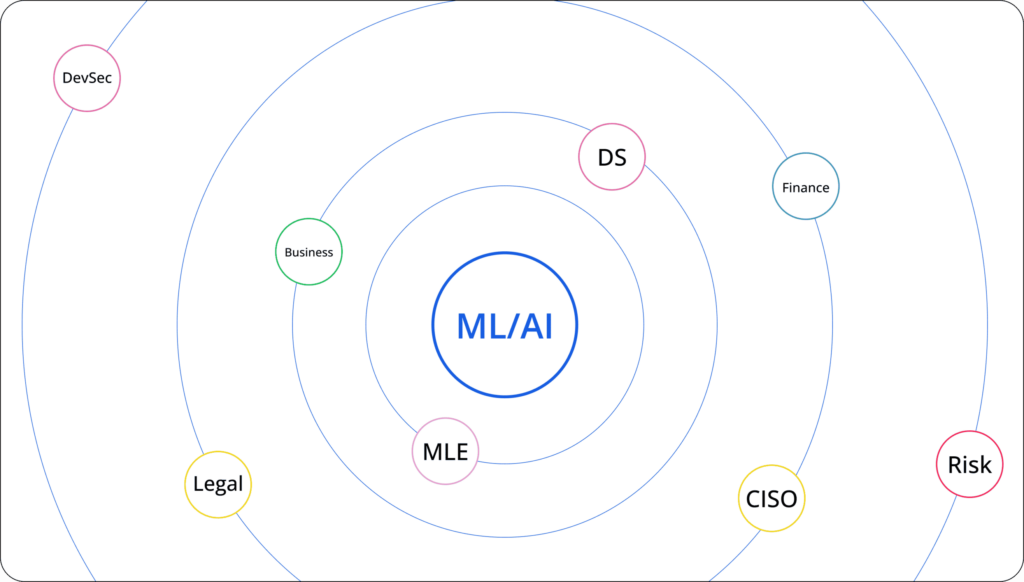

Aside from taking place at different stages, model evaluation and model monitoring also involve different groups of stakeholders. During model evaluation, the data scientists who developed the new algorithms need to check how well the model works. After all, assessing a model’s performance early on is an opportunity to find the parameters that work best and to track down those that could cause failures or poor results. The data scientists may then share the evaluation results with business stakeholders to check whether they are good enough. Once the results are satisfactory, the evaluation process is complete.

Model monitoring, on the other hand, involves a broader set of stakeholders. Once a model is in production, data scientists need to be sure the algorithms continue to work well. This includes checking the model’s performance, detecting concept or model drift, and deciding when to retrain the model. Machine learning engineers need to check for data quality issues or pipeline problems, such as a feature whose values are missing from a specific data source. Business operations will want to know if a subpopulation is underperforming, such as a specific set of users or cohort being served subpar recommendations. And risk managers need to verify that the business is not exposed to unwanted risk if, say, the model suggests approving loans to customers whose ability to repay is highly uncertain.
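
Two of the checks mentioned above, underperforming subpopulations and missing feature values from a specific source, can be sketched in a few lines of plain Python. The record layout (a dict with `source`, `features`, `prediction`, `label`, and a segment field such as `country`) and the helper names are hypothetical; a real system would run equivalent aggregations over logged production data.

```python
from collections import defaultdict

def accuracy_by_segment(records, segment_key):
    """Accuracy per segment, for records whose ground-truth label is known."""
    totals, correct = defaultdict(int), defaultdict(int)
    for r in records:
        seg = r[segment_key]
        totals[seg] += 1
        correct[seg] += int(r["prediction"] == r["label"])
    return {seg: correct[seg] / totals[seg] for seg in totals}

def missing_rate_by_source(records, feature):
    """Share of records from each data source where the feature is absent or None."""
    totals, missing = defaultdict(int), defaultdict(int)
    for r in records:
        src = r["source"]
        totals[src] += 1
        missing[src] += int(r["features"].get(feature) is None)
    return {src: missing[src] / totals[src] for src in totals}

# Hypothetical production records that already have labels attached.
records = [
    {"country": "US", "source": "crm", "features": {"age": 34}, "prediction": 1, "label": 1},
    {"country": "US", "source": "crm", "features": {"age": 51}, "prediction": 0, "label": 0},
    {"country": "DE", "source": "web", "features": {"age": None}, "prediction": 1, "label": 0},
    {"country": "DE", "source": "web", "features": {}, "prediction": 0, "label": 0},
]
print(accuracy_by_segment(records, "country"))  # {'US': 1.0, 'DE': 0.5}
print(missing_rate_by_source(records, "age"))   # {'crm': 0.0, 'web': 1.0}
```

An overall accuracy of 0.75 would hide that one segment is at 0.5, and the missing-rate breakdown points straight at the `web` source rather than at the model.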

Impact / consequences

Detecting an issue during the model evaluation phase may lead to adjustments to the model, more iterations by the data scientists, or even a decision to stop the project. Evaluating your model’s performance during the research phase is iterative by design. If done correctly, it gives the data scientist quick feedback on the model’s expected performance. If the performance is not good enough, the team will continue to research ways to improve the model. This might involve bringing in more data sources, additional feature engineering, exploring different modeling techniques, or hyperparameter tuning. The team might even conclude that, with the current data and task definition, there is no way to achieve satisfactory results. All in all, yes, this has time and resource costs, but the model is still siloed at this point and does not yet affect business decisions.
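
One such research iteration can be sketched as a small search loop: try several candidate settings on a validation set, keep the best, and report when none reaches the target, which corresponds to the "no satisfactory result" outcome. Here a decision threshold on model scores stands in for whatever hyperparameter is being tuned; the scores, labels, candidates, and the 0.95 target are made-up values for illustration.

```python
def accuracy(labels, preds):
    return sum(int(y == p) for y, p in zip(labels, preds)) / len(labels)

def tune(scores, labels, candidates, target):
    """Evaluate each candidate threshold on validation data.

    Returns (best_threshold, best_accuracy), or (None, best_accuracy) when no
    candidate reaches the target and the team needs to rethink the approach.
    """
    best_t, best_acc = None, -1.0
    for t in candidates:
        preds = [int(s >= t) for s in scores]
        acc = accuracy(labels, preds)
        if acc > best_acc:
            best_t, best_acc = t, acc
    if best_acc < target:
        return None, best_acc
    return best_t, best_acc

# Hypothetical validation scores from a model, with their true labels.
val_scores = [0.1, 0.35, 0.4, 0.6, 0.8, 0.9]
val_labels = [0, 0, 1, 1, 1, 1]

print(tune(val_scores, val_labels, [0.3, 0.38, 0.5, 0.7], target=0.95))  # (0.38, 1.0)
print(tune(val_scores, val_labels, [0.5, 0.7], target=0.95))  # (None, 0.8333333333333334)
```

Each call is cheap and has no effect outside the lab, which is what makes this kind of iteration affordable before deployment.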

When things go south during model monitoring, the consequences are of a completely different magnitude. Models in production are already operational, so any issue found while monitoring them directly affects the business and requires an immediate fix, in which the relevant stakeholders (data scientists and ML engineers) deploy a new version to production.

Metrics and measurements

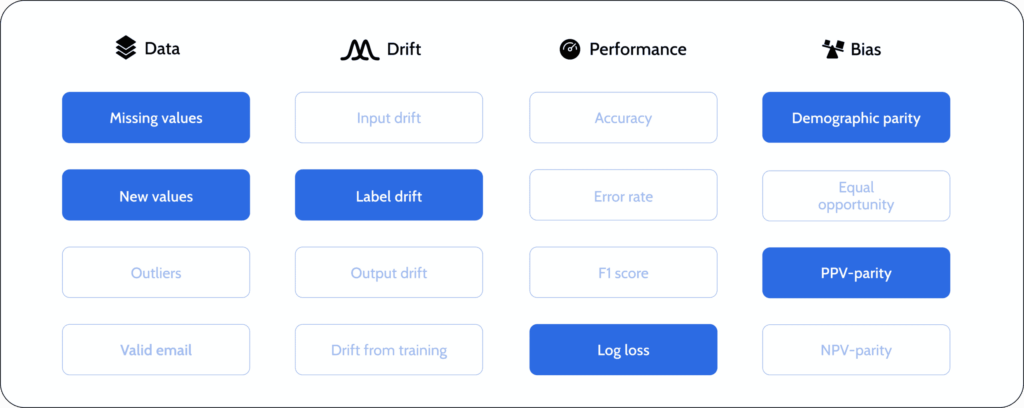

Model evaluation usually deals with the performance of the developed algorithm and its potential business impact. For example, what is the model’s accuracy, and how is it expected to affect the conversion rate? What are its precision and recall?
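
For a binary classifier, these evaluation metrics come straight from counting true and false positives and negatives on the test set. The function below is a plain-Python sketch (libraries such as scikit-learn provide equivalent functions); the labels in the example are invented.

```python
def classification_metrics(y_true, y_pred):
    """Accuracy, precision, and recall for binary labels (1 = positive class)."""
    pairs = list(zip(y_true, y_pred))
    tp = sum(1 for t, p in pairs if t == 1 and p == 1)
    tn = sum(1 for t, p in pairs if t == 0 and p == 0)
    fp = sum(1 for t, p in pairs if t == 0 and p == 1)
    fn = sum(1 for t, p in pairs if t == 1 and p == 0)
    return {
        "accuracy": (tp + tn) / len(pairs),
        # Of everything predicted positive, how much was actually positive?
        "precision": tp / (tp + fp) if tp + fp else 0.0,
        # Of all actual positives, how many did the model catch?
        "recall": tp / (tp + fn) if tp + fn else 0.0,
    }

y_true = [1, 0, 1, 1, 0, 0, 1, 0]
y_pred = [1, 0, 0, 1, 1, 1, 1, 0]
print(classification_metrics(y_true, y_pred))
# {'accuracy': 0.625, 'precision': 0.6, 'recall': 0.75}
```

Precision and recall often pull in opposite directions, which is why evaluation usually reports both rather than accuracy alone.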

Model monitoring covers pretty much anything associated with running a model in production. These measurements must be taken continuously and checked closely across a wide range of health aspects. For example: What is the model’s response time, and is it responding fast enough? Are we seeing errors instead of valid predictions? Due to production limitations, in many cases we won’t be able to measure model performance directly, or only after a long delay, because ground-truth labels are unavailable or arrive late. For this reason, we also need to monitor the data quality of the system and watch for model drift, bias, or changes in the model’s decision-making.
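
Here is a minimal sketch of two such ongoing checks for a single time window: a service-health summary (95th-percentile latency and error rate) and the Population Stability Index (PSI), a common drift score that compares a feature's production distribution with a reference distribution from training. The function names, the equal-width binning, the smoothing constant, and the rule of thumb in the comments are assumptions for illustration, not a prescribed method.

```python
import math

def health_summary(responses):
    """responses: list of (latency_ms, succeeded) tuples for one time window."""
    latencies = sorted(ms for ms, _ in responses)
    rank = (95 * len(latencies) + 99) // 100  # nearest-rank 95th percentile
    errors = sum(1 for _, ok in responses if not ok)
    return {"p95_latency_ms": latencies[rank - 1], "error_rate": errors / len(responses)}

def psi(reference, current, bins=10, eps=1e-6):
    """Population Stability Index using equal-width bins over the reference range.

    PSI = sum over bins of (cur_share - ref_share) * ln(cur_share / ref_share).
    Common rule of thumb: < 0.1 stable, 0.1 to 0.25 moderate shift, > 0.25 major shift.
    """
    lo, hi = min(reference), max(reference)
    width = (hi - lo) / bins or 1.0  # guard against a constant reference feature

    def shares(values):
        counts = [0] * bins
        for v in values:
            idx = min(max(int((v - lo) / width), 0), bins - 1)  # clip into edge bins
            counts[idx] += 1
        # eps keeps empty bins from causing log(0) or division by zero
        return [max(c / len(values), eps) for c in counts]

    ref, cur = shares(reference), shares(current)
    return sum((c - r) * math.log(c / r) for r, c in zip(ref, cur))

# Hypothetical window: latencies 1..100 ms, every 10th request failed.
responses = [(ms, ms % 10 != 0) for ms in range(1, 101)]
print(health_summary(responses))  # {'p95_latency_ms': 95, 'error_rate': 0.1}

training_feature = [i / 100 for i in range(100)]
prod_same = list(training_feature)
prod_shifted = [0.5 + i / 200 for i in range(100)]  # production values moved upward
print(psi(training_feature, prod_same))            # 0.0
print(psi(training_feature, prod_shifted) > 0.25)  # True
```

Neither check needs ground-truth labels, which is what makes them usable while performance metrics are still unavailable.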

Single static environment vs. decentralized dynamic environment

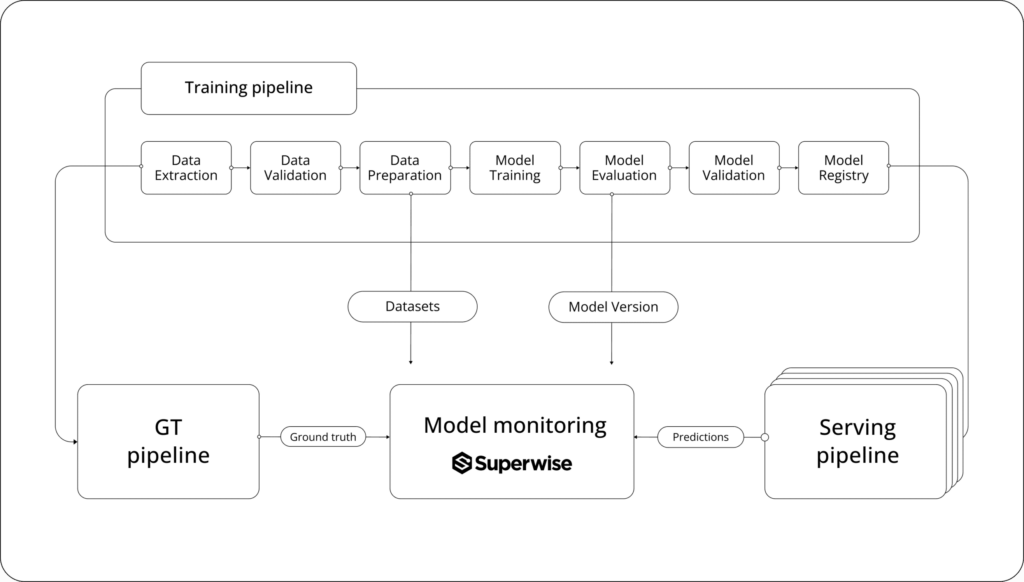

Another technical difference is that model evaluation is a single, self-contained process that uses a static historical dataset, while model monitoring needs to work in a distributed environment. From a data-source perspective, collecting the relevant data points for model monitoring is decentralized: many instances of the model-serving service may run in parallel, some processing streams and some processing batches. It is also decentralized from a pipeline perspective, since the required data points usually come from three different processes:

- Training – to collect baselines and reference artifacts.

- Serving – where model predictions are collected.

- Ground truth or label collection – where the final business outcome is collected.
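
The sketch below shows why these three streams have to be joined before some metrics can be computed: a baseline from training, predictions from serving, and labels that may arrive much later and only for some predictions. The `Prediction` record, the join on a `prediction_id` key, and the snapshot fields are hypothetical choices for illustration.

```python
from dataclasses import dataclass

@dataclass
class Prediction:
    prediction_id: str
    model_version: str
    prediction: int

def monitoring_snapshot(training_labels, predictions, labels):
    """Combine the three data sources into one monitoring view.

    training_labels: labels from the training set (the baseline)
    predictions:     Prediction records collected at serving time
    labels:          dict of prediction_id -> ground-truth outcome collected later
    """
    baseline_rate = sum(training_labels) / len(training_labels)
    serving_rate = sum(p.prediction for p in predictions) / len(predictions)
    # Only predictions whose outcome has already arrived can be scored.
    matched = [(p.prediction, labels[p.prediction_id])
               for p in predictions if p.prediction_id in labels]
    accuracy = (sum(int(pred == y) for pred, y in matched) / len(matched)
                if matched else None)
    return {
        "baseline_positive_rate": baseline_rate,
        "serving_positive_rate": serving_rate,
        "labeled_accuracy": accuracy,
        "awaiting_labels": len(predictions) - len(matched),
    }

snapshot = monitoring_snapshot(
    training_labels=[0, 1, 0, 0, 1],
    predictions=[Prediction("a", "v1", 1), Prediction("b", "v1", 0),
                 Prediction("c", "v1", 1), Prediction("d", "v1", 1)],
    labels={"a": 1, "b": 1},
)
print(snapshot)
# {'baseline_positive_rate': 0.4, 'serving_positive_rate': 0.75, 'labeled_accuracy': 0.5, 'awaiting_labels': 2}
```

Until the remaining labels arrive, the gap between the baseline and serving positive rates is one of the few available signals.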

Tools and practices

Given the points above, it’s clear that the technology stack used for model evaluation is very different from the stack required for model monitoring. Model evaluation is usually done on the data scientist’s local machine, where the training and test datasets are available as flat files (such as CSV or Parquet) and the code is written in plain Python or R, either in a standard IDE such as PyCharm or VS Code or in a notebook environment such as Jupyter Notebook or Google Colab. When the data is too big to fit into local memory, data scientists can easily spin up a machine or notebook environment in the cloud using services such as Google Vertex AI or Amazon SageMaker.
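
For example, evaluating a model locally can be as simple as reading a flat file of test-set predictions. The sketch below assumes a hypothetical CSV with `label` and `prediction` columns and uses only Python's standard `csv` module; for larger files, a library such as pandas would be the more common choice.

```python
import csv
import io

def evaluate_predictions_csv(file_obj):
    """Accuracy from a CSV with (hypothetical) 'label' and 'prediction' columns."""
    rows = list(csv.DictReader(file_obj))
    correct = sum(row["label"] == row["prediction"] for row in rows)
    return {"rows": len(rows), "accuracy": correct / len(rows)}

# With a real file (hypothetical name):
#   with open("test_predictions.csv", newline="") as f:
#       print(evaluate_predictions_csv(f))
sample = io.StringIO("id,label,prediction\n1,1,1\n2,0,1\n3,0,0\n4,1,1\n")
print(evaluate_predictions_csv(sample))  # {'rows': 4, 'accuracy': 0.75}
```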

The distributed nature of model monitoring, and the fact that it requires time-series analysis over large data volumes, mean it can’t be done on a local machine. Model monitoring implementations usually require a logger, SDK, or agent to log and persist all ongoing predictions and incoming labels. You’ll also need a processing engine to compute the relevant monitoring metrics (such as drift, performance, bias, and data quality) over large datasets, and a visualization layer to display those metrics and build dashboards. This can be implemented with an open-source stack, for example Python for logging, Spark as the compute engine, Prometheus as the time-series database, and Grafana as the visualization layer. Alternatively, it can be built on existing APM tools such as Datadog or New Relic, or with a tool dedicated to the job, such as Superwise.
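
At its simplest, the logging piece of such a stack could look like the sketch below: every prediction and every later-arriving label is appended as a JSON line tagged with a shared `prediction_id`, so a processing engine can join and aggregate them afterward. The `PredictionLogger` class, its record schema, and the local file target are hypothetical; a production setup would typically ship these records through an agent, queue, or monitoring SDK rather than to a local file.

```python
import json
import time
import uuid

class PredictionLogger:
    """Hypothetical logger that appends predictions and labels as JSON lines."""

    def __init__(self, path, model_version):
        self.path = path
        self.model_version = model_version

    def _append(self, record):
        with open(self.path, "a", encoding="utf-8") as f:
            f.write(json.dumps(record) + "\n")

    def log_prediction(self, features, prediction):
        prediction_id = str(uuid.uuid4())
        self._append({
            "type": "prediction",
            "prediction_id": prediction_id,
            "model_version": self.model_version,
            "timestamp": time.time(),
            "features": features,
            "prediction": prediction,
        })
        return prediction_id  # returned so the label can be linked later

    def log_label(self, prediction_id, label):
        self._append({
            "type": "label",
            "prediction_id": prediction_id,
            "timestamp": time.time(),
            "label": label,
        })
```

At serving time this would be used along the lines of `pid = logger.log_prediction({"age": 34}, prediction=1)`, followed later by `logger.log_label(pid, label=0)` once the business outcome is known.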

Summary

Model evaluation and model monitoring are two very different tasks that warrant different tools and capabilities. Although there is some overlap in the metrics used for each task, the way the results are interpreted can differ drastically, to the point where substituting model evaluation tools for model monitoring can leave you with a dangerously naive understanding of your model’s behavior in production.

Getting the most out of your ML models requires thorough evaluation in the lab cycle and monitoring throughout the lifetime of the model. To get evaluation right, data scientists can follow well-known best practices. Monitoring in production, however, is more complex and has to be built on a system that provides reliable, robust monitoring and alerting for many kinds of issues. Fortunately, Superwise already has this covered with a community edition (3 free models!). Head over to the Superwise platform and get started.
