As more companies wade into the AI waters and begin taking the first steps to operationalize models, they reach the point where they need to do machine learning at scale. This means scaling up your model operations. And it’s what MLOps is all about. But how do you take the first steps in this direction? Like any burgeoning market, the concepts are still a bit fuzzy. Plenty of solutions are starting to appear, but you probably don’t yet have clear implementation references or a solid understanding of how to build your MLOps roadmap.

In this blog, we examine why teams struggle to take their first steps in the MLOps world. We’ll also offer some practical advice on the basic steps to get started in a way that makes sense now and into the future.

The MLOps discord

The ML ecosystem is flourishing, expanding, and changing all at the same time. Relatively speaking, the ecosystem is still young, and it gets younger the further right you shift on the MLOps lifecycle. There’s a good reason for this. It is only in the past few years that we’ve begun to see mainstream adoption and scaling of ML beyond enterprises. As the ecosystem tackles more and more operational and production challenges, new categories like feature stores and model observability are being built from the ground up. This constantly moving ‘goal’ is why there is still so much confusion when it comes to MLOps roadmaps, what to cover in your implementation plan, the stakeholders you need to serve, the tools that belong to each part of the lifecycle, and the decisions to be made.

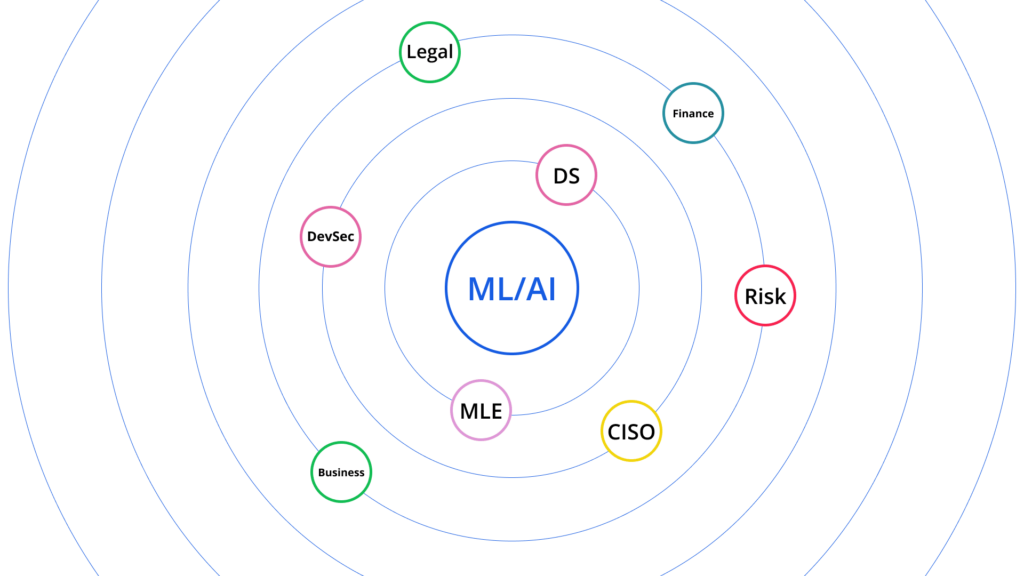

Stack of stakeholders

Powering real-world operations with ML will impact different people in your organization: from the tech team responsible for building operationalized ML to the business team monitoring the company’s health, all the way down to the security team that cares about everything concerning the company’s assets, especially data and IP. These stakeholders will have different interaction points with your MLOps software. Your users, machine learning engineers, and data scientists will be the most apparent stakeholders. But depending on your organizational makeup and the centrality of your ML use case, there are many other interested parties, such as business operations, CISO and DevSec, risk and compliance, and finance. The decisions made regarding your MLOps roadmap must be balanced to incorporate these stakeholders’ requirements and needs. For example, specific tools or strategies will not be an option if new security policies don’t allow you to move the data outside the premises. If you’ve already committed to such a service, you’ll end up maintaining the tooling in-house, possibly spending hours that would be better devoted to other tasks and racking up technical debt.

Lack of communication

ML generally starts with business requirements translated into a data science problem. The actualization of a successful ML model involves different areas of expertise, including data analysts, data scientists, researchers, data engineers, backend developers, and more. It is the business and business domain experts that rely on ML to predict behavior accurately. These different experts speak different languages. A data scientist will measure success in F1 score or accuracy, a risk officer will look at bias rate, and business users will track conversion rates and business KPIs. These different measures of success not only make it hard to agree on what success means but may also lead to blurred ownership of ML. Who calls the shots, and how will all this work be coordinated? Once a model is in production, who is responsible for the health of the business process?
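To make the "different languages" point concrete, here is a minimal Python sketch that scores the same set of predictions three ways. The labels, predictions, and group assignments are made-up illustration data, and the "bias rate" is assumed here to be the gap in positive-prediction rate between two groups, which is only one of several possible definitions.

```python
def confusion_counts(y_true, y_pred):
    """Return (tp, fp, fn, tn) for binary labels encoded as 0/1."""
    pairs = list(zip(y_true, y_pred))
    tp = sum(1 for t, p in pairs if t == 1 and p == 1)
    fp = sum(1 for t, p in pairs if t == 0 and p == 1)
    fn = sum(1 for t, p in pairs if t == 1 and p == 0)
    tn = sum(1 for t, p in pairs if t == 0 and p == 0)
    return tp, fp, fn, tn


def accuracy(y_true, y_pred):
    """Share of predictions that match the label."""
    tp, fp, fn, tn = confusion_counts(y_true, y_pred)
    return (tp + tn) / len(y_true)


def f1_score(y_true, y_pred):
    """Harmonic mean of precision and recall for the positive class."""
    tp, fp, fn, _ = confusion_counts(y_true, y_pred)
    denom = 2 * tp + fp + fn
    return 2 * tp / denom if denom else 0.0


def positive_rate_gap(y_pred, groups):
    """Assumed 'bias rate': spread in positive-prediction rate across groups."""
    rates = {}
    for g in set(groups):
        preds = [p for p, grp in zip(y_pred, groups) if grp == g]
        rates[g] = sum(preds) / len(preds)
    return max(rates.values()) - min(rates.values())


# Hypothetical, imbalanced data: 2 positives out of 10 cases.
y_true = [1, 1, 0, 0, 0, 0, 0, 0, 0, 0]
y_pred = [1, 0, 0, 0, 0, 0, 0, 0, 0, 0]
groups = ["a"] * 5 + ["b"] * 5

acc = accuracy(y_true, y_pred)
f1 = f1_score(y_true, y_pred)
gap = positive_rate_gap(y_pred, groups)
print(f"accuracy={acc:.2f}  f1={f1:.2f}  positive-rate gap={gap:.2f}")
```

On this toy data the model looks strong on accuracy and much weaker on F1, while the risk officer sees a gap between groups. It is the same model seen through three different success measures.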

Continuously evolving

To scale up, you need tools that automate tasks, from tracking and comparing experiments during the research phase to monitoring your model in production. But as we mentioned earlier, the field of ML tools is still relatively new. ML software has a vast range of potential applications, so it is understandable that the supporting MLOps tools ecosystem is just as broad. These are early days: many of the tools overlap, and some have specific feature sets that are hard to find in others. What’s more, there are no standards yet.

Let’s compare ML tools to those from the software ecosystem. When it comes to software tools, it’s obvious what the term ‘monitoring’ means, what CI/CD will introduce to your lifecycle, and how to benchmark good performance. It’s a whole different ball game when it comes to MLOps. Most ML tools were built over the course of the last two to three years to help companies achieve machine learning at scale. We just didn’t need them before – but we do now.

Check out Chip Huyen’s breakdown of the ML tools landscape or Ori Cohen’s State of MLOps to see how deep the rabbit hole goes.

5 essential steps to scaling up your ML

Now that you understand what’s causing the confusion, here are some steps to help clear the fog.

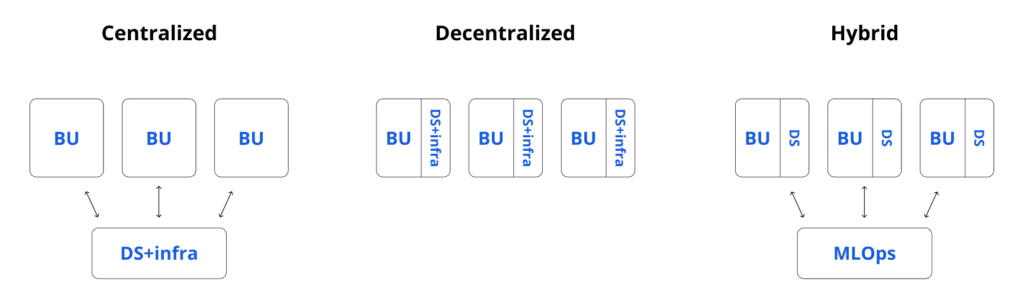

1. Decide on a strategy: centralized, decentralized, or hybrid.

Centralized – In this approach, one central team handles and manages all the models and reports to one head of data science. A central team of experts providing support to different functions has its pros and cons. Working together as a team, your data scientists will be able to brainstorm, collaborate, and ensure that no matter what strategy is decided on, they stick to it as they move forward and the business evolves. On the other hand, these experts will have a degree of separation from product managers, data experts, engineers, and business analysts who are using the models. And this will influence their perspective and priorities.

Decentralized – Models and the data scientists responsible for them are spread throughout the company. Any DS efforts are embedded within the business unit. Essentially, the models are maintained by different teams, depending on their business focus. This, too, has its advantages and disadvantages. Working as part of an integrated team means the data scientists are closer to the product and can tweak or tune the models with that knowledge in mind. On the other hand, a decentralized organization makes maintaining a consistent strategy for ML models more difficult. It also makes it harder to work on a shared infrastructure and ensure that uniform standards are maintained when measuring performance and aligning best practices.

Hybrid – Using a hybrid approach can offer the best of both worlds. In this scenario, the data scientists responsible for the models are part of the team that uses the model. The difference is that the company sets up a central MLOps task force to make decisions regarding the infrastructure tools and practices that everyone shares. This organization strategy works similarly to how DevOps operates in many larger companies.

2. Make a plan for your current and future needs

Before you start searching for ML tools or platforms, try to understand what strategy is planned for ML and AI within your organization. How is it likely to grow, what kind of use cases are planned, and at what scale? Then, speak to all the stakeholders involved to find out what they need.

Here are some practical questions to ask yourself:

- How many data scientists will your organization have?

Large teams of data scientists require much more emphasis on collaboration tools and reproducibility.

- How many models will you deploy to production, and at what pace?

Deploying models more frequently demands automation regarding model orchestration, new model delivery, and monitoring performance.

- How are the models integrated into your company’s products or services?

If the models are part of your core product, you’ll need more extensive monitoring alongside the ability to get real-time reports and alerts.

- What are your main use cases? Are they batch or stream?

- What types of data do you use? Is it structured, text, or images?

Inevitably, you will see that not all tools support all use cases equally. Every tool or platform will have its strengths and weaknesses in different areas. Your job is to determine which areas are more crucial to your business so you can plan accordingly.

3. Establish your MLOps blueprint and prioritize

There are many ways to accomplish MLOps, ranging from one generalized platform to a set of best-of-breed tools. Ask yourself which main components your ML architecture needs. Are you tuning models, conducting experiments, deploying, monitoring, tracking, or generating reports? Try to envision what level of support you need for each of these capabilities.

With the main components identified, you can determine the solution stack that will provide answers for those needs described in your plan. Then, prioritize the stack itself. For example, measuring your model’s performance might be the top priority, and once it is working successfully, you will need to monitor it and track for bias or drift.
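As an illustration of what "tracking for drift" can look like in practice, here is a minimal sketch of the population stability index (PSI), one commonly used drift statistic. The bin count, the smoothing constant `eps`, and the sample data are assumptions made for this example. It is not a description of how any particular monitoring tool works.

```python
import math


def psi(expected, actual, bins=10, eps=1e-6):
    """Population stability index between a baseline and a new sample.

    Bins are equal-width over the baseline's range; values outside that
    range are clamped into the first or last bin.
    """
    lo, hi = min(expected), max(expected)
    width = (hi - lo) / bins or 1.0  # avoid zero width for constant data

    def fractions(values):
        counts = [0] * bins
        for v in values:
            idx = int((v - lo) / width)
            counts[min(max(idx, 0), bins - 1)] += 1
        # eps keeps empty bins from producing log(0) or division by zero
        return [max(c / len(values), eps) for c in counts]

    e, a = fractions(expected), fractions(actual)
    return sum((ai - ei) * math.log(ai / ei) for ei, ai in zip(e, a))


# Hypothetical feature values: training baseline vs. two production samples.
baseline = [i / 100 for i in range(100)]
same = list(baseline)
shifted = [0.5 + i / 200 for i in range(100)]

print("no drift:", psi(baseline, same))
print("shifted: ", psi(baseline, shifted))
```

A rule of thumb often quoted by practitioners treats a PSI above roughly 0.25 as a significant shift, but the right threshold depends on your data and use case, so treat that number as a starting assumption.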

Keep in mind that focusing on a single tool or platform is like trying to hit a moving target. As ML technology matures and continues to evolve, these transformations will be reflected inside your company. Your company may have 10 models now, but a year down the road, you’ll scale and may have 100. What’s true today may not be relevant in the future. All we know for sure is that everything is scaling up, and AI regulations will introduce additional new challenges.

Another important step in determining your MLOps roadmap is to look at what others are doing to see what works and what doesn’t. The best way to accomplish this is by taking part in professional forums so you can get answers from people who have ‘been there, done that.’

In a previous post, Build or buy? Choosing the right strategy for your model observability, I noted how just a few short years ago, there was no option to buy ready-made tools to work with AI models in production. We didn’t think about whether it was worth the cost of buying them or if it was the right thing to do. We went ahead and built it. Happily, today, there are lots of amazing MLOps solutions we can get off the shelf. Still, people tend to think it will be easier to build or that only we understand the domain well enough to build a solution. And as engineers, it’s easy to get lured into building it yourself. The question remains: how do you know if it pays off to build or buy?

4. Build vs. Buy

Once you’ve got your MLOps plan, strategy, and blueprint in place, you should be able to narrow the list of tools down to three or four possible options. After examining these more closely, prepare a shortlist of the two best alternatives. A number of resources can help with this task, like those suggested in the HackerNoon blog on how to evaluate MLOps platforms, which offers two tools to help weed through the many choices available: a guide for evaluating MLOps platforms and an open-source evaluation tool.
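One simple way to get from three or four options down to two is a weighted scoring matrix. The sketch below is illustrative only: the tool names, criteria, weights, and 1-5 scores are all hypothetical placeholders that you would replace with the priorities from your own plan and blueprint.

```python
def rank_tools(scores, weights):
    """Rank candidates by weighted average score, best first."""
    total_weight = sum(weights.values())
    ranked = []
    for tool, tool_scores in scores.items():
        weighted = sum(weights[c] * tool_scores[c] for c in weights) / total_weight
        ranked.append((tool, round(weighted, 2)))
    return sorted(ranked, key=lambda item: item[1], reverse=True)


# Hypothetical criteria and weights (higher weight = more important to you).
weights = {"integration": 3, "monitoring": 3, "support": 2, "pricing": 2}

# Hypothetical 1-5 scores gathered from your own evaluation.
scores = {
    "tool_a": {"integration": 4, "monitoring": 5, "support": 3, "pricing": 2},
    "tool_b": {"integration": 5, "monitoring": 3, "support": 4, "pricing": 4},
    "tool_c": {"integration": 2, "monitoring": 4, "support": 5, "pricing": 3},
    "build_in_house": {"integration": 5, "monitoring": 2, "support": 2, "pricing": 3},
}

shortlist = rank_tools(scores, weights)[:2]
print(shortlist)
```

Including a "build in-house" row alongside the vendors keeps the build-or-buy question inside the same comparison instead of treating it as a separate debate.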

5. Test it out on a real use case

With your plan and blueprint in hand, and a list of the top tools that meet your needs, it’s time to test them out. It’s not enough to see a demo. You need to see the proof of concept in action on your data.

Examine each tool to see if it ‘plays nicely’ with your data. Check that integration can be done smoothly and that you understand the pricing model. And remember how important it is to work with a company that is responsive to your needs. Give your teams a chance to try the tools out, see how they work, and get a feel for connecting with the tool’s support team because you’ll be working together for a while. The integration of any tool into your MLOps landscape has to be smooth, even if it’s at the expense of some features.

- Examine the tool R&D roadmap.

- See the tool in action.

- Check the support team’s responsiveness.

- Take integration into account, both with your existing stack and with possible future integrations. Is the tool agnostic or locked into a specific vendor ecosystem?

- Get a transparent, simple pricing model.

As in any new field, the pricing models for MLOps tools are not always well-defined. They may be confusing because the tools themselves are changing as the scope of ML evolves. Just make sure you understand how the pricing model works now and as you scale so that you can make an informed judgment call.
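To see why it matters to check pricing both now and at scale, here is a small sketch comparing two invented pricing schemes at 10 and 100 models. The scheme names and every number in it are assumptions for illustration, not real vendor prices.

```python
# Hypothetical monthly pricing schemes, each a function of the model count.
PRICING_MODELS = {
    "per_model": lambda n: 150.0 * n,                     # pay per deployed model
    "base_plus_per_model": lambda n: 3000.0 + 20.0 * n,   # platform fee + small per-model fee
}


def monthly_costs(n_models):
    """Monthly cost of each pricing scheme for a given number of models."""
    return {name: fn(n_models) for name, fn in PRICING_MODELS.items()}


def cheapest(n_models):
    """Name of the scheme with the lowest monthly cost at this scale."""
    costs = monthly_costs(n_models)
    return min(costs, key=costs.get)


for n in (10, 100):
    print(n, "models:", monthly_costs(n), "-> cheapest:", cheapest(n))
```

With these made-up numbers, the scheme that is cheaper at 10 models becomes the more expensive one at 100, which is exactly the kind of flip you want to find before you sign.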

To sum it up

Plans rarely survive the first engagement. But it is the act of planning that enables us to make changes quickly and plan for flexibility down the road. You need to weigh your choices so that what’s right for you today won’t cost you dearly later on. Every software choice comes with technical debt, and this evaluation will help you scope it and take it into account on your MLOps roadmap.

That said, don’t over-architect your MLOps stack. Most of us are not Google, Netflix, or Uber, and we are prepared to take on debt because it lets us move fast. There is nothing wrong with selecting a one-stop-shop MLOps platform to work with as you get started or even as you scale. But as your business evolves, you may need customized and bespoke solutions. Before you set out on your journey, make sure that when that decision becomes right for your business, you have everything you need to make the change with a minimum of sunk cost and debt to pay down.

