Over the last few years, ML has steadily become a cornerstone of business operations, moving off the sidelines of after-hours projects and research to power the core business decisions that organizations depend on to succeed and grow. With this shift, the ML observability needs and challenges that organizations face are also evolving or, to put it more precisely, scaling horizontally.

In ML, scale is a multifaceted challenge: vertical scaling of predictions, horizontal scaling of models per customer, staggered models where the outputs of one model are the inputs of the next, and so forth. To address these advanced challenges, model observability and ML monitoring must go beyond a single-model approach and work at a higher level of abstraction: observability of models at the project level.

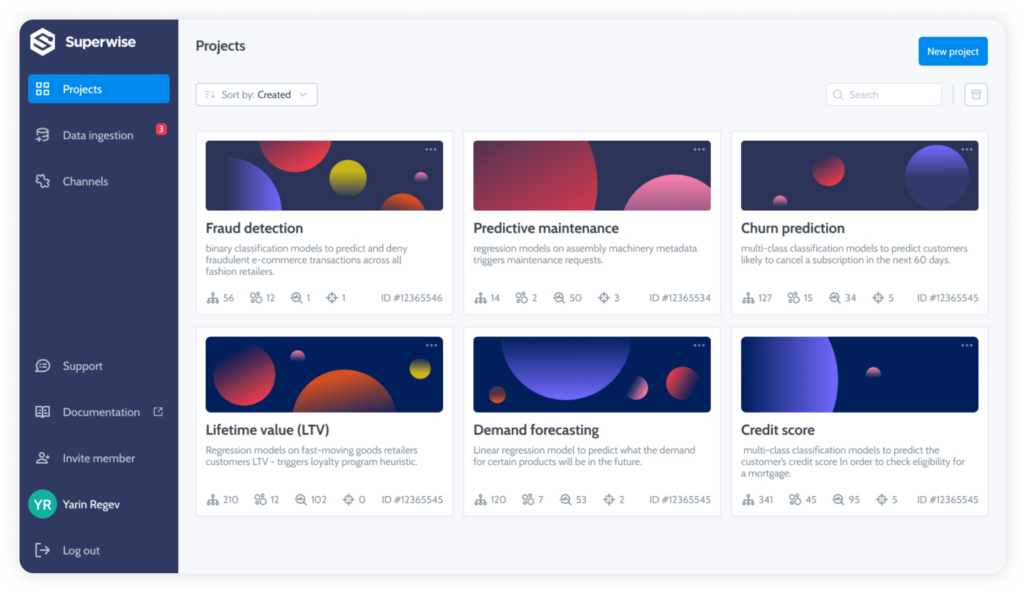

What are projects?

Projects let you group models based on a common denominator. You can group models around a shared identity, such as a customer or application, to get quick access and visibility into that group, or you can create a functional group around a machine learning use case to enable cross-model observability and monitoring.

Efficiency at scale

Within a functional project, configurations can be shared and managed collectively, so that schema, metrics, segments, and policies apply across the entire project. This significantly reduces your setup and maintenance time and helps you get to value faster.
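To make the idea of shared configuration concrete, here is a minimal sketch of a project that holds metrics and segments once and hands them to every model in the group. The `Project` class, its fields, and the model and metric names are hypothetical illustrations, not the Superwise SDK.

```python
from dataclasses import dataclass, field


@dataclass
class Project:
    """Hypothetical container for configuration shared by a group of models."""
    name: str
    metrics: list[str] = field(default_factory=list)
    segments: dict[str, str] = field(default_factory=dict)
    models: list[str] = field(default_factory=list)

    def add_model(self, model_name: str) -> None:
        # Adding a model requires no per-model metric or segment setup.
        self.models.append(model_name)

    def effective_config(self, model_name: str) -> dict:
        """Return the configuration a model inherits from the project."""
        if model_name not in self.models:
            raise KeyError(f"{model_name} is not part of project {self.name}")
        return {
            "model": model_name,
            "metrics": list(self.metrics),
            "segments": dict(self.segments),
        }


# Illustrative names only: one churn model per customer, configured once.
project = Project(
    name="churn-per-customer",
    metrics=["missing_values_ratio", "input_drift"],
    segments={"new_users": "tenure_days < 30"},
)
for customer in ("acme", "globex"):
    project.add_model(f"churn-{customer}")

config = project.effective_config("churn-globex")
```

Each new model picks up the same metrics and segments as soon as it joins the project, which is where the setup and maintenance savings come from.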

Metrics – In addition to all of Superwise’s out-of-the-box metrics, any custom metrics pertinent to your organization’s domain expertise and KPIs that you have built will be accessible throughout the project so you can quickly drill into behaviors on any entity level.

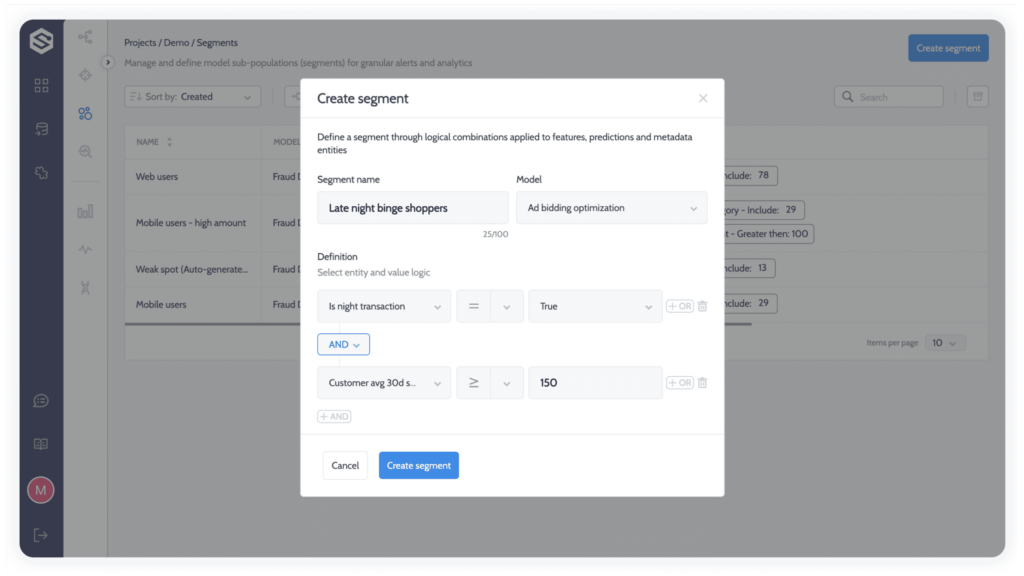

Segments – Segments, or sub-populations, can also be configured on the project level.

Policies – Don’t go and manually configure hundreds of policies and thresholds – turn to cross-project monitoring policies, with automatic threshold setup for each metric and entity based on time-series anomaly detection. You can build any custom policy that you need to support your use case.
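As a rough illustration of automatic threshold setup, the sketch below learns bounds for a metric from its own recent history instead of a hand-picked value. It assumes a simple rolling mean and standard deviation rule, which stands in for whatever time-series anomaly detection the platform actually uses; the function names and sample values are made up for the example.

```python
from statistics import mean, stdev


def auto_threshold(history, k=3.0):
    """Derive (lower, upper) bounds from a metric's recent history."""
    center = mean(history)
    spread = stdev(history)
    return center - k * spread, center + k * spread


def find_anomalies(series, window=7, k=3.0):
    """Flag indices whose value falls outside bounds learned from the previous `window` points."""
    anomalies = []
    for i in range(window, len(series)):
        lower, upper = auto_threshold(series[i - window:i], k)
        if not lower <= series[i] <= upper:
            anomalies.append(i)
    return anomalies


# Illustrative daily values of a missing-values ratio for one model.
daily_missing_ratio = [0.02, 0.03, 0.02, 0.025, 0.03, 0.02, 0.025, 0.03, 0.20, 0.025]
flagged = find_anomalies(daily_missing_ratio)  # the spike at index 8 is flagged
```

Because the bounds are computed per series, the same policy can be applied to every metric and entity in a project without setting thresholds by hand.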

Effectiveness at scale

Projects are much more than an accelerated path to efficiency in identifying micro-events, such as input drift on the model level. With projects, you can observe and monitor cross-pipeline macro-events like missing values, performance decay for a specific segment across all models, and so forth. This gives ML practitioners the ability to ask and answer questions like never before:

- How do I monitor a production pipeline with hundreds of models?

- Which anomalies are cross-pipeline issues? Which are bounded to a specific model?

- How do I integrate, monitor, and manage a group of models all at once?

- How do I identify systemic model failures and inefficiencies?

- How do I manage the reporting of events that occur in the pipeline? How do I quickly understand what went wrong?
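One way to approach the second question above is to count how many models in a project report the same anomaly on the same day. The sketch below is an assumption-laden illustration: the event tuples, the `min_share` cutoff, and the model names are hypothetical, and the logic is a plain grouping rule rather than Superwise's own method.

```python
from collections import defaultdict


def classify_anomalies(events, total_models, min_share=0.5):
    """Split anomalies into cross-pipeline and model-bound groups.

    events: iterable of (model_name, metric, day) tuples.
    An anomaly on (metric, day) counts as cross-pipeline when at least
    `min_share` of the project's models report it.
    """
    models_by_key = defaultdict(set)
    for model, metric, day in events:
        models_by_key[(metric, day)].add(model)

    cross_pipeline, model_bound = {}, {}
    for key, models in models_by_key.items():
        is_cross = len(models) / total_models >= min_share
        target = cross_pipeline if is_cross else model_bound
        target[key] = sorted(models)
    return cross_pipeline, model_bound


# Illustrative events from a project with four models.
events = [
    ("churn-acme", "missing_values", "2022-05-01"),
    ("churn-globex", "missing_values", "2022-05-01"),
    ("churn-initech", "missing_values", "2022-05-01"),
    ("churn-globex", "input_drift", "2022-05-02"),
]
cross, bound = classify_anomalies(events, total_models=4)
```

Here the missing-values event hits three of four models and is treated as a pipeline-level issue, while the input drift is bounded to a single model.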

Ready to get started with Superwise projects?

- Head over to the Superwise platform and get started with easy, customizable, scalable, and secure model observability for free with our community edition.

- Check out the projects documentation.

- Want to learn more about the one-model per-customer approach? Check out this on-demand webinar on multi-tenancy ML architectures!
