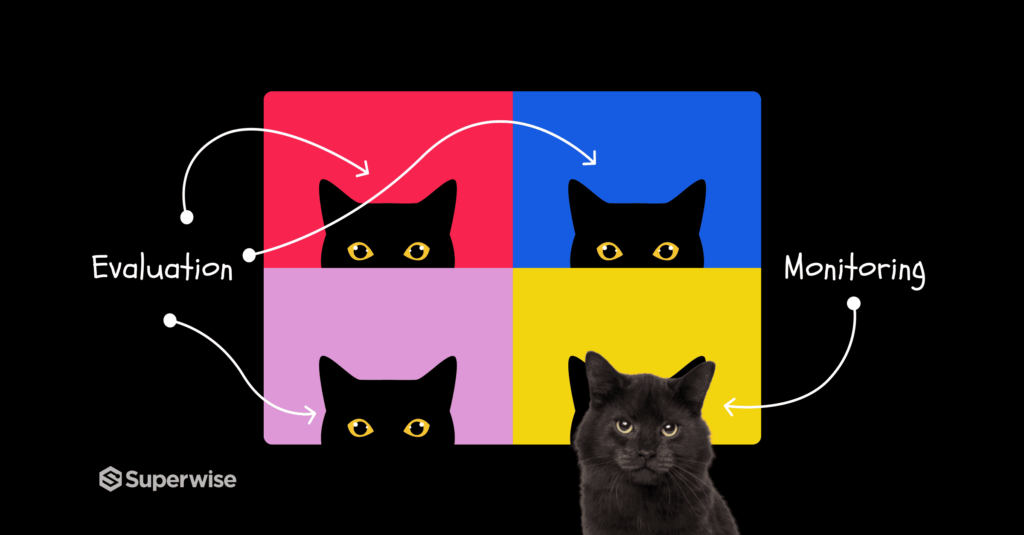

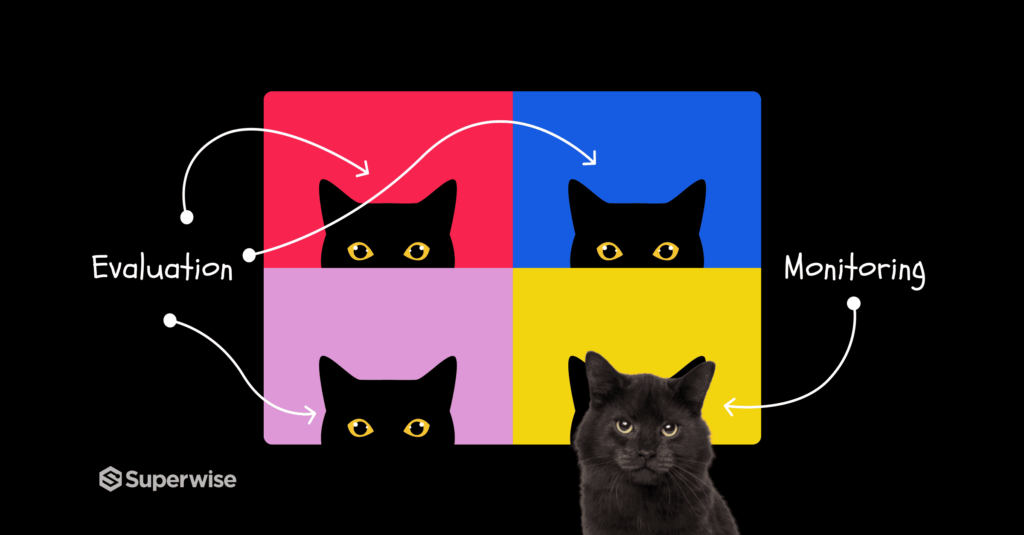


Data monitoring

From missing values to dataset completeness and population decay: everything you need to ensure that your models have the data they need to make their best predictions.

With data quality monitors, your team can quickly uncover data pipeline issues and detect when features, predictions, or actual data points don’t conform to expectations.
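As a rough illustration of what a data quality monitor checks, the sketch below computes a missing-value rate and an out-of-range rate for one feature in a batch. The function names, the sample batch, and the 0 to 120 range for `age` are all hypothetical and chosen only for this example; they are not part of any product API.

```python
from typing import Any, Mapping, Sequence


def missing_rate(rows: Sequence[Mapping[str, Any]], feature: str) -> float:
    """Fraction of rows where `feature` is absent or None."""
    if not rows:
        return 0.0
    missing = sum(1 for row in rows if row.get(feature) is None)
    return missing / len(rows)


def out_of_range_rate(
    rows: Sequence[Mapping[str, Any]], feature: str, low: float, high: float
) -> float:
    """Fraction of non-missing values of `feature` outside [low, high]."""
    values = [row[feature] for row in rows if row.get(feature) is not None]
    if not values:
        return 0.0
    bad = sum(1 for value in values if not (low <= value <= high))
    return bad / len(values)


# Hypothetical inference batch: one null age, one implausible age,
# and one row where the income feature is absent.
batch = [
    {"age": 34, "income": 52000.0},
    {"age": None, "income": 61000.0},
    {"age": 212, "income": 48000.0},
    {"age": 45},
]

print(missing_rate(batch, "age"))               # share of rows with no age
print(out_of_range_rate(batch, "age", 0, 120))  # share of ages outside 0..120
```

In practice, a monitor would compare rates like these against a baseline or a threshold and raise an alert when they move outside the expected band.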

Easily secure your training-to-inference schema across datasets, data connectors, and streams to ensure consistent tracking and visibility of your data and machine learning processes.
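One way to picture a training-to-inference schema check is a simple diff of feature names and observed types. The sketch below is a minimal version under that assumption; `infer_schema`, `schema_diff`, and the sample rows are hypothetical names and data introduced for illustration.

```python
from typing import Any, Dict, List, Mapping, Sequence, Set


def infer_schema(rows: Sequence[Mapping[str, Any]]) -> Dict[str, Set[str]]:
    """Map each feature name to the set of Python type names observed."""
    schema: Dict[str, Set[str]] = {}
    for row in rows:
        for name, value in row.items():
            schema.setdefault(name, set()).add(type(value).__name__)
    return schema


def schema_diff(
    training: Dict[str, Set[str]], inference: Dict[str, Set[str]]
) -> Dict[str, Any]:
    """Report features that are missing, unexpected, or changed type."""
    shared: List[str] = sorted(set(training) & set(inference))
    return {
        "missing": sorted(set(training) - set(inference)),
        "unexpected": sorted(set(inference) - set(training)),
        "type_changes": {
            name: (sorted(training[name]), sorted(inference[name]))
            for name in shared
            if training[name] != inference[name]
        },
    }


# Hypothetical rows: at inference time `plan` is dropped, `region` appears,
# and `age` arrives as a string instead of an integer.
training_rows = [{"age": 34, "plan": "pro"}, {"age": 51, "plan": "free"}]
inference_rows = [{"age": "34", "region": "eu"}]

diff = schema_diff(infer_schema(training_rows), infer_schema(inference_rows))
print(diff)
```

Any non-empty entry in the diff is a candidate alert: a feature the model was trained on is absent, an unfamiliar feature has appeared, or a type has silently changed somewhere in the pipeline.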

Measure the activity levels of your ML models and their operational metrics to catch, in real time, variances that may be correlated with model issues and technical bugs.

Stay on top of population representation to achieve the best results for every sub-population your model serves, with monitors that identify growing, decaying, and under-performing segments.
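To make "growing" and "decaying" segments concrete, the sketch below compares each segment's share of traffic in a baseline window with its share in the current window. The function names, the `tolerance` of 0.05, and the sample data are assumptions made for this example. Identifying under-performing segments would additionally require per-segment performance metrics, which this sketch leaves out.

```python
from collections import Counter
from typing import Dict, Sequence


def segment_shares(labels: Sequence[str]) -> Dict[str, float]:
    """Share of the population that falls into each segment."""
    counts = Counter(labels)
    total = sum(counts.values())
    if total == 0:
        return {}
    return {segment: count / total for segment, count in counts.items()}


def classify_segments(
    baseline: Sequence[str], current: Sequence[str], tolerance: float = 0.05
) -> Dict[str, str]:
    """Label each segment by how its share moved between the two windows."""
    base = segment_shares(baseline)
    cur = segment_shares(current)
    status: Dict[str, str] = {}
    for segment in sorted(set(base) | set(cur)):
        delta = cur.get(segment, 0.0) - base.get(segment, 0.0)
        if delta > tolerance:
            status[segment] = "growing"
        elif delta < -tolerance:
            status[segment] = "decaying"
        else:
            status[segment] = "stable"
    return status


# Hypothetical device segments in a baseline window and a current window.
baseline = ["mobile"] * 50 + ["desktop"] * 40 + ["tablet"] * 10
current = ["mobile"] * 70 + ["desktop"] * 20 + ["tablet"] * 10

print(classify_segments(baseline, current))
```

The absolute-share threshold is the simplest possible rule; a relative change or a statistical test may be a better fit when segments are small.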


Featured resources

Model evaluation and model monitoring are not the same thing. They may sound similar, but they are fundamentally different. Let’s see how.

Drift in machine learning comes in many shapes and sizes. Although concept drift is the most widely discussed, data drift, also known as covariate shift, is the most frequent. This post covers the basics of understanding, measuring, and monitoring data drift in ML systems. Data drift occurs when the data your model is running on

Data leakage isn’t new. We’ve all heard about it. And, yes, it’s inevitable. But that’s exactly why we can’t afford to ignore it. If data leakage isn’t prevented early on, it ends up spilling over into production, where it’s not quite so easy to fix. Data leakage in machine learning is what we call it