Model observability may begin with metric visibility, but without proactive monitoring to detect issues, it's easy to get lost in a sea of metrics and dashboards. And because ML use cases vary so widely, each requiring its own metrics to track, getting started with actionable ML monitoring is challenging.

If you can’t see the forest for the trees, you have a serious problem.

Over the last few months, we have been collaborating with our customers and community edition users to create a first-of-its-kind model monitoring policy library covering common monitors across ML use cases and industries. With the policy library, users can quickly initialize increasingly complex policies, accelerating their time to value. It complements Superwise's existing self-service policy builder, which lets users tailor monitoring policies to their domain expertise and business logic.

The deceptively simple challenge of model monitoring

On the face of it, ML monitoring seems like a relatively straightforward task: alert me when X starts to misbehave. But once you take into account population segments, model temporality and seasonality, and the sheer number of features that need to be monitored per use case, the scale of the challenge becomes clear.

Superwise’s ML monitoring policy library

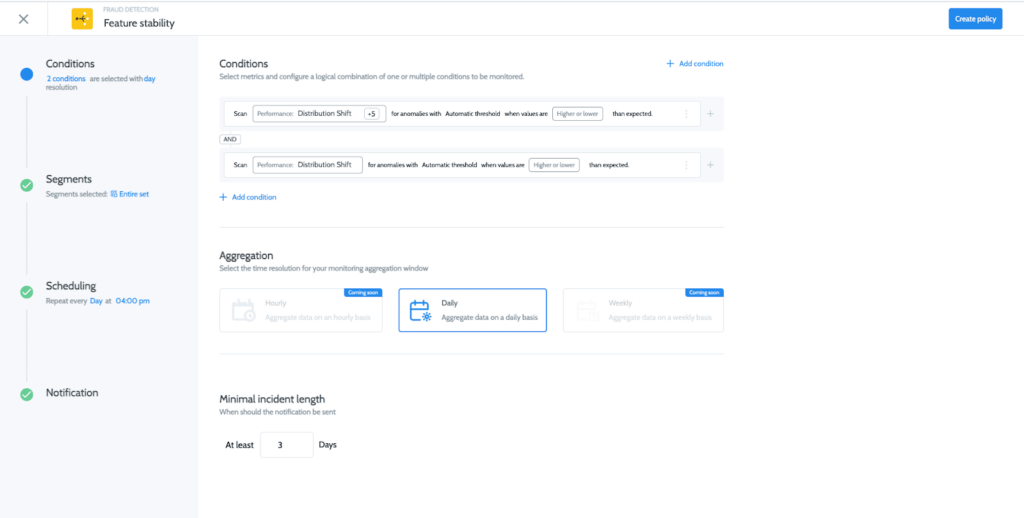

The key to developing our policy library was ensuring accurate and robust ML monitoring while letting users customize any policy in a few clicks. All policies come preconfigured, so you can hit the ground running with immediate, high-quality monitoring and customize it on the fly.

The monitoring policy library

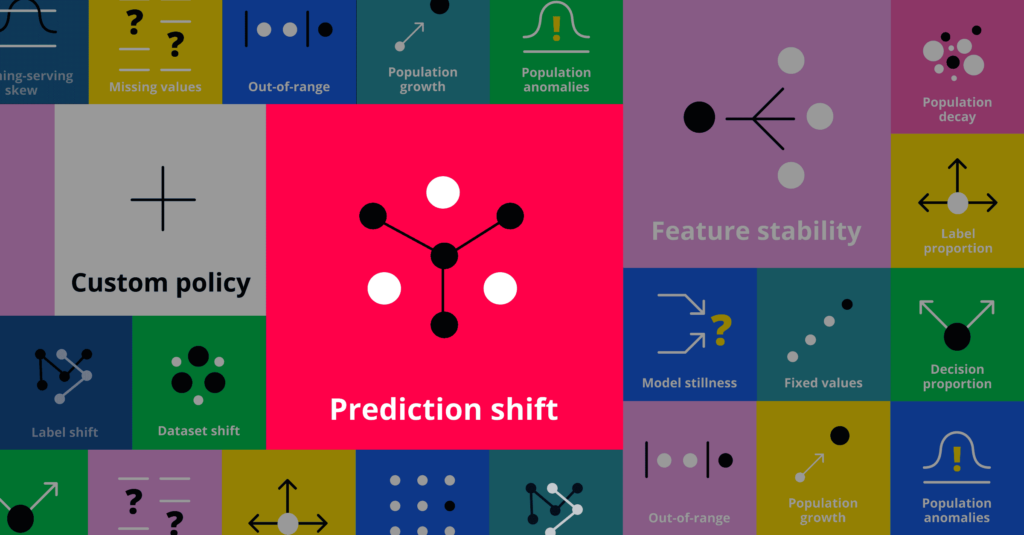

The policy library covers the typical monitoring use cases, from data drift to model performance, activity, and data quality.

Drift

The drift monitor measures how different the selected data distribution is from the baseline.
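
One common way to quantify how far a distribution has moved from its baseline is the Population Stability Index (PSI). The sketch below is illustrative only and is not necessarily the metric Superwise uses; the function name, the ten quantile bins, and the `eps` floor for empty bins are our own assumptions.

```python
import bisect
import math
import statistics


def population_stability_index(baseline, current, bins=10, eps=1e-6):
    """Compare the binned distribution of `current` against `baseline` (PSI).

    Hypothetical helper for illustration; not a Superwise API.
    """
    # bins - 1 cut points at baseline quantiles, so each baseline bin holds roughly equal mass.
    cuts = statistics.quantiles(baseline, n=bins)

    def fractions(values):
        counts = [0] * bins
        for v in values:
            # bisect_right maps each value to a bin index in [0, bins - 1].
            counts[bisect.bisect_right(cuts, v)] += 1
        # Floor empty bins at eps to avoid log(0) and division by zero.
        return [max(c / len(values), eps) for c in counts]

    base = fractions(baseline)
    curr = fractions(current)
    return sum((c - b) * math.log(c / b) for b, c in zip(base, curr))
```

PSI is 0 when the binned distributions match and grows as they diverge. Values above roughly 0.1 and 0.25 are often quoted as moderate and significant drift, though the right threshold depends on the use case.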

Model performance

The model performance monitor detects significant changes in the model's output and provides feedback relative to expected trends.
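
Assuming ground-truth labels eventually arrive for a batch of predictions, a minimal performance check might compare batch accuracy against an expected level. The function name, the choice of accuracy as the metric, and the 0.05 tolerance are illustrative assumptions; a real policy would pick a metric suited to the task.

```python
def performance_degraded(predictions, actuals, expected_accuracy, tolerance=0.05):
    """Return (is_degraded, accuracy) for one batch of labeled predictions.

    Hypothetical helper for illustration; not a Superwise API.
    """
    if not predictions or len(predictions) != len(actuals):
        raise ValueError("predictions and actuals must be non-empty and of equal length")
    accuracy = sum(p == a for p, a in zip(predictions, actuals)) / len(predictions)
    # Alert only when accuracy falls below the expected level by more than the tolerance.
    return accuracy < expected_accuracy - tolerance, accuracy
```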

Activity

The activity monitor measures your model's activity level and operational metrics, since variance in these often correlates with model issues and technical bugs.
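
As a rough illustration of activity monitoring, the sketch below flags a day whose prediction count deviates sharply from a trailing window, using a simple z-score. The function name, the 14-day window, and the 3.0 threshold are hypothetical choices, not Superwise defaults.

```python
import math
import statistics


def volume_anomaly(daily_counts, window=14, z_threshold=3.0):
    """Return (is_anomalous, z_score) for the latest day's prediction count.

    The latest count is compared against the mean and sample standard
    deviation of up to `window` preceding days. Hypothetical helper.
    """
    if len(daily_counts) < 3:
        raise ValueError("need at least two days of history plus the latest day")
    history = daily_counts[-(window + 1):-1]
    latest = daily_counts[-1]
    mean = statistics.fmean(history)
    stdev = statistics.stdev(history)
    if stdev == 0:
        # Perfectly flat history: any change at all counts as anomalous.
        z = 0.0 if latest == mean else math.inf
    else:
        z = (latest - mean) / stdev
    return abs(z) > z_threshold, z
```

A z-score is only one option; seasonal traffic (for example, weekday versus weekend) would usually call for comparing against the same period in previous cycles instead.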

Quality

Data quality monitors enable teams to quickly detect when features, predictions, or actual data points don’t conform to what is expected.
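
A minimal sketch of rule-based data quality checks, assuming a hypothetical per-field schema with `nullable`, `min`, `max`, and `allowed` rules. It illustrates flagging values that don't conform to expectations and is not Superwise's policy format.

```python
def check_record(record, schema):
    """Return a list of human-readable data quality violations for one record."""
    issues = []
    for field, rule in schema.items():
        value = record.get(field)
        if value is None:
            # Missing or null values are violations unless the field is nullable.
            if not rule.get("nullable", False):
                issues.append(f"{field}: missing value")
            continue
        if "min" in rule and value < rule["min"]:
            issues.append(f"{field}: {value!r} below minimum {rule['min']!r}")
        if "max" in rule and value > rule["max"]:
            issues.append(f"{field}: {value!r} above maximum {rule['max']!r}")
        if "allowed" in rule and value not in rule["allowed"]:
            issues.append(f"{field}: unexpected category {value!r}")
    return issues


# Hypothetical schema for illustration.
schema = {
    "age": {"min": 0, "max": 120},
    "country": {"allowed": {"US", "DE", "IL"}},
    "income": {"nullable": True, "min": 0},
}
print(check_record({"age": 150, "country": "FR"}, schema))
# ['age: 150 above maximum 120', "country: unexpected category 'FR'"]
```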

Custom

Superwise lets you build your own custom policies based on your model's existing metrics.

Any use case, any logic, any metric: fully customizable to what's important to you.

Read more in our documentation

Ready to get started with Superwise?

Head over to the Superwise platform and get started with easy, customizable, scalable, and secure model observability for free with our community edition.

Prefer a demo?

Request a demo and our team will show you what Superwise can do for your ML and business.