Unified AI Governance

Control AI in Real Time. Trust the Outcomes.

Real-time guardrails and policy enforcement that let your team deploy AI faster—with complete visibility and control.

Starter Edition: Free Forever, No Credit Card Required

Controlled, Private AI Chat: Early Access Available Now

The Challenge

AI Adoption Without Control Creates Risk

Organizations are deploying AI faster than they can govern it. Shadow AI usage, compliance failures, and uncontrolled AI behavior create real business risk—especially in regulated industries.

of enterprises have shadow AI

Uncontrolled AI usage outside IT oversight

average compliance failure cost

AI governance failures in regulated industries

of boards lack AI visibility

No unified view of AI usage across the organization

How Teams Start, Grow, and Scale

Start with /chat

Teams begin with SUPERWISE /chat—purchase via Stripe, then our team onboards and configures your environment within 24 hours. You get enterprise AI chat with guardrails from day one.

Customize & Integrate

Departments customize workflows and integrate SUPERWISE /chat with existing systems. The Platform provides the control plane for policy, guardrails, and integrations.

Govern & Scale

Enterprises govern AI usage at scale across teams and departments. Complete visibility, real-time guardrails, and audit trails ensure trust and control as AI adoption grows.

AI Governance that Actually Works

See violations before your users do. Add runtime safety to any agentic application in 5 minutes.

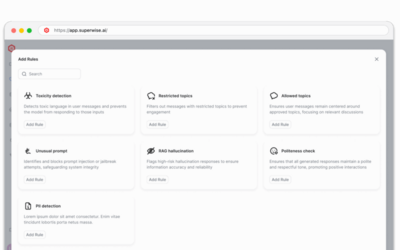

Guardrails

Runtime protection that stops harmful outputs before they reach users

Policies

Define what to govern, when to alert, and how to respond automatically

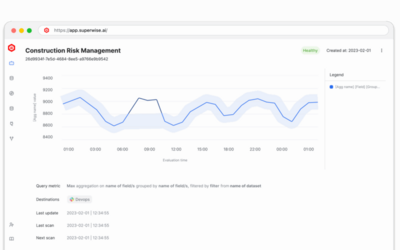

Observability

Complete visibility into your AI systems with real-time monitoring and insights

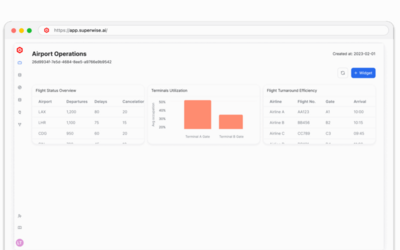

SUPERWISE /chat: The Governed Workspace

A ChatGPT-like experience your team already knows, with enterprise guardrails, policies, and audit trails built in. Chat executes. The platform governs.

Give your business team private, safe, compliant AI chat—without the enterprise tax or governance gaps. Every interaction is protected by real-time guardrails, complete audit trails, and policy enforcement.

Built-in Guardrails

PII detection, toxicity filtering, and jailbreak protection on every interaction.

Complete Audit Trail

Every interaction is attributable and reviewable for regulated workflows.

Business Assistant Included

A production-ready AI assistant is included in every tier and does not count toward Customizable Agents.

Model Flexibility

Use any LLM provider with consistent governance across all models.

SUPERWISE Platform

The System Behind the Experience

SUPERWISE Platform is the AI control plane, where authority, policy, and accountability live. Control and trust mean different things to different organizations.

Platform (Control Plane)

Unified governance across your entire AI environment. Track every model, agent, and decision with complete visibility.

Agent Studio

Design, deploy, and govern AI agents with visual workflows, automated testing, and production-grade controls.

Guardrails

Runtime protection that stops harmful outputs before they reach users. Policy evaluation in under 10ms.

Observability

Supporting signals that inform governance decisions. Know the operational state of your governed AI systems.

Policies

Define what to govern, when to alert, and how to respond. Your governance rules, enforced automatically.

Integrations

Connect to 50+ LLM providers, vector databases, and enterprise tools in minutes.

Control AI. Trust the Results.

Deploy AI with confidence. Complete visibility, real-time guardrails, and audit trails for every model and agent.

Free forever. No credit card required.