It’s reasonable to assume that, sooner or later, every machine learning model will be subject to bias, because the history and the people that models learn from are far from fair. Given this, it’s essential to understand where in the ML lifecycle bias can creep in and which methods can be applied to deal with it.

Posts in this series:

- Making sense of bias in machine learning

- A gentle introduction to ML fairness metrics

- Dealing with machine learning bias

Bias in the ML lifecycle

Bias is a pervasive issue in ML, and it can arise at various stages of the ML lifecycle. Even if you successfully mitigate bias in one phase of the lifecycle, that does not ensure that you will not encounter bias later. Unfortunately, there is no one-shot solution. Even if your data collection is the fairest of them all, you may find later, in production, that your training set did not represent the population well enough, leading the model to decide unfairly against minority classes. Businesses must continuously evaluate ML decision-making processes to mitigate bias that negatively affects their operations.

Measuring fairness

Selecting fairness metrics in ML can be difficult because many fairness criteria are available, each with its own strengths and weaknesses. The right choice depends on the problem’s specific context and the stakeholders’ goals. Here are some reasons why selecting fairness metrics in ML can be challenging:

- Trade-offs: Fairness metrics often involve trade-offs between competing objectives. For example, ensuring equal treatment across different groups may lead to lower overall accuracy or increased false positives/negatives. Determining which trade-offs are acceptable depends on the specific problem and the stakeholders’ goals.

- Contextual considerations: Fairness metrics need to be carefully chosen to suit the specific context of the problem. For example, in the context of criminal justice, the stakes are high, and the impact of biased predictions can be severe. In this context, ensuring equal treatment across different groups may be more important than maximizing overall accuracy.

- Data availability: Some fairness metrics require specific information about the protected attribute, which may not be available or may be difficult to collect.

- Multiple definitions: There are multiple definitions of fairness, and different definitions can lead to different fairness metrics. For example, one definition of fairness is to ensure that individuals with similar risk profiles are treated similarly. Another definition is to ensure that individuals from different groups are treated similarly. These different definitions can lead to different fairness metrics, making choosing the most appropriate one difficult.

To overcome these challenges, it is important to involve diverse stakeholders in selecting fairness metrics, carefully consider the context of the problem, and evaluate the impact of different fairness metrics on the model’s performance. Only then is it possible to choose metrics that strike an appropriate balance between competing objectives.
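To make the idea of a fairness metric concrete, here is a minimal sketch of two widely used group metrics: the demographic parity difference (gap in positive-prediction rates) and the equal opportunity difference (gap in true positive rates). The function names, the toy labels, and the group names `a` and `b` are illustrative assumptions, not part of any particular library.

```python
def selection_rate(y_pred, groups, group):
    """Share of positive predictions within one group."""
    preds = [p for p, g in zip(y_pred, groups) if g == group]
    return sum(preds) / len(preds)  # assumes the group is not empty


def true_positive_rate(y_true, y_pred, groups, group):
    """Share of actual positives in one group that were predicted positive."""
    preds = [p for t, p, g in zip(y_true, y_pred, groups) if g == group and t == 1]
    return sum(preds) / len(preds)  # assumes the group has at least one positive


def demographic_parity_difference(y_pred, groups, a, b):
    """Gap in positive-prediction rates between groups a and b."""
    return selection_rate(y_pred, groups, a) - selection_rate(y_pred, groups, b)


def equal_opportunity_difference(y_true, y_pred, groups, a, b):
    """Gap in true positive rates between groups a and b."""
    return (true_positive_rate(y_true, y_pred, groups, a)
            - true_positive_rate(y_true, y_pred, groups, b))


# Toy data (hypothetical): binary labels, binary predictions, two groups.
y_true = [1, 0, 1, 1, 0, 1, 0, 1]
y_pred = [1, 0, 1, 1, 0, 0, 0, 1]
groups = ["a", "a", "a", "a", "b", "b", "b", "b"]

dp_diff = demographic_parity_difference(y_pred, groups, "a", "b")
eo_diff = equal_opportunity_difference(y_true, y_pred, groups, "a", "b")
```

A value of zero means the two groups are treated identically under that metric. Which metric matters, and how large a gap is tolerable, remains a decision for the stakeholders.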

Debiasing ML

Debiasing ML refers to the process of mitigating or removing the effects of bias from the model or the data used to train the model. However, it is important to note that debiasing is an ongoing process and that new sources of bias may emerge as the model is deployed in new contexts or with new data. Therefore, continual monitoring and evaluation are necessary to ensure that the model remains unbiased and fair.

Non-bias through ignorance?

Removing protected attributes from data is a common approach to mitigate bias in ML. However, this approach does not always remove bias and can even introduce new forms of it, for several reasons:

- Correlated features: Even if the protected attribute is removed, other features in the data can be highly correlated with it and can serve as a proxy for the protected attribute. For example, if race is removed as a feature, the model may still use zip code as a proxy for race, as certain zip codes may be predominantly inhabited by certain racial groups.

- Historical bias: Removing protected attributes from data may not account for historical biases that have affected certain groups in the past. For example, if a bank has historically discriminated against people of a certain race, removing the race attribute from the data will not address this past bias.

- Discrimination by association: Removing protected attributes from data can also lead to discrimination by association, where individuals who are not part of the protected group but are associated with them are also subject to discrimination. For example, if a model is trained to approve loan applications based on a person’s job history, and a protected group is less likely to have stable job histories due to systemic discrimination, the model will penalize that group, and anyone else with a similar job history, even after the protected attribute is removed.

- Proxy attributes: Removing a protected attribute may not be enough if the model can still use other features that are proxies for the protected attribute. For example, if gender is removed as a feature, the model may still use the applicant’s name or title as a proxy for gender.

Furthermore, retaining protected and proxy attributes in data can help mitigate ML bias later in the lifecycle by enabling more targeted bias analysis and remediation. It is important to note that retaining protected and proxy attributes in data should be done in a way that respects privacy and ethical considerations. Protected and proxy attributes should be handled carefully to avoid perpetuating or exacerbating existing biases.
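One simple way to use a retained protected attribute is to screen the remaining features for proxies. The sketch below flags features whose Pearson correlation with a binary protected attribute exceeds a threshold. The feature names, the toy values, and the 0.7 threshold are assumptions for illustration. Correlation only catches linear, single-feature proxies, so treat this as a first pass rather than a complete check.

```python
from math import sqrt


def pearson(xs, ys):
    """Pearson correlation of two equal-length numeric lists."""
    n = len(xs)
    mean_x = sum(xs) / n
    mean_y = sum(ys) / n
    cov = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    var_x = sum((x - mean_x) ** 2 for x in xs)
    var_y = sum((y - mean_y) ** 2 for y in ys)
    return cov / sqrt(var_x * var_y)  # assumes neither list is constant


def find_proxies(features, protected, threshold=0.7):
    """Names of features whose |correlation| with `protected` meets the threshold."""
    return [name for name, values in features.items()
            if abs(pearson(values, protected)) >= threshold]


# Hypothetical toy data: 1 marks membership in the protected group.
protected = [1, 1, 1, 0, 0, 0]
features = {
    "zip_code_region": [1, 1, 1, 0, 0, 1],  # tracks the protected attribute
    "years_employed": [3, 7, 2, 3, 7, 2],   # unrelated to it
}

proxies = find_proxies(features, protected)
```

Features flagged this way are candidates for closer review, not automatic removal.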

Collect more data

Collecting more data is one of the most commonly suggested solutions to mitigate bias in ML. The idea is that with more data, the model will have a more representative sample of the population, which can reduce the impact of bias on the model’s predictions. However, collecting more data may not always be a viable or effective solution to address bias. Here are some points to consider:

- Data collection bias: Collecting more data may not necessarily mitigate bias, especially if the data collection process itself is biased. For example, if the data collection process disproportionately targets certain groups or excludes others, the resulting data will still be biased, even with more data.

- Inherent bias in the data: Collecting more data may not always address the underlying sources of bias in the data. If the data is inherently biased due to factors such as imbalanced data or missing data, collecting more data may not address these issues.

- Cost and time: Collecting more data can be costly and time-consuming, especially if the data collection process involves collecting data from diverse sources or populations. This can make collecting more data an impractical solution for many applications.

- Data quality: The quality of the additional data needs to be assessed to ensure it is fit for purpose. If its quality is poor or inconsistent, adding it may not improve the model’s performance.

Data augmentation

Data augmentation is a technique used to increase the size and diversity of the training dataset by generating synthetic samples. For example, if there is an under-representation of a particular group in the original dataset, data augmentation can be used to generate more samples of that group. This can be done by applying various transformations to the existing samples, such as flipping or rotating images, adding noise to audio recordings, or slightly modifying text.

By creating more samples of underrepresented groups, the resulting augmented dataset becomes more balanced and representative of the real-world population, reducing the likelihood of bias in the machine-learning model. Moreover, data augmentation can also help reduce overfitting by increasing the size and diversity of the training dataset, which can improve the model’s generalization performance on unseen data.

However, it’s important to note that data augmentation should be done with care to avoid creating synthetic samples that are too dissimilar from the original data, as this may introduce new forms of bias into the model.
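For tabular data, one basic form of augmentation is to add small random noise to copies of existing samples from the under-represented group. The sketch below does this with the standard library only. The function name, the row layout, and the `noise_std` default are assumptions. Keeping the noise small is one way to keep the synthetic samples close to the original data.

```python
import random


def augment_group(rows, group_key, group_value, n_new, noise_std=0.05, seed=0):
    """Return rows plus n_new noisy copies of samples from one group.

    Only float-valued features are perturbed; the group key, labels, and any
    other non-float fields are copied unchanged.
    """
    rng = random.Random(seed)  # seeded so the result is reproducible
    source = [row for row in rows if row[group_key] == group_value]
    if not source:
        raise ValueError("no samples found for the requested group")
    new_rows = []
    for _ in range(n_new):
        base = rng.choice(source)
        copy = dict(base)
        for key, value in base.items():
            if key != group_key and isinstance(value, float):
                copy[key] = value + rng.gauss(0.0, noise_std)
        new_rows.append(copy)
    return rows + new_rows


# Hypothetical toy dataset in which group "b" is under-represented.
rows = [
    {"group": "a", "income": 1.2, "label": 1},
    {"group": "a", "income": 0.8, "label": 0},
    {"group": "a", "income": 1.0, "label": 1},
    {"group": "b", "income": 0.9, "label": 1},
]
augmented = augment_group(rows, "group", "b", n_new=2)
```

Note that the new rows inherit the label of the sample they were copied from, so any labeling bias in the source samples is copied as well.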

Data balancing

Data balancing is a technique used to ensure that the number of samples in each class is roughly equal. This can help reduce bias in machine learning models by preventing the model from being biased toward the majority class and increasing the importance of the minority class. Data balancing can be achieved by oversampling the minority class or undersampling the majority class. Oversampling involves generating additional samples for the minority class, while undersampling involves reducing the number of samples in the majority class.

There are several techniques for oversampling and undersampling, including random oversampling/undersampling, SMOTE (Synthetic Minority Over-sampling Technique), and ADASYN (Adaptive Synthetic Sampling). Random oversampling duplicates existing minority samples and random undersampling discards majority samples, while SMOTE and ADASYN generate synthetic minority samples by interpolating between existing ones. In every case, the aim is a training set that stays representative of the original data distribution while avoiding overfitting or introducing new biases into the model.
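As an illustration, random oversampling, the simplest of these techniques, can be sketched in a few lines. The function name and the toy data are assumptions. SMOTE and ADASYN differ in that they create new interpolated points rather than exact duplicates.

```python
import random
from collections import Counter


def random_oversample(samples, labels, seed=0):
    """Duplicate randomly chosen samples until every class matches the largest one."""
    rng = random.Random(seed)  # seeded so the result is reproducible
    counts = Counter(labels)
    target = max(counts.values())
    out_samples, out_labels = list(samples), list(labels)
    for label, count in counts.items():
        pool = [s for s, l in zip(samples, labels) if l == label]
        for _ in range(target - count):
            out_samples.append(rng.choice(pool))
            out_labels.append(label)
    return out_samples, out_labels


# Hypothetical toy data: four samples of class 0, one of class 1.
samples = [[0.1], [0.2], [0.3], [0.4], [0.9]]
labels = [0, 0, 0, 0, 1]

balanced_samples, balanced_labels = random_oversample(samples, labels)
```

Because the duplicates are exact copies, oversample only the training split. Otherwise copies of the same sample can leak into validation or test data.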

Counterfactual data generation

Counterfactual data generation is a technique used to generate hypothetical data samples that represent how the data would have been if certain events or conditions had been different. This can help mitigate bias in machine learning models by creating more diverse and representative data and allowing for the exploration of alternative scenarios and decision-making processes.

In many real-world datasets, bias can arise due to confounding variables or external factors that are not directly observable or controllable. Counterfactual data generation involves creating hypothetical samples that represent how the data would have been if these variables had been different. This can be achieved through various techniques such as simulation, modeling, or inference.
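In its simplest form, a counterfactual sample is a copy of a record in which only one attribute is changed. The sketch below generates such copies and measures how often a model’s decision flips as a result. The helper names, the `toy_model`, and the data are hypothetical. This naive swap ignores any downstream effect the attribute has on other features, which the simulation or causal modeling approaches mentioned above would need to handle.

```python
def make_counterfactuals(rows, attribute, swap):
    """Copy each row with `attribute` replaced by its counterpart in `swap`."""
    return [{**row, attribute: swap[row[attribute]]} for row in rows]


def counterfactual_flip_rate(model, rows, attribute, swap):
    """Share of rows whose prediction changes when only `attribute` changes."""
    counterfactuals = make_counterfactuals(rows, attribute, swap)
    changed = sum(model(r) != model(c) for r, c in zip(rows, counterfactuals))
    return changed / len(rows)


def toy_model(row):
    """Hypothetical model that (undesirably) gives one gender a score bonus."""
    bonus = 5 if row["gender"] == "m" else 0
    return int(row["score"] + bonus >= 50)


rows = [
    {"gender": "m", "score": 47},
    {"gender": "f", "score": 47},
    {"gender": "m", "score": 60},
    {"gender": "f", "score": 30},
]
swap = {"m": "f", "f": "m"}

flip_rate = counterfactual_flip_rate(toy_model, rows, "gender", swap)
```

The generated counterfactual rows can also be added to the training set so the model sees both versions of each record.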

Adversarial debiasing

Adversarial debiasing involves training the model to predict the target variable while simultaneously counteracting bias related to a protected attribute. This is done by training two neural networks together: a predictor network that predicts the target variable and an adversary network that tries to infer the protected attribute from the predictor’s output. The predictor is trained to predict the target accurately while making the adversary’s task as hard as possible, so its predictions end up carrying as little information about the protected attribute as possible.

Active learning

Active learning works by iteratively selecting a subset of the most informative and uncertain data samples for annotation and incorporating them into the training dataset. The model is then retrained using the updated dataset, and the process is repeated until the desired level of accuracy or performance is achieved.

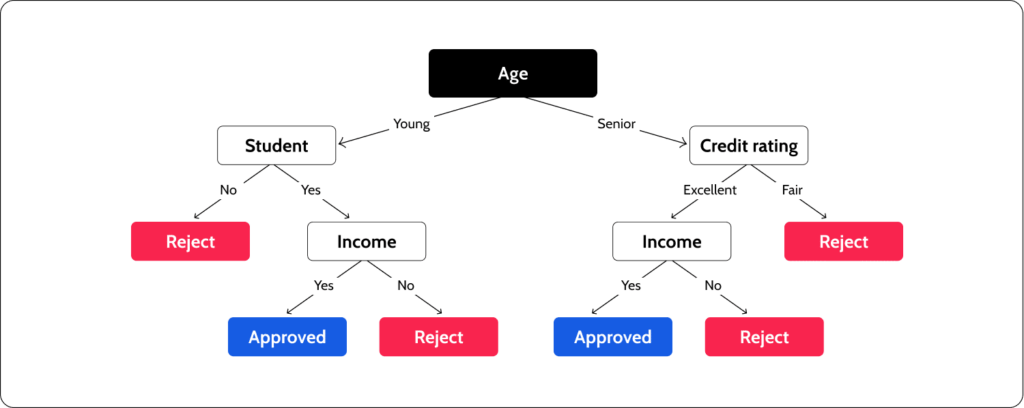
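The selection step at the heart of this loop can be sketched with uncertainty sampling, one common strategy: for a binary classifier, pick the unlabeled samples whose predicted probability is closest to 0.5. The function name and the example probabilities are assumptions.

```python
def select_most_uncertain(probabilities, k):
    """Indices of the k samples whose predicted probability is closest to 0.5."""
    ranked = sorted(range(len(probabilities)),
                    key=lambda i: abs(probabilities[i] - 0.5))
    return ranked[:k]


# Hypothetical model outputs for five unlabeled samples.
probs = [0.95, 0.52, 0.10, 0.45, 0.70]

to_annotate = select_most_uncertain(probs, k=2)
```

The selected samples are sent for annotation, added to the training set, and the model is retrained before the next round.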

Whitebox modeling

In many machine learning applications, bias can arise due to the opacity and complexity of the models, which can make it difficult to identify and correct any potential biases in the input data or algorithmic decisions. Whitebox modeling involves designing and implementing machine learning models that are transparent and interpretable and that allow for the inspection and validation of their internal mechanisms and decision-making processes.

Whitebox modeling can be achieved through various techniques such as decision trees, rule-based systems, linear models, or interpretable deep learning models. These models are designed to be simple, modular, and interpretable, and they allow for the identification and correction of any potential bias in the input data or algorithmic decisions.

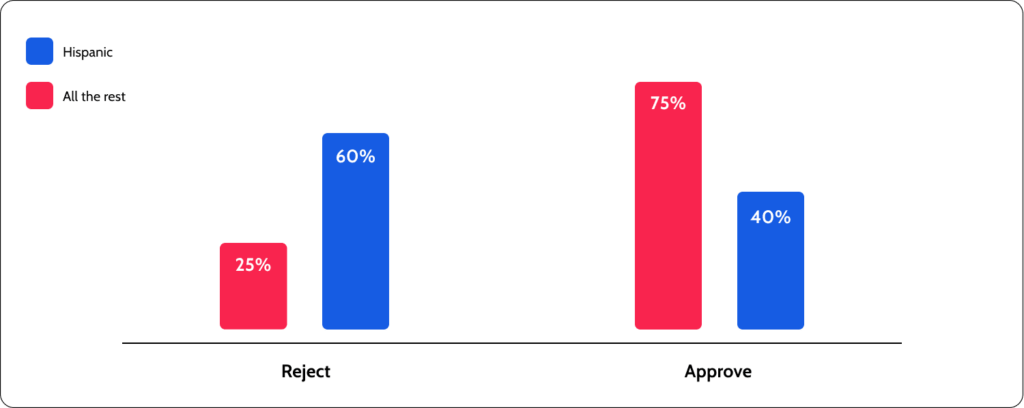
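A linear model shows why whitebox models are easy to inspect: each feature’s contribution to the score is just its weight times its value. The sketch below returns those contributions alongside the score. The weights and feature names are made up for illustration.

```python
def explain_linear_score(weights, bias, row):
    """Return the linear score and each feature's contribution to it."""
    contributions = {name: weights[name] * row[name] for name in weights}
    score = bias + sum(contributions.values())
    return score, contributions


# Hypothetical weights for a toy credit-scoring model.
weights = {"income": 0.5, "debt": -1.0}
bias = 0.25
applicant = {"income": 4.0, "debt": 1.0}

score, contributions = explain_linear_score(weights, bias, applicant)
```

If a feature known to act as a proxy for a protected attribute carries a large contribution, that is visible directly in the output and can be challenged.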

Reject option classification

Reject option classification modeling is a technique used to mitigate bias in machine learning models by allowing the machine learning model to abstain from making a prediction and instead return a rejection or uncertainty score for the data sample.

Reject option classification modeling works by modifying the machine learning algorithm to include a threshold or criterion for rejecting uncertain or ambiguous data samples. The algorithm is trained to minimize the error rate on the accepted data samples while maximizing the uncertainty or rejection rate on the rejected data samples. The rejection or uncertainty score can be used to indicate the confidence or reliability of the model’s prediction and can be used to guide further analysis or human intervention.
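A minimal sketch of the abstention rule, assuming a binary classifier that outputs a probability: predictions that fall within a margin around the decision threshold are rejected. The function name and the default `threshold` and `margin` values are assumptions that would need tuning per application.

```python
def predict_with_reject(probability, threshold=0.5, margin=0.1):
    """Return (decision, distance); decision is None when the model abstains.

    `distance` is how far the probability lies from the threshold and serves
    as a simple confidence score.
    """
    distance = abs(probability - threshold)
    if distance < margin:
        return None, distance  # too close to the boundary: abstain
    return int(probability >= threshold), distance


confident_yes, _ = predict_with_reject(0.9)
confident_no, _ = predict_with_reject(0.2)
abstained, score = predict_with_reject(0.55)
```

Rejected samples, such as the last one here, would typically be routed to further analysis or a human reviewer.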

Fairness constraints

Fairness constraints explicitly specify and enforce fairness requirements or criteria during the model training and evaluation processes. This incorporates fairness considerations into the model design and optimization process and ensures that the resulting machine-learning models are accurate, fair, and transparent.

Fairness constraints work by specifying a set of fairness criteria or requirements that the machine learning model must satisfy based on the particular context or domain of the application. These criteria may include statistical measures such as demographic parity, equal opportunity, or equalized odds, which aim to ensure that the model’s predictions are not systematically biased against certain subgroups of the population.

During the model training and evaluation process, the fairness constraints are integrated into the optimization objective or loss function, and the model is trained to minimize the prediction error while satisfying the fairness constraints. Additionally, the model is evaluated and validated using various fairness metrics and statistical tests to ensure that the model’s performance is consistent with the specified fairness requirements.
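One way to integrate a fairness criterion into the loss function is as a soft penalty: the usual prediction loss plus a weighted term that grows with the fairness gap. The sketch below combines log loss with a demographic parity gap. The function names, the `weight` parameter, and the toy data are assumptions, and a soft penalty only discourages violations rather than strictly enforcing a constraint.

```python
from math import log


def log_loss(y_true, y_prob, eps=1e-12):
    """Mean binary cross-entropy."""
    total = 0.0
    for t, p in zip(y_true, y_prob):
        p = min(max(p, eps), 1 - eps)  # clip to avoid log(0)
        total += -(t * log(p) + (1 - t) * log(1 - p))
    return total / len(y_true)


def parity_gap(y_prob, groups, a, b):
    """Absolute gap in mean predicted probability between groups a and b."""
    probs_a = [p for p, g in zip(y_prob, groups) if g == a]
    probs_b = [p for p, g in zip(y_prob, groups) if g == b]
    return abs(sum(probs_a) / len(probs_a) - sum(probs_b) / len(probs_b))


def fair_loss(y_true, y_prob, groups, a, b, weight=1.0):
    """Prediction loss plus a weighted demographic parity penalty."""
    return log_loss(y_true, y_prob) + weight * parity_gap(y_prob, groups, a, b)


# Hypothetical toy data.
y_true = [1, 1, 0, 0]
y_prob = [0.8, 0.6, 0.4, 0.2]
groups = ["a", "a", "b", "b"]

penalized = fair_loss(y_true, y_prob, groups, "a", "b", weight=2.0)
```

Raising `weight` pushes the optimizer toward smaller gaps at the cost of prediction error, which is the accuracy and fairness trade-off made explicit.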

Overruling

Overruling modeling works by integrating the machine learning algorithm with a human-in-the-loop decision-making mechanism, where the output of the algorithm is presented to a human expert for review and potential overruling. The human expert can provide feedback or correction to the algorithm’s decision based on their domain expertise, ethical considerations, or legal requirements. The human expert’s feedback can be used to update the machine learning algorithm or guide further analysis or intervention.
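The review step can be sketched as a small function that records whether the human expert overruled the model. The function and field names are hypothetical.

```python
def resolve_decision(model_decision, reviewer_decision=None):
    """Combine a model decision with an optional human review.

    The reviewer's decision, when given, always wins. `overruled` is True
    only when the reviewer disagreed with the model.
    """
    if reviewer_decision is None:
        return {"final": model_decision, "overruled": False}
    return {"final": reviewer_decision,
            "overruled": reviewer_decision != model_decision}


unreviewed = resolve_decision("reject")
confirmed = resolve_decision("reject", reviewer_decision="reject")
overruled = resolve_decision("reject", reviewer_decision="approve")
```

Records where `overruled` is true are the feedback that can be used to update the model or guide further analysis.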

Safety nets

Safety nets are fail-safe mechanisms that monitor model performance and compare it to a set of predefined criteria or benchmarks. If the model’s performance falls below the expected or acceptable range, the safety net triggers an alert or intervention, such as halting the model’s operation, notifying a human expert for review, or providing alternative options for decision-making.
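A minimal sketch of such a check: compare monitored metrics against predefined limits and choose an action. The metric names, limit values, and action labels are all assumptions for illustration.

```python
def find_breaches(metrics, limits):
    """Names of metrics that violate their limit.

    Each limit is a (kind, bound) pair: kind "min" means the value must not
    drop below the bound, "max" means it must not exceed it.
    """
    breaches = []
    for name, (kind, bound) in limits.items():
        value = metrics[name]
        if (kind == "min" and value < bound) or (kind == "max" and value > bound):
            breaches.append(name)
    return breaches


def decide_action(breaches):
    """Keep serving when healthy; otherwise hand decisions to a human."""
    return "serve" if not breaches else "notify_and_route_to_human"


# Hypothetical limits and a hypothetical production snapshot.
limits = {
    "accuracy": ("min", 0.85),
    "demographic_parity_gap": ("max", 0.10),
}
metrics = {"accuracy": 0.91, "demographic_parity_gap": 0.18}

breaches = find_breaches(metrics, limits)
action = decide_action(breaches)
```

Here accuracy is within its limit, but the fairness gap is not, so the safety net triggers even though the model still looks healthy on accuracy alone.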

Summary

Bias in ML is a big issue, one that persists across data, modeling, and production. Dealing with it is not trivial, but that does not mean it is unmanageable. At its core, managing and dealing with bias in ML requires business stakeholders to come together and evaluate how fairness applies to their business, regulatory requirements, governance, and customers. With these responsible AI guidelines in place, there is a clear path to selecting fairness metrics, implementing bias monitoring, and implementing debiasing methods.
