Data is the new oil, and just like oil, trading on it means meeting standards and regulations. As companies advance their MLOps and DataOps roadmaps, rely more heavily on ML and data systems to drive their businesses, and adopt more tooling for data processing, modeling, monitoring, and so forth, they face a very real challenge: keeping their data isolated from external vendors.

Companies have enough on their plates with adopting new practices, methodologies, and tools to speed up their ability to deliver and maintain high-quality data-driven products. So the burden of data isolation and privacy (rightfully) falls on the shoulders of the MLOps ecosystem. In this post, we’ll share some of the key lessons and tips we learned at Superwise as we made our model observability platform a portable application.

What is a portable app?

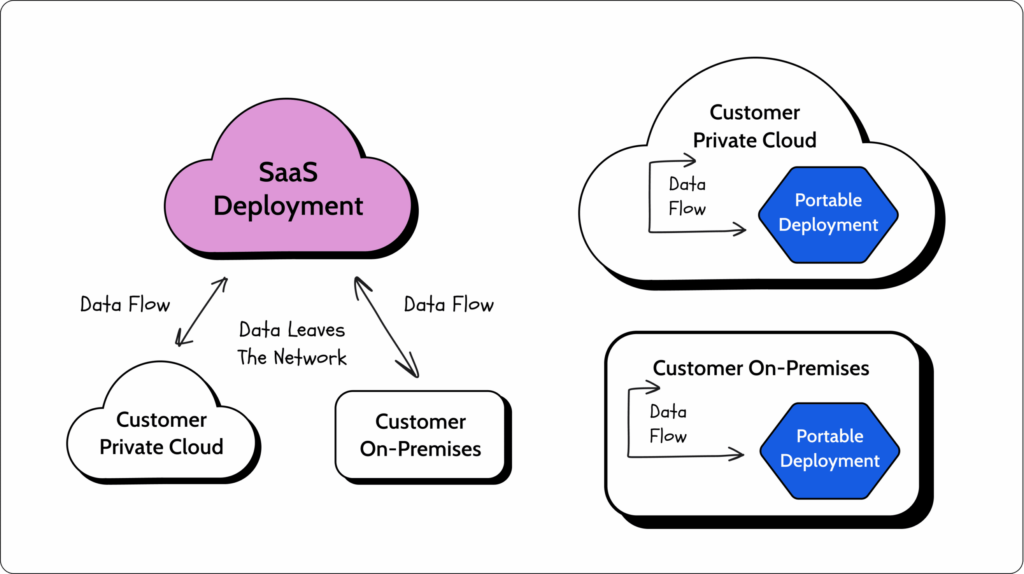

A portable app, or a standalone system, is a system designed to be easily deployed in different private clouds, inside a customer’s VPC (Virtual Private Cloud), and in on-premises environments without significant extra effort. In this type of setup, the data flow is contained within the customer’s in-house environment. This is the opposite of SaaS deployments, where the vendor owns and manages the environment.

SaaS is a typical and very convenient way to deploy applications. From the customer’s perspective, they get a fully managed solution where they don’t need to maintain anything (close to zero maintenance overhead on their side). From the vendor’s perspective, this means full control of the environment and the ability to manage it.

But there are use cases where the customer will need to keep their data isolated and secured inside their organizational network. This is very common in verticals such as banking and healthcare, which are highly regulated, or government institutions with demanding security standards.

In addition, there are use cases that leverage dedicated data infrastructure or hardware that require on-premise service deployments. In these cases, there are not necessarily privacy or security concerns but rather computing or network limitations such as those we see in edge devices and federated learning. This is typical for device monitoring in manufacturing, where devices are not continuously connected to cloud services.

The portable app approach enables us to easily deploy our app inside different customer environments, which will allow them to keep their data inside their organization without costing them or us considerable effort.

So your cloud or mine?

If your use cases or industries do not require private cloud or on-prem deployment, don’t do it. These types of installations come with constraints, so if they are not really needed, we recommend not going down that road. When we turn to on-prem or managed/unmanaged installations for customers, we enter their world of restrictions and their tech stack.

Customers could ask for architectural and tech stack changes to comply with their cost structure, security, and maintenance needs. Not to mention that each customer could ask for a different implementation. For instance, you may be asked to restrict the number of EC2 instances or k8s nodes to fit the customer’s budget, or to replace Apache Kafka with Azure Event Hubs because the customer’s engineers already work with it, making for easier maintenance on their part.

Another potential con of these installations is the support and maintenance required for each environment. Different deployments mean dedicated customer support and maintenance for each environment. We can’t simply hit a button and upgrade the customer to a newer version of our software, assuming that the instance is managed in the first place. Customers may have different versions of our app, so we’ll need to support multiple versions simultaneously. We’ll also need to schedule upgrades and maintenance, take into account downtime, and work with external stakeholders to ensure a seamless transition each time a change is deployed on a customer-by-customer basis.

Portability tips & tricks

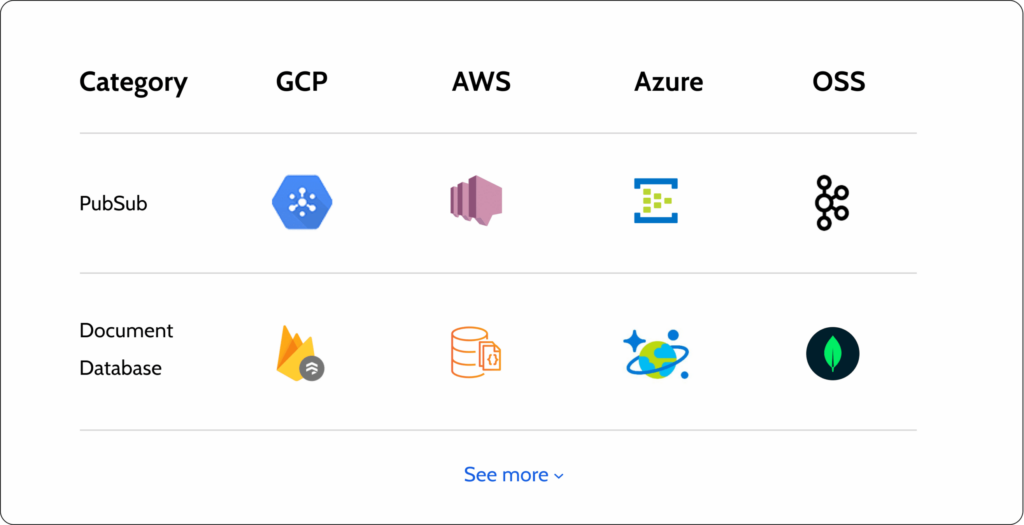

Proprietary cloud products can come in handy when implementing SaaS solutions, but they complicate things if we want to avoid vendor lock-in. Products such as Amazon SNS, Google Cloud Pub/Sub, and many more are proprietary to their own clouds and are not available from other cloud vendors, meaning you’d need to re-implement parts of the system to work with different proprietary products for each vendor. This results in considerable development, testing, and maintenance effort. More importantly, this isn’t an equal trade. We’re not just changing endpoints or using different APIs/SDKs. The products themselves (like Google BigQuery vs. Amazon Redshift) differ fundamentally in their core capabilities and features, leading to different solutions for each deployment.

A good practice in this scenario is to use standalone and/or open-source products, like Apache Kafka, PostgreSQL, Apache Druid, etc. Then the implementation of the system won’t change between environments, only the infrastructure underneath. An important caveat here: yes, we want to avoid technology lock-in, but we can definitely use a managed version of a product. For example, we can use Amazon RDS with a PostgreSQL engine.

There are also products that share the same API. In these cases, we can leverage dedicated cloud technology for speedy development and still swap in a different technology with an equivalent API for specific deployments. Apache Kafka and Azure Event Hubs, mentioned above, are one such pair, since Event Hubs exposes a Kafka-compatible endpoint.
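To illustrate what a shared API buys you, here is a minimal sketch of a configuration builder for a Kafka client that can target either a self-managed Kafka cluster or the Kafka-compatible endpoint of Azure Event Hubs. The function name and the `backend` values are hypothetical; the property names follow the librdkafka convention, and the Event Hubs settings (port 9093, `SASL_SSL`, `PLAIN`, and the literal `$ConnectionString` user name) follow Azure's documented Kafka endpoint setup. Security settings for the self-managed case are omitted for brevity.

```python
def kafka_client_config(backend, endpoint, connection_string=None):
    """Build librdkafka-style client settings for the chosen backend.

    backend: "kafka" for a self-managed cluster, "eventhubs" for the
    Kafka-compatible endpoint of Azure Event Hubs (hypothetical labels).
    """
    if backend == "kafka":
        # Self-managed cluster: the endpoint already includes host and port.
        return {"bootstrap.servers": endpoint}
    if backend == "eventhubs":
        if not connection_string:
            raise ValueError("Event Hubs requires a connection string")
        return {
            # Event Hubs serves the Kafka protocol on port 9093.
            "bootstrap.servers": f"{endpoint}:9093",
            "security.protocol": "SASL_SSL",
            "sasl.mechanism": "PLAIN",
            # Event Hubs expects this literal user name.
            "sasl.username": "$ConnectionString",
            "sasl.password": connection_string,
        }
    raise ValueError(f"Unknown backend: {backend!r}")
```

The producer and consumer code built on top of this configuration stays the same across both backends; only the settings differ per deployment.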

P.S. We found an excellent resource for AWS vs. OSS but didn’t come across one for GCP or Azure. If you know of one, give us a ping at moran.halevi@superwise.ai, and we’ll update the links here.

IaC

Infrastructure as Code (IaC) is a method of provisioning and maintaining the infrastructure (VMs, cloud resources, network, etc.) of software and IT systems as configuration code that can be stored in codebases and run with automations.

IaC tools like Terraform, Pulumi, and so forth are generally considered the way to go for maintaining environments. In the case of portable apps, it’s even more crucial to have an automated and generic way to deploy your system in different environments, as you’ll be doing it far more often. This ties back to the previous point: if the tech stack is not bound to a specific vendor, the IaC underneath can be much more generic and easier to maintain, which makes the overall system much more standalone.
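One way to keep IaC generic is to share a single set of modules and vary only the input variables per environment. The sketch below, which is an illustration rather than our actual tooling, renders a Terraform variables file as JSON from shared defaults plus per-customer overrides. The variable names (`node_count`, `postgres_version`, `enable_ha`) are hypothetical.

```python
import json

# Hypothetical defaults shared by every deployment of the app.
BASE_VARIABLES = {"node_count": 3, "postgres_version": "14", "enable_ha": False}


def render_tfvars(overrides):
    """Merge per-environment overrides into the shared defaults as tfvars JSON."""
    unknown = set(overrides) - set(BASE_VARIABLES)
    if unknown:
        # Fail early on typos instead of silently passing unused variables.
        raise KeyError(f"Unknown variables: {sorted(unknown)}")
    merged = {**BASE_VARIABLES, **overrides}
    return json.dumps(merged, indent=2, sort_keys=True)
```

Writing the result to a file ending in `.auto.tfvars.json` lets Terraform pick it up automatically, so each customer environment is described by a small overrides dictionary instead of a forked configuration.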

Another consideration in unmanaged and on-premises installations is that we’ll need to hand our IaC over to customers so they can deploy the app themselves. In this case, the IaC should be polished, well documented, and as simple and easy to use as possible.

Virtualization and Kubernetes

One of the best ways to make your system cloud-agnostic and standalone is to containerize your app and deploy it on Kubernetes using Helm. Containers provide a virtualization layer in which we can proactively declare the set of installations or dependencies that our app needs, bundled in our deployable app, without assuming anything regarding the designated infrastructure. A common use case here is that a service or application may need to assume the specific OS it will run on without knowing in advance on which hardware it will actually be executed. All the big cloud vendors have a managed k8s solution, like GKE and EKS, and apps can also be deployed on local k8s clusters inside on-prem environments. Then you can easily deploy it with Helm on any cluster on the customer side.
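As a rough sketch of what deploying the same chart on any cluster can look like, the helper below builds a `helm upgrade --install` command with per-customer values files. The release, chart, namespace, and file names are hypothetical; the flags used (`--install`, `--namespace`, `--create-namespace`, `--values`) are standard Helm options.

```python
import shlex


def helm_upgrade_command(release, chart, namespace, values_files=()):
    """Build an idempotent Helm command: install if missing, upgrade otherwise."""
    cmd = [
        "helm", "upgrade", "--install", release, chart,
        "--namespace", namespace, "--create-namespace",
    ]
    for path in values_files:
        # Later values files override earlier ones.
        cmd += ["--values", path]
    return cmd


def as_shell(cmd):
    """Render the command as a copy-pasteable shell line."""
    return shlex.join(cmd)
```

Passing the resulting list to `subprocess.run(cmd, check=True)` runs it against whichever cluster the current kubeconfig context points to, so the same call works for GKE, EKS, or an on-prem cluster.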

For deploying images in k8s, custom Python/Node.js/Java images are the recommended way to go. Other technologies should be evaluated before deploying them in k8s because not all of them have a stable and convenient k8s operator. That said, if there is a managed solution, evaluate it first. Why spend manpower and energy on maintaining tech deployed in your own cluster if you can avoid it?

For instance, until a few years ago, Apache Kafka didn’t have a standard best-practice operator for k8s (today, there are good operators, like Strimzi), so choosing other solutions like deploying it on VMs, or using a managed service was a safer choice.

Right abstractions in the code

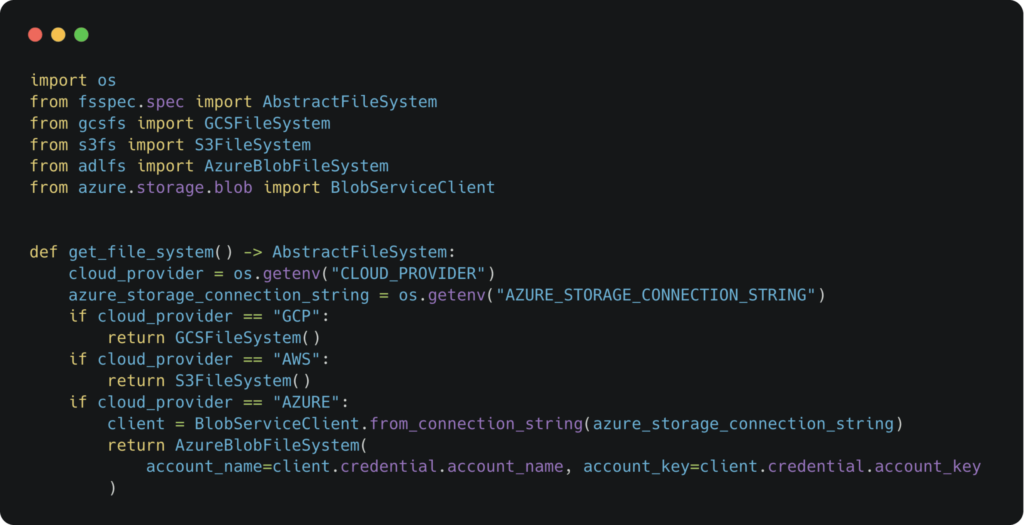

This leads us to the next point: how to implement your code internally in your system and SDKs. Dependency injection is a common way to apply the dependency inversion principle, the D in SOLID design. In this case, it’s super important because our code will be deployed in different environments, and we want it to be able to handle all of them. To do so, we recommend using dependency injection wherever you interact with vendor-specific logic and keeping the rest of the code as generic as possible.

In this Python code snippet for abstracting cloud storage away from the code, we use simple environment variables to identify which cloud vendor we are running on and then generate an authenticated object (in this case, GCP and AWS auth are automatically inferred from the environment) that exposes an abstract file system API for later use.

Example of object storage
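A minimal sketch of this kind of abstraction, assuming the fsspec library as the file system layer and a hypothetical `CLOUD_VENDOR` environment variable (neither is confirmed here as the exact implementation), might look like this. The `gs`, `s3`, and `abfs` protocols additionally require the gcsfs, s3fs, and adlfs packages, which pick up credentials from the environment by default.

```python
import os

# Hypothetical mapping from a vendor name to an fsspec protocol.
_PROTOCOLS = {"gcp": "gs", "aws": "s3", "azure": "abfs", "local": "file"}


def storage_protocol(env=None):
    """Resolve the storage protocol from the hypothetical CLOUD_VENDOR variable."""
    env = os.environ if env is None else env
    vendor = env.get("CLOUD_VENDOR", "local").lower()
    try:
        return _PROTOCOLS[vendor]
    except KeyError:
        raise ValueError(f"Unsupported cloud vendor: {vendor!r}") from None


def get_filesystem(env=None):
    """Return an fsspec file system object for the configured vendor."""
    # Imported lazily so the vendor resolution above has no third-party dependency.
    import fsspec

    return fsspec.filesystem(storage_protocol(env))
```

Callers only ever see the generic file system object, so adding a new vendor means adding one entry to the mapping instead of touching business logic.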

Then we use the function we implemented above to read a Parquet file into a pandas DataFrame without having to worry about cloud-specific authentication.
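As a hedged sketch of that second step, the reader below accepts any file system object that exposes `open(path, mode)`, such as one returned by fsspec, and hands the opened file to `pandas.read_parquet`. The function name, the injectable `parser` argument, and the `InMemoryFS` test double are hypothetical additions for illustration.

```python
import io


def read_parquet(fs, path, parser=None):
    """Read a Parquet object through any file system exposing open(path, mode)."""
    if parser is None:
        # Imported lazily; requires pandas plus a Parquet engine such as pyarrow.
        import pandas as pd

        parser = pd.read_parquet
    with fs.open(path, "rb") as handle:
        return parser(handle)


class InMemoryFS:
    """Tiny stand-in file system for unit tests (hypothetical helper)."""

    def __init__(self, blobs):
        self._blobs = blobs

    def open(self, path, mode="rb"):
        # Return a fresh in-memory stream holding the stored bytes.
        return io.BytesIO(self._blobs[path])
```

Because the file system is injected rather than constructed inside the function, the same reader runs unchanged against GCS, S3, or a fake file system in tests.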

However, reading a Parquet file into a pandas DataFrame is one specific use case. Keep in mind that object storage is not a file system, so this abstraction adds overhead to I/O operations and may not behave well for every production use case.

Authentication

Another concept that can vary from vendor to vendor is the authentication methods inside the customer’s VPC or on-premises, where the system is deployed.

A good practice is to use workload identity or default credentials methods (like in the example above), where credentials are usually inferred automatically from the environment the code runs in, without the need to maintain custom environment variables or add more authentication code. The idea here is to depend as little as possible on the authentication methods of the customer environment.

Superwise’s portable app journey

A new app requires flexibility, and as you progress, your understanding of your customers and market will change. It’s hard to get all the security and portability elements in place right from the start (at least not without sacrificing GTM velocity), but it is important to keep portability in the back of your mind as you move forward so that you accumulate less technical debt to pay off later.

There’s no one right path to portability, especially as you are getting started. With that said, this is the path that we at Superwise took:

Building our first MVP

We put a lot of thought into our tech stack selection to avoid vendor lock-in and enable easy scaling and management. While we were building the first architecture of the platform, we decided to be vendor agnostic from the start and build the platform using only open-source and standalone tools, like Apache Kafka, managed k8s (GKE), PostgreSQL, and more.

Automation and IaC

As our team expanded, building mature CI/CD processes became crucial. We needed the ability to deploy a specific version of our microservices app for debugging and development. Therefore, it was essential to have automation and IaC that let us spin a new environment up or down with the click of a button. Doing so increased our development flexibility and speed and baked in the ability to do customer-side deployments down the road.

SOC2 and full encryption

Onboarding more customers onto the platform triggered the need to strengthen our security and the maturity of our development processes. Therefore, we performed a SOC2 audit, added a centralized authentication service to enable SSO, and used Istio to encrypt communication on all our external and internal endpoints.

Quickstart on-prem deployment kits

Some of our customers, specifically banks and other financial institutions, required on-premises deployment as early as the POC stage. With that said, it was still a POC, and we didn’t want to overload their DevOps teams or over-engineer the deployment at that point in time. To cover this, we created lightweight POC kits where everything is installed within k8s.

High availability on-prem kits

In the end, we needed to develop an HA (High Availability) kit for production-ready installations, which required us to refactor certain areas in our system to fit generically with other cloud vendors and test it on the different vendors.

We mapped all the areas that were not generic and assumed a specific vendor, both at the infrastructure level, like Google Cloud’s Dataproc for running Spark jobs, and at the code level, where we used Google Cloud libraries and dedicated environment variables. We then evaluated different solutions that could fit better, like deploying a Spark operator on k8s or even using a self-hosted Databricks cluster as part of our standalone kit.
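For the code-level part of such a mapping, a small static scan can give a first inventory of vendor-specific imports. The sketch below is an illustration under assumed names, not the process we actually followed: it uses Python's standard `ast` module, and the `VENDOR_PREFIXES` list is a hypothetical starting point you would adapt to your own stack.

```python
import ast

# Hypothetical list of module prefixes that tie code to one cloud vendor.
VENDOR_PREFIXES = ("google.cloud", "boto3", "botocore", "azure")


def find_vendor_imports(source):
    """Return sorted (line, module) pairs for imports matching a vendor prefix."""
    hits = []
    for node in ast.walk(ast.parse(source)):
        if isinstance(node, ast.Import):
            modules = [alias.name for alias in node.names]
        elif isinstance(node, ast.ImportFrom) and node.module and node.level == 0:
            # Only absolute imports; relative imports refer to our own packages.
            modules = [node.module]
        else:
            continue
        for module in modules:
            if any(module == p or module.startswith(p + ".") for p in VENDOR_PREFIXES):
                hits.append((node.lineno, module))
    return sorted(hits)
```

Running this over every source file in a repository produces a list of places to wrap behind an abstraction. It does not catch vendor assumptions hidden in environment variables or infrastructure definitions, which still need a manual review.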

You can check out the kit here if you want to dive into the details.

Should your app be portable?

The most important thing you need to ask yourself is if your app needs to be portable in the first place or if SaaS is perfectly ok. Here are some of the questions you should be asking to piece that together:

- What type of ML company are you? Are you a vendor? A service provider? An applied AI company?

- With what data do you need to provide value? Is it the customer’s data? Is that data proprietary? Sensitive?

- Who are your customers? Are they regulated? On-prem by nature? Enterprise or startup?

If your answers point to yes on portability, then invest in it from the get-go. But if you don’t really need portability, then make your life easier and go SaaS.